Kubeflow Installation Guide

Components

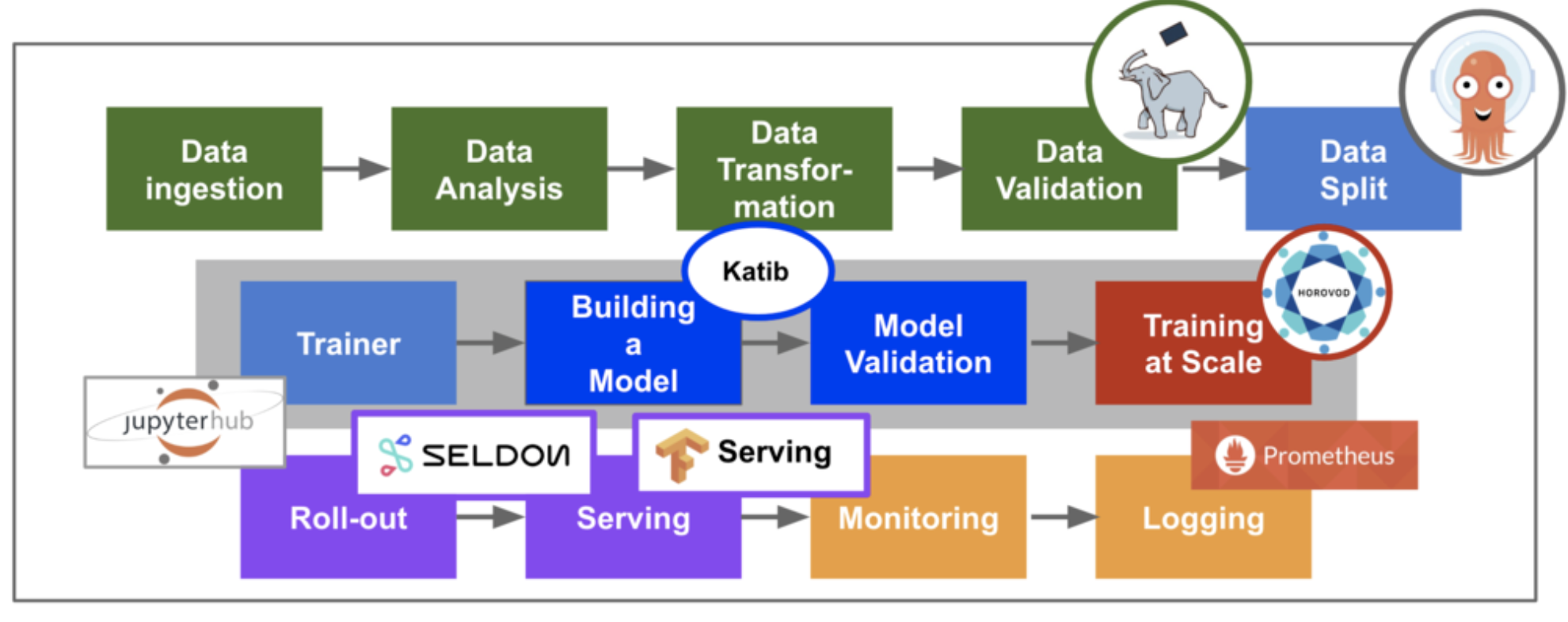

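Kubeflow use cases

You want to train TensorFlow models and serve them through a model API as application services on Kubernetes (e.g. local, on-prem, cloud).

You want to debug code in Jupyter notebooks, backed by a multi-user notebook server.

Your training jobs need CPU or GPU resources to be scheduled and orchestrated.

You want to combine TensorFlow with other components to publish services.

Dependencies

ksonnet 0.11.0 or later (download the release directly from GitHub and copy the ks binary to /usr/local/bin, e.g. with scp)

Kubernetes 1.8 or later (this guide uses CCE service nodes directly: create a CCE cluster with several nodes and bind an EIP to one of the nodes)

kubectl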
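Before continuing, it can help to confirm that the command-line dependencies listed above are reachable. The following is a minimal sketch, not part of the original guide; the helper name `missing_tools` is hypothetical, and it only checks that the `ks` and `kubectl` binaries are on PATH, not their versions.

```python
import shutil

# Binary names taken from the dependency list: "ks" is the ksonnet CLI.
REQUIRED_TOOLS = ("ks", "kubectl")


def missing_tools(tools=REQUIRED_TOOLS):
    """Return the entries of `tools` that cannot be found on PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]


if __name__ == "__main__":
    missing = missing_tools()
    if missing:
        print("Not found on PATH:", ", ".join(missing))
    else:
        print("All required tools are on PATH.")
```

An empty result means both binaries were found; anything else names the tools that still need to be installed in the steps that follow.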

1. Install ksonnet

To install ksonnet, check the release page at the URL below for the latest ks version.

wget https://github.com/ksonnet/ksonnet/releases/download/v0.13.0/ks_0.13.0_linux_amd64.tar.gz

tar -vxf ks_0.13.0_linux_amd64.tar.gz

cd ks_0.13.0_linux_amd64

sudo cp ks /usr/local/bin

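Once ks has been copied to /usr/local/bin, the installation is complete; running ks version confirms that the binary works.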

2. Install kubectl

wget https://cce-storage.obs.cn-north-1.myhwclouds.com/kubectl.zip

yum install unzip

unzip kubectl.zip

cp kubectl /usr/local/bin/

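# Open the kubectl section on the cluster page

# Download the config file offered on that page and copy its contents

mkdir /root/.kube/

touch /root/.kube/config

vi /root/.kube/config

# Paste the contents, then save with :wq!

# The node already has an EIP bound, so selecting in-cluster access is enough

kubectl config use-context internal

After installation, run kubectl version and check that the reported version meets the requirement.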
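Comparing version strings by eye is error-prone (for example, 0.9 sorts after 0.11 as text but is older). The sketch below shows one way to compare a reported version against the minimums from the dependency list. The function names are hypothetical, and it assumes the version appears as dotted numbers such as `v1.11.3` or `0.13.0`; it does not parse the full `kubectl version` output.

```python
import re


def parse_version(text):
    """Extract (major, minor, patch) from strings such as 'v1.11.3' or '0.13.0'."""
    match = re.search(r"(\d+)\.(\d+)(?:\.(\d+))?", text)
    if match is None:
        raise ValueError(f"no version number found in {text!r}")
    major, minor, patch = match.groups()
    # A missing patch component is treated as 0.
    return int(major), int(minor), int(patch or 0)


def meets_minimum(found, minimum):
    """Compare numerically, component by component, rather than as text."""
    return parse_version(found) >= parse_version(minimum)


if __name__ == "__main__":
    print(meets_minimum("0.13.0", "0.11.0"))  # ksonnet requirement
    print(meets_minimum("v1.7.9", "1.8"))     # Kubernetes requirement
```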

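3. Install Kubeflow

Configuration parameters

KUBEFLOW_SRC: directory where the downloaded files are stored

KUBEFLOW_TAG: tag of the code branch to use, such as master (a v0.2 branch also exists at the time of writing)

KFAPP: directory that holds the deployment, TFJob, and other application configuration; the ksonnet app is saved under ${KFAPP}/ks_app

KUBEFLOW_REPO: path to the Kubeflow repository. You can also download it yourself and place it at a location of your choice, for example: curl -L -o /root/kubeflow/kubeflow.tar.gz https://github.com/kubeflow/kubeflow/archive/master.tar.gz; tar -xzvf /root/kubeflow/kubeflow.tar.gz -C /root/kubeflow; KUBEFLOW_REPO=/root/kubeflow/kubeflow-master/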
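To make the relationship between these four parameters concrete, here is a small sketch that derives the paths and URL used later in this guide from them. The function name `kubeflow_layout` and the dictionary keys are hypothetical; the derived values follow only what this guide states (the download.sh URL pattern, `${KUBEFLOW_REPO}/scripts/kfctl.sh`, and `${KFAPP}/ks_app`).

```python
import os
from pathlib import Path


def kubeflow_layout(env=None):
    """Derive the paths and URL this guide relies on from the four parameters."""
    env = os.environ if env is None else env
    src = Path(env["KUBEFLOW_SRC"])
    tag = env["KUBEFLOW_TAG"]
    kfapp = Path(env["KFAPP"])
    repo = Path(env["KUBEFLOW_REPO"])
    return {
        "src_dir": src,
        # URL pattern used by the curl command in the installation steps.
        "download_script_url": (
            f"https://raw.githubusercontent.com/kubeflow/kubeflow/{tag}/scripts/download.sh"
        ),
        "kfctl": repo / "scripts" / "kfctl.sh",
        "ks_app_dir": kfapp / "ks_app",
    }


if __name__ == "__main__":
    example = {
        "KUBEFLOW_SRC": "/root/kubeflow",
        "KUBEFLOW_TAG": "master",
        "KFAPP": "/root/kfapp",  # example value, not from the original guide
        "KUBEFLOW_REPO": "/root/kubeflow/kubeflow-master/",
    }
    for key, value in kubeflow_layout(example).items():
        print(f"{key}: {value}")
```

A missing variable raises `KeyError`, which is a deliberate choice here: it surfaces an unset parameter before any command is run.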

Installation steps

mkdir ${KUBEFLOW_SRC}

cd ${KUBEFLOW_SRC}

export KUBEFLOW_TAG=<version>

curl https://raw.githubusercontent.com/kubeflow/kubeflow/${KUBEFLOW_TAG}/scripts/download.sh | bash # to install manually instead, read through download.sh first

${KUBEFLOW_REPO}/scripts/kfctl.sh init ${KFAPP} --platform none # initialize; this creates the KFAPP directory

cd ${KFAPP}

${KUBEFLOW_REPO}/scripts/kfctl.sh generate k8s # generate the required components

${KUBEFLOW_REPO}/scripts/kfctl.sh apply k8s # create the corresponding resources
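The kfctl.sh steps above always run in the same order: init, change into the app directory, generate, apply. As an illustration only, the sketch below assembles that sequence as a dry-run plan and prints it without executing anything. The helper names `kfctl_plan` and `render` are hypothetical; the subcommands and flags are the ones shown in this guide.

```python
import shlex


def kfctl_plan(kubeflow_repo, kfapp, platform="none", target="k8s"):
    """Return the kfctl.sh steps from this guide as argv lists, in order."""
    kfctl = f"{kubeflow_repo.rstrip('/')}/scripts/kfctl.sh"
    return [
        [kfctl, "init", kfapp, "--platform", platform],  # creates the KFAPP directory
        ["cd", kfapp],                                   # later steps run inside it
        [kfctl, "generate", target],                     # generate the components
        [kfctl, "apply", target],                        # create the resources
    ]


def render(plan):
    """Quote each step so it can be pasted into a shell."""
    return [" ".join(shlex.quote(part) for part in step) for step in plan]


if __name__ == "__main__":
    # "my-kubeflow" is an example app name, not from the original guide.
    for line in render(kfctl_plan("/root/kubeflow/kubeflow-master/", "my-kubeflow")):
        print(line)
```

Printing the plan first makes it easy to review the exact commands for a given KUBEFLOW_REPO and KFAPP before running them by hand.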

4. Check the running workloads

Argo: a Kubernetes-based workflow engine https://argoproj.github.io/

Ambassador: API gateway https://www.getambassador.io/

tf-operator https://github.com/kubeflow/tf-operator/blob/master/developer_guide.md

The workflow UI and the Jupyter notebook are exposed externally and provide a visual, interactive interface.

5. Download the official Kubeflow example: distributed CNN model training with TensorFlow

Run the script

CNN_JOB_NAME=mycnnjob

VERSION=v0.2-branch

KS_APP=cnnjob

KF_ENV=default

ks init ${KS_APP}

cd ${KS_APP}

ks registry add kubeflow-git github.com/kubeflow/kubeflow/tree/${VERSION}/kubeflow

ks pkg install kubeflow-git/examples

ks generate tf-job-simple ${CNN_JOB_NAME} --name=${CNN_JOB_NAME}

ks apply ${KF_ENV} -c ${CNN_JOB_NAME}

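Inspect the resulting TFJob resource

One ps (acting as the parameter server) and one worker (acting as the compute server) are started.

YAML definition of the TFJob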

apiVersion: kubeflow.org/v1alpha2
kind: TFJob
metadata:
  annotations:
    ksonnet.io/managed: '{"pristine":"H4sIAAAAAAAA/+yRTWvcTAzH78/H0Nle7zpPaGPwqSWUHtqlCc2hBCOPZe/U84ZGs8Fd9ruXcUi3L5+g0DkM0l8aifn/ToBBfyaO2jtoYE49jcY/bTxP1XGHJhywhgJm7QZo4P72ve+hAEuCAwpCcwKDPZmYozl650g22lfK2+AdOYEG7KKc++p7OBfg0NLP0rMSA6osDzRiMpIbYyCVZ8r4iYLRCu8CqXXLfr2FbDAolOOXXuWdoHbEEZovJ0CecgBhkYN3UICMnXKu68mpg0We4yYsUEBZ9ijq0EX9jdqrelWsH8i0TNGRXG9X6YissTfUpTCgUBuQ0ZIQd5H4SLw2jSbFQxdl8Ela4USr6pLtppBiu1tT4xWa7vJ+oKNW1KqQ1vLvKQp2o2eL0n549/AGHgvQFqds2KQ4u/1CrZKxvPyuVCE1x3q7e7Wrt3XZD9hf39yUg2ZZyvr1/zhewQ8iQi56zkOggCfPs3bTW83QQOWD/Dq4iop1kFj9YSicHwtgioIse2+0WqCBj+4WtUlMcD4XF6D3S8iL93cZ94PnmThD5OdqhGZX/KP8d1LO57/vAAAA//8BAAD//zMKTHZaBAAA"}'
  clusterName: ""
  creationTimestamp: 2018-09-26T15:33:07Z
  generation: 0
  labels:
    app.kubernetes.io/deploy-manager: ksonnet
    ksonnet.io/component: mycnnjob
  name: mycnnjob
  namespace: default
  resourceVersion: "2293964"
  selfLink: /apis/kubeflow.org/v1alpha2/namespaces/default/tfjobs/mycnnjob
  uid: 777da1bb-c1a1-11e8-8661-fa163e4006b8
spec:
  cleanPodPolicy: Running
  tfReplicaSpecs:
    PS:
      replicas: 1
      restartPolicy: Never
      template:
        metadata:
          creationTimestamp: null
        spec:
          containers:
          - args:
            - python
            - tf_cnn_benchmarks.py
            - --batch_size=32
            - --model=resnet50
            - --variable_update=parameter_server
            - --flush_stdout=true
            - --num_gpus=1
            - --local_parameter_device=cpu
            - --device=cpu
            - --data_format=NHWC
            image: gcr.io/kubeflow/tf-benchmarks-cpu:v20171202-bdab599-dirty-284af3
            name: tensorflow
            ports:
            - containerPort: 2222
              name: tfjob-port
            resources: {}
            workingDir: /opt/tf-benchmarks/scripts/tf_cnn_benchmarks
          restartPolicy: OnFailure
    Worker:
      replicas: 1
      restartPolicy: Never
      template:
        metadata:
          creationTimestamp: null
        spec:
          containers:
          - args:
            - python
            - tf_cnn_benchmarks.py
            - --batch_size=32
            - --model=resnet50
            - --variable_update=parameter_server
            - --flush_stdout=true
            - --num_gpus=1
            - --local_parameter_device=cpu
            - --device=cpu
            - --data_format=NHWC
            image: gcr.io/kubeflow/tf-benchmarks-cpu:v20171202-bdab599-dirty-284af3
            name: tensorflow
            ports:
            - containerPort: 2222
              name: tfjob-port
            resources: {}
            workingDir: /opt/tf-benchmarks/scripts/tf_cnn_benchmarks
          restartPolicy: OnFailure
status:
  completionTime: 2018-09-26T15:33:58Z
  conditions:
  - lastTransitionTime: 2018-09-26T15:33:07Z
    lastUpdateTime: 2018-09-26T15:33:07Z
    message: TFJob mycnnjob is created.
    reason: TFJobCreated
    status: "True"
    type: Created
  - lastTransitionTime: 2018-09-26T15:33:07Z
    lastUpdateTime: 2018-09-26T15:33:10Z
    message: TFJob mycnnjob is running.
    reason: TFJobRunning
    status: "False"
    type: Running
  - lastTransitionTime: 2018-09-26T15:33:07Z
    lastUpdateTime: 2018-09-26T15:33:58Z
    message: TFJob mycnnjob is failed.
    reason: TFJobFailed
    status: "True"
    type: Failed
  startTime: 2018-09-26T15:33:10Z
  tfReplicaStatuses:
    Chief: {}
    Master: {}
    PS: {}
    Worker: {}
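The status.conditions list in the TFJob above records each state the job passed through. One plausible way to read it, sketched below, is to take the most recently updated condition whose status is "True". This reading rule and the function name `current_condition` are assumptions made for illustration, not documented tf-operator behavior; the condition values are copied from the status shown above.

```python
def current_condition(conditions):
    """Return the type of the latest-updated condition whose status is "True"."""
    active = [c for c in conditions if c.get("status") == "True"]
    if not active:
        return None
    # Timestamps share one UTC ISO-8601 format, so string order is time order.
    return max(active, key=lambda c: c["lastUpdateTime"])["type"]


# Values copied from the status block of the TFJob in this guide.
conditions = [
    {"type": "Created", "status": "True", "lastUpdateTime": "2018-09-26T15:33:07Z"},
    {"type": "Running", "status": "False", "lastUpdateTime": "2018-09-26T15:33:10Z"},
    {"type": "Failed", "status": "True", "lastUpdateTime": "2018-09-26T15:33:58Z"},
]

print(current_condition(conditions))  # prints: Failed
```

Read this way, the sample job was created, ran briefly, and ended in the Failed state about 50 seconds after creation.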

6. Distributed TensorFlow programming

Example: matrix multiplication using workers and parameter servers https://github.com/tensorflow/k8s/tree/master/examples/tf_sample

for job_name in cluster_spec.keys():

tf.train.ClusterSpec holds the information that the master inside the cluster uses to schedule computation.
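A cluster spec is essentially a mapping from job name (such as ps or worker) to a list of task addresses. The sketch below, in plain Python without TensorFlow, shows how such a mapping can be read from the TF_CONFIG environment variable that tf-operator sets on each replica, and how iterating over its job names yields device strings like /job:ps/task:0. The function names and the host names in the sample are hypothetical; only port 2222 comes from the TFJob definition in this guide.

```python
import json
import os


def read_tf_config(environ=None):
    """Return (cluster_spec, task_type, task_index) parsed from TF_CONFIG."""
    environ = os.environ if environ is None else environ
    config = json.loads(environ.get("TF_CONFIG", "{}"))
    task = config.get("task", {})
    return config.get("cluster", {}), task.get("type"), task.get("index")


def describe_cluster(cluster_spec):
    """List every task as '/job:<name>/task:<index> -> <address>'."""
    lines = []
    for job_name in sorted(cluster_spec.keys()):
        for index, address in enumerate(cluster_spec[job_name]):
            lines.append(f"/job:{job_name}/task:{index} -> {address}")
    return lines


if __name__ == "__main__":
    # Hypothetical host names; a real TF_CONFIG is injected by the operator.
    sample = {
        "TF_CONFIG": json.dumps({
            "cluster": {"ps": ["ps-0:2222"], "worker": ["worker-0:2222"]},
            "task": {"type": "worker", "index": 0},
        })
    }
    cluster_spec, task_type, task_index = read_tf_config(sample)
    print(f"this replica is {task_type} {task_index}")
    for line in describe_cluster(cluster_spec):
        print(line)
```

In a TensorFlow 1.x program the same mapping would typically be passed to tf.train.ClusterSpec; that step is omitted here so the sketch runs without TensorFlow installed.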

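(1. The ps job holds the parameter values to be computed on, and the workers perform the computation on those values. 2. Parameter updates and transfer: the ps sends the updated parameters to the workers, and the workers return the computed gradients to the ps.)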
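The exchange described above (the ps hands out parameters, workers send back gradients) can be illustrated with a toy, single-process simulation. This is plain Python rather than TensorFlow, and every name in it (`ParameterServer`, `worker_gradient`, `train`) is hypothetical; it fits a single weight w so that w * x approximates y using gradient descent.

```python
class ParameterServer:
    """Holds the parameter and applies the gradients that workers push."""

    def __init__(self, weight, learning_rate):
        self.weight = weight
        self.learning_rate = learning_rate

    def pull(self):
        # The ps sends the current parameter value to a worker.
        return self.weight

    def push(self, gradient):
        # The ps updates the parameter with a gradient returned by a worker.
        self.weight -= self.learning_rate * gradient


def worker_gradient(weight, x, y):
    """Gradient of the squared error (weight * x - y) ** 2 with respect to weight."""
    return 2 * (weight * x - y) * x


def train(samples, steps=50, learning_rate=0.05):
    ps = ParameterServer(weight=0.0, learning_rate=learning_rate)
    for _ in range(steps):
        for x, y in samples:  # each sample stands in for one worker's batch
            weight = ps.pull()
            ps.push(worker_gradient(weight, x, y))
    return ps.weight


if __name__ == "__main__":
    # Data generated from y = 3 * x, so the weight should approach 3.
    print(train([(1.0, 3.0), (2.0, 6.0)]))
```

In a real distributed job the pull and push calls cross the network between the ps and worker pods; here they are ordinary method calls so the flow of parameters and gradients is easy to follow.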

with tf.device("/job:ps/task:0"):
