Neural Networks and Deep Learning, Week 2 Quiz: Neural Network Basics

1. What does a neuron compute?

- 🔲A neuron computes the mean of all features before applying the output to an activation function

- 🔲A neuron computes a function g that scales the input x linearly (Wx + b)

- 🔲A neuron computes an activation function followed by a linear function (z = Wx + b)

- ✅A neuron computes a linear function (z = Wx + b) followed by an activation function
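The order of the two steps can be sketched in NumPy. This is a minimal illustration, assuming a sigmoid as the activation g; the helper names `sigmoid` and `neuron` and the sample values are hypothetical, not part of the quiz.

```python
import numpy as np

def sigmoid(z):
    """Logistic activation, used here only as one possible choice of g."""
    return 1.0 / (1.0 + np.exp(-z))

def neuron(W, x, b):
    """Compute the linear function z = Wx + b, then apply the activation."""
    z = np.dot(W, x) + b   # linear step
    return sigmoid(z)      # activation step

W = np.array([[0.5, -0.25]])   # hypothetical weights, shape (1, 2)
x = np.array([[1.0], [2.0]])   # hypothetical input, shape (2, 1)
b = 0.0                        # hypothetical bias

a = neuron(W, x, b)            # z = 0.5 - 0.5 = 0, so a = sigmoid(0)
```

With these sample values the linear step gives z = 0, so the activation returns 0.5.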

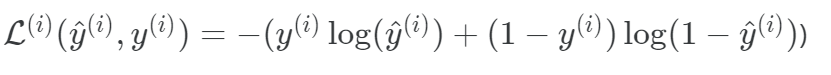

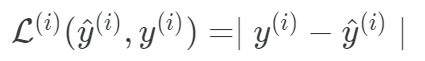

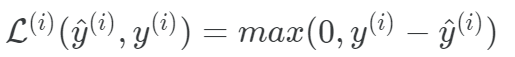

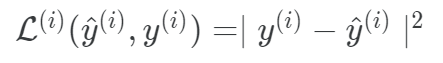

2.Which of these is the "Logistic Loss"?

哪个是逻辑损失函数

- ✅

- 🔲

- 🔲

- 🔲
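For reference, the logistic loss for a single example with label y and prediction ŷ is usually written as L(ŷ, y) = -(y log ŷ + (1 - y) log(1 - ŷ)). The sketch below evaluates that formula; the function name `logistic_loss` and the sample predictions are illustrative assumptions.

```python
import numpy as np

def logistic_loss(y_hat, y):
    """Per-example logistic (binary cross-entropy) loss."""
    return -(y * np.log(y_hat) + (1 - y) * np.log(1 - y_hat))

# Hypothetical predictions for a positive example (y = 1)
loss_good = logistic_loss(0.9, 1)   # confident and correct: -log(0.9)
loss_bad = logistic_loss(0.1, 1)    # confident and wrong:   -log(0.1)
```

The loss grows as the predicted probability moves away from the true label.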

3. Suppose img is a (32,32,3) array, representing a 32x32 image with 3 color channels: red, green and blue. How do you reshape this into a column vector?

- 🔲x = img.reshape((1,32*32*3))

- 🔲x = img.reshape((3,32*32))

- ✅x = img.reshape((32*32*3,1))

- 🔲x = img.reshape((32*32,3))
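A quick check of the reshape, using random data as a stand-in for a real image. The `x_alt` variant is an extra illustration that relies on NumPy inferring a dimension given as `-1`.

```python
import numpy as np

img = np.random.randn(32, 32, 3)     # stand-in for a 32x32 RGB image

x = img.reshape((32 * 32 * 3, 1))    # column vector with 3072 rows
x_alt = img.reshape((-1, 1))         # -1 lets NumPy infer the 3072
```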

4. Consider the following two random arrays "a" and "b":

    a = np.random.randn(2, 3)  # a.shape = (2, 3)
    b = np.random.randn(2, 1)  # b.shape = (2, 1)
    c = a + b

What will be the shape of "c"?

- 🔲c.shape = (3, 2)

- 🔲c.shape = (2, 1)

- 🔲The computation cannot happen because the sizes don't match. It's going to be "Error"!

- ✅c.shape = (2, 3)
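The broadcast can be made explicit for comparison. In this sketch `np.tile` copies the single column of b three times, which should give the same result NumPy produces implicitly; `c_explicit` is a name introduced only for the comparison.

```python
import numpy as np

a = np.random.randn(2, 3)
b = np.random.randn(2, 1)

c = a + b                              # b is broadcast across the 3 columns
c_explicit = a + np.tile(b, (1, 3))    # same sum with the copies spelled out
```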

5. Consider the following two random arrays "a" and "b":

    a = np.random.randn(4, 3)  # a.shape = (4, 3)
    b = np.random.randn(3, 2)  # b.shape = (3, 2)
    c = a*b

What will be the shape of "c"?

- ✅The computation cannot happen because the sizes don't match. It's going to be "Error"!

- 🔲c.shape = (4,2)

- 🔲c.shape = (4, 3)

- 🔲c.shape = (3, 3)
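The failure can be reproduced directly. Element-wise `*` needs shapes that are equal or broadcastable, and (4, 3) with (3, 2) is neither, so NumPy raises a `ValueError`. The `np.dot` line is added only as a contrast, since matrix multiplication is defined for these shapes.

```python
import numpy as np

a = np.random.randn(4, 3)
b = np.random.randn(3, 2)

try:
    c = a * b                 # element-wise product: shapes are incompatible
    raised = False
except ValueError:
    raised = True             # NumPy reports that the operands cannot be broadcast

product_shape = np.dot(a, b).shape   # matrix product works: (4, 3) x (3, 2)
```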

6. Suppose you have $n_x$ input features per example.

Recall that $X = [x^{(1)}\ x^{(2)}\ \dots\ x^{(m)}]$.

What is the dimension of X?

- ✅$(n_x, m)$

- 🔲$(m, 1)$

- 🔲$(1, m)$

- 🔲$(m, n_x)$
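Stacking the examples as columns shows where the shape comes from. The sizes `n_x = 4` and `m = 5` below are arbitrary values chosen for illustration.

```python
import numpy as np

n_x, m = 4, 5   # hypothetical: 4 features, 5 examples

# Each example x^(i) is a column vector of shape (n_x, 1)
examples = [np.random.randn(n_x, 1) for _ in range(m)]

# Placing the columns side by side gives X with one column per example
X = np.hstack(examples)
```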

7. Recall that "np.dot(a,b)" performs a matrix multiplication on a and b, whereas "a*b" performs an element-wise multiplication.

Consider the following two random arrays "a" and "b":

    a = np.random.randn(12288, 150)  # a.shape = (12288, 150)
    b = np.random.randn(150, 45)     # b.shape = (150, 45)
    c = np.dot(a,b)

What is the shape of c?

- 🔲The computation cannot happen because the sizes don't match. It's going to be "Error"!

- 🔲c.shape = (12288, 150)

- ✅c.shape = (12288, 45)

- 🔲c.shape = (150,150)
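The shape rule for a matrix product is (n, k) times (k, p) gives (n, p): the inner dimensions must match and then drop out. A short check with the shapes from the question:

```python
import numpy as np

a = np.random.randn(12288, 150)
b = np.random.randn(150, 45)

# Inner dimensions (150 and 150) match, so the result is (12288, 45)
c = np.dot(a, b)
```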

8. Consider the following code snippet:

    # a.shape = (3,4)
    # b.shape = (4,1)
    for i in range(3):
        for j in range(4):
            c[i][j] = a[i][j] + b[j]

How do you vectorize this?

- 🔲c = a + b

- ✅c = a + b.T

- 🔲c = a.T + b.T

- 🔲c = a.T + b
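The loop and the vectorized form can be compared directly. This sketch assumes `c` starts as a zero array of shape (3, 4), which the snippet does not show, and indexes `b[j, 0]` so that a scalar is stored in each cell.

```python
import numpy as np

a = np.random.randn(3, 4)
b = np.random.randn(4, 1)

# Explicit loop: column j of a is shifted by the j-th entry of b
c_loop = np.zeros((3, 4))            # assumed initialization
for i in range(3):
    for j in range(4):
        c_loop[i, j] = a[i, j] + b[j, 0]

# Vectorized: b.T has shape (1, 4) and is broadcast down the 3 rows
c_vec = a + b.T
```

Transposing b lines its four entries up with the four columns of a, which is what the inner index j does in the loop.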

9. Consider the following code:

    a = np.random.randn(3, 3)
    b = np.random.randn(3, 1)
    c = a*b

What will be c?

- ✅This will invoke broadcasting, so b is copied three times to become (3,3), and * is an element-wise product, so c.shape will be (3, 3)

- 🔲It will lead to an error since you cannot use “*” to operate on these two matrices. You need to instead use np.dot(a,b)

- 🔲This will multiply a 3x3 matrix a with a 3x1 vector, thus resulting in a 3x1 vector. That is, c.shape = (3,1).

- 🔲This will invoke broadcasting, so b is copied three times to become (3, 3), and * invokes a matrix multiplication operation of two 3x3 matrices, so c.shape will be (3, 3)
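The difference between the element-wise product and the matrix product can be checked side by side. `c_tiled` and `c_dot` are names introduced here only for the comparison.

```python
import numpy as np

a = np.random.randn(3, 3)
b = np.random.randn(3, 1)

c = a * b                            # element-wise, b broadcast across columns
c_tiled = a * np.tile(b, (1, 3))     # the same product with b copied explicitly

c_dot = np.dot(a, b)                 # matrix product, for contrast
```

Here `c` keeps the (3, 3) shape of a, with row i scaled by the i-th entry of b, while `c_dot` collapses to (3, 1).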

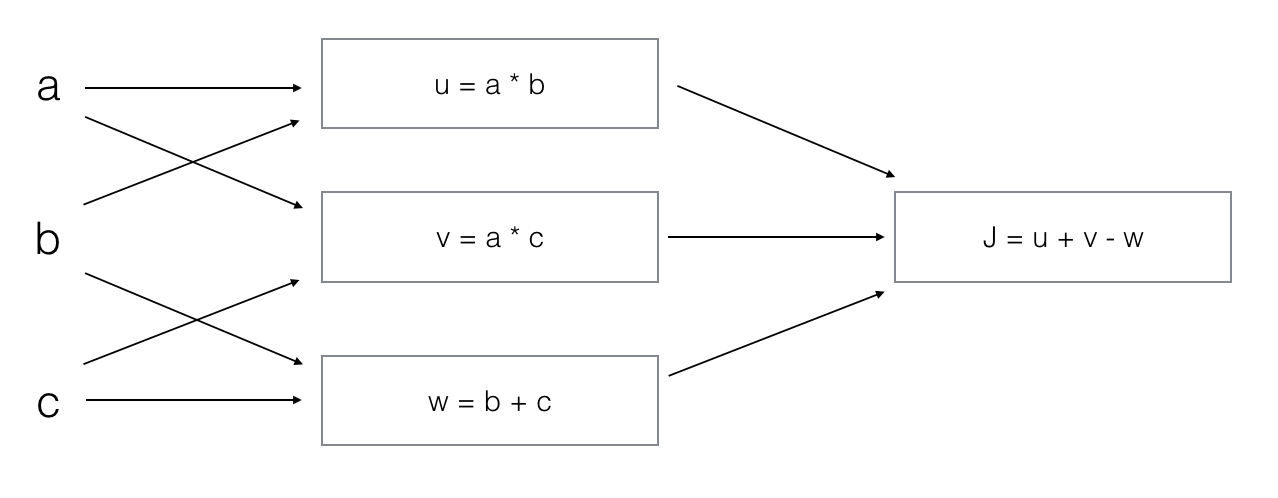

10.Consider the following computation graph.

考虑以下计算图

What is the output J?

J的输出是什么?

- 🔲J = (c - 1)*(b + a)

- ✅J = (a - 1) * (b + c)

- 🔲J = (b - 1) * (c + a)

- 🔲J = a*b + b*c + a*c
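The graph itself is only available as an image, so the sketch below rests on an assumption: that the intermediate nodes are u = a*b, v = a*c and w = b + c, with J = u + v - w. Under that assumption, J = ab + ac - (b + c) = a(b + c) - (b + c), which factors to (a - 1)(b + c). The function names are hypothetical.

```python
def j_from_graph(a, b, c):
    """Evaluate J node by node, under the assumed graph structure."""
    u = a * b
    v = a * c
    w = b + c
    return u + v - w

def j_factored(a, b, c):
    """The factored form of the same expression."""
    return (a - 1) * (b + c)

# Hypothetical integer inputs: u = 12, v = 15, w = 9, so J = 18
j_nodes = j_from_graph(3, 4, 5)
j_closed = j_factored(3, 4, 5)
```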

Source: blog.csdn.net. Author: 沧夜2021. Copyright belongs to the original author; please contact the author before reposting.

Original link: blog.csdn.net/CANGYE0504/article/details/117230389
