七段液晶数字识别-处理程序

【摘要】

简 介: 对于七段数码管图片进行增强,利用LeNet对数据集建立识别模型。基于这个模型将来用于实际图片中的数字进行识别。 关键词: LCD,LENET

...

简 介: 对于七段数码管图片进行增强,利用LeNet对数据集建立识别模型。基于这个模型将来用于实际图片中的数字进行识别。

关键词: LCD,LENET

§01 七段数码识别

1.1 数据集合准备

1.1.1 论文中图片

(1)原始图片

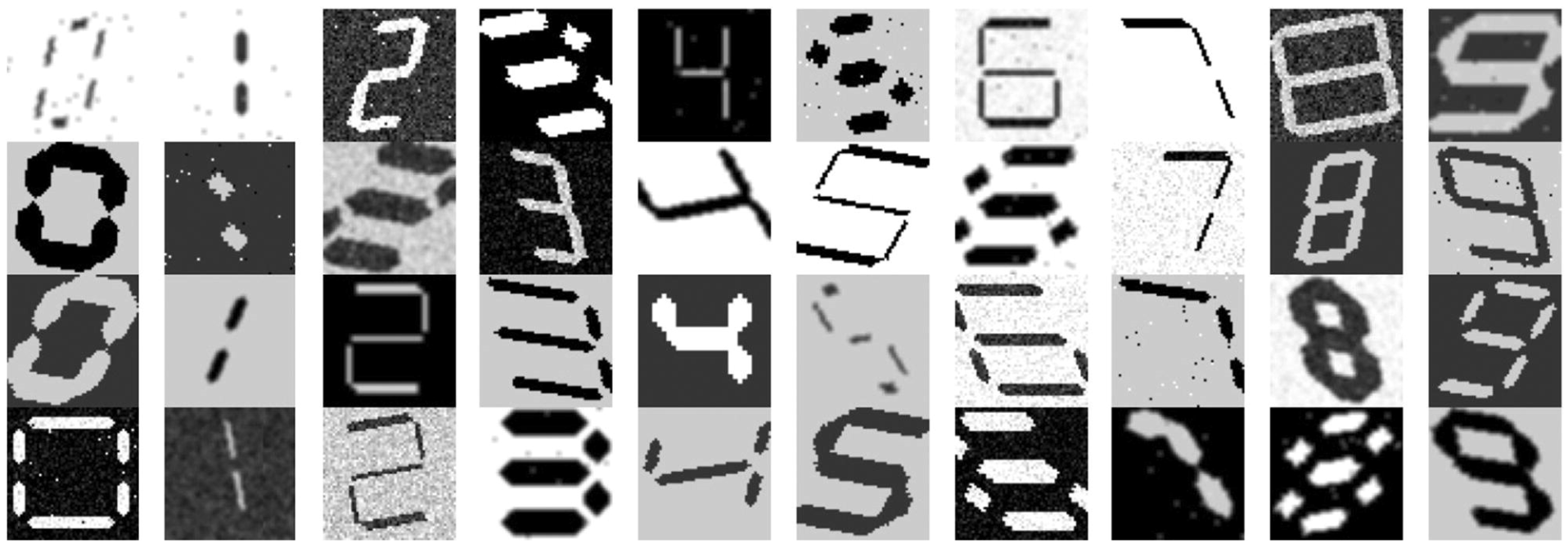

如下是来自于 7 segment LCD Optical Character Recognition 的十个字符的数据集合。将它们分割成各自的图片。

▲ 七段数码识别

▲ 图1.1 七段数码变形图片

(2)分割数字图片

from headm import * # =

import cv2

iddim = [8, 11, 9, 10]

outdir = r'd:\temp\lcd2seg'

if not os.path.isdir(outdir):

os.makedirs(outdir)

def pic2num(picid, boxid, rownum, headstr):

global outdir

imgfile = tspgetdopfile(picid)

printt(imgfile:)

img = cv2.imread(imgfile)

gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

printt(img.shape, gray.shape)

plt.imshow(gray, plt.cm.gray)

plt.savefig(r'd:\temp\figure1.jpg')

tspshowimage(image=r'd:\temp\figure1.jpg')

imgrect = tspgetrange(picid)

boxrect = tspgetrange(boxid)

imgwidth = gray.shape[1]

imgheight = gray.shape[0]

boxheight = boxrect[3] - boxrect[1]

boxwidth = boxrect[2] - boxrect[0]

for i in range(rownum):

rowstart = boxrect[1] - imgrect[1] + boxheight * i / rownum

rowend = boxrect[1] - imgrect[1] + boxheight * (i+1) / rownum

imgtop = int(rowstart * imgheight / (imgrect[3] - imgrect[1]))

imgbottom = int(rowend * imgheight / (imgrect[3] - imgrect[1]))

for j in range(10):

colstart = boxrect[0] - imgrect[0] + boxwidth * j / 10

colend = boxrect[0] - imgrect[0] + boxwidth * (j + 1) / 10

imgleft = int(colstart * imgwidth / (imgrect[2] - imgrect[0]))

imgright = int(colend * imgwidth / (imgrect[2] - imgrect[0]))

imgdata = gray[imgtop:imgbottom+1, imgleft:imgright+1]

fn = os.path.join(outdir, '%s_%d_%d.jpg'%(headstr, i, j))

cv2.imwrite(fn, imgdata)

pic2num(iddim[2], iddim[3], 4, '2')

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

▲ 图1.1.2 分割出的数字图片

总共获得图片70个。图片的命名标准:最后一个字母代表数字。

1.2 数据集合扩增

对图片进行扩增,扩增方法:

- 旋转:-25 ~ 25

- 比例:0.9 ~ 1.1

- 平移:x,y: -5,5

每个图片扩增到原来的225个。

1.2.1 图片扩增程序

from headm import * # =

import cv2

import paddle

from paddle.vision.transforms import Resize,rotate,adjust_brightness,adjust_contrast

from PIL import Image

from tqdm import tqdm

from tqdm import tqdm

indir = '/home/aistudio/work/lcd7seg/lcd2seg'

outdir = '/home/aistudio/work/lcd7seg/lcdaugment'

infile = os.listdir(indir)

printt(infile:)

BUF_SIZE = 512

def rotateimg(img, degree, ratio, shift_x, shift_y):

imgavg = mean(img.flatten())

if imgavg < 100:

img[img >= 200] = imgavg

imgavg = mean(img.flatten())

img[img >= 200] = imgavg

else: imgavg = 255

whiteimg = ones((BUF_SIZE, BUF_SIZE, 3))*(imgavg/255)

imgshape = img.shape

imgwidth = imgshape[0]

imgheight = imgshape[1]

left = (BUF_SIZE - imgwidth) // 2

top = (BUF_SIZE - imgheight) // 2

whiteimg[left:left+imgwidth, top:top+imgheight, :] = img/255

img1 = rotate(whiteimg, degree)

centerx = BUF_SIZE//2+shift_x

centery = BUF_SIZE//2+shift_y

imgwidth1 = int(imgwidth * ratio)

imgheight1 = int(imgheight * ratio)

imgleft = centerx - imgwidth1//2

imgtop = centery - imgheight1//2

imgnew = img1[imgleft:imgwidth1+imgleft, imgtop:imgtop+imgheight1, :]

imgnew = cv2.resize(imgnew, (32,32))

return imgnew*255

gifpath = '/home/aistudio/GIF'

if not os.path.isdir(gifpath):

os.makedirs(gifpath)

gifdim = os.listdir(gifpath)

for f in gifdim:

fn = os.path.join(gifpath, f)

if os.path.isfile(fn):

os.remove(fn)

count = 0

def outimg(fn):

global count

imgfile = os.path.join(indir, fn)

img = cv2.imread(imgfile)

num = fn.split('.')[0][-1]

rotate = linspace(-25, 25, 5).astype(int)

ratio = linspace(0.8, 1.2, 5)

shiftxy = linspace(-5, 5, 3).astype(int)

for r in rotate:

for t in ratio:

for s in shiftxy:

for h in shiftxy:

imgout = rotateimg(img, r, t, s, h)

outfilename = os.path.join(gifpath, '%04d_%s.jpg'%(count, num))

count += 1

cv2.imwrite(outfilename, imgout)

outfilename = os.path.join(gifpath, '%04d_%s.jpg'%(count, num))

count += 1

cv2.imwrite(outfilename, 255-imgout)

for id,f in tqdm(enumerate(infile)):

if f.find('jpg') < 0: continue

outimg(f)

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58

- 59

- 60

- 61

- 62

- 63

- 64

- 65

- 66

- 67

- 68

- 69

- 70

- 71

- 72

- 73

- 74

- 75

- 76

- 77

- 78

- 79

- 80

- 81

- 82

- 83

- 84

- 85

1.2.2 扩增结果

▲ 图 每个图片倍增后的图片

▲ 图 每个图片倍增后的图片

每个图片扩增后为225,加上颜色反向,70个图片最终获得数字图片为 31500个图片。

- 数据库参数:

-

数字: 0 ~ 9

个数: 31500

尺寸: 32×32×3

1.3 存储数据文件

1.3.1 转换代码

from headm import * # =

import cv2

lcddir = '/home/aistudio/work/lcd7seg/GIF'

filedim = os.listdir(lcddir)

printt(len(filedim))

imagedim = []

labeldim = []

for f in filedim:

fn = os.path.join(lcddir, f)

if fn.find('.jpg') < 0: continue

gray = cv2.cvtColor(cv2.imread(fn), cv2.COLOR_BGR2GRAY)

imagedim.append(gray)

num = int(f.split('.')[0][-1])

labeldim.append(num)

imgarray = array(imagedim)

label = array(labeldim)

savez('/home/aistudio/work/lcddata', lcd=imgarray, label=label)

printt("Save lcd data.")

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

1.3.2 数据文件

- 数据文件:

-

文件名称:lcddata.npz

lcd: (31500,32,32)

label:(31500)

§02 构建LeNet

2.1 构建LeNet网络

from headm import * # =

import paddle

import paddle.fluid as fluid

from paddle import to_tensor as TT

from paddle.nn.functional import square_error_cost as SQRC

datafile = '/home/aistudio/work/lcddata.npz'

data = load(datafile)

lcd = data['lcd']

llabel = data['label']

printt(lcd.shape, llabel.shape)

class Dataset(paddle.io.Dataset):

def __init__(self, num_samples):

super(Dataset, self).__init__()

self.num_samples = num_samples

def __getitem__(self, index):

data = lcd[index][newaxis,:,:]

label = llabel[index]

return paddle.to_tensor(data,dtype='float32'), paddle.to_tensor(label,dtype='int64')

def __len__(self):

return self.num_samples

_dataset = Dataset(len(llabel))

train_loader = paddle.io.DataLoader(_dataset, batch_size=100, shuffle=True)

imgwidth = 32

imgheight = 32

inputchannel = 1

kernelsize = 5

targetsize = 10

ftwidth = ((imgwidth-kernelsize+1)//2-kernelsize+1)//2

ftheight = ((imgheight-kernelsize+1)//2-kernelsize+1)//2

class lenet(paddle.nn.Layer):

def __init__(self, ):

super(lenet, self).__init__()

self.conv1 = paddle.nn.Conv2D(in_channels=inputchannel, out_channels=6, kernel_size=kernelsize, stride=1, padding=0)

self.conv2 = paddle.nn.Conv2D(in_channels=6, out_channels=16, kernel_size=kernelsize, stride=1, padding=0)

self.mp1 = paddle.nn.MaxPool2D(kernel_size=2, stride=2)

self.mp2 = paddle.nn.MaxPool2D(kernel_size=2, stride=2)

self.L1 = paddle.nn.Linear(in_features=ftwidth*ftheight*16, out_features=120)

self.L2 = paddle.nn.Linear(in_features=120, out_features=86)

self.L3 = paddle.nn.Linear(in_features=86, out_features=targetsize)

def forward(self, x):

x = self.conv1(x)

x = paddle.nn.functional.relu(x)

x = self.mp1(x)

x = self.conv2(x)

x = paddle.nn.functional.relu(x)

x = self.mp2(x)

x = paddle.flatten(x, start_axis=1, stop_axis=-1)

x = self.L1(x)

x = paddle.nn.functional.relu(x)

x = self.L2(x)

x = paddle.nn.functional.relu(x)

x = self.L3(x)

return x

model = lenet()

optimizer = paddle.optimizer.Adam(learning_rate=0.001, parameters=model.parameters())

def train(model):

model.train()

epochs = 100

for epoch in range(epochs):

for batch, data in enumerate(train_loader()):

out = model(data[0])

loss = paddle.nn.functional.cross_entropy(out, data[1])

acc = paddle.metric.accuracy(out, data[1])

loss.backward()

optimizer.step()

optimizer.clear_grad()

print('Epoch:{}, Accuracys:{}'.format(epoch, acc.numpy()))

train(model)

paddle.save(model.state_dict(), './work/model.pdparams')

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58

- 59

- 60

- 61

- 62

- 63

- 64

- 65

- 66

- 67

- 68

- 69

- 70

- 71

- 72

- 73

- 74

- 75

- 76

- 77

- 78

- 79

- 80

- 81

- 82

- 83

2.2 训练结果

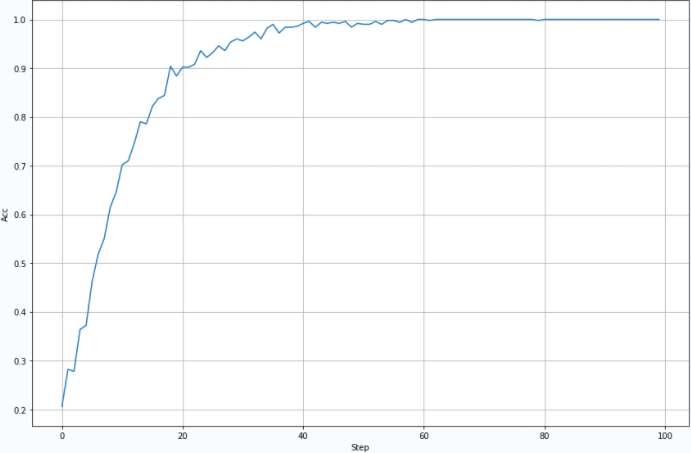

2.2.1 第一次训练

- 训练参数:

-

Lr=0.001:

BatchSize=100:

▲ 图2.2.1 训练精度

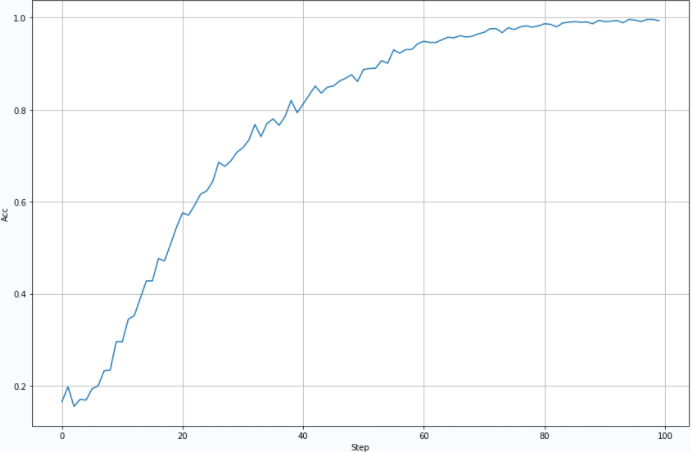

2.2.2 第二次训练

- 训练参数:

-

BatchSize=1000:

Lr=0.001:

▲ 图2.2.2 训练过程中的识别精度

2.2.3 第三次训练

- 训练参数:

-

BatchSize=5000:

Lr=0.005:

▲ 图2.2.3 训练过程中的识别精度

2.2.4 第四次训练

- 训练参数:

-

BatchSize:2000

Lr=0.002:

▲ 图2.2.4 训练过程中的识别精度

2.2.5 第五次训练

- 训练参数:

-

BatchSize:2000

Lr=0.001:

▲ 图2.2.5 训练过程中的识别精度

※ 处理总结 ※

对于七段数码管图片进行增强,利用LeNet对数据集建立识别模型。基于这个模型将来用于实际图片中的数字进行识别。

后期实验

对于通过少量的样本进行变形之后所获得的模型,在 测试LCDNet对于万用表读数识别效果 中进行了测试,发现效果好很不理想,主要原因:

- 训练样本太少,仅仅通过原有的模板进行初步图片变形,还是无法适应大多数的情况;这说明图片的扩增与实际的分布之间还是有很大的差别。

- 对于样本的归一化,Normalization,非常重要。在 一个中等规模的七段数码数据库以及利用它训练的识别网络 引入了归一化,使得训练输出的模型适应度得到了很大的提高。

▲ 图3.1.1 一个中等规模的数码库

3.1 附件:处理程序

3.1.1 LCDLENET

from headm import * # =

import paddle

import paddle.fluid as fluid

from paddle import to_tensor as TT

from paddle.nn.functional import square_error_cost as SQRC

datafile = '/home/aistudio/work/lcddata.npz'

data = load(datafile)

lcd = data['lcd']

llabel = data['label']

printt(lcd.shape, llabel.shape)

class Dataset(paddle.io.Dataset):

def __init__(self, num_samples):

super(Dataset, self).__init__()

self.num_samples = num_samples

def __getitem__(self, index):

data = lcd[index][newaxis,:,:]

label = llabel[index]

return paddle.to_tensor(data,dtype='float32'), paddle.to_tensor(label,dtype='int64')

def __len__(self):

return self.num_samples

_dataset = Dataset(len(llabel))

train_loader = paddle.io.DataLoader(_dataset, batch_size=2000, shuffle=True)

imgwidth = 32

imgheight = 32

inputchannel = 1

kernelsize = 5

targetsize = 10

ftwidth = ((imgwidth-kernelsize+1)//2-kernelsize+1)//2

ftheight = ((imgheight-kernelsize+1)//2-kernelsize+1)//2

class lenet(paddle.nn.Layer):

def __init__(self, ):

super(lenet, self).__init__()

self.conv1 = paddle.nn.Conv2D(in_channels=inputchannel, out_channels=6, kernel_size=kernelsize, stride=1, padding=0)

self.conv2 = paddle.nn.Conv2D(in_channels=6, out_channels=16, kernel_size=kernelsize, stride=1, padding=0)

self.mp1 = paddle.nn.MaxPool2D(kernel_size=2, stride=2)

self.mp2 = paddle.nn.MaxPool2D(kernel_size=2, stride=2)

self.L1 = paddle.nn.Linear(in_features=ftwidth*ftheight*16, out_features=120)

self.L2 = paddle.nn.Linear(in_features=120, out_features=86)

self.L3 = paddle.nn.Linear(in_features=86, out_features=targetsize)

def forward(self, x):

x = self.conv1(x)

x = paddle.nn.functional.relu(x)

x = self.mp1(x)

x = self.conv2(x)

x = paddle.nn.functional.relu(x)

x = self.mp2(x)

x = paddle.flatten(x, start_axis=1, stop_axis=-1)

x = self.L1(x)

x = paddle.nn.functional.relu(x)

x = self.L2(x)

x = paddle.nn.functional.relu(x)

x = self.L3(x)

return x

model = lenet()

optimizer = paddle.optimizer.Adam(learning_rate=0.001, parameters=model.parameters())

def train(model):

model.train()

epochs = 200

for epoch in range(epochs):

for batch, data in enumerate(train_loader()):

out = model(data[0])

loss = paddle.nn.functional.cross_entropy(out, data[1])

acc = paddle.metric.accuracy(out, data[1])

loss.backward()

optimizer.step()

optimizer.clear_grad()

printt('Epoch:{}, Accuracys:{}'.format(epoch, acc.numpy()))

train(model)

paddle.save(model.state_dict(), './work/model3.pdparams')

filename = '/home/aistudio/stdout.txt'

accdim = []

with open(filename, 'r') as f:

for l in f.readlines():

ll = l.split('[')

if len(ll) < 2: continue

ll = ll[-1].split(']')

if len(ll) < 2: continue

accdim.append(float(ll[0]))

plt.figure(figsize=(12, 8))

plt.plot(accdim)

plt.xlabel("Step")

plt.ylabel("Acc")

plt.grid(True)

plt.tight_layout()

plt.show()

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58

- 59

- 60

- 61

- 62

- 63

- 64

- 65

- 66

- 67

- 68

- 69

- 70

- 71

- 72

- 73

- 74

- 75

- 76

- 77

- 78

- 79

- 80

- 81

- 82

- 83

- 84

- 85

- 86

- 87

- 88

- 89

- 90

- 91

- 92

- 93

- 94

- 95

- 96

- 97

- 98

- 99

- 100

- 101

- 102

- 103

3.1.2 DrawCurve

accdim = []

with open(filename, 'r') as f:

for l in f.readlines():

ll = l.split('[')

if len(ll) < 2: continue

ll = ll[-1].split(']')

if len(ll) < 2: continue

accdim.append(float(ll[0]))

plt.figure(figsize=(12, 8))

plt.plot(accdim)

plt.xlabel("Step")

plt.ylabel("Acc")

plt.grid(True)

plt.tight_layout()

plt.show()

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

3.1.3 数据处理程序

from headm import * # =

import cv2

lcddir = '/home/aistudio/work/lcd7seg/GIF'

filedim = os.listdir(lcddir)

printt(len(filedim))

imagedim = []

labeldim = []

for f in filedim:

fn = os.path.join(lcddir, f)

if fn.find('.jpg') < 0: continue

gray = cv2.cvtColor(cv2.imread(fn), cv2.COLOR_BGR2GRAY)

imagedim.append(gray)

num = int(f.split('.')[0][-1])

labeldim.append(num)

imgarray = array(imagedim)

label = array(labeldim)

savez('/home/aistudio/work/lcddata', lcd=imgarray, label=label)

printt("Save lcd data.")

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

■ 相关文献链接:

● 相关图表链接:

- 图1.1 七段数码变形图片

- 图1.1.2 分割出的数字图片

- 图 每个图片倍增后的图片

- 图 每个图片倍增后的图片

- 图2.2.1 训练精度

- 图2.2.2 训练过程中的识别精度

- 图2.2.3 训练过程中的识别精度

- 图2.2.4 训练过程中的识别精度

- 图2.2.5 训练过程中的识别精度

文章来源: zhuoqing.blog.csdn.net,作者:卓晴,版权归原作者所有,如需转载,请联系作者。

原文链接:zhuoqing.blog.csdn.net/article/details/122347898

【版权声明】本文为华为云社区用户转载文章,如果您发现本社区中有涉嫌抄袭的内容,欢迎发送邮件进行举报,并提供相关证据,一经查实,本社区将立刻删除涉嫌侵权内容,举报邮箱:

cloudbbs@huaweicloud.com

- 点赞

- 收藏

- 关注作者

评论(0)