Getting Started with AutoScraper in Python

In the last few years, web scraping has been one of my day-to-day, frequently needed tasks. I wondered whether I could make it smart and automatic to save a lot of time. So I made AutoScraper!

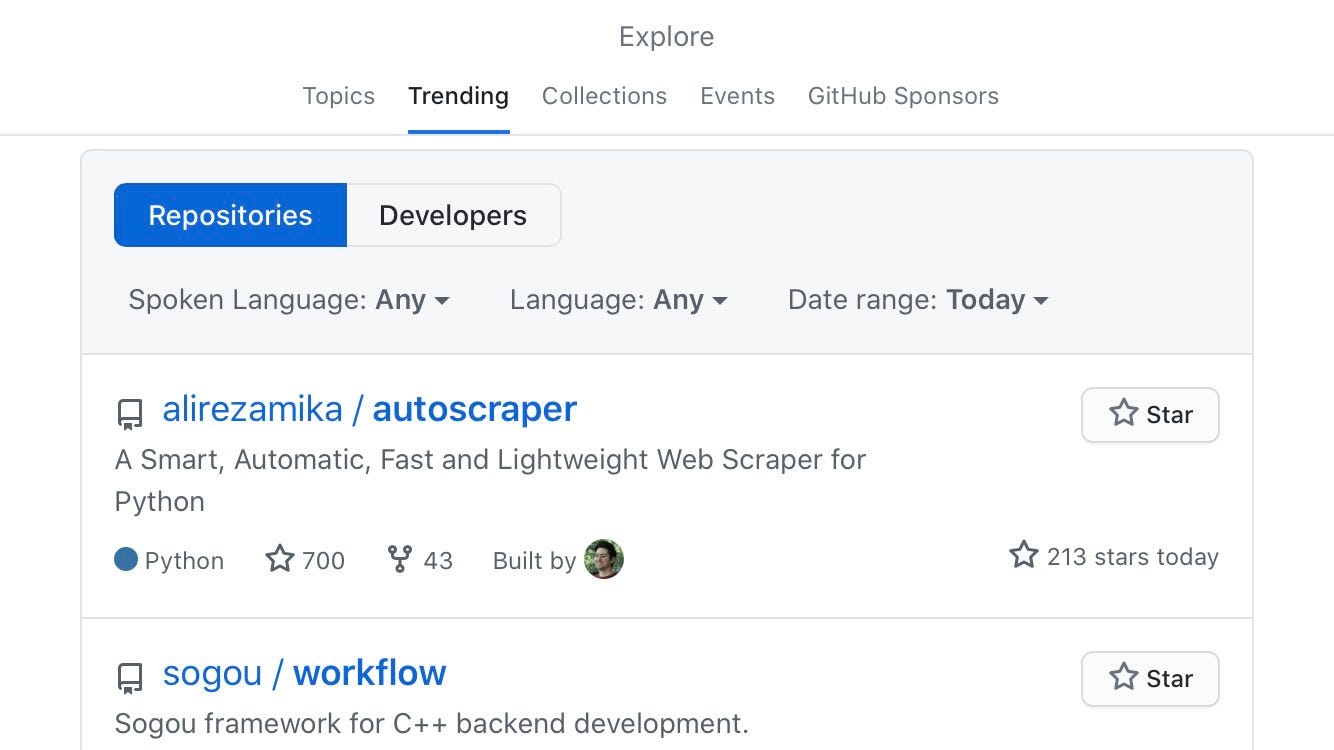

The project code is available on GitHub at https://github.com/alirezamika/autoscraper. It became the number one trending project on GitHub.

This project is made for automatic web scraping to make scraping easy. It gets a URL or the HTML content of a web page and a list of sample data that we want to scrape from that page. This data can be text, URL, or any HTML tag value of that page. It learns the scraping rules and returns similar elements. Then you can use this learned object with new URLs to get similar content or the exact same element of those new pages.
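
To make the idea of "learning scraping rules from a sample" concrete, here is a deliberately simplified sketch that uses only the standard library. It is not AutoScraper's actual algorithm: it assumes a rule is nothing more than the chain of tag names leading to the sample text, and the names `PathCollector`, `learn_rules`, and `get_similar`, as well as both HTML snippets, are made up for illustration.

```python
from html.parser import HTMLParser

# Tags that never have a closing tag; they are skipped when tracking the path.
VOID_TAGS = {"br", "hr", "img", "input", "link", "meta"}


class PathCollector(HTMLParser):
    """Record a (tag path, text) pair for every non-empty text node."""

    def __init__(self):
        super().__init__()
        self.stack = []    # currently open tags, outermost first
        self.records = []  # (path tuple, stripped text) in page order

    def handle_starttag(self, tag, attrs):
        if tag not in VOID_TAGS:
            self.stack.append(tag)

    def handle_endtag(self, tag):
        # Pop back to the matching open tag; tolerate unclosed inner tags.
        if tag in self.stack:
            while self.stack.pop() != tag:
                pass

    def handle_data(self, data):
        text = data.strip()
        if text:
            self.records.append((tuple(self.stack), text))


def collect(html):
    parser = PathCollector()
    parser.feed(html)
    parser.close()
    return parser.records


def learn_rules(html, wanted_list):
    """Return the tag paths under which the sample texts appear."""
    wanted = set(wanted_list)
    return {path for path, text in collect(html) if text in wanted}


def get_similar(html, rules):
    """Return every text whose tag path matches a learned rule."""
    return [text for path, text in collect(html) if path in rules]


train_page = """
<html><body>
  <h1>Web scraping with Python</h1>
  <div class="related">
    <a href="/q/1">How to call an external command?</a>
    <a href="/q/2">What are metaclasses in Python?</a>
  </div>
  <p>Unrelated footer text</p>
</body></html>
"""

new_page = """
<html><body>
  <h1>Convert bytes to a string</h1>
  <div class="related">
    <a href="/q/3">Does Python have a ternary conditional operator?</a>
    <a href="/q/4">Convert bytes to a string</a>
  </div>
  <p>Another footer</p>
</body></html>
"""

# Learn from one sample on the first page, then reuse the rules on a new page.
rules = learn_rules(train_page, ["How to call an external command?"])
print(get_similar(train_page, rules))
print(get_similar(new_page, rules))
```

One sample title is enough for this toy version to pick up every link in the same position, and the learned rules carry over to a second page with the same layout. The real library presumably relies on richer signals than a bare tag path, so treat this only as a mental model.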


Installation

It’s compatible with Python 3.


Install the latest version from the git repository using pip:

    $ pip install git+https://github.com/alirezamika/autoscraper.git

How to use

Getting similar results

Say we want to fetch all related post titles on a Stack Overflow page:

    from autoscraper import AutoScraper

    url = 'https://stackoverflow.com/questions/2081586/web-scraping-with-python'

    # We can add one or multiple candidates here.
    # You can also put urls here to retrieve urls.
    wanted_list = ["How to call an external command?"]

    scraper = AutoScraper()
    result = scraper.build(url, wanted_list)
    print(result)

Here’s the output:

    [
        'How do I merge two dictionaries in a single expression in Python (taking union of dictionaries)?',
        'How to call an external command?',
        'What are metaclasses in Python?',
        'Does Python have a ternary conditional operator?',
        'How do you remove duplicates from a list whilst preserving order?',
        'Convert bytes to a string',
        'How to get line count of a large file cheaply in Python?',
        "Does Python have a string 'contains' substring method?",
        'Why is "1000000000000000 in range(1000000000000001)" so fast in Python 3?'
    ]

Now you can use the scraper object to get related topics of any Stack Overflow page:

    scraper.get_result_similar('https://stackoverflow.com/questions/606191/convert-bytes-to-a-string')

Getting exact results

Say we want to scrape live stock prices from Yahoo Finance:


    from autoscraper import AutoScraper

    url = 'https://finance.yahoo.com/quote/AAPL/'
    wanted_list = ["124.81"]

    scraper = AutoScraper()

    # Here we can also pass html content via the html parameter instead of the url (html=html_content)
    result = scraper.build(url, wanted_list)
    print(result)

You can also pass any custom requests module parameter. For example, you may want to use proxies or custom headers:

    proxies = {
        "http": 'http://127.0.0.1:8001',
        "https": 'https://127.0.0.1:8001',
    }

    result = scraper.build(url, wanted_list, request_args=dict(proxies=proxies))

Now we can get the price of any symbol:

    scraper.get_result_exact('https://finance.yahoo.com/quote/MSFT/')

You may want to get other info as well. For example, if you also want the market cap, you can just append it to the wanted list. The get_result_exact method retrieves the data in the same order as the wanted list.
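
As a rough sketch of that two-field setup, the helpers below wrap the calls shown earlier. The function names `quote_url`, `build_quote_scraper`, and `fetch_quote` are hypothetical, and the sample strings have to be copied from the live page at the moment you build the scraper, so no concrete market cap value is assumed here.

```python
def quote_url(symbol):
    """Build the Yahoo Finance quote URL used throughout this article."""
    return f"https://finance.yahoo.com/quote/{symbol}/"


def build_quote_scraper(sample_url, price_text, market_cap_text):
    """Learn rules for two fields from one sample page.

    The order of the wanted list (price first, market cap second) is the
    order in which get_result_exact is described as returning the data.
    """
    # Imported here so the helper module loads even before the package
    # from this article is installed.
    from autoscraper import AutoScraper

    wanted_list = [price_text, market_cap_text]
    scraper = AutoScraper()
    scraper.build(sample_url, wanted_list)
    return scraper


def fetch_quote(scraper, symbol):
    """Return the exact-match results for another symbol's quote page."""
    return scraper.get_result_exact(quote_url(symbol))
```

A call such as `build_quote_scraper(quote_url('AAPL'), '124.81', market_cap)`, with `market_cap` set to the string currently shown on the page, followed by `fetch_quote(scraper, 'MSFT')`, should then give the price and the market cap in that order. If the page content has changed since the samples were copied, the build step will not find them and the scraper has to be rebuilt with fresh values.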

Another example: Say we want to scrape the about text, the number of stars, and the link to the issues page of GitHub repo pages:

    url = 'https://github.com/alirezamika/autoscraper'
    wanted_list = ['A Smart, Automatic, Fast and Lightweight Web Scraper for Python', '662', 'https://github.com/alirezamika/autoscraper/issues']

    scraper.build(url, wanted_list)

Simple, right?

Saving the model

We can now save the built model to use it later. To save:


    # Give it a file path
    scraper.save('yahoo-finance')

And to load:

    scraper.load('yahoo-finance')

Generating the scraper python code

We can also generate stand-alone code for the learned scraper so it can be used anywhere:

    code = scraper.generate_python_code()
    print(code)

It will print the generated code. There’s a class named GeneratedAutoScraper with the methods get_result_similar and get_result_exact, which you can use. You can also use the get_result method to get both.

Thanks.

I hope this project is useful for you and saves you time, too. If you have any questions, just ask below and I will answer them. I look forward to hearing your feedback and suggestions.

Happy Coding ❤️
