[论文阅读] (19)英文论文Evaluation(实验数据集、指标和环境)如何描述及精句摘抄——以系统AI安全顶会为例

《娜璋带你读论文》系列主要是督促自己阅读优秀论文及听取学术讲座,并分享给大家,希望您喜欢。由于作者的英文水平和学术能力不高,需要不断提升,所以还请大家批评指正,非常欢迎大家给我留言评论,学术路上期待与您前行,加油。

前一篇介绍了英文论文模型设计(Model Design)和概述(Overview)如何撰写,并摘抄系统AI安全顶会论文的精句。这篇文章将从个人角度介绍英文论文实验评估(Evaluation)的数据集、评价指标和环境设置如何撰写,继续以系统AI安全的顶会论文为例。一方面自己英文太差,只能通过最土的办法慢慢提升,另一方面是自己的个人学习笔记,并分享出来希望大家批评和指正。希望这篇文章对您有所帮助,这些大佬是真的值得我们去学习,献上小弟的膝盖~fighting!

由于作者之前做NLP和AI,现在转安全方向,因此本文选择的论文主要为近四年篇AI安全和系统安全的四大顶会(S&P、USENIX Sec、CCS、NDSS)。同时,作者能力有限,只能结合自己的实力和实际阅读情况出发,也希望自己能不断进步,每个部分都会持续补充。可能五年十年后,也会详细分享一篇英文论文如何撰写,目前主要以学习和笔记为主。大佬还请飘过O(∩_∩)O

文章目录

前文赏析:

- [论文阅读] (01) 拿什么来拯救我的拖延症?初学者如何提升编程兴趣及LATEX入门详解

- [论文阅读] (02) SP2019-Neural Cleanse: 神经网络中的后门攻击识别与缓解

- [论文阅读] (03) 清华张超老师 - Fuzzing漏洞挖掘详细总结 GreyOne

- [论文阅读] (04) 人工智能真的安全吗?浙大团队分享AI对抗样本技术

- [论文阅读] (05) NLP知识总结及NLP论文撰写之道——Pvop老师

- [论文阅读] (06) 万字详解什么是生成对抗网络GAN?经典论文及案例普及

- [论文阅读] (07) RAID2020 Cyber Threat Intelligence Modeling Based on Heterogeneous GCN

- [论文阅读] (08) NDSS2020 UNICORN: Runtime Provenance-Based Detector for Advanced Persistent Threats

- [论文阅读] (09)S&P2019 HOLMES Real-time APT Detection through Correlation of Suspicious Information Flow

- [论文阅读] (10)基于溯源图的APT攻击检测安全顶会总结

- [论文阅读] (11)ACE算法和暗通道先验图像去雾算法(Rizzi | 何恺明老师)

- [论文阅读] (12)英文论文引言introduction如何撰写及精句摘抄——以入侵检测系统(IDS)为例

- [论文阅读] (13)英文论文模型设计(Model Design)如何撰写及精句摘抄——以入侵检测系统(IDS)为例

- [论文阅读] (14)英文论文实验评估(Evaluation)如何撰写及精句摘抄(上)——以入侵检测系统(IDS)为例

- [论文阅读] (15)英文SCI论文审稿意见及应对策略学习笔记总结

- [论文阅读] (16)Powershell恶意代码检测论文总结及抽象语法树(AST)提取

- [论文阅读] (17)CCS2019 针对PowerShell脚本的轻量级去混淆和语义感知攻击检测

- [论文阅读] (18)英文论文Model Design和Overview如何撰写及精句摘抄——以系统AI安全顶会为例

- [论文阅读] (19)英文论文Evaluation(实验数据集、指标和环境)如何描述及精句摘抄——以系统AI安全顶会为例

论文如何撰写因人而异,作者仅分享自己的观点,欢迎大家提出意见。然而,坚持阅读所研究领域最新和经典论文,这个大家应该会赞成,如果能做到相关领域文献如数家珍,就离你撰写第一篇英文论文更近一步了,甚至高质量论文。

在实验设计中,重点是如何通过实验说服审稿老师,赞同你的创新点,体现你论文的价值。好的图表能更好地表达你论文的idea,因此我们需要学习优秀论文,一个惊喜的实验更是论文成功的关键。注意,安全论文已经不再是对比PRF的阶段了,一定要让实验支撑你整个论文的框架。同时,多读多写是基操,共勉!

该部分回顾和参考周老师的博士课程内容,感谢老师的分享。典型的论文框架包括两种(The typical “anatomy” of a paper),如下所示:

第一种格式:理论研究

- Title and authors

- Abstract

- Introduction

- Related Work (可置后)

- Materials and Methods

- Results

- Acknowledgements

- References

第二种格式:系统研究

- Title and authors

- Abstract

- Introduction

- Related Work (可置后)

- System Model

- Mathematics and algorithms

- Experiments

- Acknowledgements

- References

实验评估介绍(Evaluation)

- 许多论文对他们的方法进行了实证校验

- 当你刚到一个领域时,你应该仔细检查这项工作通常是如何完成的

- 注意所使用的数据集和代码也很有帮助——因为您可能在将来自己使用它们

该部分主要是学习易莉老师书籍《学术写作原来是这样》,后面我也会分享我的想法,具体如下:

结果与方法一种是相对容易写作的部分,其内容其实就是你对收集来的数据做了什么样的分析。对于相对简单的结果(3个分析以内),按部就班地写就好。有专业文献的积累,相信难度不大。写起来比较困难的是复杂数据的结果,比如包括10个分析,图片就有七八张。这时候对结果的组织就非常重要了。老师推荐《10条简单规则》一文中推荐的 结论驱动(conclusion-driven) 方法。

个人感觉:

实验部分同样重要,但更重要是如何通过实验结果、对比实验、图表描述来支撑你的创新点,让审稿老师觉得,就应该这么做,amazing的工作。作为初学者,我们可能还不能做到非常完美的实验,但一定要让文章的实验足够详细,力争像该领域的顶级期刊或会议一样,并且能够很好的和论文主题相契合,最终文章的价值也体现出来了。

在数据处理的过程中,梳理、总结自己的主要发现,以这些发现为大纲(小标题),来组织结果的写作(而不是传统上按照自己数据处理的顺序来组织)。以作者发表论文为例,他们使用了这种方法来组织结果部分,分为四个小标题,每个小标题下列出相应的分析及结果。

- (1) Sampling optimality may increase or decrease with autistic traits in different conditions

- (2) Bimodal decision times suggest two consecutive decision processes

- (3) Sampling is controlled by cost and evidence in two separate stages

- (4) Autistic traits influence the strategic diversity of sampling decisions

如果还有其他结果不能归入任何一个结论,那就说明这个结果并不重要,没有对形成文章的结论做出什么贡献,这时候果断舍弃(或放到补充材料中)是明智的选择。

另外,同一种结果可能有不同的呈现方式,可以依据你的研究目的来采用不同的方式。我在修改学生文章时遇到比较多的一个问题是采用奇怪的方式,突出了不重要的结果。举例:

示例句子:

The two groups were similar at the 2nd, 4th, and 8th trials; They differed from each other in the remaining trials.

这句话有两个比较明显的问题:(1)相似的试次并不是重点,重点是大部分的试次是有差异的,但是这个重点没有被突出,反而仅有的三个一样的试次突出了。(2)语言的模糊,differ的使用来来的模糊性(不知道是更好还是更差)。

修改如下:

Four-year-olds outperformed 3-year-olds in most trials, except the 2nd, 4th, and 8th trials, in which they performed similarly.

对于结果的呈现,作图是特别重要的,一张好图胜过千言万语。 但我不是作图方面的专家,如果你需要这方面的指导,建议你阅读《10个简单规则,创造更优图形》,文中为怎么做出一张好图提供了非常全面而有用的指导。

该部分主要是学习易莉老师书籍《学术写作原来是这样》,后面我也会分享我的想法,具体如下:

讨论是一个非常头疼的部分。先来讲讲讨论的写法,在前面强调了从大纲开始写的好处,从大纲开始写是一种自上而下的写法,在写大纲的过程中确定主题句,然后再确定其他内容。还有一种方法是自下而上地写,就是先随心所以地写第一稿,从笔记开始写,然后对这些笔记进行梳理和归纳,提炼主题句。老师通常混合两种写法,先从零星的点进行归纳(写前言时对文献观点做笔记,写讨论时对结果的发现做笔记),之后通过梳理,整理出大纲,再从大纲开始写作。

比如我对某篇文章的讨论部分做过相关笔记,然后对这些点进行梳理和归纳,再结合前沿提出来的三个研究问题形成讨论的大纲,如下:

- (1) 总结主要发现

- (2) Distrust and deception learning in ASD

- (3) Anthropomorphic thinking of robot and distrust

- (4) Human-robot vs. interpersonal interactions

- (5) Limitations

- (6) Conclusions

在(1)到(4)段的讨论中,要先总结自己最重要的发现,不要忘记回顾前言中提出的实验预期,说明结果是否符合自己的预期。然后回顾前人研究与自己的研究发现是否一致,如果不一致,就可以讨论可能的原因(取样、实验方法的不同等)。

此外还需要注意,很多学生把讨论的重点放在了与前人研究不一致的结果和自己的局限性上,这些是需要写的,但是最重要的是突出自己研究的贡献。

讨论中最常出现的问题就是把结果里的话换个说法再说一遍。其实讨论部分给了我们一个从更高层面梳理和解读研究结果的机会。更重要的是,需要明确提出自己的研究贡献,进一步强调研究的重要性、意义以及创新性。因此,不要停留在就事论事的结果描述上。读者读完结果后,很容易产生“so what”的问题——“是的,你发现了这些,那又怎么样呢?”。

这时候,最重要的是告诉读者研究的启示(implication)——你的发现说明了什么,加深了对什么问题的理解,对未解决的问题提供了什么新的解决方法,揭示了什么新的机制。这也是影响稿件录用的最重要部分,所以一定要花最多时间和精力来写这个部分。

用前文提到的“机器人”文章的结论作为例子,说明如何总结和升华自己的结论。

Overall, our study contributes several promising preliminary findings on the potential involvement of humanoid robots in social rules training for children with ASD. Our results also shed light for the direction of future research, which should address whether social learning from robots can be generalized to a universal case (e.g., whether distrusting/deceiving the robot contributes to an equivalent effect on distrusting/deceiving a real person); a validation test would be required in future work to test whether children with ASD who manage to distrust and deceive a robot are capable of doing the same to a real person.

首先我们要清楚实验写作的目的,通过详细准确的数据集、环境、实验描述,仿佛能让别人模仿出整个实验的过程,更让读者或审稿老师信服研究方法的科学性,增加结果数据的准确性和有效性。

- 研究问题、数据集(开源 | 自制)、数据预处理、特征提取、baseline实验、对比实验、统计分析结果、实验展示(图表可视化)、实验结果说明、论证结论和方法

如果我们的实验能发现某些有趣的结论会非常棒;如果我们的论文就是新问题并有对应的解决方法(创新性强),则实验需要支撑对应的贡献或系统,说服审稿老师;如果上述都不能实现,我们尽量保证实验详细,并通过对比实验(baseline对比)来巩固我们的观点和方法。

切勿只是简单地对准确率、召回率比较,每个实验结果都应该结合研究背景和论文主旨进行说明,有开源数据集的更好,没有的数据集建议开源,重要的是说服审稿老师认可你的工作。同时,实验步骤的描述也非常重要,包括实验的图表、研究结论、简明扼要的描述(给出精读)等。

在时态方面,由于是描述已经发生的实验过程,一般用过去时态,也有现在时。大部分期刊建议用被动句描述实验过程,但是也有一些期刊鼓励用主动句,因此,在投稿前,可以在期刊主页上查看“Instructions to Authors”等投稿指导性文档来明确要求。一起加油喔~

下面结合周老师的博士英语课程,总结实验部分我们应该怎么表达。

图/表的十个关键点(10 key points)

- 说明部分要尽量把相应图表的内容表达清楚

- 图的说明一般在图的下边

- 表的说明一般在标的上边

- 表示整体数据的分布趋势的图不需太大

- 表示不同方法间细微差别的图不能太小

- 几个图并排放在一起,如果有可比性,并排图的取值范围最好一致,利于比较

- 实验结果跟baseline在绝对数值上差别不大,用列表价黑体字

- 实验结果跟baseline在绝对数值上差别较大,用柱状图/折线图视觉表现力更好

- 折线图要选择适当的颜色和图标,颜色选择要考虑黑白打印的效果

- 折线图的图标选择要有针对性:比如对比A, A+B, B+四种方法:

A和A+的图标要相对应(例如实心圆和空心圆),B和B+的图标相对应(例如实心三角形和空心三角形)

说明部分要尽量把相应图表的内容表达清楚

图的说明一般在图的下边;表的说明一般在表的上边;表示整体数据的分布趋势的图不需太大;表示不同方法间细微差别的图不能太小。

几个图并排放在一起,如果有可比性,并排图的x/y轴的取值范围最好一致,利于比

较。

实验结果跟baseline在绝对数值上差别不大,用列表加黑体字;实验结果跟baseline在绝对数值上差别较大,用柱状图/折线图视觉表现力更好。

折线图要选择适当的颜色和图标,颜色选择要考虑黑白打印的效果;折线图的图标选择要有针对性,比如对比A, A+,B, B+四种方法。

同时,模型设计整体结构和写作细节补充几点:(引用周老师博士课程,受益匪浅)

由于作者偏向于AI系统安全领域,因此会先介绍数据集、评价指标和实验环境(含Baseline),然后才是具体的对比实验和性能比较及讨论(后续博客分享),所有实验都应层层递进证明本文的贡献和Insight。下面主要以四大顶会论文为主进行介绍,重点以系统安全和AI安全领域为主。

从这些论文中,我们能学习到什么呢?具体如下:

- 如何通过实验结果、对比实验、图表描述来支撑你的创新点

- 一幅优美的顶会论文实验对比图或表格(好图胜过千言万语)

- 论文实验部分的整体框架

- 如何精准的描述实验结果,包括安全术语

- 论文实验的前后关联及转折关键词

- 深度学习与系统安全结合的论文方向实验描述

- 实验突出框架的贡献, 注意十二个字: 环环相扣、步步坚实、逻辑严密

- 写论文一定要多看别人的论文、多反思自己的论文

该部分在实验评估环节主要作为引入,通常是介绍实验模块由哪几部分组成。同时,有些论文会直接给出实验的各个小标题,这时会省略该部分。主要包括两种类型描述(个人划分,欢迎指正):

第一种方法:介绍该部分所包含的内容,通常“In this section”并按顺序介绍。

In this section, we employ four datasets and experimentally evaluate four aspects of WATSON: 1) the explicability of inferred event semantics; 2) the accuracy of behavior abstraction; 3) the overall experience and manual workload reduction in attack investigation; and 4) the performance overhead (性能开销).

Jun Zeng, et al. WATSON: Abstracting Behaviors from Audit Logs via Aggregation of Contextual Semantics. NDSS 2021.

第二种方法:结合研究背景、解决问题和本文方法及创新来描述,包括设计的实验内容。

- 作者对比发现:顶会论文更多采取这种方式引入,而其他论文采取上面的方式更多。

Previous binary analysis studies usually evaluate their approaches by designing specific experiments in an end-to-end manner, since their instruction embeddings are only for individual tasks. In this paper, we focus on evaluating different instruction embedding schemes. To this end, we have designed and implemented an extensive evaluation framework to evaluate PalmTree and the baseline approaches. Evaluations can be classified into two categories: intrinsic evaluation and extrinsic evaluation. In the remainder of this section, we first introduce our evaluation framework and experimental configurations, then report and discuss the experimental results.

Xuezixiang Li, et al. PalmTree: Learning an Assembly Language Model for Instruction Embedding, CCS21.

In this section, we prototype Whisper and evaluate its performance by using 42 real-world attacks. In particular, the experiments will answer the three questions:

- (1) If Whisper achieves higher detection accuracy than the state-of-the-art method? (Section 6.3)

- (2) If Whisper is robust to detect attacks even if an attackers try to evade the detection of Whisper by leveraging the benign traffic? (Section 6.4)

- (3) If Whisper achieves high detection throughput and low detection latency? (Section 6.5)

Chuanpu Fu, et al. Realtime Robust Malicious Traffic Detection via Frequency Domain Analysis. CCS 2021.

In this section, we evaluate our approach with the following major goals:

- Demonstrating (证明) the intrusion detection effectiveness of vNIDS. We run our virtualized NIDS and compare its detection results with those generated by Bro NIDS based on multiple real-world traffic traces (Figure 4).

- Evaluating the performance overhead of detection state sharing among instances in different scenarios: 1) without detection state sharing; 2) sharing all detection states; and 3) only sharing global detection states. The results are shown in Figure 5. The statistics of global states, local states, and forward statements are shown in Table 2.

- Demonstrating the flexibility of vNIDS regarding placement location. In particular, we quantify (量化) the communication overhead between virtualized NIDS instances across different data centers that are geographically distributed (Figure 8).

Hongda Li, et al. vNIDS: Towards Elastic Security with Safe and Efficient Virtualization of Network Intrusion Detection Systems. CCS 2018.

In this section, we present our evaluation of DEEPREFLECT. First, we outline our objectives for each evaluation experiment and list which research goals (§2.4) are achieved by the experiment. We evaluate DEEPREFLECT’s (1) reliability by running it on three real-world malware samples we compiled and compared it to a machine learning classifier, a signature-based solution, and a function similarity tool, (2) cohesiveness by tasking malware analysts to randomly sample and label functions identified in in-the-wild samples and compare how DEEPREFLECT clustered these functions together, (3) focus by computing the number of functions an analyst has to reverse engineer given an entire malware binary, (4) insight by observing different malware families sharing the same functionality and how DEEPREFLECT handles new incoming malware families, and (5) robustness by obfuscating and modifying a malware’s source code to attempt to evade DEEPREFLECT.

Evan Downing, et al. DeepReflect: Discovering Malicious Functionality through Binary Reconstruction, USENIX Sec 2021.

In this section, we evaluate Slimium on a 64-bit Ubuntu 16.04 system equipped with Intel® Xeon® E5-2658 v3 CPU (with 48 2.20 G cores) and 128 GB RAM. In particular, we assess Slimium from the following three perspectives:

- Correctness of our discovery approaches: How well does a relation vector technique discover relevant code for feature-code mapping (Section 6.1) and how well does a prompt web profiling unveil non-deterministic paths (Section 6.2)?

- Hyper-parameter exploration (探索): What would be the best hyper-parameters (thresholds) to maximize code reduction while preserving all needed features reliably (Section 6.3)?

- Reliability and practicality: Can a debloated variant work well for popular websites in practice (Section 6.4)? In particular, we have quantified the amount of code that can be removed (Section 6.4.1) from feature exploration (Section 6.4.2).

IWe then highlight security benefits along with the number of CVEs discarded accordingly (Section 6.4.3).

Chenxiong Qian, et al. Slimium: Debloating the Chromium Browser with Feature Subsetting, CCS 2020.

In this section, we evaluate DEEPBINDIFF with respect to its effectiveness and efficiency for two different diffing scenarios (场景): cross-version and cross-optimization-level. To our best knowledge, this is the first research work that comprehensively (全面地) examines the effectiveness of program-wide binary diffing tools under the cross-version setting. Furthermore, we conduct a case study to demonstrate the usefulness of DEEPBINDIFF in real-world vulnerability analysis.

Yue Duan, et al. DEEPBINDIFF: Learning Program-Wide Code Representations for Binary Diffing, NDSS 2020.

Datasets. To pre-train PalmTree and evaluate its transferability and generalizability, and evaluate baseline schemes in different downstream applications, we used different binaries from different compilers. The pre-training dataset contains different versions of Binutils4, Coreutils5, Diffutils6, and Findutils7 on x86-64 platform and compiled with Clang8 and GCC9 with different optimization levels. The whole pre-training dataset contains 3,266 binaries and 2.25 billion instructions in total. There are about 2.36 billion positive and negative sample pairs during training. To make sure that training and testing datasets do not have much code in common in extrinsic evaluations, we selected completely different testing dataset from different binary families and compiled by different compilers. Please refer to the following sections for more details about dataset settings.

Xuezixiang Li, et al. PalmTree: Learning an Assembly Language Model for Instruction Embedding, CCS21.

Constructing a good benign dataset is crucial (至关重要) to our model’s performance. If we do not provide enough diverse behaviors of benign binaries, then everything within the malware binary will appear as unfamiliar. For example, if we do not train the autoencoder on binaries which perform network activities, then any network behaviors will be highlighted.

To collect our benign dataset, we crawled CNET [4] in 2018 for Portable Executable (PE) and Microsoft Installer (MSI) files from 22 different categories as defined by CNET to ensure a diversity of types of benign files. We collected a total of 60,261 binaries. After labeling our dataset, we ran our samples through Unipacker [11], a tool to extract unpacked executables. Though not complete as compared to prior work [21, 58], the tool produces a valid executable if it was successful (i.e., the malware sample was packed using one of several techniques Unipacker is designed to unpack). Since Unipacker covers most of the popular packers used by malware [67], it is reasonable to use this tool on our dataset. By default, if Unipacker cannot unpack a file successfully,it will not produce an output. Unipacker was able to unpack 34,929 samples. However, even after unpacking we found a few samples which still seemed partially packed or not complete (e.g., missing import symbols). We further filtered PE files which did not have a valid start address and whose import table size was zero (i.e., were likely not unpacked properly). We also deduplicatedthe unpacked binaries. Uniqueness was determined by taking the SHA-256 hash value of the contents of each file. To improve the quality of our dataset, we only accepted benign samples which were classified as malicious by less than three antivirus companies (according to VirusTotal). In total, after filtering, we obtained 23,307 unique samples. The sizes of each category can be found in Table 1.

To acquire our malicious dataset, we gathered 64,245 malware PE files from VirusTotal [12] during 2018. We then ran these samples through AVClass [62] to retrieve malware family labels. Similar to the benign samples, we unpacked, deduplicated, and filtered samples. Unipacker was able to unpack 47,878 samples. In total, we were left with 36,396 unique PE files from 4,407 families (3,301 of which were singleton families – i.e., only one sample belonged to that family). The sizes of the top-10 most populous families can be found in Table 2.

After collecting our datasets, we extracted our features from each sample using BinaryNinja, an industry-standard binary disassembler, and ordered each feature vector according to its basic block’s address location in a sample’s binary.

Evan Downing, et al. DeepReflect: Discovering Malicious Functionality through Binary Reconstruction, USENIX Sec 2021.

We evaluate WATSON on four datasets: a benign dataset, a malicious dataset, a background dataset, and the DARPA TRACE dataset. The first three datasets are collected from ssh sessions on five enterprise servers running Ubuntu 16.04 (64-bit). The last dataset is collected on a network of hosts running Ubuntu 14.04 (64-bit). The audit log source is Linux Audit [9].

In the benign dataset, four users independently complete seven daily tasks, as described in Table I. Each user performs a task 150 times in 150 sessions. In total, we collect 17 (expected to be 4×7 = 28) classes of benign behaviors because different users may conduct the same operations to accomplish tasks. Note that there are user-specific artifacts, like launched commands, between each time the task is performed. For our benign dataset, there are 55,296,982 audit events, which make up 4,200 benign sessions.

In the malicious dataset, following the procedure found in previous works [2], [10], [30], [53], [57], [82], we simulate eight attacks from real-world scenarios as shown in Table II. Each attack is carefully performed ten times by two security engineers on the enterprise servers. In order to incorporate (融合) the impact of typical noisy enterprise environments [53], [57], we continuously execute extensive ordinary user behaviors and underlying system activities in parallel to the attacks. For our malicious dataset, there are 37,229,686 audit events, which make up 80 malicious sessions.

In the background dataset, we record behaviors of developers and administrators on the servers for two weeks. To ensure the correctness of evaluation, we manually analyze these sessions and only incorporate sessions without behaviors in Table I and Table II into the dataset. For our background dataset, there are 183,336,624 audit events, which make up 1,000 background sessions.

The DARPA TRACE dataset [13] is a publicly available APT attack dataset released by the TRACE team in the DARPA Transparent Computing (TC) program [4]. The dataset was derived from a network of hosts during a two-week-long red-team vs. blue-team adversarial Engagement 3 in April 2018. In the engagement, an enterprise is simulated with different security-critical services such as a web server, an SSH server, an email server, and an SMB server [63]. The red team carries out a series of nation-state and common attacks on the target hosts while simultaneously performing benign behaviors, such as ssh login, web browsing, and email checking. For the DARPA TRACE dataset, there are 726,072,596 audit events, which make up 211 graphs. Note that we analyze only events that match our rules for triple translation in Section IV.

We test WATSON’s explicability and accuracy on our first three datasets as we need the precise ground truth of the event semantics and high-level (both benign and malicious) behaviors for verification. We further explore WATSON’s efficacy in attack investigation against our malicious dataset and DARPA TRACE dataset because the ground truth of malicious behaviors related to attack cases is available to us.

In general, our experimental behaviors for abstraction are comprehensive as compared to behaviors in real-world systems. Particularly, the benign behaviors are designed based upon basic system activities [84] claimed to have drawn attention in cybersecurity study; the malicious behaviors are either selected from typical attack scenarios in previous work or generated by a red team with expertise in instrumenting and collecting data for attack investigation.

Jun Zeng, et al. WATSON: Abstracting Behaviors from Audit Logs via Aggregation of Contextual Semantics, NDSS 2021.

We evaluate TEXTSHIELD on three datasets of which two are used for toxic content detection and one is used for adversarial NMT. Each dataset is divided into three parts, i.e., 80%, 10%, 10% as training, validation and testing, respectively [26].

- Toxic Content Detection. Since there currently does not exist a benchmark dataset for Chinese toxic content detection, we used two user generated content (UGC) datasets, i.e., Abusive UGC (Abuse) and Pornographic UGC (Porn) collected from online social media (the data collection details can be found in Appendix B). Each dataset contains 10,000 toxic and 10,000 normal samples that are well annotated by Chinese native speakers. The average text length of the Abuse and Porn datasets are 42.1 and 39.6 characters, respectively. The two datasets are used for building binary classification models for abuse detection and porn detection tasks.

- Adversarial NMT. To increase the diversity of the adversarial parallel corpora and ensure that the NMT model can learn more language knowledge, we applied the Douban Movie Short Comments (DMSC) dataset released by Kaggle along with Abuse and Porn. We then generate a corpora that consists of 2 million (xadv, xori) sentence pairs for each task respectively, of which half is generated from DMSC and half is generated from the toxic datasets. The method used for generating sentence pairs is detailed in Section 4.3.

Jinfeng Li, et al. TextShield: Robust Text Classification Based on Multimodal Embedding and Neural Machine Translation, USENIX Sec 2020.

Datasets. To thoroughly evaluate the effectiveness of DEEP- BINDIFF, we utilize three popular binary sets - Coreutils [2], Diffutils [3] and Findutils [4] with a total of 113 binaries. Multiple different versions of the binaries (5 versions for Coreutils, 4 versions for Diffutils and 3 versions of Findutils) are collected with wide time spans between the oldest and newest versions (13, 15, and 7 years respectively). This setting ensures that each version has enough distinctions so that binary diffing results among them are meaningful and representative.

We then compile them using GCC v5.4 with 4 different compiler optimization levels (O0, O1, O2 and O3) in order to produce binaries equipped with different optimization techniques. This dataset is to show the effectiveness of DEEPBIN- DIFF in terms of cross-optimization-level diffing. We randomly select half of the binaries in our dataset for token embedding model training.

To demonstrate the effectiveness with C++ programs, we also collect 2 popular open-source C++ projects LSHBOX [8] and indicators [6], which contain plenty of virtual functions, from GitHub. The two projects include 4 and 6 binaries respectively. In LSHBOX, the 4 binaries are psdlsh, rbslsh, rhplsh and thlsh. And in indicators, there exist 6 binaries - blockprogressbar, multithreadedbar, progressbarsetprogress, progressbartick, progressspinner and timemeter. For each project, we select 3 major versions and compile them with the default optimization levels for testing.

Finally, we leverage two different real-world vulnerabilities in a popular crypto library OpenSSL [9] for a case study to demonstrate the usefulness of DEEPBINDIFF in practice.

Yue Duan, et al. DEEPBINDIFF: Learning Program-Wide Code Representations for Binary Diffing, NDSS 2020.

To answer the above research questions, we collect relevant datasets. The details are as follows:

-

Phishing Webpage Dataset. To collect live phishing web-pages and their target brands as ground truth, we subscribed to OpenPhish Premium Service [4] for a period of six months; this gave us 350K phishing URLs. We ran a daily crawler that, based on the OpenPhish daily feeds, not only gathered the web contents (HTML code) but also took screenshots of the webpages corresponding to the phishing URLs. This allowed us to obtain all relevant information before the URLs became obsolete. Moreover, we manually cleaned the dead webpages (i.e., those not available when we visited them) and non-phishing webpages (e.g., the webpage is not used for phishing any more and has been cleaned up, or it is a pure blank page when we accessed). In addition, we use VPN to change our IP addresses while visiting a phishing page multiple times to minimize the effect of cloaking techniques [30, 81]. We also manually verified (and sometimes corrected) the target brands for the samples. As a result, we finally collected 29,496 phishing webpages for our experimental evaluations. Note that, conventional datasets crawled from PhishTank and the free version of OpenPhish do not have phishing target brand information. Though existing works such as [36] and [80] use larger phishing datasets for phishing detection experiments (i.e., without identifying target brands), to the best of our knowledge, we collected the largest dataset for phishing identification experiments.

-

Benign Webpage Dataset. We collected 29,951 benign web-pages from the top-ranked Alexa list [1] for this experiment. Similar to phishing webpage dataset, we also keep the screenshot of each URL.

-

Labelled Webpage Screenshot Dataset. For evaluating the object detection model independently, we use the ∼30K Alexa benign webpages collected (for the benign dataset) along with their screenshots. We outsourced the task of labelling the identity logos and user inputs on the screenshots.

We publish all the above three datasets at [7] for the research community.

Yun Lin, et al. Phishpedia: A Hybrid Deep Learning Based Approach to Visually Identify Phishing Webpages, USENIX Sec 2021.

Intrinsic Evaluation. In NLP domain, intrinsic evaluation refers to the evaluations that compare the generated embeddings with human assessments [2]. Hence, for each intrinsic metric, manually organized datasets are needed. This kind of dataset could be collected either in laboratory on a limited number of examinees or through crowd-sourcing [25] by using web platforms or offline survey [2]. Unlike the evaluations in NLP domain, programming languages including assembly language (instructions) do not necessarily rely on human assessments. Instead, each opcode and operand in instructions has clear semantic meanings, which can be extracted from instruction reference manuals. Furthermore, debug information generated by different compilers and compiler options can also indicate whether two pieces of code are semantically equivalent. More specifically, we design two intrinsic evaluations: instruction outlier detection based on the knowledge of semantic meanings of opcodes and operands from instruction manuals, and basic block search by leveraging the debug information associated with source code.

Extrinsic Evaluation. Extrinsic evaluation aims to evaluate the quality of an embedding scheme along with a downstream machine learning model in an end-to-end manner [2]. So if a downstream model is more accurate when integrated with instruction embedding scheme A than the one with scheme B, then A is considered better than B. In this paper, we choose three different binary analysis tasks for extrinsic evaluation, i.e., Gemini [40] for binary code similarity detection, EKLAVYA [5] for function type signatures inference, and DeepVSA [14] for value set analysis. We obtained the original implementations of these downstream tasks for this evaluation. All of the downstream applications are implemented based on TensorFlow. Therefore we choose the first way of deploying PalmTree in extrinsic evaluations (see Section 3.4.6). We encoded all the instructions in the corresponding training and testing datasets and then fed the embeddings into downstream applications.

Xuezixiang Li, et al. PalmTree: Learning an Assembly Language Model for Instruction Embedding, CCS21.

To evaluate DEEPREFLECT’s reliability, we explore and contrast the models’ performance in localizing the malware components within binaries.

Baseline Models. To evaluate the localization capability of DEEPREFLECT’s autoencoder, we compare it to a general method and domain specific method for localizing concepts in samples: (1) SHAP, a classification model explanation tool [40], (2) CAPA [3], a signature based tool by FireEye for identifying malicious behaviors within binaries,4 and (3) FunctionSimSearch [5], a function similarity tool.

Given a trained classifier and the sample x, SHAP provides each feature x(i) in x a contribution score for the classifier’s prediction. For SHAP’s model, we trained a modified deep neural network VGG19 [64] to predict a sample’s malware family and whether the sample is benign. For this model, we could not use our features because the model would not converge. Instead, we used the classic ACFG features without the string or integer features. We call these features attributed basic block (ABB) features. We trained this model for classification (on both malicious and benign samples) and achieved a training accuracy of 90.03% and a testing accuracy of 83.91%. In addition to SHAP, we trained another autoencoder on ABB features to compare to our new features as explained in §3.2.1.

Evan Downing, et al. DeepReflect: Discovering Malicious Functionality through Binary Reconstruction, USENIX Sec 2021.

In this paper, we first evaluate the performance of our subtree-based deobfuscation, which is divided into three parts. First, we evaluate whether we can find the minimum subtrees involved in obfuscation, which can directly determine the quality of the deobfuscation. This is dependent on the classifier and thus we cross-validate the classifier with manually-labelled ground truth. Second, we verify the quality of the entire obfuscation by comparing the similarity between the deobfuscated scripts and the original scripts. In this evaluation, we modify the AST-based similarity calculation algorithm provided by [39]. Third, we evaluate the efficiency of deobfuscation by calculating the average time required to deobfuscate scripts obfuscated by different obfuscation methods.

Next, we evaluate the benefit of our deobfuscation method on PowerShell attack detection. In §2, we find that obfuscation can evade most of the existing anti-virus engine. In this section, we compare the detection results for the same PowerShell scripts before and after applying our deobfuscation method. In addition, we also evaluate the effectiveness of the semantic-based detection algorithm in Section 5.

6.1.1 PowerShell Sample Collection

To evaluate our system, we create a collection of malicious and benign, obfuscated and non-obfuscated PowerShell samples. We attempt to cover all possible download sources that can have PowerShell scripts, e.g., GitHub, security blogs, open-source PowerShell attack repositories, etc., instead of intentionally making selections among them.

-

Benign Samples: To collect benign PowerShell Scripts, we download the top 500 repositories on GitHub under PowerShell language type using Chrome add-on Web Scraper [12]. We then find out the ones with PowerShell extension ’.ps1’ and manually check them one by one to remove attacking modules. After this process, 2342 benign samples are collected in total.

-

Malicious Samples: The malicious scripts we use to evaluate detection are based on recovered scripts which consist of two parts.

– 1) 4098 unique real-world attack samples collected from security blogs and attack analysis white papers [55]. Limited by the method of data collection, the semantics of the samples are relatively simple. Most of the samples belong to the initialization or execution phase.

– 2) To enrich the collection of malicious scripts, we pick other 43 samples from 3 famous open source attack repositories, namely, PowerSploit [9], PowerShell Empire [1] and PowerShell-RAT [43]. Obfuscated Samples: In addition to the collected real world malicious samples, which are already obfuscated, we also construct obfuscated samples through the combination of obfuscation methods and non-obfuscated scripts. More specifically (具体而言), we deploy four kinds of obfuscation methods in Invoke-Obfuscation, mentioned in §2.3, namely, token-based, string-based, hex-encoding and security string-encoding on 2342 benign samples and 75 malicious. After this step, a total of 9968 obfuscated samples are generated.

6.1.2 Script Similarity Comparison

Deobfuscation can be regarded as the reverse process of obfuscation. In the ideal case, deobfuscated scripts should be exactly the same as the original ones. However, in practice, it is difficult to achieve such perfect recovery for various reasons. However, the similarity between the recovered script and the original script is still a good indicator to evaluate the overall recovery effect.

To measure the similarity of scripts, we adopt the methods of code clone detection. This problem is widely studied in the past decades [50]. Different clone granularity levels apply to different intermediate source representations. Match detection algorithms are a critical issue in the clone detection process. After a source code representation is decided, a carefully selected match detection algorithm is applied to the units of source code representation. We employ suffix tree matching based on ASTs [40]. Both the suffix tree and AST are widely used in similarity calculation. Moreover, such combination can be used to distinguish three types of clones, namely, Type 1(Exact Clones), Type 2(Renamed Clones), Type 3(Near Miss Clones), which fits well for our situation.

To this end, we parse each PowerShell script into an AST. Most of the code clone detection algorithm is line-based. However, lines wrapping is not reliable after obfuscation. We utilize subtrees instead of lines. We serialize the subtree by pre-order traversal and apply suffix tree works on sequences. Therefore, each subtree in one script is compared to each subtree in the other script. The similarity between the two subtrees is computed by the following formula:

Zhenyuan Li, et al. Effective and Light-Weight Deobfuscation and Semantic-Aware Attack Detection for PowerShell Scripts, CCS 2019.

Baselines.

We implement and compare two state-of-the-art methods with TEXTSHIELD to evaluate their robustness against the extended TextBugger. In total, the two methods are:

- (1) Pycorrector: This method was first proposed in [47] for dealing with Chinese spelling errors or glyph-based and phonetic-based word variations in user generated texts based on the n-gram language model. In our experiments, we use an online version of Pycorrector implemented in Python 5.

- (2) TextCorrector: It is a Chinese text error correction service developed by Baidu AI for correcting spelling errors, grammatical errors and knowledge errors based on language knowledge, contextual understanding and knowledge computing techniques. In our experiments, we study the efficacy of these two defenses by combining them with the common TextCNN and BiLSTM, respectively. In addition, the common TextCNN and BiLSTM are baseline models themselves.

Evaluation Metrics.

Translation Evaluation. We use three metrics, i.e, word error rate, bilingual evaluation understudy and semantic similarity to evaluate the translation performance of our adversarial NMT model from word, feature and semantics levels.

- (1) Word Error Rate (WER). It is derived from the Levenshtein distance and is a word-level metric to evaluate the performance of NMT systems [1]. It is calculated based on the sum of substitutions (S), deletions (D) and insertions (I) for transforming the reference sequence to the target sequence. Suppose that there are total N words in the reference sequence. Then, WER can be calculated by WER = (S+D+I) / N . The range of WER is [0,1] and a smaller value reflects a better translation performance.

- (2) Bilingual Evaluation Understudy (BLEU). This metric was first proposed in [38]. It evaluates the quality of translation by comparing the n-grams of the candidate sequence with the n-grams of the reference sequence and counting the number of matches. Concretely, it can be computed as

-

where pn is the modified n-grams precision (co-occurrence), wn is the weight of n-grams co-occurrence and BP is the sentence brevity penalty. The range of BLEU is [0,1) and a larger value indicates a better performance. In our experiment, we use the BLEU implementation provided in [30].

-

(3) Semantic Similarity (SS). We use this metric to evaluate the similarity between the corrected texts and reference texts from the semantic-level. Here, we use an industry-leading model SimNet developed by Baidu to calculate it, which provides the state-of-the-art performance for measuring the semantic similarity of Chinese texts [40].

Jinfeng Li, et al. TextShield: Robust Text Classification Based on Multimodal Embedding and Neural Machine Translation, USENIX Sec 2020.

With the datasets and ground truth information, we evaluate the effectiveness of DEEPBINDIFF by performing diffing between binaries across different versions and optimization levels, and comparing the results with the baseline techniques.

Evaluation Metrics. We use precision and recall metrics to measure the effectiveness of the diffing results produced by diffing tools. The matching result M from DEEPBINDIFF can be presented as a set of basic block matching pairs with a length of x as Equation 5. Similarly, the ground truth information G for the two binaries can be presented as a set of basic block matching pairs with a length of y as Equation 6.

We then introduce two subsets, Mc and Mu, which represent correct matching and unknown matching respectively. Correct match Mc = M ∩ G is the intersection of our result M and ground truth G. It gives us the correct basic block matching pairs. Unknown matching result Mu represents the basic block matching pairs in which no basic block ever appears in ground truth. Thus, we have no idea whether these matching pairs are correct. This could happen because of the conservativeness of our ground truth collection process. Consequently, M − Mu − Mc portrays the matching pairs in M that are not in Mc nor in Mu, therefore, all pairs in M − Mu − Mc are confirmed to be incorrect matching pairs. Once M and G are formally presented, the precision metric presented in Equation 7 gives the percentage of correct matching pairs among all the known pairs (correct and incorrect).

The recall metric shown in Equation 8 is produced by dividing the size of intersection of M and G with the size of G. This metric shows the percentage of ground truth pairs that are confirmed to be correctly matched.

Yue Duan, et al. DEEPBINDIFF: Learning Program-Wide Code Representations for Binary Diffing, NDSS 2020.

Baseline Schemes and PalmTree Configurations. We choose Instruction2Vec, word2vec, and Asm2Vec as baseline schemes. For fair comparison, we set the embedding dimension as 128 for each model. We performed the same normalization method as PalmTree on word2vec and Asm2Vec. We did not set any limitation on the vocabulary size of Asm2Vec and word2vec. We implemented these baseline embedding models and PalmTree using PyTorch [30]. PalmTree is based on BERT but has fewer parameters. While in BERT #Layers = 12, Head = 12 and Hidden_dimension = 768, we set #Layers = 12, Head = 8, Hidden_dimension = 128 in PalmTree, for the sake of efficiency and training costs. The ratio between the positive and negative pairs in both CWP and DUP is 1:1. Furthermore, to evaluate the contributions of three training tasks of PalmTree, we set up three configurations:

- PalmTree-M: PalmTree trained with MLM only

- PalmTree-MC: PalmTree trained with MLM and CWP

- PalmTree: PalmTree trained with MLM, CWP, and DUP

Hardware Configuration. All the experiments were conducted on a dedicated server with a Ryzen 3900X CPU@3.80GHz×12, one GTX 2080Ti GPU, 64 GB memory, and 500 GB SSD.

Xuezixiang Li, et al. PalmTree: Learning an Assembly Language Model for Instruction Embedding, CCS21.

In this section, we evaluate the effectiveness and efficiency of our approach using the collected PowerShell samples described earlier (§6.1.1). The experiment results are obtained using a PC with Intel Core i5-7400 Processor 3.5 GHz, 4 Cores, and 16 Gigabytes of memory, running Windows 10 64-bit Professional.

Zhenyuan Li, et al. Effective and Light-Weight Deobfuscation and Semantic-Aware Attack Detection for PowerShell Scripts, CCS 2019.

We measure what semantics WATSON learns for audit events both visually and quantitatively: Visually, we use t-SNE to project the embedding space into a 2D-plane giving us an intuition of the embedding distribution=; quantitatively, for each triple, we compare the training loss in the TransE model against our knowledge of event semantics and their similarities.

Embedding of system entities. Each element’s semantics in events is represented as a 64-dimensional vector, where the spatial distance between vectors encodes their semantic similarity. To visualize the distance, we apply t-SNE to project high-dimensional embedding space into a two-dimensional (2D) space while largely preserving structural information among vectors. To manage the complexity, we randomly sampled 20 sessions from the first three datasets for visualization, obtaining a scatter plot of 2,142 points. Figure 5a shows the 2D visualization of the embedding space. Points in the space are distributed in clusters, suggesting that events are indeed grouped based on some metric of similarity.

We further select eight programs (git, scp, gcc, ssh, scp, vim, vi, and wget) to investigate process element embeddings. Figure 5b shows a zoom in view of Figure 5a containing 53 elements corresponding to the eight programs. For clarity, the elements are labeled with process names and partial arguments. Note that identity information is erased during one-hot encoding and thus does not contribute to semantics inference. While most elements of the same program are clustered together, there are a few interesting cases supporting the hypothesis that the embeddings are actually semantic-based.= For example, git has a few subcommands (push, add, commit). Git push is mapped closer to scp and wget instead of git commit and git add. This agrees with the high-level behaviors where git push uploads a local file to a remote repository while git add and git commit manipulate files locally. Another interesting example involves ssh where two different clusters can be identified, but both represent ssh connection to a remote host. Upon closer inspection, we notice that these two clusters, in effect, correspond to the usage of ssh with and without X-forwarding, reflecting the difference in semantics.

In summary, WATSON learns semantics that consistently mirrors our intuitive understanding of event contexts.

Jun Zeng, et al. WATSON: Abstracting Behaviors from Audit Logs via Aggregation of Contextual Semantics, NDSS 2021.

To fairly study the performance and robustness of the baselines and TEXTSHIELD, our experiments have the following settings:

- (i) the backbone networks applied in all the models have the same architecture, and concretely, the TextCNN backbone is designed with 32 filters of size 2, 3 and 4, and the BiLSTM backbone is designed with one bidirectional layer of 128 hidden units;

- (ii) all the models have the same maximum sequence length of 50 (since the majority of the texts in our datasets are shorter than 50) and the same embedding size of 128;

- (iii) all the models are trained from scratch with the Adam optimizer by using a basic setup without any complex tricks;

- (iv) the optimal hyperparameters such as learning rate, batch size, maximum training epochs, and dropout rate are tuned for each task and each model separately.

We conducted all the experiments on a server with two Intel Xeon E5-2682 v4 CPUs running at 2.50GHz, 120 GB memory, 2 TB HDD and two Tesla P100 GPU cards.

Jinfeng Li, et al. TextShield: Robust Text Classification Based on Multimodal Embedding and Neural Machine Translation, USENIX Sec 2020.

Our experiments are performed on a moderate desktop computer running Ubuntu 18.04LTS operating system with Intel Core i7 CPU, 16GB memory and no GPU. The feature vector generation and basic block embedding generation components in DEEPBINDIFF are expected to be significantly faster if GPUs are utilized since they are built upon deep learning models.

Baseline Techniques. With the aforementioned datasets, we compare DEEPBINDIFF with two state-of-the-art baseline techniques (Asm2Vec [23] and BinDiff [10]). Note that Asm2Vec is designed only for function level similarity detection. We leverage its algorithm to generate embeddings, and use the same k-hop greedy matching algorithm to perform diffing. Therefore, we denote it as ASM2VEC+k-HOP. Also, to demonstrate the usefulness of the contextual information, we modify DEEPBINDIFF to exclude contextual information and only include semantics information for embedding generation, shown as DEEPBINDIFF-CTX. As mentioned in Section I, another state-of-the-art technique InnerEye [58] has scalability issue for binary diffing. Hence, we only compare it with DEEPBINDIFF using a set of small binaries in Coreutils. Note that we also apply the same k-hop greedy matching algorithm in InnerEye, and denote it as INNEREYE+k-HOP.

Ground Truth Collection. For the purpose of evaluation, we rely on source code level matching and debug symbol information to conservatively collect ground truth that indicates how basic blocks from two binaries should match.

Particularly, for two input binaries, we first extract source file names from the binaries and use Myers algorithm [46] to perform text based matching for the source code in order to get the line number matching. To ensure the soundness of our extracted ground truth, 1) we only collect identical lines of source code as matching but ignore the modified ones; 2) our ground truth collection conservatively removes the code statements that lead to multiple basic blocks. Therefore, although our source code matching is by no means complete, it is guaranteed to be sound. Once we have the line number mapping between the two binaries, we extract debug information to understand the mapping between line numbers and program addresses. Eventually, the ground truth is collected by examining the basic blocks of the two binaries containing program addresses that map to the matched line numbers.

Yue Duan, et al. DEEPBINDIFF: Learning Program-Wide Code Representations for Binary Diffing, NDSS 2020.

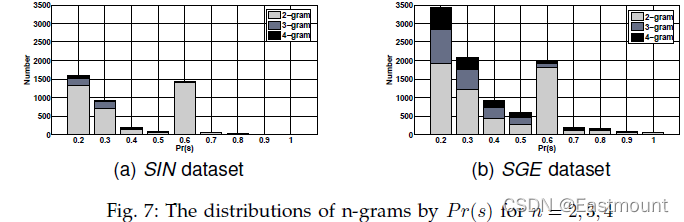

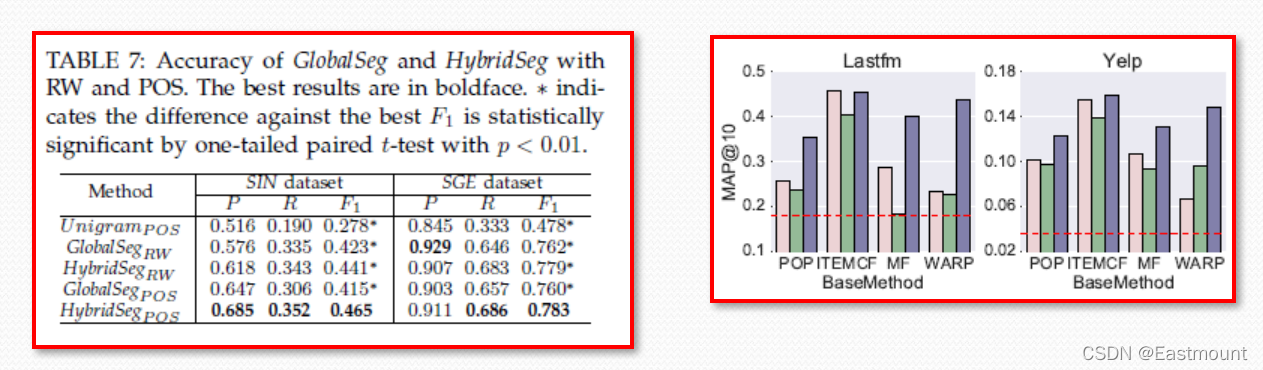

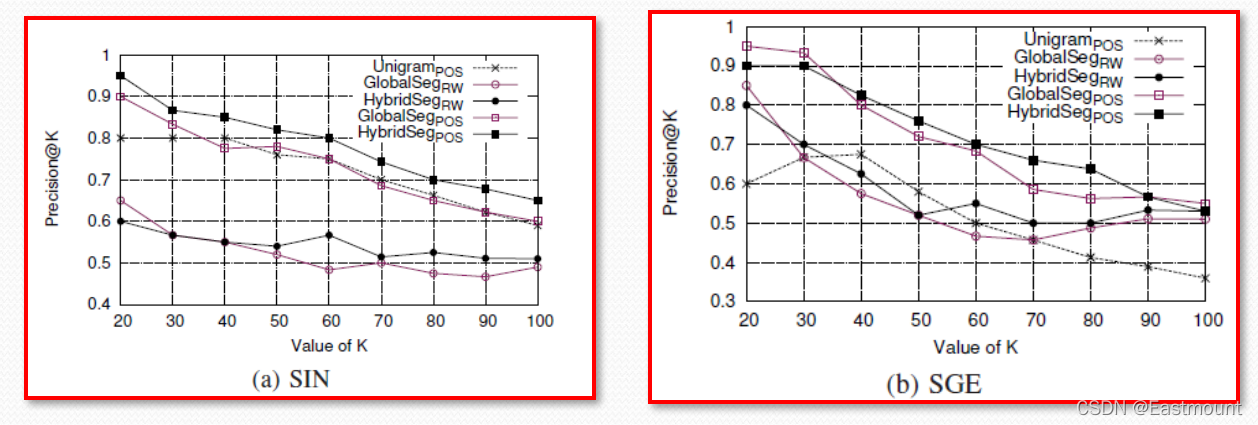

这里先让大家学习顶会论文的优美作图,具体描述将在后续的博客中详细介绍。

这篇文章就写到这里了,希望对您有所帮助。由于作者英语实在太差,论文的水平也很低,写得不好的地方还请海涵和批评。同时,也欢迎大家讨论,真心推荐原文,这些大佬真的值得我们学习,继续加油,且看且珍惜!

忙碌的三月结束,时间流逝得真快,也很充实。虽然很忙,但三月还是挤时间回答了很多博友的问题,有咨询技术和代码问题的,有找工作和方向入门的,也有复试面试的。尽管自己科研和技术很菜,今年闭关也非常忙,但总忍不住。唉,性格使然,感恩遇见,问心无愧就好。但行好事,莫问前程。继续奋斗,继续闭关,。小珞珞这嘴巴,哈哈,每日快乐源泉,晚安娜O(∩_∩)O

独在异乡为异客,每逢佳节倍思亲。思念亲人

感恩能与大家在华为云遇见!

希望能与大家一起在华为云社区共同成长。原文地址:https://blog.csdn.net/Eastmount/article/details/123923071

(By:Eastmount 2022-06-15 周五夜于武汉)

- 点赞

- 收藏

- 关注作者

评论(0)