The VGG-M Neural Network

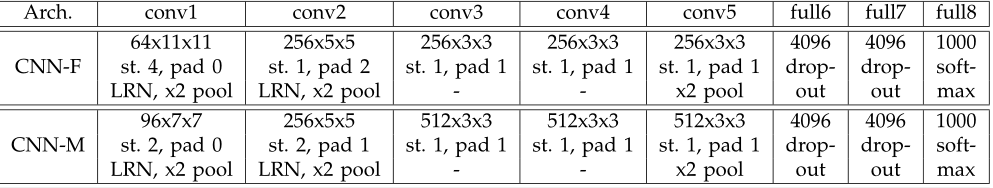

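Object-tracking papers often mention the VGG-M network, also known as CNN-M. It originates from the paper "Return of the Devil in the Details: Delving Deep into Convolutional Nets", which defines it as follows:

The architecture consists of 5 convolutional layers and 3 fully connected layers. It is characterized by the decreased stride and smaller receptive field of its first convolutional layer, which was shown to be beneficial on the ILSVRC dataset. To keep computation time reasonable, conv2 uses a larger stride (2 instead of 1). The conv4 layer also uses fewer filters than the Zeiler and Fergus network it resembles (512 instead of 1024).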

To improve robustness and generalization, cross-channel local response normalization (SpatialCrossMapLRN) is sometimes added to VGG-M. The code is as follows:

```python
import torch
import torch.nn as nn


class SpatialCrossMapLRN(nn.Module):
    """Local response normalization, across channels or within each channel."""

    def __init__(self, local_size=1, alpha=1.0, beta=0.75, k=1, ACROSS_CHANNELS=True):
        super(SpatialCrossMapLRN, self).__init__()
        self.ACROSS_CHANNELS = ACROSS_CHANNELS
        if ACROSS_CHANNELS:
            # Average over a window of neighbouring channels (the depth axis).
            self.average = nn.AvgPool3d(kernel_size=(local_size, 1, 1),
                                        stride=1,
                                        padding=(int((local_size - 1.0) / 2), 0, 0))
        else:
            # Average over a spatial window inside each channel.
            self.average = nn.AvgPool2d(kernel_size=local_size,
                                        stride=1,
                                        padding=int((local_size - 1.0) / 2))
        self.alpha = alpha
        self.beta = beta
        self.k = k

    def forward(self, x):
        if self.ACROSS_CHANNELS:
            # Add a dummy dimension so the channel axis becomes the pooled depth axis.
            div = x.pow(2).unsqueeze(1)
            div = self.average(div).squeeze(1)
            div = div.mul(self.alpha).add(self.k).pow(self.beta)
        else:
            div = x.pow(2)
            div = self.average(div)
            div = div.mul(self.alpha).add(self.k).pow(self.beta)
        x = x.div(div)
        return x
```
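To make the cross-channel branch easier to follow, here is a minimal pure-Python sketch of the same arithmetic at a single spatial position: each channel value is divided by (k + alpha * mean of squared neighbours) raised to beta. The function name lrn_across_channels is hypothetical, and the sketch assumes an odd local_size and that zero-padded neighbours count toward the average (the default behaviour of PyTorch average pooling).

```python
def lrn_across_channels(values, local_size=5, alpha=0.0005, beta=0.75, k=2.0):
    """Normalize the channel values found at one spatial position.

    Hypothetical helper for illustration only; assumes an odd local_size.
    """
    pad = (local_size - 1) // 2
    squares = [v * v for v in values]
    out = []
    for c, v in enumerate(values):
        lo = max(0, c - pad)
        hi = min(len(values), c + pad + 1)
        # Channels outside the valid range contribute zero, but the divisor
        # stays local_size, as with zero padding in average pooling.
        mean_sq = sum(squares[lo:hi]) / local_size
        out.append(v / (k + alpha * mean_sq) ** beta)
    return out


# Small worked case with easy numbers: window of 3, alpha = beta = k = 1.
result = lrn_across_channels([1.0, 2.0, 3.0], local_size=3, alpha=1.0, beta=1.0, k=1.0)
print(result[0], result[2])
```

With the values used in the network (local_size=5, alpha=0.0005, k=2), the denominator stays close to 2 ** 0.75 unless neighbouring activations are large, so the layer mostly damps strong responses.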

```python
class VGGM(nn.Module):

    def __init__(self, num_classes=1000):
        super(VGGM, self).__init__()
        self.num_classes = num_classes
        self.features = nn.Sequential(
            nn.Conv2d(3, 96, (7, 7), (2, 2)),  # conv1
            nn.ReLU(),
            SpatialCrossMapLRN(5, 0.0005, 0.75, 2),
            nn.MaxPool2d((3, 3), (2, 2), (0, 0), ceil_mode=True),
            nn.Conv2d(96, 256, (5, 5), (2, 2), (1, 1)),  # conv2
            nn.ReLU(),
            SpatialCrossMapLRN(5, 0.0005, 0.75, 2),
            nn.MaxPool2d((3, 3), (2, 2), (0, 0), ceil_mode=True),
            nn.Conv2d(256, 512, (3, 3), (1, 1), (1, 1)),  # conv3
            nn.ReLU(),
            nn.Conv2d(512, 512, (3, 3), (1, 1), (1, 1)),  # conv4
            nn.ReLU(),
            nn.Conv2d(512, 512, (3, 3), (1, 1), (1, 1)),  # conv5
            nn.ReLU(),
            nn.MaxPool2d((3, 3), (2, 2), (0, 0), ceil_mode=True)
        )
        self.classifier = nn.Sequential(
            nn.Linear(18432, 4096),
            nn.ReLU(),
            nn.Dropout(0.5),
            nn.Linear(4096, 4096),
            nn.ReLU(),
            nn.Dropout(0.5),
            nn.Linear(4096, num_classes)
        )

    def forward(self, x):
        x = self.features(x)
        x = x.view(x.size(0), -1)
        x = self.classifier(x)
        return x
```
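The first fully connected layer expects 18432 inputs, which follows from the kernel sizes, strides and paddings of the feature extractor. The sketch below traces the spatial size through those layers using the standard output-size formulas. The post does not state the input resolution, so the 221 used here is an assumption (224 gives the same result), and the helper names are hypothetical.

```python
import math


def conv_out(size, kernel, stride, padding=0):
    """Output side length of a convolution (floor rounding)."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out_ceil(size, kernel, stride):
    """Output side length of an unpadded max pool with ceil_mode=True."""
    out = math.ceil((size - kernel) / stride) + 1
    # A last window that would start past the input is dropped.
    if (out - 1) * stride >= size:
        out -= 1
    return out


def vggm_feature_size(size):
    """Trace the side length through the VGGM feature layers."""
    size = conv_out(size, 7, 2)         # conv1: 7x7, stride 2
    size = pool_out_ceil(size, 3, 2)
    size = conv_out(size, 5, 2, 1)      # conv2: 5x5, stride 2, padding 1
    size = pool_out_ceil(size, 3, 2)
    for _ in range(3):                  # conv3 to conv5: 3x3, stride 1, padding 1
        size = conv_out(size, 3, 1, 1)
    size = pool_out_ceil(size, 3, 2)
    return size


side = vggm_feature_size(221)           # assumed input resolution
print(side, 512 * side * side)          # prints: 6 18432
```

The final feature map is 6x6 with 512 channels, so flattening it yields 512 * 6 * 6 = 18432 values, matching nn.Linear(18432, 4096).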

```python
def vggm(num_classes=1000, pretrained='imagenet'):
    """Build VGG-M, optionally loading pretrained weights from a local file."""
    if pretrained:
        # pretrained_settings is a module-level dict that this post does not show;
        # it must be defined before this function is called with pretrained set.
        settings = pretrained_settings['vggm'][pretrained]
        assert num_classes == settings['num_classes'], \
            "num_classes should be {}, but is {}".format(settings['num_classes'], num_classes)

        model = VGGM(num_classes=num_classes)
        # Load the checkpoint onto the CPU from a hard-coded relative path.
        model.load_state_dict(torch.load('../vggm.pth', map_location='cpu'))

        # Attach the preprocessing metadata to the model.
        model.input_space = settings['input_space']
        model.input_size = settings['input_size']
        model.input_range = settings['input_range']
        model.mean = settings['mean']
        model.std = settings['std']
    else:
        model = VGGM(num_classes=num_classes)
    return model
```
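The vggm factory reads a pretrained_settings dictionary that is not included in the post. As a rough sketch of the structure that lookup expects, the example below uses the same keys the factory accesses; every value is a placeholder chosen for illustration, not the real pretrained configuration, and check_num_classes is a hypothetical helper that mirrors the assertion.

```python
# Placeholder values only: the original post does not list the real settings.
pretrained_settings = {
    'vggm': {
        'imagenet': {
            'input_space': 'BGR',          # assumed channel order
            'input_size': [3, 221, 221],   # assumed input resolution
            'input_range': [0, 255],       # assumed pixel range
            'mean': [0.0, 0.0, 0.0],       # placeholder statistics
            'std': [1.0, 1.0, 1.0],        # placeholder statistics
            'num_classes': 1000,
        }
    }
}


def check_num_classes(num_classes, pretrained='imagenet'):
    """Return the settings entry, or raise if the class count does not match."""
    settings = pretrained_settings['vggm'][pretrained]
    if num_classes != settings['num_classes']:
        raise ValueError(
            "num_classes should be {}, but is {}".format(
                settings['num_classes'], num_classes))
    return settings


settings = check_num_classes(1000)
print(sorted(settings))
```

Raising ValueError instead of using assert is a design choice in this sketch: assertions are skipped when Python runs with -O, while an explicit exception always fires.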

Source: blog.csdn.net. Author: 小小谢先生. Copyright belongs to the original author; please contact the author before reposting.

Original link: blog.csdn.net/xiewenrui1996/article/details/107253152
