2022CUDA夏季训练营Day4实践之统一内存

2022CUDA夏季训练营Day1实践 https://bbs.huaweicloud.com/blogs/364478

2022CUDA夏季训练营Day2实践 https://bbs.huaweicloud.com/blogs/364479

2022CUDA夏季训练营Day3实践 https://bbs.huaweicloud.com/blogs/364480

今天是第四天,主题是统一内存、原子操作等。

(一)统一内存

从前几天的矩阵乘的代码中可以看出,要写好一个CUDA的代码,需要分配HOST内存(malloc或cudaMallocHost),需要分配DEVICE内存(cudaMalloc),需要将HOST内存数据复制到DEVICE(cudaMemcpy),需要完成GPU核函数的调用,需要把核函数的调用结果在复制回HOST(cudaMemcpy),还需要对前面的各种内存做释放工作(free,cudaFreeHost,cudaFree)。

这些工作,虽然是套路,显然还是太繁琐了。

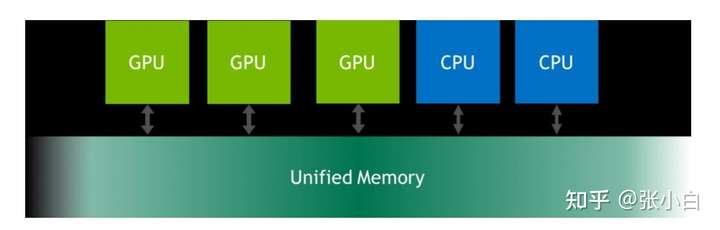

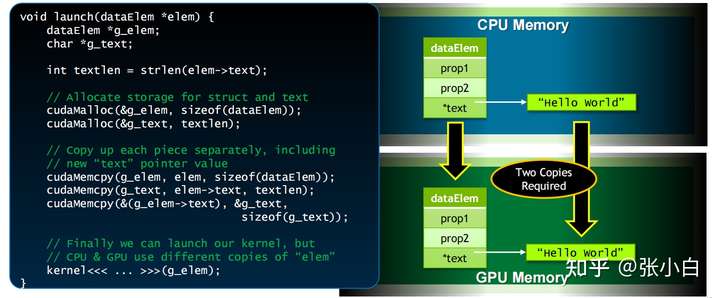

于是,聪明的Nvidia在CUDA 6.0以上的版本提出了一个叫做Unified Memory(统一内存)的概念。它把GPU内存、CPU内存在编码层面屏蔽起来:

它是可以从系统的任何CPU、GPU访问的单个内存地址空间。它允许应用程序分配可以从CPUs或GPUs上允许的代码读取或者写入数据。

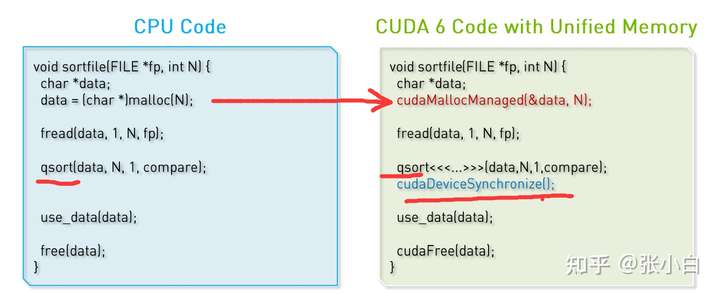

具体的方式如下所示:

它把原来CPU上的malloc改为cudaMallocManaged,并且分配好的内存地址可以直接被GPU的核函数(图中的 qsort)使用(还记得原来的代码需要先cudaMallocHost/malloc,在cudaMemcpy吗?这里统统不要了。

统一内存除了上面使用的 cudaMallocManaged函数来定义变量以外,还可以使用 __managed__ 标识符来表示这是一块统一内存。(这个前面可能还需要再加上 __device__ 标识符供 核函数使用。

统一内存使用的时候要借助于 cudaDeviceSynchronize() 来确保CPU和GPU同步。

统一内存不显式的区分HOST还是DEVICE的memory,它简化了代码,增强了代码的通用性。

统一内存只能在HOST申请。

这里面有几个误区需要澄清下:

(1)张小白原来以为,只有 Nvidia Jetson Orin那种显存和内存合二为一的设备才有统一内存的概念。但其实并不是——满足 SM架构大于3.0(Kepler架构以上)都可以使用统一内存的方式来编程。逻辑上任何GPU卡或者设备都可以使用统一内存,但是从效果上来看,只有真正的融合为一体的设备(如Jetson AGX Orin),才有最好的统一内存的效果。

(2)对于矩阵乘的代码而言,统一内存相当于对Global Memory的一个等效版本,而共享内存则是对SM内部的一种速度优化方式。两者是无关的。也就是说,你在使用统一内存的代码中可以同时使用共享内存。

(2)使用了 __managed__ 标识符或 cudaMallocManaged 之后,确实代码中不需要 cudaMalloc,cudaMemcpy这些代码了。但是系统底层其实还会根据情况,决定自己是否需要执行相关的GPU内存分配和 HOST和DEVICE内存的互相拷贝的动作。

举个例子,对于张小白的Nvidia Quardo P1000的显卡而言,HOST内存在自己的笔记本内存上(大概有64G),DEVICE内存在GPU显卡(大概有4G)。在这样的环境运行代码,系统仍然会做 申请HOST内存,申请DEVICE内存,HOST内存与DEVICE内存复制等动作。

但是对于张小白新购置的了不起的Nvidia AGX Orin而言,HOST内存就是DEVICE内存(大概有32G)。两者不仅仅叫做统一内存,其实还叫做同一内存(张小白自创的)。也就是说,ARM CPU和Nvidia GPU共享一个物理内存。具体的说明可参见:https://zhuanlan.zhihu.com/p/486130961

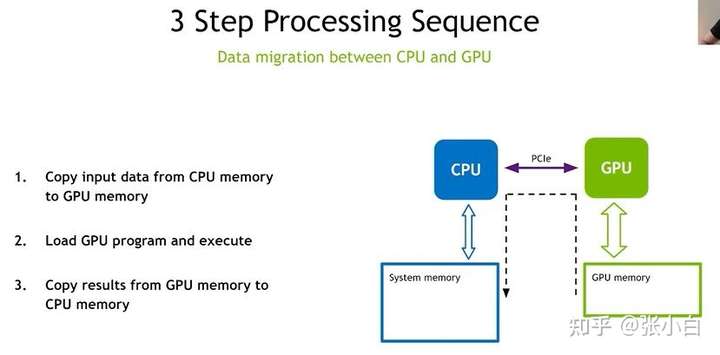

同一内存最大的好处就是:下面典型的三个动作,1、3都可以省略了:

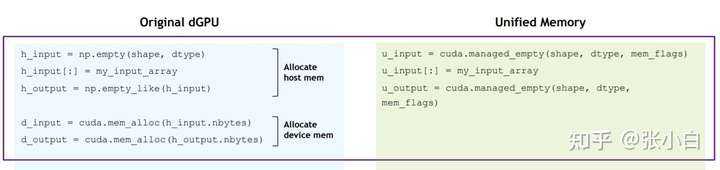

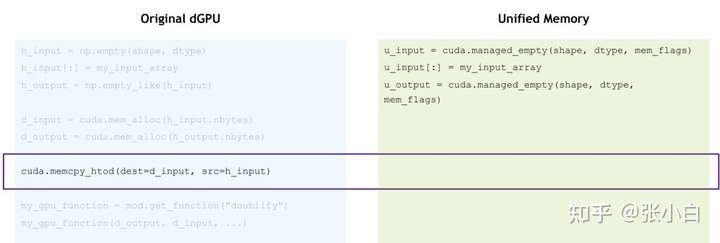

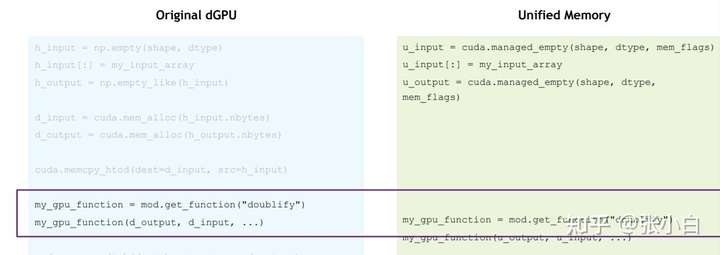

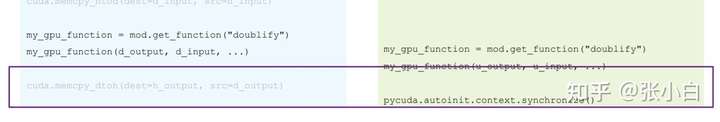

所以典型的代码就从左边的模式变成了右边的模式:

(1)定义变量:仅需要定义unified memory的变量。节省了空间。

(2)HOST->DEVICE:步骤省略

(3)执行核函数:跟原来一样

(4)DEVICE->HOST:步骤省略

(5)显式同步:只是统一内存比原来的方式多一个CPU等待GPU完成的动作。

注:上述图片(含代码)来自于上面链接中的文章。

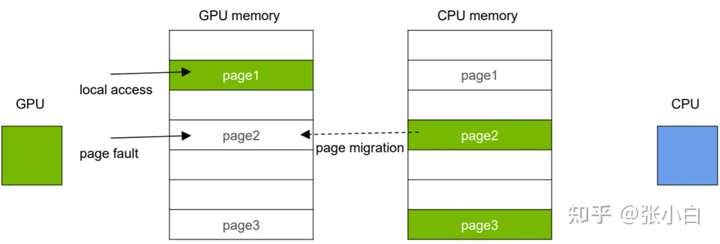

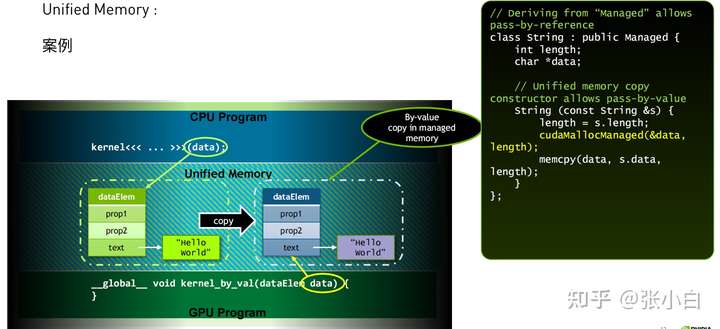

那么,统一内存到底是怎么实现的呢?这里借助了下图的做法:

CUDA在现有内存池的结构上增加了一个 统一内存系统。开发人员可以直接访问任何内存或者显存资源。

当CUDA发现需要访问GPU内存时,如果一开始定义在HOST侧,并且对其进行了初始化,CUDA会自动执行数据拷贝,所以,仍然会受制于PCI-E的带宽和延迟。

我们可以看到在这个情况下,代码和运行时变量前后的变迁:

好了,概念好像整理得差不多了。下面开始实战:

我们把昨天的矩阵乘的代码(包含共享内存优化部分)拿过来,然后看看该怎么优化。

原来的代码是这样的:

matrix_mul.cuh

#pragma once

#include <stdio.h>

#define CHECK(call) \

do \

{ \

const cudaError_t error_code = call; \

if (error_code != cudaSuccess) \

{ \

printf("CUDA Error:\n"); \

printf(" File: %s\n", __FILE__); \

printf(" Line: %d\n", __LINE__); \

printf(" Error code: %d\n", error_code); \

printf(" Error text: %s\n", \

cudaGetErrorString(error_code)); \

exit(1); \

} \

} while (0)matrix_mul_old.cu

#include <stdio.h>

#include <math.h>

#include "error.cuh"

#include "matrix_mul.cuh"

#define BLOCK_SIZE 32

__global__ void gpu_matrix_mult(int *a,int *b, int *c, int m, int n, int k)

{

int row = blockIdx.y * blockDim.y + threadIdx.y;

int col = blockIdx.x * blockDim.x + threadIdx.x;

int sum = 0;

if( col < k && row < m)

{

for(int i = 0; i < n; i++)

{

sum += a[row * n + i] * b[i * k + col];

}

c[row * k + col] = sum;

}

}

__global__ void gpu_matrix_mult_shared(int *d_a, int *d_b, int *d_result, int m, int n, int k)

{

__shared__ int tile_a[BLOCK_SIZE][BLOCK_SIZE];

__shared__ int tile_b[BLOCK_SIZE][BLOCK_SIZE];

int row = blockIdx.y * BLOCK_SIZE + threadIdx.y;

int col = blockIdx.x * BLOCK_SIZE + threadIdx.x;

int tmp = 0;

int idx;

for (int sub = 0; sub < gridDim.x; ++sub)

{

idx = row * n + sub * BLOCK_SIZE + threadIdx.x;

tile_a[threadIdx.y][threadIdx.x] = row<n && (sub * BLOCK_SIZE + threadIdx.x)<n? d_a[idx]:0;

idx = (sub * BLOCK_SIZE + threadIdx.y) * n + col;

tile_b[threadIdx.y][threadIdx.x] = col<n && (sub * BLOCK_SIZE + threadIdx.y)<n? d_b[idx]:0;

__syncthreads();

for (int k = 0; k < BLOCK_SIZE; ++k)

{

tmp += tile_a[threadIdx.y][k] * tile_b[k][threadIdx.x];

}

__syncthreads();

}

if(row < n && col < n)

{

d_result[row * n + col] = tmp;

}

}

void cpu_matrix_mult(int *h_a, int *h_b, int *h_result, int m, int n, int k) {

for (int i = 0; i < m; ++i)

{

for (int j = 0; j < k; ++j)

{

int tmp = 0.0;

for (int h = 0; h < n; ++h)

{

tmp += h_a[i * n + h] * h_b[h * k + j];

}

h_result[i * k + j] = tmp;

}

}

}

int main(int argc, char const *argv[])

{

int m=100;

int n=100;

int k=100;

//声明Event

cudaEvent_t start, stop, stop2, stop3 , stop4 ;

//创建Event

CHECK(cudaEventCreate(&start));

CHECK(cudaEventCreate(&stop));

CHECK(cudaEventCreate(&stop2));

int *h_a, *h_b, *h_c, *h_cc;

CHECK(cudaMallocHost((void **) &h_a, sizeof(int)*m*n));

CHECK(cudaMallocHost((void **) &h_b, sizeof(int)*n*k));

CHECK(cudaMallocHost((void **) &h_c, sizeof(int)*m*k));

CHECK(cudaMallocHost((void **) &h_cc, sizeof(int)*m*k));

for (int i = 0; i < m; ++i) {

for (int j = 0; j < n; ++j) {

h_a[i * n + j] = rand() % 1024;

}

}

for (int i = 0; i < n; ++i) {

for (int j = 0; j < k; ++j) {

h_b[i * k + j] = rand() % 1024;

}

}

int *d_a, *d_b, *d_c;

CHECK(cudaMalloc((void **) &d_a, sizeof(int)*m*n));

CHECK(cudaMalloc((void **) &d_b, sizeof(int)*n*k));

CHECK(cudaMalloc((void **) &d_c, sizeof(int)*m*k));

// copy matrix A and B from host to device memory

CHECK(cudaMemcpy(d_a, h_a, sizeof(int)*m*n, cudaMemcpyHostToDevice));

CHECK(cudaMemcpy(d_b, h_b, sizeof(int)*n*k, cudaMemcpyHostToDevice));

unsigned int grid_rows = (m + BLOCK_SIZE - 1) / BLOCK_SIZE;

unsigned int grid_cols = (k + BLOCK_SIZE - 1) / BLOCK_SIZE;

dim3 dimGrid(grid_cols, grid_rows);

dim3 dimBlock(BLOCK_SIZE, BLOCK_SIZE);

//开始start Event

cudaEventRecord(start);

//非阻塞模式

cudaEventQuery(start);

//gpu_matrix_mult<<<dimGrid, dimBlock>>>(d_a, d_b, d_c, m, n, k);

gpu_matrix_mult_shared<<<dimGrid, dimBlock>>>(d_a, d_b, d_c, m, n, k);

//开始stop Event

cudaEventRecord(stop);

//由于要等待核函数执行完毕,所以选择阻塞模式

cudaEventSynchronize(stop);

//计算时间 stop-start

float elapsed_time;

CHECK(cudaEventElapsedTime(&elapsed_time, start, stop));

printf("start-》stop:Time = %g ms.\n", elapsed_time);

CHECK(cudaMemcpy(h_c, d_c, (sizeof(int)*m*k), cudaMemcpyDeviceToHost));

//cudaThreadSynchronize();

//开始stop2 Event

CHECK(cudaEventRecord(stop2));

//非阻塞模式

//CHECK(cudaEventSynchronize(stop2));

cudaEventQuery(stop2);

//计算时间 stop-stop2

float elapsed_time2;

cudaEventElapsedTime(&elapsed_time2, stop, stop2);

printf("stop-》stop2:Time = %g ms.\n", elapsed_time2);

//销毁Event

CHECK(cudaEventDestroy(start));

CHECK(cudaEventDestroy(stop));

CHECK(cudaEventDestroy(stop2));

//CPU函数计算

cpu_matrix_mult(h_a, h_b, h_cc, m, n, k);

int ok = 1;

for (int i = 0; i < m; ++i)

{

for (int j = 0; j < k; ++j)

{

if(fabs(h_cc[i*k + j] - h_c[i*k + j])>(1.0e-10))

{

ok = 0;

}

}

}

if(ok)

{

printf("Pass!!!\n");

}

else

{

printf("Error!!!\n");

}

// free memory

cudaFree(d_a);

cudaFree(d_b);

cudaFree(d_c);

cudaFreeHost(h_a);

cudaFreeHost(h_b);

cudaFreeHost(h_c);

return 0;

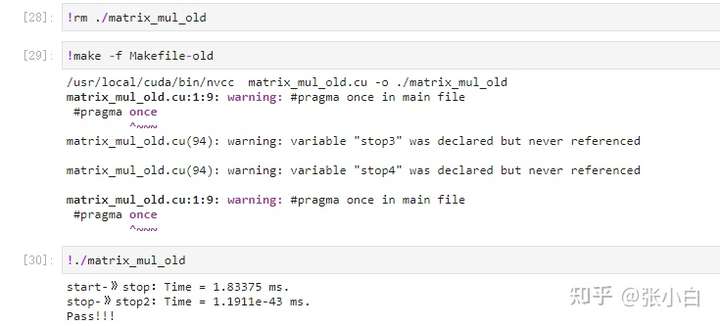

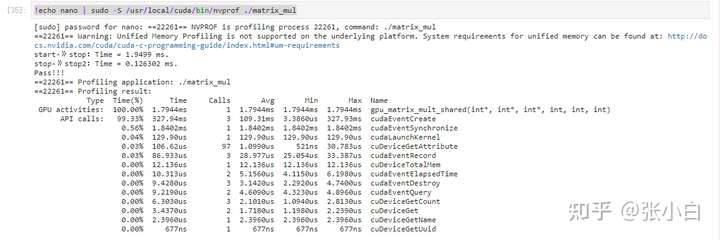

}先执行一下:

没啥问题。

我们来分析一下:

上面的代码用到了 h_a, h_b, h_c, h_cc 4个HOST内存,还用到了 d_a, d_b, d_c 三个DEVICE内存。

其中,abc是对应的。而cc是放CPU运算结果专用的。其实我们可以把h_cc直接改为malloc的内存就行了。但是为了好看,也可以将这4个HOST内存都改为统一内存。

我们将统一内存起名为 u_a, u_b, u_c, u_cc吧!

魔改开始:

将代码中 h_a->u_a,h_b->u_b,h_c->u_c,h_cc->u_cc,其他变量做相应的适当修改。

matrix_mul.cu

#include <stdio.h>

#include <math.h>

#include "error.cuh"

#include "matrix_mul.cuh"

#define BLOCK_SIZE 32

__managed__ int u_a[100*100];

__managed__ int u_b[100*100];

__managed__ int u_c[100*100];

__managed__ int u_cc[100*100];

__global__ void gpu_matrix_mult(int *a,int *b, int *c, int m, int n, int k)

{

int row = blockIdx.y * blockDim.y + threadIdx.y;

int col = blockIdx.x * blockDim.x + threadIdx.x;

int sum = 0;

if( col < k && row < m)

{

for(int i = 0; i < n; i++)

{

sum += u_a[row * n + i] * u_b[i * k + col];

}

u_c[row * k + col] = sum;

}

}

__global__ void gpu_matrix_mult_shared(int *u_a, int *u_b, int *u_result, int m, int n, int k)

{

__shared__ int tile_a[BLOCK_SIZE][BLOCK_SIZE];

__shared__ int tile_b[BLOCK_SIZE][BLOCK_SIZE];

int row = blockIdx.y * BLOCK_SIZE + threadIdx.y;

int col = blockIdx.x * BLOCK_SIZE + threadIdx.x;

int tmp = 0;

int idx;

for (int sub = 0; sub < gridDim.x; ++sub)

{

idx = row * n + sub * BLOCK_SIZE + threadIdx.x;

tile_a[threadIdx.y][threadIdx.x] = row<n && (sub * BLOCK_SIZE + threadIdx.x)<n? u_a[idx]:0;

idx = (sub * BLOCK_SIZE + threadIdx.y) * n + col;

tile_b[threadIdx.y][threadIdx.x] = col<n && (sub * BLOCK_SIZE + threadIdx.y)<n? u_b[idx]:0;

__syncthreads();

for (int k = 0; k < BLOCK_SIZE; ++k)

{

tmp += tile_a[threadIdx.y][k] * tile_b[k][threadIdx.x];

}

__syncthreads();

}

if(row < n && col < n)

{

u_result[row * n + col] = tmp;

}

}

void cpu_matrix_mult(int *u_a, int *u_b, int *u_result, int m, int n, int k) {

for (int i = 0; i < m; ++i)

{

for (int j = 0; j < k; ++j)

{

int tmp = 0.0;

for (int h = 0; h < n; ++h)

{

tmp += u_a[i * n + h] * u_b[h * k + j];

}

u_result[i * k + j] = tmp;

}

}

}

int main(int argc, char const *argv[])

{

int m=100;

int n=100;

int k=100;

//声明Event

cudaEvent_t start, stop, stop2, stop3 , stop4 ;

//创建Event

CHECK(cudaEventCreate(&start));

CHECK(cudaEventCreate(&stop));

CHECK(cudaEventCreate(&stop2));

//int *h_a, *h_b, *h_c, *h_cc;

//CHECK(cudaMallocHost((void **) &h_a, sizeof(int)*m*n));

//CHECK(cudaMallocHost((void **) &h_b, sizeof(int)*n*k));

//CHECK(cudaMallocHost((void **) &h_c, sizeof(int)*m*k));

//CHECK(cudaMallocHost((void **) &h_cc, sizeof(int)*m*k));

for (int i = 0; i < m; ++i) {

for (int j = 0; j < n; ++j) {

u_a[i * n + j] = rand() % 1024;

}

}

for (int i = 0; i < n; ++i) {

for (int j = 0; j < k; ++j) {

u_b[i * k + j] = rand() % 1024;

}

}

//int *d_a, *d_b, *d_c;

//CHECK(cudaMalloc((void **) &d_a, sizeof(int)*m*n));

//CHECK(cudaMalloc((void **) &d_b, sizeof(int)*n*k));

//CHECK(cudaMalloc((void **) &d_c, sizeof(int)*m*k));

// copy matrix A and B from host to device memory

//CHECK(cudaMemcpy(d_a, h_a, sizeof(int)*m*n, cudaMemcpyHostToDevice));

//CHECK(cudaMemcpy(d_b, h_b, sizeof(int)*n*k, cudaMemcpyHostToDevice));

unsigned int grid_rows = (m + BLOCK_SIZE - 1) / BLOCK_SIZE;

unsigned int grid_cols = (k + BLOCK_SIZE - 1) / BLOCK_SIZE;

dim3 dimGrid(grid_cols, grid_rows);

dim3 dimBlock(BLOCK_SIZE, BLOCK_SIZE);

//开始start Event

cudaEventRecord(start);

//非阻塞模式

cudaEventQuery(start);

//gpu_matrix_mult<<<dimGrid, dimBlock>>>(d_a, d_b, d_c, m, n, k);

gpu_matrix_mult_shared<<<dimGrid, dimBlock>>>(u_a, u_b, u_c, m, n, k);

//开始stop Event

cudaEventRecord(stop);

//由于要等待核函数执行完毕,所以选择阻塞模式

cudaEventSynchronize(stop);

//计算时间 stop-start

float elapsed_time;

CHECK(cudaEventElapsedTime(&elapsed_time, start, stop));

printf("start-》stop:Time = %g ms.\n", elapsed_time);

//CHECK(cudaMemcpy(h_c, d_c, (sizeof(int)*m*k), cudaMemcpyDeviceToHost));

//cudaThreadSynchronize();

//开始stop2 Event

CHECK(cudaEventRecord(stop2));

//非阻塞模式

//CHECK(cudaEventSynchronize(stop2));

cudaEventQuery(stop2);

//计算时间 stop-stop2

float elapsed_time2;

cudaEventElapsedTime(&elapsed_time2, stop, stop2);

printf("stop-》stop2:Time = %g ms.\n", elapsed_time2);

//销毁Event

CHECK(cudaEventDestroy(start));

CHECK(cudaEventDestroy(stop));

CHECK(cudaEventDestroy(stop2));

//CPU函数计算

cpu_matrix_mult(u_a, u_b, u_cc, m, n, k);

int ok = 1;

for (int i = 0; i < m; ++i)

{

for (int j = 0; j < k; ++j)

{

if(fabs(u_cc[i*k + j] - u_c[i*k + j])>(1.0e-10))

{

ok = 0;

}

}

}

if(ok)

{

printf("Pass!!!\n");

}

else

{

printf("Error!!!\n");

}

// free memory

//cudaFree(d_a);

//cudaFree(d_b);

//cudaFree(d_c);

//cudaFreeHost(h_a);

//cudaFreeHost(h_b);

//cudaFreeHost(h_c);

return 0;

}执行一下:

额,好像速度没啥变化。

查看下性能:

是不是矩阵只有100X100,太小了看不出来?

不妨将其改为1000X1000的两个矩阵乘看看(当然,这个时候需要注释掉CPU计算的部分)。

改为 1000:

#include <stdio.h>

#include <math.h>

#include "error.cuh"

#include "matrix_mul.cuh"

#define BLOCK_SIZE 32

__global__ void gpu_matrix_mult(int *a,int *b, int *c, int m, int n, int k)

{

int row = blockIdx.y * blockDim.y + threadIdx.y;

int col = blockIdx.x * blockDim.x + threadIdx.x;

int sum = 0;

if( col < k && row < m)

{

for(int i = 0; i < n; i++)

{

sum += a[row * n + i] * b[i * k + col];

}

c[row * k + col] = sum;

}

}

__global__ void gpu_matrix_mult_shared(int *d_a, int *d_b, int *d_result, int m, int n, int k)

{

__shared__ int tile_a[BLOCK_SIZE][BLOCK_SIZE];

__shared__ int tile_b[BLOCK_SIZE][BLOCK_SIZE];

int row = blockIdx.y * BLOCK_SIZE + threadIdx.y;

int col = blockIdx.x * BLOCK_SIZE + threadIdx.x;

int tmp = 0;

int idx;

for (int sub = 0; sub < gridDim.x; ++sub)

{

idx = row * n + sub * BLOCK_SIZE + threadIdx.x;

tile_a[threadIdx.y][threadIdx.x] = row<n && (sub * BLOCK_SIZE + threadIdx.x)<n? d_a[idx]:0;

idx = (sub * BLOCK_SIZE + threadIdx.y) * n + col;

tile_b[threadIdx.y][threadIdx.x] = col<n && (sub * BLOCK_SIZE + threadIdx.y)<n? d_b[idx]:0;

__syncthreads();

for (int k = 0; k < BLOCK_SIZE; ++k)

{

tmp += tile_a[threadIdx.y][k] * tile_b[k][threadIdx.x];

}

__syncthreads();

}

if(row < n && col < n)

{

d_result[row * n + col] = tmp;

}

}

void cpu_matrix_mult(int *h_a, int *h_b, int *h_result, int m, int n, int k) {

for (int i = 0; i < m; ++i)

{

for (int j = 0; j < k; ++j)

{

int tmp = 0.0;

for (int h = 0; h < n; ++h)

{

tmp += h_a[i * n + h] * h_b[h * k + j];

}

h_result[i * k + j] = tmp;

}

}

}

int main(int argc, char const *argv[])

{

int m=1000;

int n=1000;

int k=1000;

//声明Event

cudaEvent_t start, stop, stop2, stop3 , stop4 ;

//创建Event

CHECK(cudaEventCreate(&start));

CHECK(cudaEventCreate(&stop));

CHECK(cudaEventCreate(&stop2));

int *h_a, *h_b, *h_c, *h_cc;

CHECK(cudaMallocHost((void **) &h_a, sizeof(int)*m*n));

CHECK(cudaMallocHost((void **) &h_b, sizeof(int)*n*k));

CHECK(cudaMallocHost((void **) &h_c, sizeof(int)*m*k));

CHECK(cudaMallocHost((void **) &h_cc, sizeof(int)*m*k));

for (int i = 0; i < m; ++i) {

for (int j = 0; j < n; ++j) {

h_a[i * n + j] = rand() % 1024;

}

}

for (int i = 0; i < n; ++i) {

for (int j = 0; j < k; ++j) {

h_b[i * k + j] = rand() % 1024;

}

}

int *d_a, *d_b, *d_c;

CHECK(cudaMalloc((void **) &d_a, sizeof(int)*m*n));

CHECK(cudaMalloc((void **) &d_b, sizeof(int)*n*k));

CHECK(cudaMalloc((void **) &d_c, sizeof(int)*m*k));

// copy matrix A and B from host to device memory

CHECK(cudaMemcpy(d_a, h_a, sizeof(int)*m*n, cudaMemcpyHostToDevice));

CHECK(cudaMemcpy(d_b, h_b, sizeof(int)*n*k, cudaMemcpyHostToDevice));

unsigned int grid_rows = (m + BLOCK_SIZE - 1) / BLOCK_SIZE;

unsigned int grid_cols = (k + BLOCK_SIZE - 1) / BLOCK_SIZE;

dim3 dimGrid(grid_cols, grid_rows);

dim3 dimBlock(BLOCK_SIZE, BLOCK_SIZE);

//开始start Event

cudaEventRecord(start);

//非阻塞模式

cudaEventQuery(start);

//gpu_matrix_mult<<<dimGrid, dimBlock>>>(d_a, d_b, d_c, m, n, k);

gpu_matrix_mult_shared<<<dimGrid, dimBlock>>>(d_a, d_b, d_c, m, n, k);

//开始stop Event

cudaEventRecord(stop);

//由于要等待核函数执行完毕,所以选择阻塞模式

cudaEventSynchronize(stop);

//计算时间 stop-start

float elapsed_time;

CHECK(cudaEventElapsedTime(&elapsed_time, start, stop));

printf("start-》stop:Time = %g ms.\n", elapsed_time);

CHECK(cudaMemcpy(h_c, d_c, (sizeof(int)*m*k), cudaMemcpyDeviceToHost));

//cudaThreadSynchronize();

//开始stop2 Event

CHECK(cudaEventRecord(stop2));

//非阻塞模式

//CHECK(cudaEventSynchronize(stop2));

cudaEventQuery(stop2);

//计算时间 stop-stop2

float elapsed_time2;

cudaEventElapsedTime(&elapsed_time2, stop, stop2);

printf("stop-》stop2:Time = %g ms.\n", elapsed_time2);

//销毁Event

CHECK(cudaEventDestroy(start));

CHECK(cudaEventDestroy(stop));

CHECK(cudaEventDestroy(stop2));

//CPU函数计算

/*

cpu_matrix_mult(h_a, h_b, h_cc, m, n, k);

int ok = 1;

for (int i = 0; i < m; ++i)

{

for (int j = 0; j < k; ++j)

{

if(fabs(h_cc[i*k + j] - h_c[i*k + j])>(1.0e-10))

{

ok = 0;

}

}

}

if(ok)

{

printf("Pass!!!\n");

}

else

{

printf("Error!!!\n");

}

*/

// free memory

cudaFree(d_a);

cudaFree(d_b);

cudaFree(d_c);

cudaFreeHost(h_a);

cudaFreeHost(h_b);

cudaFreeHost(h_c);

return 0;

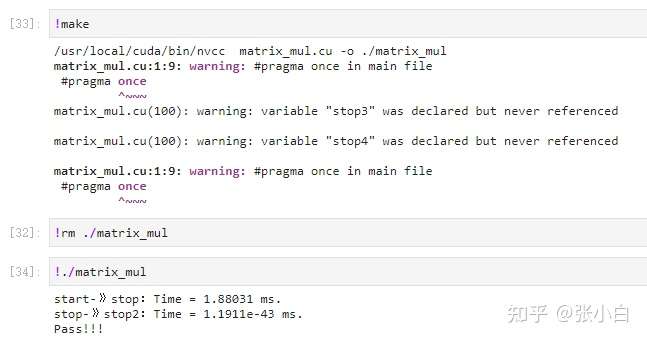

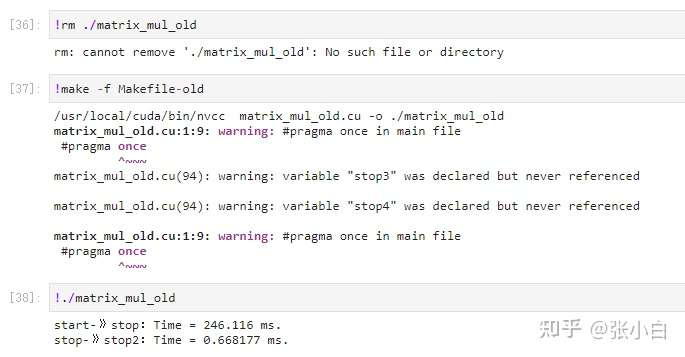

}重新执行下:

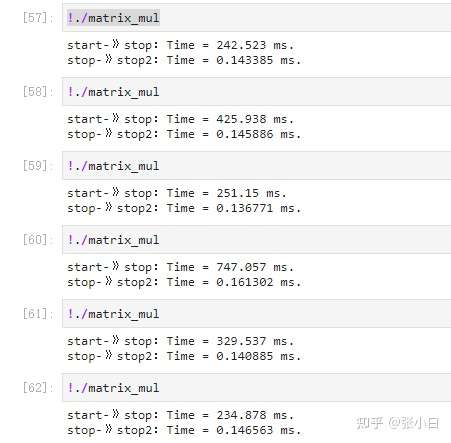

运行结果为 246ms。

改为统一内存后的代码呢?

修改如下:

#include <stdio.h>

#include <math.h>

#include "error.cuh"

#include "matrix_mul.cuh"

#define BLOCK_SIZE 32

__managed__ int u_a[1000*1000];

__managed__ int u_b[1000*1000];

__managed__ int u_c[1000*1000];

__managed__ int u_cc[1000*1000];

__global__ void gpu_matrix_mult(int *a,int *b, int *c, int m, int n, int k)

{

int row = blockIdx.y * blockDim.y + threadIdx.y;

int col = blockIdx.x * blockDim.x + threadIdx.x;

int sum = 0;

if( col < k && row < m)

{

for(int i = 0; i < n; i++)

{

sum += u_a[row * n + i] * u_b[i * k + col];

}

u_c[row * k + col] = sum;

}

}

__global__ void gpu_matrix_mult_shared(int *u_a, int *u_b, int *u_result, int m, int n, int k)

{

__shared__ int tile_a[BLOCK_SIZE][BLOCK_SIZE];

__shared__ int tile_b[BLOCK_SIZE][BLOCK_SIZE];

int row = blockIdx.y * BLOCK_SIZE + threadIdx.y;

int col = blockIdx.x * BLOCK_SIZE + threadIdx.x;

int tmp = 0;

int idx;

for (int sub = 0; sub < gridDim.x; ++sub)

{

idx = row * n + sub * BLOCK_SIZE + threadIdx.x;

tile_a[threadIdx.y][threadIdx.x] = row<n && (sub * BLOCK_SIZE + threadIdx.x)<n? u_a[idx]:0;

idx = (sub * BLOCK_SIZE + threadIdx.y) * n + col;

tile_b[threadIdx.y][threadIdx.x] = col<n && (sub * BLOCK_SIZE + threadIdx.y)<n? u_b[idx]:0;

__syncthreads();

for (int k = 0; k < BLOCK_SIZE; ++k)

{

tmp += tile_a[threadIdx.y][k] * tile_b[k][threadIdx.x];

}

__syncthreads();

}

if(row < n && col < n)

{

u_result[row * n + col] = tmp;

}

}

void cpu_matrix_mult(int *u_a, int *u_b, int *u_result, int m, int n, int k) {

for (int i = 0; i < m; ++i)

{

for (int j = 0; j < k; ++j)

{

int tmp = 0.0;

for (int h = 0; h < n; ++h)

{

tmp += u_a[i * n + h] * u_b[h * k + j];

}

u_result[i * k + j] = tmp;

}

}

}

int main(int argc, char const *argv[])

{

int m=1000;

int n=1000;

int k=1000;

//声明Event

cudaEvent_t start, stop, stop2, stop3 , stop4 ;

//创建Event

CHECK(cudaEventCreate(&start));

CHECK(cudaEventCreate(&stop));

CHECK(cudaEventCreate(&stop2));

//int *h_a, *h_b, *h_c, *h_cc;

//CHECK(cudaMallocHost((void **) &h_a, sizeof(int)*m*n));

//CHECK(cudaMallocHost((void **) &h_b, sizeof(int)*n*k));

//CHECK(cudaMallocHost((void **) &h_c, sizeof(int)*m*k));

//CHECK(cudaMallocHost((void **) &h_cc, sizeof(int)*m*k));

for (int i = 0; i < m; ++i) {

for (int j = 0; j < n; ++j) {

u_a[i * n + j] = rand() % 1024;

}

}

for (int i = 0; i < n; ++i) {

for (int j = 0; j < k; ++j) {

u_b[i * k + j] = rand() % 1024;

}

}

//int *d_a, *d_b, *d_c;

//CHECK(cudaMalloc((void **) &d_a, sizeof(int)*m*n));

//CHECK(cudaMalloc((void **) &d_b, sizeof(int)*n*k));

//CHECK(cudaMalloc((void **) &d_c, sizeof(int)*m*k));

// copy matrix A and B from host to device memory

//CHECK(cudaMemcpy(d_a, h_a, sizeof(int)*m*n, cudaMemcpyHostToDevice));

//CHECK(cudaMemcpy(d_b, h_b, sizeof(int)*n*k, cudaMemcpyHostToDevice));

unsigned int grid_rows = (m + BLOCK_SIZE - 1) / BLOCK_SIZE;

unsigned int grid_cols = (k + BLOCK_SIZE - 1) / BLOCK_SIZE;

dim3 dimGrid(grid_cols, grid_rows);

dim3 dimBlock(BLOCK_SIZE, BLOCK_SIZE);

//开始start Event

cudaEventRecord(start);

//非阻塞模式

cudaEventQuery(start);

//gpu_matrix_mult<<<dimGrid, dimBlock>>>(d_a, d_b, d_c, m, n, k);

gpu_matrix_mult_shared<<<dimGrid, dimBlock>>>(u_a, u_b, u_c, m, n, k);

//开始stop Event

cudaEventRecord(stop);

//由于要等待核函数执行完毕,所以选择阻塞模式

cudaEventSynchronize(stop);

//计算时间 stop-start

float elapsed_time;

CHECK(cudaEventElapsedTime(&elapsed_time, start, stop));

printf("start-》stop:Time = %g ms.\n", elapsed_time);

//CHECK(cudaMemcpy(h_c, d_c, (sizeof(int)*m*k), cudaMemcpyDeviceToHost));

//cudaThreadSynchronize();

//开始stop2 Event

CHECK(cudaEventRecord(stop2));

//非阻塞模式

//CHECK(cudaEventSynchronize(stop2));

cudaEventQuery(stop2);

//计算时间 stop-stop2

float elapsed_time2;

cudaEventElapsedTime(&elapsed_time2, stop, stop2);

printf("stop-》stop2:Time = %g ms.\n", elapsed_time2);

//销毁Event

CHECK(cudaEventDestroy(start));

CHECK(cudaEventDestroy(stop));

CHECK(cudaEventDestroy(stop2));

//CPU函数计算

/*

cpu_matrix_mult(u_a, u_b, u_cc, m, n, k);

int ok = 1;

for (int i = 0; i < m; ++i)

{

for (int j = 0; j < k; ++j)

{

if(fabs(u_cc[i*k + j] - u_c[i*k + j])>(1.0e-10))

{

ok = 0;

}

}

}

if(ok)

{

printf("Pass!!!\n");

}

else

{

printf("Error!!!\n");

}

*/

// free memory

//cudaFree(d_a);

//cudaFree(d_b);

//cudaFree(d_c);

//cudaFreeHost(h_a);

//cudaFreeHost(h_b);

//cudaFreeHost(h_c);

return 0;

}

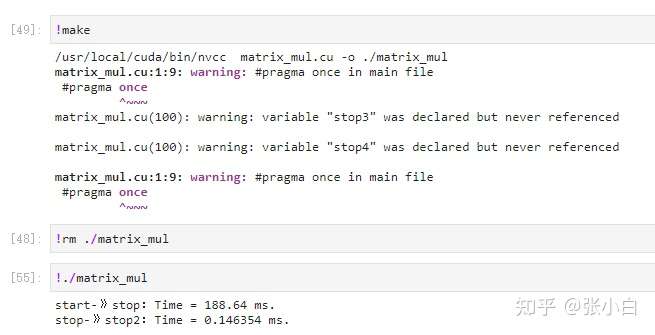

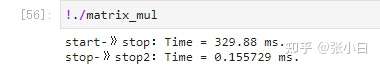

张小白发现了一个奇怪的现象:

多次运行统一内存的代码,在Nano上每次时间都不一样。有的比以前快,有些比以前慢。

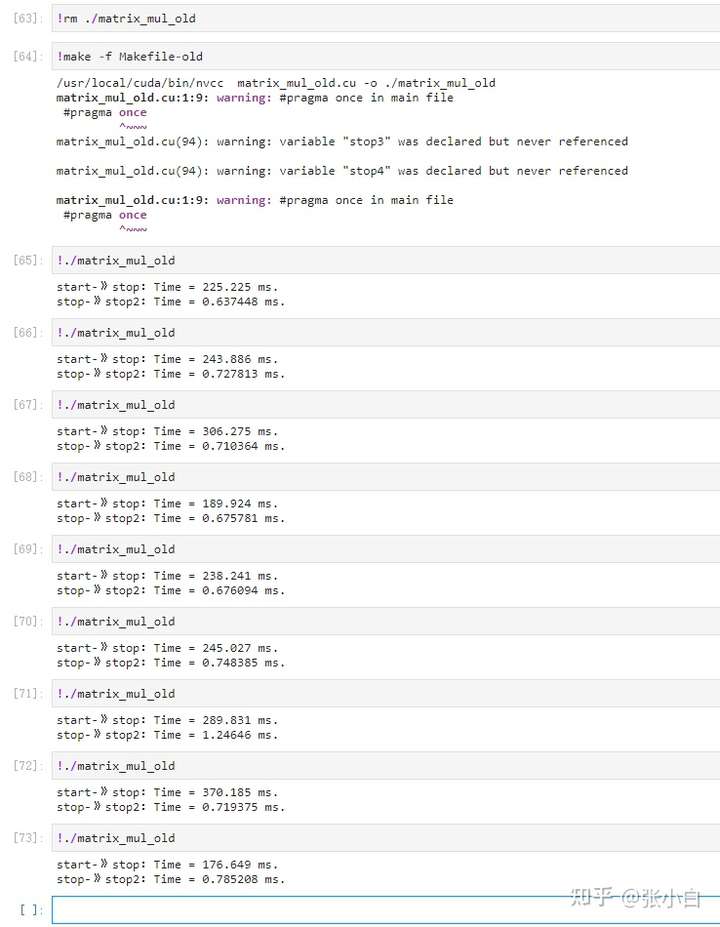

张小白又运行了改造前的代码:

好像也有点飘忽不定。。。

这是怎么回事呢?

原来,Jetson设备运行的时候需要一定的热身时间,热身过后,频率才能上去。可以先运行别的什么程序把kernel搞起来,然后再运行这段代码,也许就不会出现执行时间不一致的情况了。再说了,都是几秒钟的事情。其实刚热身好像就冷却下去了,这就像一个人刚跑了10米就不跑了。他的短跑成绩怎么能好呢?

要多学习刘耕宏(及其夫人),天天锻炼才行,对吧?!

(未完待续)

- 点赞

- 收藏

- 关注作者

评论(0)