Ascend310部署Qwen-VL-7B实现吸烟动作识别

【摘要】 本文详细介绍了在OrangePi AI Studio上使用Docker容器部署MindIE环境并运行Qwen2.5-VL-7B-Instruct多模态大模型实现吸烟动作识别的完整过程,验证了在Ascned 310p设备上运行多模态理解大模型的可靠性。

Ascend310部署Qwen-VL-7B实现吸烟动作识别

OrangePi AI Studio Pro是基于2个昇腾310P处理器的新一代高性能推理解析卡,提供基础通用算力+超强AI算力,整合了训练和推理的全部底层软件栈,实现训推一体。其中AI半精度FP16算力约为176TFLOPS,整数Int8精度可达352TOPS,本文将带领大家在Ascend 310P上部署Qwen2.5-VL-7B多模态理解大模型实现吸烟动作的识别。

一、环境配置

- 我们在

OrangePi AI Stuido上使用Docker容器部署MindIE:

docker pull swr.cn-south-1.myhuaweicloud.com/ascendhub/mindie:2.1.RC1-300I-Duo-py311-openeuler24.03-lts

root@orangepi:~# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

swr.cn-south-1.myhuaweicloud.com/ascendhub/mindie 2.1.RC1-300I-Duo-py311-openeuler24.03-lts 0574b8d4403f 3 months ago 20.4GB

langgenius/dify-web 1.0.1 b2b7363571c2 8 months ago 475MB

langgenius/dify-api 1.0.1 3dd892f50a2d 8 months ago 2.14GB

langgenius/dify-plugin-daemon 0.0.4-local 3f180f39bfbe 8 months ago 1.35GB

ubuntu/squid latest dae40da440fe 8 months ago 243MB

postgres 15-alpine afbf3abf6aeb 8 months ago 273MB

nginx latest b52e0b094bc0 9 months ago 192MB

swr.cn-south-1.myhuaweicloud.com/ascendhub/mindie 1.0.0-300I-Duo-py311-openeuler24.03-lts 74a5b9615370 10 months ago 17.5GB

redis 6-alpine 6dd588768b9b 10 months ago 30.2MB

langgenius/dify-sandbox 0.2.10 4328059557e8 13 months ago 567MB

semitechnologies/weaviate 1.19.0 8ec9f084ab23 2 years ago 52.5MB

- 之后创建一个名为

start-docker.sh的启动脚本,内容如下:

NAME=$1

if [ $# -ne 1 ]; then

echo "warning: need input container name.Use default: mindie"

NAME=mindie

fi

docker run --name ${NAME} -it -d --net=host --shm-size=500g \

--privileged=true \

-w /usr/local/Ascend/atb-models \

--device=/dev/davinci_manager \

--device=/dev/hisi_hdc \

--device=/dev/devmm_svm \

--entrypoint=bash \

-v /models:/models \

-v /data:/data \

-v /usr/local/Ascend/driver:/usr/local/Ascend/driver \

-v /usr/local/dcmi:/usr/local/dcmi \

-v /usr/local/bin/npu-smi:/usr/local/bin/npu-smi \

-v /usr/local/sbin:/usr/local/sbin \

-v /home:/home \

-v /tmp:/tmp \

-v /usr/share/zoneinfo/Asia/Shanghai:/etc/localtime \

-e http_proxy=$http_proxy \

-e https_proxy=$https_proxy \

-e "PATH=/usr/local/python3.11.6/bin:$PATH" \

swr.cn-south-1.myhuaweicloud.com/ascendhub/mindie:2.1.RC1-300I-Duo-py311-openeuler24.03-lts

bash start-docker.sh

- 启动容器后,我们需要替换几个文件并安装

Ascend-cann-nnal软件包:

root@orangepi:~# docker exec -it mindie bash

Welcome to 5.15.0-126-generic

System information as of time: Sat Nov 15 22:06:48 CST 2025

System load: 1.87

Memory used: 6.3%

Swap used: 0.0%

Usage On: 33%

Users online: 0

[root@orangepi atb-models]# cd /usr/local/Ascend/ascend-toolkit/8.2.RC1/lib64/

[root@orangepi lib64]# ls /data/fix_openeuler_docker/fixhccl/8.2hccl/

libhccl.so libhccl_alg.so libhccl_heterog.so libhccl_plf.so

[root@orangepi lib64]# cp /data/fix_openeuler_docker/fixhccl/8.2hccl/* ./

cp: overwrite './libhccl.so'?

cp: overwrite './libhccl_alg.so'?

cp: overwrite './libhccl_heterog.so'?

cp: overwrite './libhccl_plf.so'?

[root@orangepi lib64]# source /usr/local/Ascend/ascend-toolkit/set_env.sh

[root@orangepi lib64]# chmod +x /data/fix_openeuler_docker/Ascend-cann-nnal/Ascend-cann-nnal_8.3.RC1_linux-x86_64.run

[root@orangepi lib64]# /data/fix_openeuler_docker/Ascend-cann-nnal/Ascend-cann-nnal_8.3.RC1_linux-x86_64.run --install --quiet

[NNAL] [20251115-22:41:45] [INFO] LogFile:/var/log/ascend_seclog/ascend_nnal_install.log

[NNAL] [20251115-22:41:45] [INFO] Ascend-cann-atb_8.3.RC1_linux-x86_64.run --install --install-path=/usr/local/Ascend/nnal --install-for-all --quiet --nox11 start

WARNING: Running pip as the 'root' user can result in broken permissions and conflicting behaviour with the system package manager. It is recommended to use a virtual environment instead: https://pip.pypa.io/warnings/venv

[NNAL] [20251115-22:41:58] [INFO] Ascend-cann-atb_8.3.RC1_linux-x86_64.run --install --install-path=/usr/local/Ascend/nnal --install-for-all --quiet --nox11 install success

[NNAL] [20251115-22:41:58] [INFO] Ascend-cann-SIP_8.3.RC1_linux-x86_64.run --install --install-path=/usr/local/Ascend/nnal --install-for-all --quiet --nox11 start

[NNAL] [20251115-22:41:59] [INFO] Ascend-cann-SIP_8.3.RC1_linux-x86_64.run --install --install-path=/usr/local/Ascend/nnal --install-for-all --quiet --nox11 install success

[NNAL] [20251115-22:41:59] [INFO] Ascend-cann-nnal_8.3.RC1_linux-x86_64.run install success

Warning!!! If the environment variables of atb and asdsip are set at the same time, unexpected consequences will occur.

Import the corresponding environment variables based on the usage scenarios: atb for large model scenarios, asdsip for embedded scenarios.

Please make sure that the environment variables have been configured.

If you want to use atb module:

- To take effect for current user, you can exec command below: source /usr/local/Ascend/nnal/atb/set_env.sh or add "source /usr/local/Ascend/nnal/atb/set_env.sh" to ~/.bashrc.

If you want to use asdsip module:

- To take effect for current user, you can exec command below: source /usr/local/Ascend/nnal/asdsip/set_env.sh or add "source /usr/local/Ascend/nnal/asdsip/set_env.sh" to ~/.bashrc.

[root@orangepi lib64]# cat /usr/local/Ascend/nnal/atb/latest/version.info

Ascend-cann-atb : 8.3.RC1

Ascend-cann-atb Version : 8.3.RC1.B106

Platform : x86_64

branch : 8.3.rc1-0702

commit id : 16004f23040e0dcdd3cf0c64ecf36622487038ba

- 修改推理使用的逻辑

NPU核心为0,1,测试多模态理解大模型:Qwen2.5-VL-7B-Instruct:

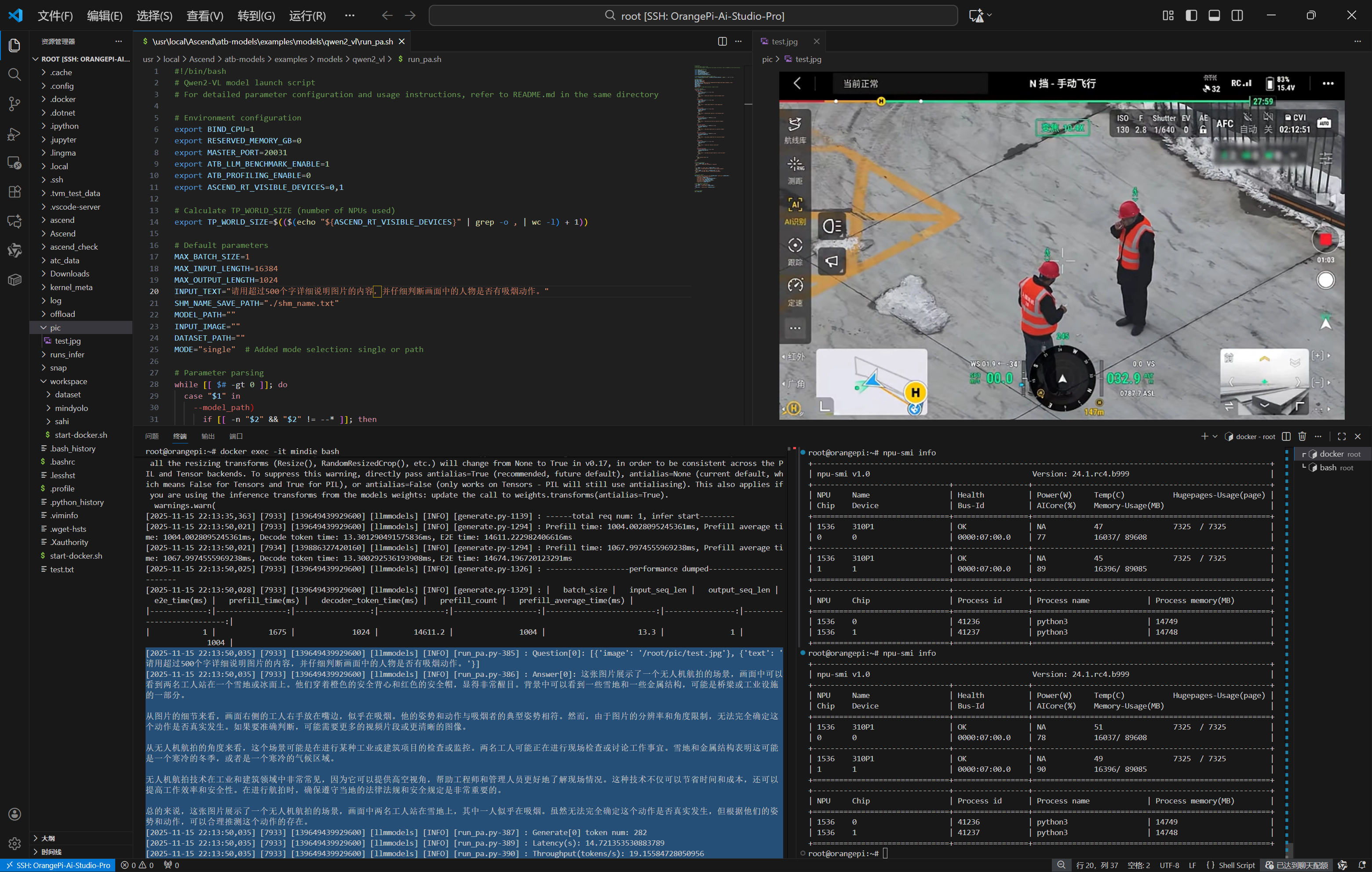

运行结果表明,Qwen2.5-VL-7B-Instruct在2 x Ascned 310P上推理平均每秒可以输出20个tokens,同时准确理解画面中的人物信息和行为动作。

[root@orangepi atb-models]# bash examples/models/qwen2_vl/run_pa.sh --model_path /models/Qwen2.5-VL-7B-Instruct/ --input_image /root/pic/test.jpg

[2025-11-15 22:12:49,663] torch.distributed.run: [WARNING]

[2025-11-15 22:12:49,663] torch.distributed.run: [WARNING] *****************************************

[2025-11-15 22:12:49,663] torch.distributed.run: [WARNING] Setting OMP_NUM_THREADS environment variable for each process to be 1 in default, to avoid your system being overloaded, please further tune the variable for optimal performance in your application as needed.

[2025-11-15 22:12:49,663] torch.distributed.run: [WARNING] *****************************************

/usr/local/lib64/python3.11/site-packages/torchvision/io/image.py:13: UserWarning: Failed to load image Python extension: 'libc10_cuda.so: cannot open shared object file: No such file or directory'If you don't plan on using image functionality from `torchvision.io`, you can ignore this warning. Otherwise, there might be something wrong with your environment. Did you have `libjpeg` or `libpng` installed before building `torchvision` from source?

warn(

/usr/local/lib64/python3.11/site-packages/torchvision/io/image.py:13: UserWarning: Failed to load image Python extension: 'libc10_cuda.so: cannot open shared object file: No such file or directory'If you don't plan on using image functionality from `torchvision.io`, you can ignore this warning. Otherwise, there might be something wrong with your environment. Did you have `libjpeg` or `libpng` installed before building `torchvision` from source?

warn(

2025-11-15 22:12:53.250 7934 LLM log default format: [yyyy-mm-dd hh:mm:ss.uuuuuu] [processid] [threadid] [llmmodels] [loglevel] [file:line] [status code] msg

2025-11-15 22:12:53.250 7933 LLM log default format: [yyyy-mm-dd hh:mm:ss.uuuuuu] [processid] [threadid] [llmmodels] [loglevel] [file:line] [status code] msg

[2025-11-15 22:12:53.250] [7934] [139886327420160] [llmmodels] [WARN] [model_factory.cpp:28] deepseekV2_DecoderModel model already exists, but the duplication doesn't matter.

[2025-11-15 22:12:53.250] [7933] [139649439929600] [llmmodels] [WARN] [model_factory.cpp:28] deepseekV2_DecoderModel model already exists, but the duplication doesn't matter.

[2025-11-15 22:12:53.250] [7934] [139886327420160] [llmmodels] [WARN] [model_factory.cpp:28] deepseekV2_DecoderModel model already exists, but the duplication doesn't matter.

[2025-11-15 22:12:53.250] [7933] [139649439929600] [llmmodels] [WARN] [model_factory.cpp:28] deepseekV2_DecoderModel model already exists, but the duplication doesn't matter.

[2025-11-15 22:12:53.250] [7934] [139886327420160] [llmmodels] [WARN] [model_factory.cpp:28] llama_LlamaDecoderModel model already exists, but the duplication doesn't matter.

[2025-11-15 22:12:53.250] [7933] [139649439929600] [llmmodels] [WARN] [model_factory.cpp:28] llama_LlamaDecoderModel model already exists, but the duplication doesn't matter.

[2025-11-15 22:12:55,335] [7934] [139886327420160] [llmmodels] [INFO] [cpu_binding.py-254] : rank_id: 1, device_id: 1, numa_id: 0, shard_devices: [0, 1], cpus: [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15]

[2025-11-15 22:12:55,336] [7934] [139886327420160] [llmmodels] [INFO] [cpu_binding.py-280] : process 7934, new_affinity is [8, 9, 10, 11, 12, 13, 14, 15], cpu count 8

[2025-11-15 22:12:55,356] [7933] [139649439929600] [llmmodels] [INFO] [cpu_binding.py-254] : rank_id: 0, device_id: 0, numa_id: 0, shard_devices: [0, 1], cpus: [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15]

[2025-11-15 22:12:55,357] [7933] [139649439929600] [llmmodels] [INFO] [cpu_binding.py-280] : process 7933, new_affinity is [0, 1, 2, 3, 4, 5, 6, 7], cpu count 8

[2025-11-15 22:12:56,032] [7933] [139649439929600] [llmmodels] [INFO] [model_runner.py-156] : model_runner.quantize: None, model_runner.kv_quant_type: None, model_runner.fa_quant_type: None, model_runner.dtype: torch.float16

[2025-11-15 22:13:01,826] [7933] [139649439929600] [llmmodels] [INFO] [dist.py-81] : initialize_distributed has been Set

[2025-11-15 22:13:01,827] [7933] [139649439929600] [llmmodels] [INFO] [model_runner.py-187] : init tokenizer done

Using a slow image processor as `use_fast` is unset and a slow processor was saved with this model. `use_fast=True` will be the default behavior in v4.48, even if the model was saved with a slow processor. This will result in minor differences in outputs. You'll still be able to use a slow processor with `use_fast=False`.

[2025-11-15 22:13:02,070] [7934] [139886327420160] [llmmodels] [INFO] [dist.py-81] : initialize_distributed has been Set

Using a slow image processor as `use_fast` is unset and a slow processor was saved with this model. `use_fast=True` will be the default behavior in v4.48, even if the model was saved with a slow processor. This will result in minor differences in outputs. You'll still be able to use a slow processor with `use_fast=False`.

[W InferFormat.cpp:62] Warning: Cannot create tensor with NZ format while dim < 2, tensor will be created with ND format. (function operator())

[W InferFormat.cpp:62] Warning: Cannot create tensor with NZ format while dim < 2, tensor will be created with ND format. (function operator())

[2025-11-15 22:13:08,435] [7933] [139649439929600] [llmmodels] [INFO] [flash_causal_qwen2.py-153] : >>>> qwen_QwenDecoderModel is called.

[2025-11-15 22:13:08,526] [7934] [139886327420160] [llmmodels] [INFO] [flash_causal_qwen2.py-153] : >>>> qwen_QwenDecoderModel is called.

[2025-11-15 22:13:16.666] [7933] [139649439929600] [llmmodels] [WARN] [operation_factory.cpp:42] OperationName: TransdataOperation not find in operation factory map

[2025-11-15 22:13:16.698] [7934] [139886327420160] [llmmodels] [WARN] [operation_factory.cpp:42] OperationName: TransdataOperation not find in operation factory map

[2025-11-15 22:13:22,379] [7933] [139649439929600] [llmmodels] [INFO] [model_runner.py-282] : model:

FlashQwen2vlForCausalLM(

(rotary_embedding): PositionRotaryEmbedding()

(attn_mask): AttentionMask()

(vision_tower): Qwen25VisionTransformerPretrainedModelATB(

(encoder): Qwen25VLVisionEncoderATB(

(layers): ModuleList(

(0-31): 32 x Qwen25VLVisionLayerATB(

(attn): VisionAttention(

(qkv): TensorParallelColumnLinear(

(linear): FastLinear()

)

(proj): TensorParallelRowLinear(

(linear): FastLinear()

)

)

(mlp): VisionMlp(

(gate_up_proj): TensorParallelColumnLinear(

(linear): FastLinear()

)

(down_proj): TensorParallelRowLinear(

(linear): FastLinear()

)

)

(norm1): BaseRMSNorm()

(norm2): BaseRMSNorm()

)

)

(patch_embed): FastPatchEmbed(

(proj): TensorReplicatedLinear(

(linear): FastLinear()

)

)

(patch_merger): PatchMerger(

(patch_merger_mlp_0): TensorParallelColumnLinear(

(linear): FastLinear()

)

(patch_merger_mlp_2): TensorParallelRowLinear(

(linear): FastLinear()

)

(patch_merger_ln_q): BaseRMSNorm()

)

)

(rotary_pos_emb): VisionRotaryEmbedding()

)

(language_model): FlashQwen2UsingMROPEForCausalLM(

(rotary_embedding): PositionRotaryEmbedding()

(attn_mask): AttentionMask()

(transformer): FlashQwenModel(

(wte): TensorEmbeddingWithoutChecking()

(h): ModuleList(

(0-27): 28 x FlashQwenLayer(

(attn): FlashQwenAttention(

(rotary_emb): PositionRotaryEmbedding()

(c_attn): TensorParallelColumnLinear(

(linear): FastLinear()

)

(c_proj): TensorParallelRowLinear(

(linear): FastLinear()

)

)

(mlp): QwenMLP(

(act): SiLU()

(w2_w1): TensorParallelColumnLinear(

(linear): FastLinear()

)

(c_proj): TensorParallelRowLinear(

(linear): FastLinear()

)

)

(ln_1): QwenRMSNorm()

(ln_2): QwenRMSNorm()

)

)

(ln_f): QwenRMSNorm()

)

(lm_head): TensorParallelHead(

(linear): FastLinear()

)

)

)

[2025-11-15 22:13:24,268] [7933] [139649439929600] [llmmodels] [INFO] [run_pa.py-134] : hbm_capacity(GB): 87.5078125, init_memory(GB): 11.376015624962747

[2025-11-15 22:13:24,789] [7933] [139649439929600] [llmmodels] [INFO] [run_pa.py-342] : pa_runner: PARunner(model_path=/models/Qwen2.5-VL-7B-Instruct/, input_text=请用超过500个字详细说明图片的内容,并仔细判断画面中的人物是否有吸烟动作。, max_position_embeddings=None, max_input_length=16384, max_output_length=1024, max_prefill_tokens=-1, load_tokenizer=True, enable_atb_torch=False, max_prefill_batch_size=None, max_batch_size=1, dtype=torch.float16, block_size=128, model_config=ModelConfig(num_heads=14, num_kv_heads=2, num_kv_heads_origin=4, head_size=128, k_head_size=128, v_head_size=128, num_layers=28, device=npu:0, dtype=torch.float16, soc_info=NPUSocInfo(soc_name='', soc_version=200, need_nz=True, matmul_nd_nz=False), kv_quant_type=None, fa_quant_type=None, mapping=Mapping(world_size=2, rank=0, num_nodes=1,pp_rank=0, pp_groups=[[0], [1]], micro_batch_size=1, attn_dp_groups=[[0], [1]], attn_tp_groups=[[0, 1]], attn_inner_sp_groups=[[0], [1]], attn_cp_groups=[[0], [1]], attn_o_proj_tp_groups=[[0], [1]], mlp_tp_groups=[[0, 1]], moe_ep_groups=[[0], [1]], moe_tp_groups=[[0, 1]]), cla_share_factor=1, model_type=qwen2_5_vl, enable_nz=False), max_memory=93960798208,

[2025-11-15 22:13:24,794] [7933] [139649439929600] [llmmodels] [INFO] [run_pa.py-122] : ---------------Begin warm_up---------------

[2025-11-15 22:13:24,794] [7933] [139649439929600] [llmmodels] [INFO] [cache.py-154] : kv cache will allocate 0.46484375GB memory

[2025-11-15 22:13:24,821] [7934] [139886327420160] [llmmodels] [INFO] [cache.py-154] : kv cache will allocate 0.46484375GB memory

[2025-11-15 22:13:24,827] [7933] [139649439929600] [llmmodels] [INFO] [generate.py-1139] : ------total req num: 1, infer start--------

[2025-11-15 22:13:26,002] [7934] [139886327420160] [llmmodels] [INFO] [flash_causal_qwen2.py-680] : <<<<<<<after transdata k_caches[0].shape=torch.Size([136, 16, 128, 16])

[2025-11-15 22:13:26,023] [7933] [139649439929600] [llmmodels] [INFO] [flash_causal_qwen2.py-676] : <<<<<<< ori k_caches[0].shape=torch.Size([136, 16, 128, 16])

[2025-11-15 22:13:26,023] [7933] [139649439929600] [llmmodels] [INFO] [flash_causal_qwen2.py-680] : <<<<<<<after transdata k_caches[0].shape=torch.Size([136, 16, 128, 16])

[2025-11-15 22:13:26,024] [7933] [139649439929600] [llmmodels] [INFO] [flash_causal_qwen2.py-705] : >>>>>>id of kcache is 139645634198608 id of vcache is 139645634198320

[2025-11-15 22:13:34,363] [7933] [139649439929600] [llmmodels] [INFO] [generate.py-1294] : Prefill time: 9476.590633392334ms, Prefill average time: 9476.590633392334ms, Decode token time: 54.94809150695801ms, E2E time: 9531.538724899292ms

[2025-11-15 22:13:34,363] [7934] [139886327420160] [llmmodels] [INFO] [generate.py-1294] : Prefill time: 9452.020645141602ms, Prefill average time: 9452.020645141602ms, Decode token time: 54.654598236083984ms, E2E time: 9506.675243377686ms

[2025-11-15 22:13:34,366] [7933] [139649439929600] [llmmodels] [INFO] [generate.py-1326] : -------------------performance dumped------------------------

[2025-11-15 22:13:34,371] [7933] [139649439929600] [llmmodels] [INFO] [generate.py-1329] : | batch_size | input_seq_len | output_seq_len | e2e_time(ms) | prefill_time(ms) | decoder_token_time(ms) | prefill_count | prefill_average_time(ms) |

|-------------:|----------------:|-----------------:|---------------:|-------------------:|-------------------------:|----------------:|---------------------------:|

| 1 | 16384 | 2 | 9531.54 | 9476.59 | 54.95 | 1 | 9476.59 |

/usr/local/lib64/python3.11/site-packages/torchvision/transforms/functional.py:1603: UserWarning: The default value of the antialias parameter of all the resizing transforms (Resize(), RandomResizedCrop(), etc.) will change from None to True in v0.17, in order to be consistent across the PIL and Tensor backends. To suppress this warning, directly pass antialias=True (recommended, future default), antialias=None (current default, which means False for Tensors and True for PIL), or antialias=False (only works on Tensors - PIL will still use antialiasing). This also applies if you are using the inference transforms from the models weights: update the call to weights.transforms(antialias=True).

warnings.warn(

[2025-11-15 22:13:35,307] [7933] [139649439929600] [llmmodels] [INFO] [run_pa.py-148] : warmup_memory(GB): 15.75

[2025-11-15 22:13:35,307] [7933] [139649439929600] [llmmodels] [INFO] [run_pa.py-153] : ---------------End warm_up---------------

/usr/local/lib64/python3.11/site-packages/torchvision/transforms/functional.py:1603: UserWarning: The default value of the antialias parameter of all the resizing transforms (Resize(), RandomResizedCrop(), etc.) will change from None to True in v0.17, in order to be consistent across the PIL and Tensor backends. To suppress this warning, directly pass antialias=True (recommended, future default), antialias=None (current default, which means False for Tensors and True for PIL), or antialias=False (only works on Tensors - PIL will still use antialiasing). This also applies if you are using the inference transforms from the models weights: update the call to weights.transforms(antialias=True).

warnings.warn(

[2025-11-15 22:13:35,363] [7933] [139649439929600] [llmmodels] [INFO] [generate.py-1139] : ------total req num: 1, infer start--------

[2025-11-15 22:13:50,021] [7933] [139649439929600] [llmmodels] [INFO] [generate.py-1294] : Prefill time: 1004.0028095245361ms, Prefill average time: 1004.0028095245361ms, Decode token time: 13.301290491575836ms, E2E time: 14611.222982406616ms

[2025-11-15 22:13:50,021] [7934] [139886327420160] [llmmodels] [INFO] [generate.py-1294] : Prefill time: 1067.9974555969238ms, Prefill average time: 1067.9974555969238ms, Decode token time: 13.300292536193908ms, E2E time: 14674.196720123291ms

[2025-11-15 22:13:50,025] [7933] [139649439929600] [llmmodels] [INFO] [generate.py-1326] : -------------------performance dumped------------------------

[2025-11-15 22:13:50,028] [7933] [139649439929600] [llmmodels] [INFO] [generate.py-1329] : | batch_size | input_seq_len | output_seq_len | e2e_time(ms) | prefill_time(ms) | decoder_token_time(ms) | prefill_count | prefill_average_time(ms) |

|-------------:|----------------:|-----------------:|---------------:|-------------------:|-------------------------:|----------------:|---------------------------:|

| 1 | 1675 | 1024 | 14611.2 | 1004 | 13.3 | 1 | 1004 |

[2025-11-15 22:13:50,035] [7933] [139649439929600] [llmmodels] [INFO] [run_pa.py-385] : Question[0]: [{'image': '/root/pic/test.jpg'}, {'text': '请用超过500个字详细说明图片的内容,并仔细判断画面中的人物是否有吸烟动作。'}]

[2025-11-15 22:13:50,035] [7933] [139649439929600] [llmmodels] [INFO] [run_pa.py-386] : Answer[0]: 这张图片展示了一个无人机航拍的场景,画面中可以看到两名工人站在一个雪地或冰面上。他们穿着橙色的安全背心和红色的安全帽,显得非常醒目。背景中可以看到一些雪地和一些金属结构,可能是桥梁或工业设施的一部分。

从图片的细节来看,画面右侧的工人右手放在嘴边,似乎在吸烟。他的姿势和动作与吸烟者的典型姿势相符。然而,由于图片的分辨率和角度限制,无法完全确定这个动作是否真实发生。如果要准确判断,可能需要更多的视频片段或更清晰的图像。

从无人机航拍的角度来看,这个场景可能是在进行某种工业或建筑项目的检查或监控。两名工人可能正在进行现场检查或讨论工作事宜。雪地和金属结构表明这可能是一个寒冷的冬季,或者是一个寒冷的气候区域。

无人机航拍技术在工业和建筑领域中非常常见,因为它可以提供高空视角,帮助工程师和管理人员更好地了解现场情况。这种技术不仅可以节省时间和成本,还可以提高工作效率和安全性。在进行航拍时,确保遵守当地的法律法规和安全规定是非常重要的。

总的来说,这张图片展示了一个无人机航拍的场景,画面中两名工人站在雪地上,其中一人似乎在吸烟。虽然无法完全确定这个动作是否真实发生,但根据他们的姿势和动作,可以合理推测这个动作的存在。

[2025-11-15 22:13:50,035] [7933] [139649439929600] [llmmodels] [INFO] [run_pa.py-387] : Generate[0] token num: 282

[2025-11-15 22:13:50,035] [7933] [139649439929600] [llmmodels] [INFO] [run_pa.py-389] : Latency(s): 14.721353530883789

[2025-11-15 22:13:50,035] [7933] [139649439929600] [llmmodels] [INFO] [run_pa.py-390] : Throughput(tokens/s): 19.15584728050956

本文详细介绍了在OrangePi AI Studio上使用Docker容器部署MindIE环境并运行Qwen2.5-VL-7B-Instruct多模态大模型实现吸烟动作识别的完整过程,验证了在Ascned 310p设备上运行多模态理解大模型的可靠性。

【声明】本内容来自华为云开发者社区博主,不代表华为云及华为云开发者社区的观点和立场。转载时必须标注文章的来源(华为云社区)、文章链接、文章作者等基本信息,否则作者和本社区有权追究责任。如果您发现本社区中有涉嫌抄袭的内容,欢迎发送邮件进行举报,并提供相关证据,一经查实,本社区将立刻删除涉嫌侵权内容,举报邮箱:

cloudbbs@huaweicloud.com

- 点赞

- 收藏

- 关注作者

评论(0)