@[toc]

1. Implementing Activation Functions

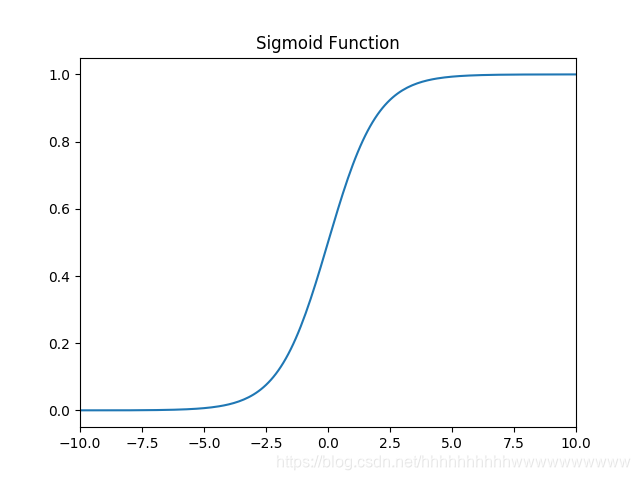

1.1 sigmoid

1.1.1 Function

The function:

$$f(x)=\frac{1}{1+e^{-x}}$$

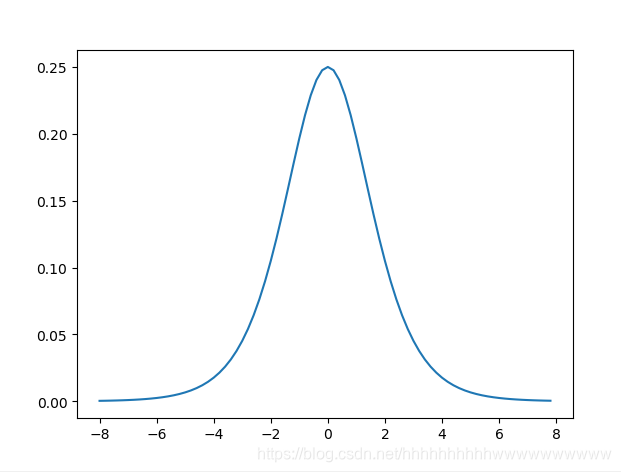

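1.1.2 Derivative

Derivation, using the quotient rule:

$$\left(\frac{u}{v}\right)'=\frac{u'v-uv'}{v^2}$$

$$\begin{aligned}
f'(x)&=\left(\frac{1}{1+e^{-x}}\right)'
=\frac{1'\times(1+e^{-x})-1\times(1+e^{-x})'}{(1+e^{-x})^2}
=\frac{e^{-x}}{(1+e^{-x})^2}\\
&=\frac{1+e^{-x}-1}{(1+e^{-x})^2}
=\left(\frac{1}{1+e^{-x}}\right)\left(1-\frac{1}{1+e^{-x}}\right)
=f(x)\,(1-f(x))
\end{aligned}$$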

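1.1.3 Code implementation

    import numpy as np


    class Sigmoid():
        def __call__(self, x):
            # Logistic function: 1 / (1 + e^(-x))
            return 1 / (1 + np.exp(-x))

        def gradient(self, x):
            # f'(x) = f(x) * (1 - f(x)); evaluate the forward pass once
            s = self.__call__(x)
            return s * (1 - s)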
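As a quick sanity check on the identity f'(x) = f(x)(1 - f(x)), the sketch below compares the analytic derivative with a central finite difference. The helper `numerical_gradient` and the step size `eps` are illustrative assumptions and are not part of the original code.

```python
import numpy as np


def sigmoid(x):
    """Logistic function 1 / (1 + e^(-x))."""
    return 1 / (1 + np.exp(-x))


def sigmoid_gradient(x):
    """Analytic derivative f(x) * (1 - f(x))."""
    s = sigmoid(x)
    return s * (1 - s)


def numerical_gradient(f, x, eps=1e-5):
    """Central finite difference (hypothetical helper used only for checking)."""
    return (f(x + eps) - f(x - eps)) / (2 * eps)


x = np.linspace(-5.0, 5.0, 11)
max_error = np.max(np.abs(sigmoid_gradient(x) - numerical_gradient(sigmoid, x)))
print(max_error)  # should be very close to zero
```

The same kind of check can be reused for the other activation functions by swapping in their forward and gradient functions.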

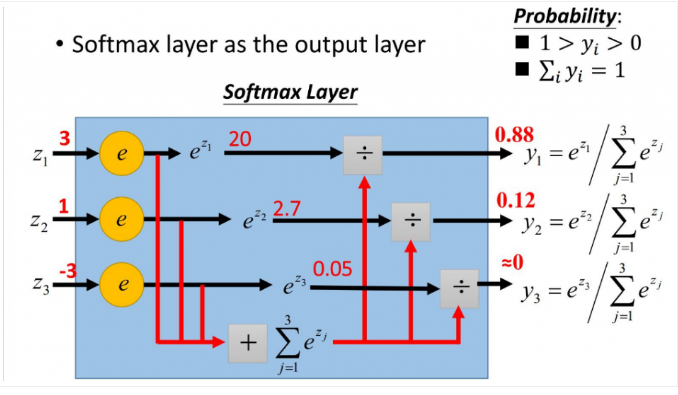

1.2 softmax

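1.2.1 Function

Softmax is used in multi-class classification. It maps the outputs of several neurons into the interval (0, 1) so that they sum to 1 and can be read as class probabilities.

Suppose we have an array $V$, where $V_i$ denotes its $i$-th element. The softmax value of that element is:

$$S_i=\frac{e^{V_i}}{\sum_j e^{V_j}}$$

More concretely, for three outputs $z_1, z_2, z_3$:

$$y_1=\frac{e^{z_1}}{e^{z_1}+e^{z_2}+e^{z_3}},\qquad
y_2=\frac{e^{z_2}}{e^{z_1}+e^{z_2}+e^{z_3}},\qquad
y_3=\frac{e^{z_3}}{e^{z_1}+e^{z_2}+e^{z_3}}\tag{1}$$

Gradient descent needs a loss function. Cross-entropy is the usual choice, and it has the form:

$$Loss=-\sum_i y_i\ln a_i\tag{2}$$

In equation (2), $y_i$ is the true label for class $i$ (not the output $y_i$ of equation (1)) and $a_i$ is the predicted probability, i.e. the softmax output.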
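To make equations (1) and (2) concrete, here is a minimal sketch that computes the softmax of three scores and the cross-entropy loss against a one-hot label. The scores, the label, and the small constant `eps` inside the logarithm are assumptions chosen for illustration.

```python
import numpy as np


def softmax(z):
    """Softmax along the last axis, shifted by the max for numerical stability."""
    e_z = np.exp(z - np.max(z, axis=-1, keepdims=True))
    return e_z / np.sum(e_z, axis=-1, keepdims=True)


def cross_entropy(y_true, a, eps=1e-12):
    """Loss = -sum_i y_i * ln(a_i); eps (an assumption) guards against ln(0)."""
    return -np.sum(y_true * np.log(a + eps), axis=-1)


z = np.array([1.0, 2.0, 3.0])        # example scores z1, z2, z3
y_true = np.array([0.0, 0.0, 1.0])   # one-hot label: the third class is correct

a = softmax(z)
loss = cross_entropy(y_true, a)
print(a)        # three probabilities that sum to 1
print(loss)     # equals -ln(a[2]) because the label is one-hot
```

With a one-hot label, only the term for the correct class survives in the sum, so the loss reduces to the negative log of the probability assigned to that class.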

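1.2.2 Derivative

The derivative has two cases.

Case 1, $j=i$ (differentiating $S_i$ with respect to its own input $V_i$):

$$S_i=\frac{e^{V_i}}{\sum_j e^{V_j}}$$

Writing $f=S_i$ and taking the derivative with respect to $V_i$:

$$\begin{aligned}
f'&=\left(\frac{e^{V_i}}{\sum_j e^{V_j}}\right)'
=\frac{(e^{V_i})'\times\sum_j e^{V_j}-e^{V_i}\times e^{V_i}}{\left(\sum_j e^{V_j}\right)^2}\\
&=\frac{e^{V_i}}{\sum_j e^{V_j}}-\frac{e^{V_i}}{\sum_j e^{V_j}}\times\frac{e^{V_i}}{\sum_j e^{V_j}}
=\frac{e^{V_i}}{\sum_j e^{V_j}}\left(1-\frac{e^{V_i}}{\sum_j e^{V_j}}\right)
=f(1-f)
\end{aligned}$$

Case 2, $j\neq i$: the numerator $e^{V_i}$ does not depend on $V_j$, so only the denominator contributes and $\frac{\partial S_i}{\partial V_j}=-S_iS_j$.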

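1.2.3 Code implementation

    import numpy as np


    class Softmax():
        def __call__(self, x):
            # Subtract the max along the last axis before exponentiating to avoid overflow
            e_x = np.exp(x - np.max(x, axis=-1, keepdims=True))
            return e_x / np.sum(e_x, axis=-1, keepdims=True)

        def gradient(self, x):
            # Only the j = i case: p_i * (1 - p_i), the diagonal of the Jacobian
            p = self.__call__(x)
            return p * (1 - p)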
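The `gradient` method above returns `p * (1 - p)`, which covers only the $j=i$ case. For $j\neq i$ the derivative of softmax is $-S_iS_j$, so the full derivative is a matrix. The sketch below builds that Jacobian for a single 1-D input; the function name `softmax_jacobian` is a hypothetical helper, not part of the original code.

```python
import numpy as np


def softmax(x):
    e_x = np.exp(x - np.max(x, axis=-1, keepdims=True))
    return e_x / np.sum(e_x, axis=-1, keepdims=True)


def softmax_jacobian(x):
    """Full Jacobian J[i, j] = dS_i/dx_j for a 1-D input x (hypothetical helper)."""
    p = softmax(x)
    # diag(p) - p p^T: p_i * (1 - p_i) on the diagonal, -p_i * p_j elsewhere
    return np.diag(p) - np.outer(p, p)


x = np.array([1.0, 2.0, 3.0])
p = softmax(x)
J = softmax_jacobian(x)

diagonal_matches = np.allclose(np.diag(J), p * (1 - p))
columns_sum_to_zero = np.allclose(J.sum(axis=0), 0.0)
print(diagonal_matches, columns_sum_to_zero)
```

The columns sum to zero because the softmax outputs always sum to 1, so any change in one input is redistributed among the outputs.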

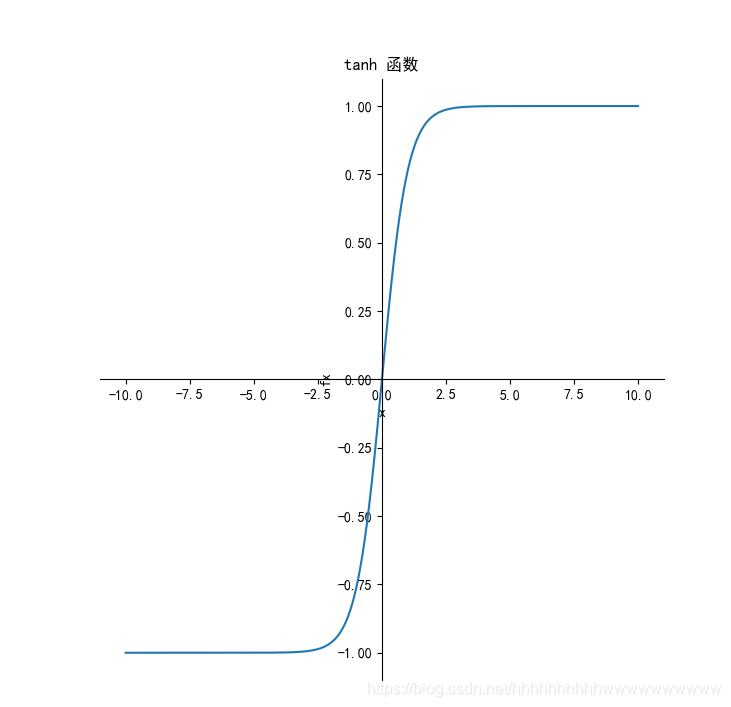

1.3 tanh

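1.3.1 Function

$$\tanh(x)=\frac{e^x-e^{-x}}{e^x+e^{-x}}$$

1.3.2 Derivative

Derivation:

$$\begin{aligned}
\tanh'(x)&=\left(\frac{e^x-e^{-x}}{e^x+e^{-x}}\right)'
=\frac{(e^x-e^{-x})'(e^x+e^{-x})-(e^x-e^{-x})(e^x+e^{-x})'}{(e^x+e^{-x})^2}\\
&=\frac{(e^x+e^{-x})^2-(e^x-e^{-x})^2}{(e^x+e^{-x})^2}
=1-\left(\frac{e^x-e^{-x}}{e^x+e^{-x}}\right)^2
=1-\tanh^2(x)
\end{aligned}$$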

1.3.3 Code implementation

    import numpy as np


    class TanH():
        def __call__(self, x):
            # tanh(x) written as 2 * sigmoid(2x) - 1
            return 2 / (1 + np.exp(-2*x)) - 1

        def gradient(self, x):
            # tanh'(x) = 1 - tanh(x)^2
            return 1 - np.power(self.__call__(x), 2)
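The implementation computes `2 / (1 + np.exp(-2*x)) - 1` rather than the exponential ratio from the definition. The two are equal because $\frac{2}{1+e^{-2x}}-1=\frac{1-e^{-2x}}{1+e^{-2x}}=\frac{e^x-e^{-x}}{e^x+e^{-x}}$. A small sketch that confirms this numerically, using NumPy's built-in `np.tanh` and `np.cosh` as references (the sample points are arbitrary):

```python
import numpy as np

x = np.linspace(-4.0, 4.0, 9)

tanh_from_definition = (np.exp(x) - np.exp(-x)) / (np.exp(x) + np.exp(-x))
tanh_rescaled_sigmoid = 2 / (1 + np.exp(-2 * x)) - 1

matches_definition = np.allclose(tanh_from_definition, tanh_rescaled_sigmoid)
matches_numpy = np.allclose(tanh_rescaled_sigmoid, np.tanh(x))

# 1 - tanh(x)^2 is the same quantity as sech(x)^2 = 1 / cosh(x)^2
gradient_matches = np.allclose(1 - tanh_rescaled_sigmoid ** 2, 1 / np.cosh(x) ** 2)
print(matches_definition, matches_numpy, gradient_matches)
```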

1.4 relu

1.4.1 Function

$$f(x)=\max(0,x)$$

1.4.2 Derivative

$$f'(x)=\begin{cases}1 & \text{if } x>0\\ 0 & \text{if } x\le 0\end{cases}$$

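1.4.3 Code implementation

    import numpy as np


    class ReLU():
        def __call__(self, x):
            # max(0, x), element-wise
            return np.where(x >= 0, x, 0)

        def gradient(self, x):
            # 1 for x > 0, 0 for x <= 0, matching the derivative in 1.4.2
            return np.where(x > 0, 1, 0)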

1.5 leakyrelu

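1.5.1 Function

$$f(x)=\max(ax,x)$$

Here $a$ is a small positive slope with $0<a<1$ (`alpha` in the code).

1.5.2 Derivative

$$f'(x)=\begin{cases}1 & \text{if } x>0\\ a & \text{if } x\le 0\end{cases}$$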

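1.5.3 Code implementation

    import numpy as np


    class LeakyReLU():
        def __init__(self, alpha=0.2):
            # Slope used for negative inputs
            self.alpha = alpha

        def __call__(self, x):
            return np.where(x >= 0, x, self.alpha * x)

        def gradient(self, x):
            # 1 for x > 0, alpha for x <= 0, matching the derivative in 1.5.2
            return np.where(x > 0, 1, self.alpha)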
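To see how ReLU and LeakyReLU differ on negative inputs, the sketch below evaluates both, together with their derivatives, on a few sample values. The sample points are arbitrary, and the derivative at x = 0 follows the x <= 0 branch of the formulas in 1.4.2 and 1.5.2.

```python
import numpy as np

x = np.array([-2.0, -0.5, 0.0, 3.0])
alpha = 0.2  # same default slope as the LeakyReLU class

relu_out = np.maximum(0, x)                # [0, 0, 0, 3]
leaky_out = np.maximum(alpha * x, x)       # [-0.4, -0.1, 0, 3]

relu_grad = np.where(x > 0, 1.0, 0.0)      # [0, 0, 0, 1]
leaky_grad = np.where(x > 0, 1.0, alpha)   # [0.2, 0.2, 0.2, 1]

print(relu_out, leaky_out)
print(relu_grad, leaky_grad)
```

ReLU passes no gradient for negative inputs, while LeakyReLU keeps a small slope `alpha` there.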

1.6 ELU

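1.6.1 Function

$$f(x)=\begin{cases}x, & \text{if } x\ge 0\\ a(e^x-1), & \text{if } x<0\end{cases}$$

1.6.2 Derivative

For $x\ge 0$, the derivative is 1.

For $x<0$, the derivation is:

$$f'(x)=\left(a(e^x-1)\right)'=ae^x=a(e^x-1)+a=f(x)+a$$

So the complete derivative is:

$$f'(x)=\begin{cases}1 & \text{if } x\ge 0\\ f(x)+a=ae^x & \text{if } x<0\end{cases}$$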

1.6.3 Code implementation

    import numpy as np


    class ELU():
        def __init__(self, alpha=0.1):
            self.alpha = alpha

        def __call__(self, x):
            return np.where(x >= 0.0, x, self.alpha * (np.exp(x) - 1))

        def gradient(self, x):
            # For x < 0 the derivative is f(x) + alpha, which equals alpha * e^x
            return np.where(x >= 0.0, 1, self.__call__(x) + self.alpha)
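The gradient above reuses the forward value through the identity $f(x)+a=ae^x$ for $x<0$. The sketch below checks that identity and compares the analytic gradient with a central finite difference. The sample points and the step `eps` are assumptions. The point x = 0 is left out because the slope jumps there whenever `alpha` differs from 1.

```python
import numpy as np

alpha = 0.1  # default alpha of the ELU class


def elu(x):
    return np.where(x >= 0.0, x, alpha * (np.exp(x) - 1))


def elu_gradient(x):
    # Reuses the forward value on the negative branch: f(x) + alpha
    return np.where(x >= 0.0, 1.0, elu(x) + alpha)


x = np.array([-3.0, -1.0, -0.1, 0.5, 2.0])
negative = x < 0

# Identity from the derivation: f(x) + alpha equals alpha * e^x for x < 0
identity_holds = np.allclose(elu(x[negative]) + alpha, alpha * np.exp(x[negative]))

# Central finite difference as an independent reference
eps = 1e-6
numeric = (elu(x + eps) - elu(x - eps)) / (2 * eps)
gradient_matches = np.allclose(elu_gradient(x), numeric, atol=1e-6)
print(identity_holds, gradient_matches)
```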

1.7 selu

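1.7.1 Function

$$\mathrm{selu}(x)=\lambda\begin{cases}x & \text{if } x>0\\ \alpha e^x-\alpha & \text{if } x\le 0\end{cases}$$

1.7.2 Derivative

$$\mathrm{selu}'(x)=\lambda\begin{cases}1 & \text{if } x>0\\ \alpha e^x & \text{if } x\le 0\end{cases}$$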

1.7.3 Code implementation

    import numpy as np


    class SELU():
        def __init__(self):
            # Fixed SELU constants: alpha and the scale factor lambda
            self.alpha = 1.6732632423543772848170429916717
            self.scale = 1.0507009873554804934193349852946

        def __call__(self, x):
            return self.scale * np.where(x >= 0.0, x, self.alpha*(np.exp(x)-1))

        def gradient(self, x):
            # lambda for x > 0, lambda * alpha * e^x for x <= 0, matching 1.7.2
            return self.scale * np.where(x > 0.0, 1, self.alpha * np.exp(x))

1.8 softplus

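1.8.1 Function

$$\mathrm{Softplus}(x)=\log(1+e^x)$$

1.8.2 Derivative

Here $\log$ is the natural logarithm (base $e$).

$$f'(x)=\frac{e^x}{(1+e^x)\ln e}=\frac{1}{1+e^{-x}}=\sigma(x)$$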

1.8.3 Code implementation

    import numpy as np


    class SoftPlus():
        def __call__(self, x):
            # log(1 + e^x)
            return np.log(1 + np.exp(x))

        def gradient(self, x):
            # The derivative of softplus is the sigmoid function
            return 1 / (1 + np.exp(-x))
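One caveat with `np.log(1 + np.exp(x))` is that `np.exp(x)` overflows for large `x`. A possible alternative, shown here as a sketch and not as the original author's method, is NumPy's `np.logaddexp`, which evaluates $\log(e^{a}+e^{b})$ in a numerically stable way. With $a=0$ this is exactly softplus. The function names below are illustrative.

```python
import numpy as np


def softplus_naive(x):
    return np.log(1 + np.exp(x))


def softplus_stable(x):
    """log(1 + e^x) computed as log(e^0 + e^x) via np.logaddexp."""
    return np.logaddexp(0.0, x)


x = np.array([-2.0, 0.0, 2.0])
agree_on_small_inputs = np.allclose(softplus_naive(x), softplus_stable(x))

big = np.array([1000.0])
with np.errstate(over="ignore"):       # silence the expected overflow warning
    naive_big = softplus_naive(big)    # exp overflows, so the result is inf
stable_big = softplus_stable(big)      # stays finite, approximately 1000

print(agree_on_small_inputs, naive_big, stable_big)
```

For moderate inputs the two versions agree, so the swap only matters when inputs can be large.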

1.9 Swish

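1.9.1 Function

$$f(x)=x\cdot\mathrm{sigmoid}(\beta x)$$

1.9.2 Derivative

Writing $\sigma$ for the sigmoid function:

$$\begin{aligned}
f'(x)&=\sigma(\beta x)+\beta x\cdot\sigma(\beta x)\,(1-\sigma(\beta x))\\
&=\sigma(\beta x)+\beta x\cdot\sigma(\beta x)-\beta x\cdot\sigma(\beta x)^2\\
&=\beta x\cdot\sigma(\beta x)+\sigma(\beta x)\,(1-\beta x\cdot\sigma(\beta x))\\
&=\beta f(x)+\sigma(\beta x)\,(1-\beta f(x))
\end{aligned}$$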

1.9.3 Code implementation

    import numpy as np


    class Swish(object):
        def __init__(self, b):
            # b is the beta parameter in f(x) = x * sigmoid(beta * x)
            self.b = b

        def __call__(self, x):
            return x * (np.exp(self.b * x) / (np.exp(self.b * x) + 1))

        def gradient(self, x):
            # f'(x) = beta * f(x) + sigmoid(beta * x) * (1 - beta * f(x))
            sig = 1 / (1 + np.exp(-self.b * x))
            fx = x * sig
            return self.b * fx + sig * (1 - self.b * fx)
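Because the closed form $\beta f(x)+\sigma(\beta x)(1-\beta f(x))$ is easy to mistype, it is worth checking numerically. The sketch below compares it with a central finite difference. The value of `beta`, the sample points, and the step `eps` are arbitrary assumptions.

```python
import numpy as np


def sigmoid(x):
    return 1 / (1 + np.exp(-x))


def swish(x, beta):
    return x * sigmoid(beta * x)


def swish_gradient(x, beta):
    """beta * f(x) + sigmoid(beta * x) * (1 - beta * f(x))."""
    fx = swish(x, beta)
    return beta * fx + sigmoid(beta * x) * (1 - beta * fx)


beta = 1.5  # arbitrary example value
x = np.linspace(-3.0, 3.0, 13)

eps = 1e-6
numeric = (swish(x + eps, beta) - swish(x - eps, beta)) / (2 * eps)
max_error = np.max(np.abs(swish_gradient(x, beta) - numeric))
print(max_error)  # should be very close to zero
```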

1.10 Mish

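1.10.1 Function

$$f(x)=x\cdot\tanh(\ln(1+e^x))$$

1.10.2 Derivative

$$f'(x)=\mathrm{sech}^2(\mathrm{softplus}(x))\,x\,\mathrm{sigmoid}(x)+\frac{f(x)}{x}=\Delta(x)\,\mathrm{swish}(x)+\frac{f(x)}{x}$$

where $\mathrm{softplus}(x)=\ln(1+e^x)$, $\mathrm{sigmoid}(x)=1/(1+e^{-x})$, $\Delta(x)=\mathrm{sech}^2(\mathrm{softplus}(x))$ and $\mathrm{swish}(x)=x\cdot\mathrm{sigmoid}(x)$. Note that $f(x)/x=\tanh(\mathrm{softplus}(x))$, which is the form used in the code.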

1.10.3 Code implementation

    import numpy as np


    def sech(x):
        """sech function."""
        return 2 / (np.exp(x) + np.exp(-x))


    def sigmoid(x):
        """sigmoid function."""
        return 1 / (1 + np.exp(-x))


    def soft_plus(x):
        """softplus function."""
        return np.log(1 + np.exp(x))


    def tan_h(x):
        """tanh function."""
        return (np.exp(x) - np.exp(-x)) / (np.exp(x) + np.exp(-x))


    class Mish:
        def __call__(self, x):
            return x * tan_h(soft_plus(x))

        def gradient(self, x):
            # sech^2(softplus(x)) * x * sigmoid(x) + tanh(softplus(x))
            sp = soft_plus(x)
            return sech(sp) * sech(sp) * x * sigmoid(x) + tan_h(sp)
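The code replaces the $f(x)/x$ term of the derivative with $\tanh(\mathrm{softplus}(x))$, which is the same quantity but avoids dividing by zero at x = 0. The sketch below checks the resulting formula against a central finite difference, using NumPy's built-in `np.tanh` and `np.cosh`. The sample points and the step `eps` are assumptions.

```python
import numpy as np


def softplus(x):
    return np.log(1 + np.exp(x))


def sigmoid(x):
    return 1 / (1 + np.exp(-x))


def mish(x):
    return x * np.tanh(softplus(x))


def mish_gradient(x):
    """sech^2(softplus(x)) * x * sigmoid(x) + tanh(softplus(x))."""
    sp = softplus(x)
    sech2 = 1 / np.cosh(sp) ** 2
    return sech2 * x * sigmoid(x) + np.tanh(sp)


x = np.linspace(-3.0, 3.0, 13)  # includes x = 0

eps = 1e-6
numeric = (mish(x + eps) - mish(x - eps)) / (2 * eps)
max_error = np.max(np.abs(mish_gradient(x) - numeric))
print(max_error)  # should be very close to zero
```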

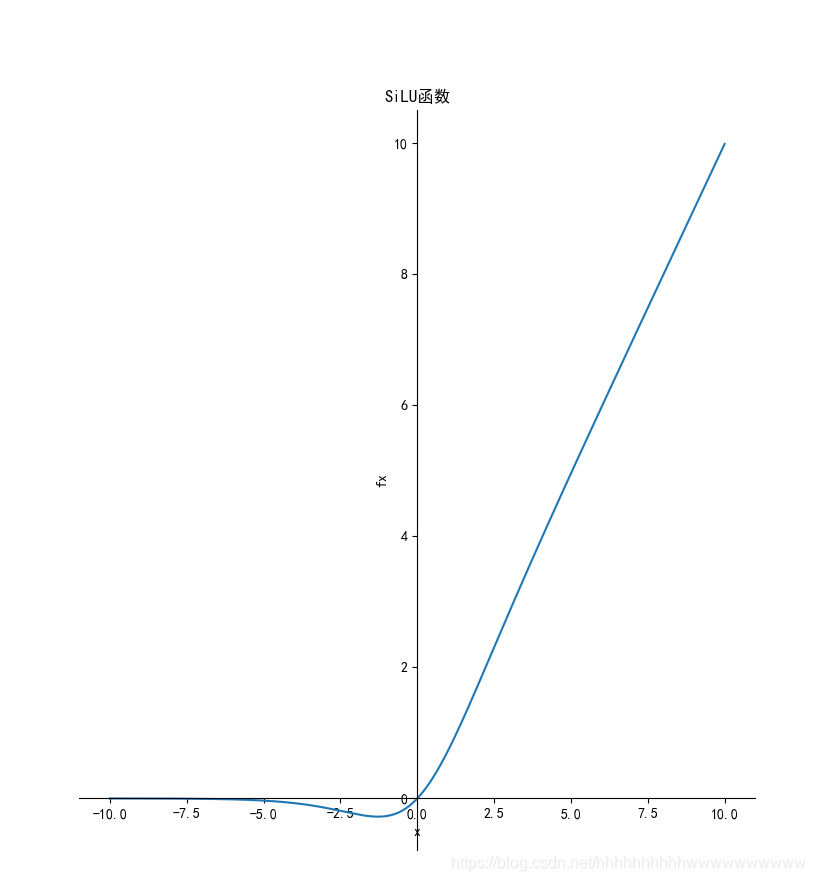

1.11 SiLU

1.11.1 Function

$$f(x)=x\times\mathrm{sigmoid}(x)$$

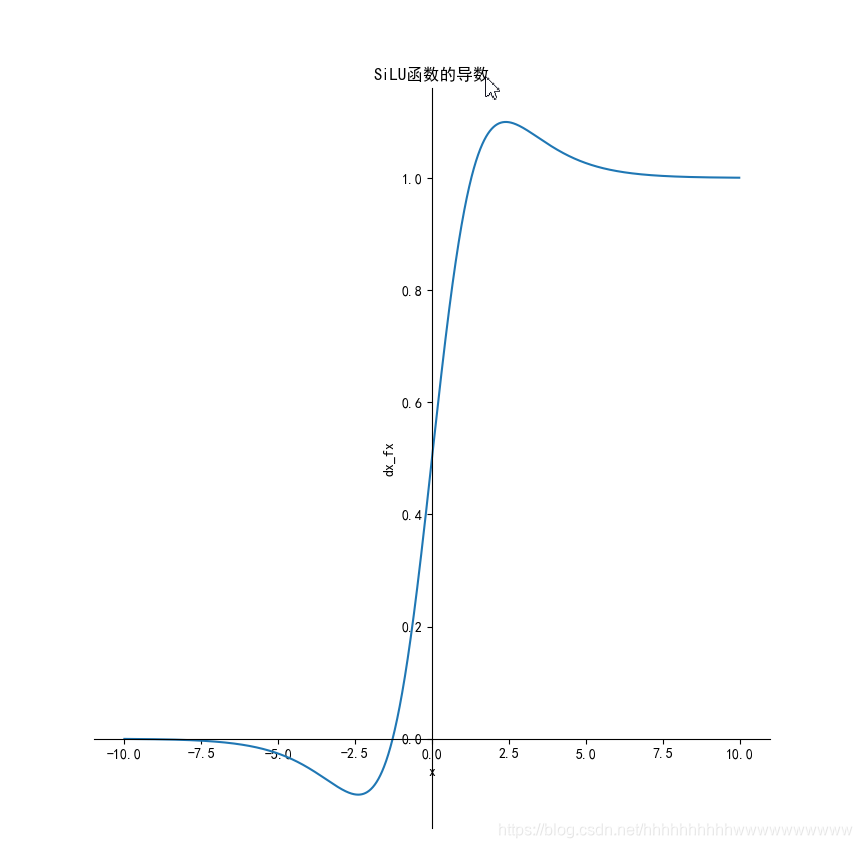

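1.11.2 Derivative

Derivation:

$$\begin{aligned}
f'(x)&=(x\cdot\mathrm{sigmoid}(x))'
=\mathrm{sigmoid}(x)+x\cdot\mathrm{sigmoid}(x)\,(1-\mathrm{sigmoid}(x))\\
&=\mathrm{sigmoid}(x)+x\cdot\mathrm{sigmoid}(x)-x\cdot\mathrm{sigmoid}^2(x)
=f(x)+\mathrm{sigmoid}(x)\,(1-f(x))
\end{aligned}$$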

1.11.3 Code implementation

    import numpy as np


    def sigmoid(x):
        """sigmoid function."""
        return 1 / (1 + np.exp(-x))


    class SILU(object):
        def __call__(self, x):
            return x * sigmoid(x)

        def gradient(self, x):
            # f'(x) = f(x) + sigmoid(x) * (1 - f(x))
            fx = self.__call__(x)
            return fx + sigmoid(x) * (1 - fx)
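Comparing the formulas in 1.9 and 1.11, SiLU is Swish with $\beta=1$. The sketch below confirms this for both the function value and the derivative. The plain-function names are illustrative stand-ins for the classes.

```python
import numpy as np


def sigmoid(x):
    return 1 / (1 + np.exp(-x))


def silu(x):
    return x * sigmoid(x)


def silu_gradient(x):
    fx = silu(x)
    return fx + sigmoid(x) * (1 - fx)


def swish(x, beta):
    return x * sigmoid(beta * x)


def swish_gradient(x, beta):
    fx = swish(x, beta)
    return beta * fx + sigmoid(beta * x) * (1 - beta * fx)


x = np.linspace(-4.0, 4.0, 9)
same_value = np.allclose(silu(x), swish(x, 1.0))
same_gradient = np.allclose(silu_gradient(x), swish_gradient(x, 1.0))
print(same_value, same_gradient)
```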

1.12 Complete code

Create a file named activation_function.py and copy the code below into it. That completes the activation functions.

    import numpy as np


    class Sigmoid():
        def __call__(self, x):
            return 1 / (1 + np.exp(-x))

        def gradient(self, x):
            # f'(x) = f(x) * (1 - f(x))
            s = self.__call__(x)
            return s * (1 - s)


    class Softmax():
        def __call__(self, x):
            # Subtract the max along the last axis before exponentiating to avoid overflow
            e_x = np.exp(x - np.max(x, axis=-1, keepdims=True))
            return e_x / np.sum(e_x, axis=-1, keepdims=True)

        def gradient(self, x):
            # Only the j = i case: p_i * (1 - p_i), the diagonal of the Jacobian
            p = self.__call__(x)
            return p * (1 - p)


    class TanH():
        def __call__(self, x):
            # tanh(x) written as 2 * sigmoid(2x) - 1
            return 2 / (1 + np.exp(-2 * x)) - 1

        def gradient(self, x):
            return 1 - np.power(self.__call__(x), 2)


    class ReLU():
        def __call__(self, x):
            return np.where(x >= 0, x, 0)

        def gradient(self, x):
            # 1 for x > 0, 0 for x <= 0
            return np.where(x > 0, 1, 0)


    class LeakyReLU():
        def __init__(self, alpha=0.2):
            self.alpha = alpha

        def __call__(self, x):
            return np.where(x >= 0, x, self.alpha * x)

        def gradient(self, x):
            # 1 for x > 0, alpha for x <= 0
            return np.where(x > 0, 1, self.alpha)


    class ELU(object):
        def __init__(self, alpha=0.1):
            self.alpha = alpha

        def __call__(self, x):
            return np.where(x >= 0.0, x, self.alpha * (np.exp(x) - 1))

        def gradient(self, x):
            # For x < 0 the derivative is f(x) + alpha, which equals alpha * e^x
            return np.where(x >= 0.0, 1, self.__call__(x) + self.alpha)


    class SELU():
        def __init__(self):
            # Fixed SELU constants: alpha and the scale factor lambda
            self.alpha = 1.6732632423543772848170429916717
            self.scale = 1.0507009873554804934193349852946

        def __call__(self, x):
            return self.scale * np.where(x >= 0.0, x, self.alpha * (np.exp(x) - 1))

        def gradient(self, x):
            # lambda for x > 0, lambda * alpha * e^x for x <= 0
            return self.scale * np.where(x > 0.0, 1, self.alpha * np.exp(x))


    class SoftPlus(object):
        def __call__(self, x):
            return np.log(1 + np.exp(x))

        def gradient(self, x):
            # The derivative of softplus is the sigmoid function
            return 1 / (1 + np.exp(-x))


    class Swish(object):
        def __init__(self, b):
            # b is the beta parameter in f(x) = x * sigmoid(beta * x)
            self.b = b

        def __call__(self, x):
            return x * (np.exp(self.b * x) / (np.exp(self.b * x) + 1))

        def gradient(self, x):
            # f'(x) = beta * f(x) + sigmoid(beta * x) * (1 - beta * f(x))
            sig = 1 / (1 + np.exp(-self.b * x))
            fx = x * sig
            return self.b * fx + sig * (1 - self.b * fx)


    def sech(x):
        """sech function."""
        return 2 / (np.exp(x) + np.exp(-x))


    def sigmoid(x):
        """sigmoid function."""
        return 1 / (1 + np.exp(-x))


    def soft_plus(x):
        """softplus function."""
        return np.log(1 + np.exp(x))


    def tan_h(x):
        """tanh function."""
        return (np.exp(x) - np.exp(-x)) / (np.exp(x) + np.exp(-x))


    class Mish:
        def __call__(self, x):
            return x * tan_h(soft_plus(x))

        def gradient(self, x):
            # sech^2(softplus(x)) * x * sigmoid(x) + tanh(softplus(x))
            sp = soft_plus(x)
            return sech(sp) * sech(sp) * x * sigmoid(x) + tan_h(sp)


    class SILU(object):
        def __call__(self, x):
            return x * sigmoid(x)

        def gradient(self, x):
            # f'(x) = f(x) + sigmoid(x) * (1 - f(x))
            fx = self.__call__(x)
            return fx + sigmoid(x) * (1 - fx)

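Reference formulas

$$(C)'=0$$

$$(a^x)'=a^x\ln a$$

$$(x^\mu)'=\mu x^{\mu-1}$$

$$(e^x)'=e^x$$

$$(\sin x)'=\cos x$$

$$(\log_a x)'=\frac{1}{x\ln a}$$

$$(\cos x)'=-\sin x$$

$$(\ln x)'=\frac{1}{x}$$

$$(\tan x)'=\sec^2 x$$

$$(\arcsin x)'=\frac{1}{\sqrt{1-x^2}}$$

$$(\cot x)'=-\csc^2 x$$

$$(\arccos x)'=-\frac{1}{\sqrt{1-x^2}}$$

$$(\sec x)'=\sec x\cdot\tan x$$

$$(\arctan x)'=\frac{1}{1+x^2}$$

$$(\csc x)'=-\csc x\cdot\cot x$$

$$(\operatorname{arccot} x)'=-\frac{1}{1+x^2}$$

Hyperbolic sine:

$$\sinh x=\frac{e^x-e^{-x}}{2}$$

Hyperbolic cosine:

$$\cosh x=\frac{e^x+e^{-x}}{2}$$

Hyperbolic tangent:

$$\tanh x=\frac{\sinh x}{\cosh x}=\frac{e^x-e^{-x}}{e^x+e^{-x}}$$

Hyperbolic cotangent:

$$\coth x=\frac{1}{\tanh x}=\frac{e^x+e^{-x}}{e^x-e^{-x}}$$

Hyperbolic secant:

$$\operatorname{sech} x=\frac{1}{\cosh x}=\frac{2}{e^x+e^{-x}}$$

Hyperbolic cosecant:

$$\operatorname{csch} x=\frac{1}{\sinh x}=\frac{2}{e^x-e^{-x}}$$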
