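Managing Hive Metastore Authorization with Ranger

Hive standalone metastore 3.1.2 can run as an independent service and act as the metadata hub for Spark, Flink, Presto, and similar engines. The existing Hive authorization schemes, however, only cover HiveServer2. This article therefore uses Ranger to authorize users who connect to a standalone Hive metastore, closing the gap where the standalone metastore performs no permission checks.

For testing, every step in this article was run on a single CentOS 7.6 host.

Ranger build guide

Prerequisites

CentOS 7.6

JDK 1.8

Maven 3.6.3 (recommended; in my own tests the build fails with 3.8.x)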
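Because the build is sensitive to the Maven version, a quick check before compiling can save a failed run. The following is a minimal sketch; the function names parse_maven_version and is_supported_maven are my own, and the only assumption is that the first line of `mvn -version` starts with "Apache Maven" followed by the version number.

```shell
# Extract the version number (e.g. "3.6.3") from `mvn -version` output.
parse_maven_version() {
  # $1 = full text printed by `mvn -version`
  printf '%s\n' "$1" | sed -n 's/^Apache Maven \([0-9][0-9.]*\).*/\1/p' | head -n 1
}

# Succeed only for the 3.6.x line that this article reports as working.
is_supported_maven() {
  case "$(parse_maven_version "$1")" in
    3.6.*) return 0 ;;
    *)     return 1 ;;
  esac
}

# Example call (not executed here):
#   is_supported_maven "$(mvn -version 2>/dev/null)" \
#     || echo "warning: this build was only verified with Maven 3.6.x" >&2
```

The check only warns about versions outside 3.6.x; it does not claim that every other version fails.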

Download the source

wget https://dlcdn.apache.org/ranger/2.1.0/apache-ranger-2.1.0.tar.gz

Build the source

tar zxvf apache-ranger-2.1.0.tar.gz

cd apache-ranger-2.1.0

mvn clean compile install -DskipTests -Drat.skip=true

Configuring and starting Ranger

Extract the archives

Copy target/ranger-2.1.0-admin.tar.gz and target/ranger-2.1.0-usersync.tar.gz to /data/ranger and extract them:

tar zxvf ranger-2.1.0-admin.tar.gz

tar zxvf ranger-2.1.0-usersync.tar.gz

This yields:

ranger-2.1.0-admin

ranger-2.1.0-usersync

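Configure Solr

Edit the Solr configuration that ships with Ranger. SOLR_DOWNLOAD_URL is the download address of the Solr binary package. For the directory-related settings, make sure the directories exist.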

vim ranger-2.1.0-admin/contrib/solr_for_audit_setup/install.properties

SOLR_INSTALL=true

SOLR_DOWNLOAD_URL=http://home.lrting.top:11180/downloads/solr-7.7.3.tgz

SOLR_INSTALL_FOLDER=/data/solr

SOLR_RANGER_HOME=/data/solr/ranger_audit_server

SOLR_RANGER_PORT=6083

SOLR_RANGER_DATA_FOLDER=/data/solr/ranger_audit_server/data
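Before running the setup script, it can help to print what install.properties currently says about the directory settings and whether each path is present. The sketch below only reads and reports; it creates nothing. The function names prop_value and report_solr_dirs are my own and are not part of Ranger.

```shell
# Print the value of KEY from a key=value properties file (first match wins).
prop_value() {
  # $1 = properties file, $2 = key
  sed -n "s/^$2=//p" "$1" | head -n 1
}

# Report each directory-related Solr setting and whether the path exists.
report_solr_dirs() {
  # $1 = path to install.properties
  for key in SOLR_INSTALL_FOLDER SOLR_RANGER_HOME SOLR_RANGER_DATA_FOLDER; do
    dir="$(prop_value "$1" "$key")"
    if [ -d "$dir" ]; then state=exists; else state=missing; fi
    printf '%s=%s (%s)\n' "$key" "$dir" "$state"
  done
}

# Example call (not executed here):
#   report_solr_dirs ranger-2.1.0-admin/contrib/solr_for_audit_setup/install.properties
```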

Run the Solr setup script:

ranger-2.1.0-admin/contrib/solr_for_audit_setup/setup.sh

Start Solr

/data/solr/ranger_audit_server/scripts/start_solr.sh

Configure ranger-admin

Edit the configuration file. SQL_CONNECTOR_JAR is the path to the MySQL JDBC driver jar; set it to match your host.

vim ranger-2.1.0-admin/install.properties

setup_mode=SeparateDBA

SQL_CONNECTOR_JAR=/usr/share/java/mysql-connector-java.jar

db_root_user=root

db_root_password=password

db_host=192.168.1.3

# The ranger database must be created in MySQL beforehand.

db_name=ranger

db_user=root

db_password=password

audit_store=solr

audit_solr_urls=http://hadoop:6083/solr/ranger_audits

audit_solr_user=solr
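The db_name setting above points at a database that has to exist before setup.sh runs. A minimal sketch for generating the statement is shown below; create_db_sql is a hypothetical helper of mine, and the host and user in the example call are simply the values from the configuration above.

```shell
# Print a CREATE DATABASE statement for a MySQL database name.
# Names are restricted to letters, digits and underscores so the
# identifier can be safely wrapped in backticks.
create_db_sql() {
  # $1 = database name
  case "$1" in
    ''|*[!A-Za-z0-9_]*) echo "invalid database name: $1" >&2; return 1 ;;
  esac
  printf 'CREATE DATABASE IF NOT EXISTS `%s`;\n' "$1"
}

# Example call (not executed here; prompts for the MySQL password):
#   create_db_sql ranger | mysql -h 192.168.1.3 -u root -p
```

The same helper can be reused for any other database that has to exist before a setup step.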

Initialize the database

ranger-2.1.0-admin/setup.sh

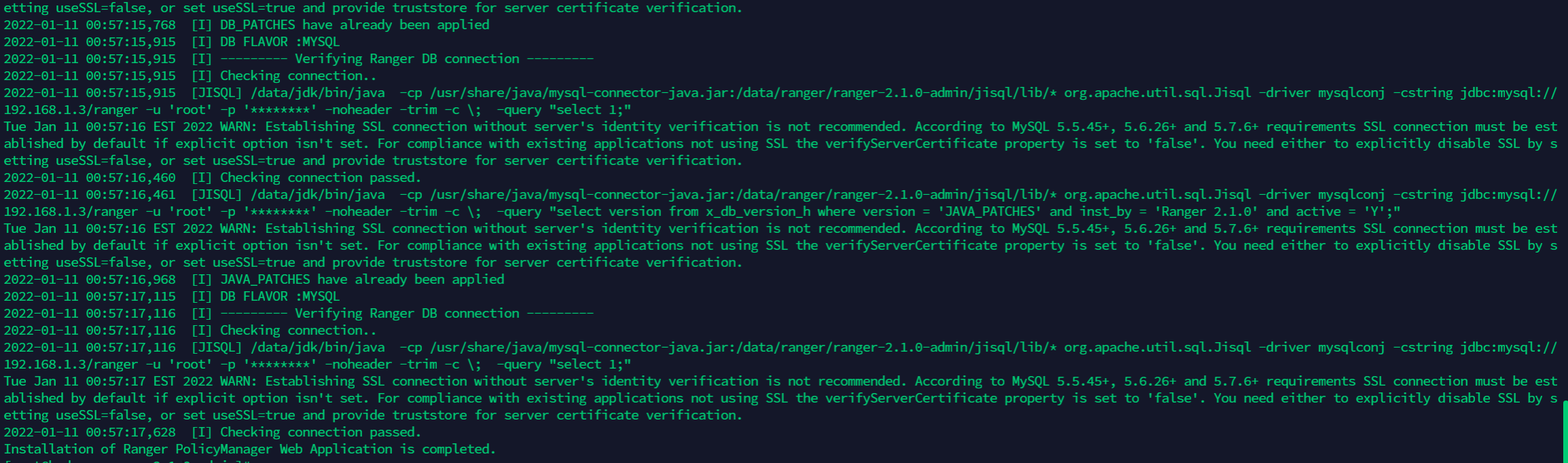

得到

Start Ranger Admin

ranger-2.1.0-admin/ews/start-ranger-admin.sh

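Verify the Ranger Admin process

Open port 6080 on this host in a browser.

The default username and password are both admin. To choose your own password, set the rangerAdmin_password parameter in install.properties.

The Ranger login page should appear.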
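If no browser is at hand, the port can also be probed from the shell. This is a rough sketch that relies on bash's /dev/tcp pseudo-device and the coreutils timeout command; port_open and wait_for_port are names I made up, and a successful TCP connection only shows that something is listening, not that Ranger Admin is fully initialized.

```shell
# Return 0 if a TCP connection to host:port succeeds within the timeout.
port_open() {
  # $1 = host, $2 = port, $3 = timeout in seconds (default 3)
  timeout "${3:-3}" bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null
}

# Poll once per second until the port opens or the attempts run out.
wait_for_port() {
  # $1 = host, $2 = port, $3 = number of attempts (default 30)
  i=0
  while [ "$i" -lt "${3:-30}" ]; do
    port_open "$1" "$2" && return 0
    i=$((i + 1))
    sleep 1
  done
  return 1
}

# Example call (not executed here):
#   wait_for_port localhost 6080 60 && echo "Ranger Admin port is open"
```

The same helper works for any other service port in this setup.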

Configure Ranger Usersync

vim ranger-2.1.0-usersync/install.properties

Edit the configuration file:

POLICY_MGR_URL = http://hadoop:6080

The other settings can stay unchanged.

Run the setup:

ranger-2.1.0-usersync/setup.sh

Start ranger-2.1.0-usersync:

ranger-2.1.0-usersync/start

Installing the hive-standalone-metastore service

The hive-standalone-metastore service depends on a Hadoop environment, so HADOOP_HOME must be configured:

cd /data

wget https://dlcdn.apache.org/hadoop/common/hadoop-3.2.2/hadoop-3.2.2.tar.gz

tar zxvf hadoop-3.2.2.tar.gz

ln -s hadoop-3.2.2 hadoop

Edit /etc/profile and add:

export HADOOP_HOME=/data/hadoop

Apply the variable:

source /etc/profile

Download the hive-standalone-metastore package:

cd /data

wget https://repo1.maven.org/maven2/org/apache/hive/hive-standalone-metastore/3.1.2/hive-standalone-metastore-3.1.2-bin.tar.gz

tar zxvf hive-standalone-metastore-3.1.2-bin.tar.gz

This yields:

/data/apache-hive-metastore-3.1.2-bin

Go to the lib directory and download the MySQL JDBC driver:

cd /data/apache-hive-metastore-3.1.2-bin/lib

wget https://repo1.maven.org/maven2/mysql/mysql-connector-java/5.1.49/mysql-connector-java-5.1.49.jar

Download hive-exec-3.1.2.jar:

wget https://repo1.maven.org/maven2/org/apache/hive/hive-exec/3.1.2/hive-exec-3.1.2.jar

Delete the bundled guava jar and replace it with the guava jar that ships with Hadoop:

rm guava-*

cp /data/hadoop-3.2.2/share/hadoop/common/lib/guava-27.0-jre.jar .
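Two different guava versions on one classpath are a common cause of NoSuchMethodError at startup, which is presumably why the jar is swapped here. A small sanity check after the swap is sketched below; list_guava_jars and has_single_guava are hypothetical helper names of mine.

```shell
# List the guava jars found directly inside a lib directory, one per line.
list_guava_jars() {
  # $1 = lib directory
  find "$1" -maxdepth 1 -name 'guava-*.jar' | sort
}

# Succeed only when exactly one guava jar is present.
has_single_guava() {
  # $1 = lib directory
  [ "$(list_guava_jars "$1" | wc -l | tr -d ' ')" = "1" ]
}

# Example call (not executed here):
#   has_single_guava /data/apache-hive-metastore-3.1.2-bin/lib \
#     || echo "expected exactly one guava jar in lib" >&2
```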

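The database initialization for the Hive metastore is performed after the ranger-metastore-plugin is installed.

Installing the ranger-metastore-plugin

Download, build, and install the plugin

Plugin repository: https://git.lrting.top/xiaozhch5/ranger-metastore-plugin.git

Build command: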

mvn clean compile package install assembly:assembly -DskipTests

The build produces ranger-metastore-plugin-2.1.0-metastore-plugin.zip in the target directory. Copy it to /data/ranger and unzip it:

unzip ranger-metastore-plugin-2.1.0-metastore-plugin.zip

This yields the ranger-metastore-plugin directory.

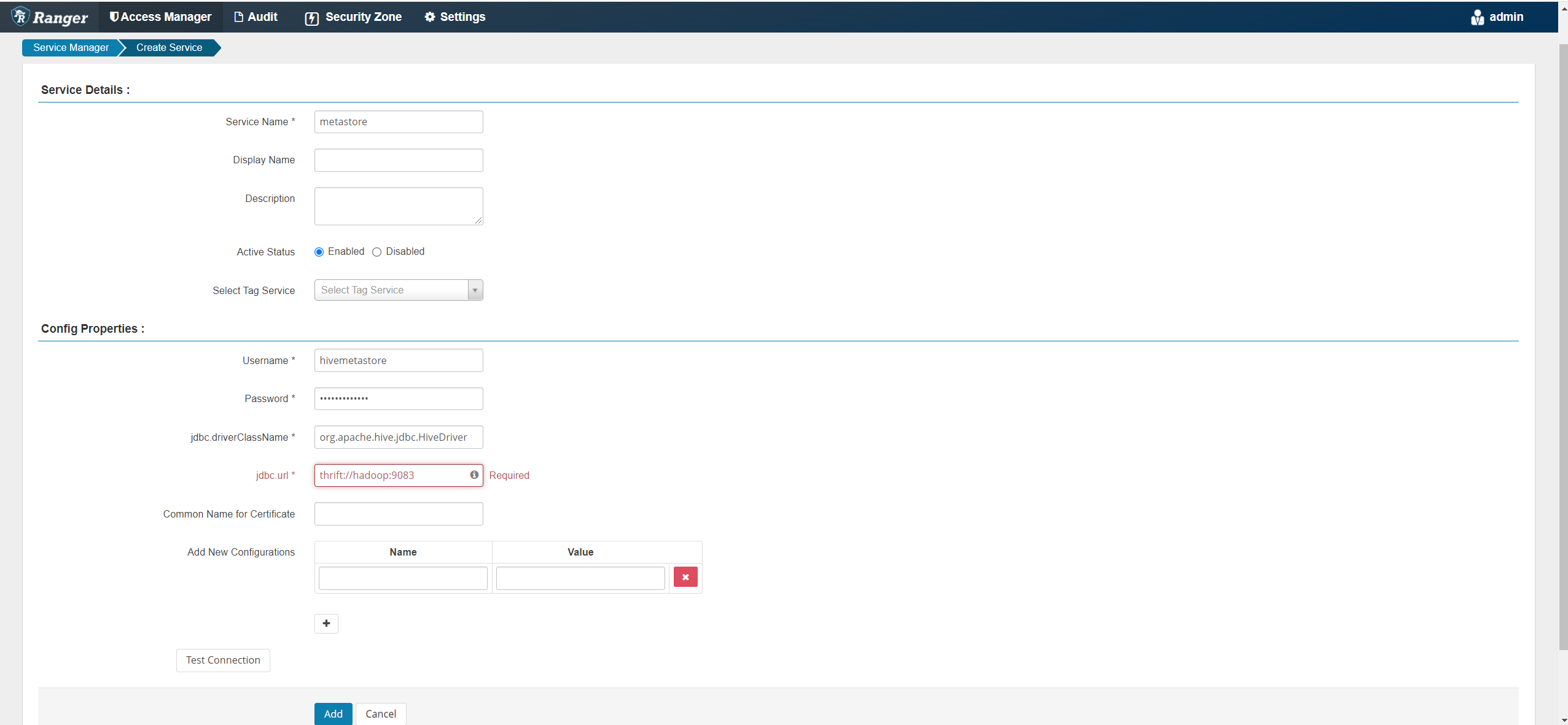

Edit the configuration file. It is best to leave REPOSITORY_NAME=metastore as it is: the same name is needed later when the metastore service is created in the Ranger UI.

POLICY_MGR_URL=http://hadoop:6080

REPOSITORY_NAME=metastore

COMPONENT_INSTALL_DIR_NAME=/data/apache-hive-metastore-3.1.2-bin
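When the same settings have to be applied repeatedly, editing by hand gets tedious. The following sketch sets a key in a key=value properties file in place; set_prop is my own helper, it assumes GNU sed (as found on CentOS), and it assumes values contain neither `|` nor `&`, which holds for the three values above.

```shell
# Set KEY=VALUE in a properties file, replacing an existing line
# or appending a new one when the key is absent.
set_prop() {
  # $1 = file, $2 = key, $3 = value (must not contain '|' or '&')
  if grep -q "^$2=" "$1"; then
    sed -i "s|^$2=.*|$2=$3|" "$1"
  else
    printf '%s=%s\n' "$2" "$3" >> "$1"
  fi
}

# Example calls (not executed here; the file name is an assumption):
#   set_prop install.properties POLICY_MGR_URL http://hadoop:6080
#   set_prop install.properties REPOSITORY_NAME metastore
#   set_prop install.properties COMPONENT_INSTALL_DIR_NAME /data/apache-hive-metastore-3.1.2-bin
```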

Enable the ranger-metastore-plugin:

./enable-metastore-plugin.sh

Output:

[root@hadoop ranger-metastore-plugin]# ./enable-metastore-plugin.sh

Custom user is available, using custom user and default group.

+ Tue Jan 11 01:27:50 EST 2022 : metastore: lib folder=/data/apache-hive-metastore-3.1.2-bin/lib conf folder=/data/apache-hive-metastore-3.1.2-bin/conf

+ Tue Jan 11 01:27:50 EST 2022 : Creating default file from [/data/ranger/ranger-metastore-plugin/install/conf.templates/default/configuration.xml] for [/data/apache-hive-metastore-3.1.2-bin/conf/hiveserver2-site.xml] ..

+ Tue Jan 11 01:27:50 EST 2022 : Saving current config file: /data/apache-hive-metastore-3.1.2-bin/conf/hiveserver2-site.xml to /data/apache-hive-metastore-3.1.2-bin/conf/.hiveserver2-site.xml.20220111-012750 ...

+ Tue Jan 11 01:27:50 EST 2022 : Creating default file from [/data/ranger/ranger-metastore-plugin/install/conf.templates/default/configuration.xml] for [/data/apache-hive-metastore-3.1.2-bin/conf/hiveserver-site.xml] ..

+ Tue Jan 11 01:27:50 EST 2022 : Saving current config file: /data/apache-hive-metastore-3.1.2-bin/conf/hiveserver-site.xml to /data/apache-hive-metastore-3.1.2-bin/conf/.hiveserver-site.xml.20220111-012750 ...

+ Tue Jan 11 01:27:50 EST 2022 : Creating default file from [/data/ranger/ranger-metastore-plugin/install/conf.templates/default/configuration.xml] for [/data/apache-hive-metastore-3.1.2-bin/conf/hive-site.xml] ..

+ Tue Jan 11 01:27:50 EST 2022 : Saving current config file: /data/apache-hive-metastore-3.1.2-bin/conf/hive-site.xml to /data/apache-hive-metastore-3.1.2-bin/conf/.hive-site.xml.20220111-012750 ...

+ Tue Jan 11 01:27:50 EST 2022 : Saving current config file: /data/apache-hive-metastore-3.1.2-bin/conf/ranger-hive-audit.xml to /data/apache-hive-metastore-3.1.2-bin/conf/.ranger-hive-audit.xml.20220111-012750 ...

+ Tue Jan 11 01:27:50 EST 2022 : Saving current config file: /data/apache-hive-metastore-3.1.2-bin/conf/ranger-hive-security.xml to /data/apache-hive-metastore-3.1.2-bin/conf/.ranger-hive-security.xml.20220111-012750 ...

+ Tue Jan 11 01:27:50 EST 2022 : Saving current config file: /data/apache-hive-metastore-3.1.2-bin/conf/ranger-policymgr-ssl.xml to /data/apache-hive-metastore-3.1.2-bin/conf/.ranger-policymgr-ssl.xml.20220111-012750 ...

+ Tue Jan 11 01:27:51 EST 2022 : Saving current JCE file: /etc/ranger/metastore/cred.jceks to /etc/ranger/metastore/.cred.jceks.20220111012751 ...

Ranger Plugin for metastore has been enabled. Please restart metastore to ensure that changes are effective.

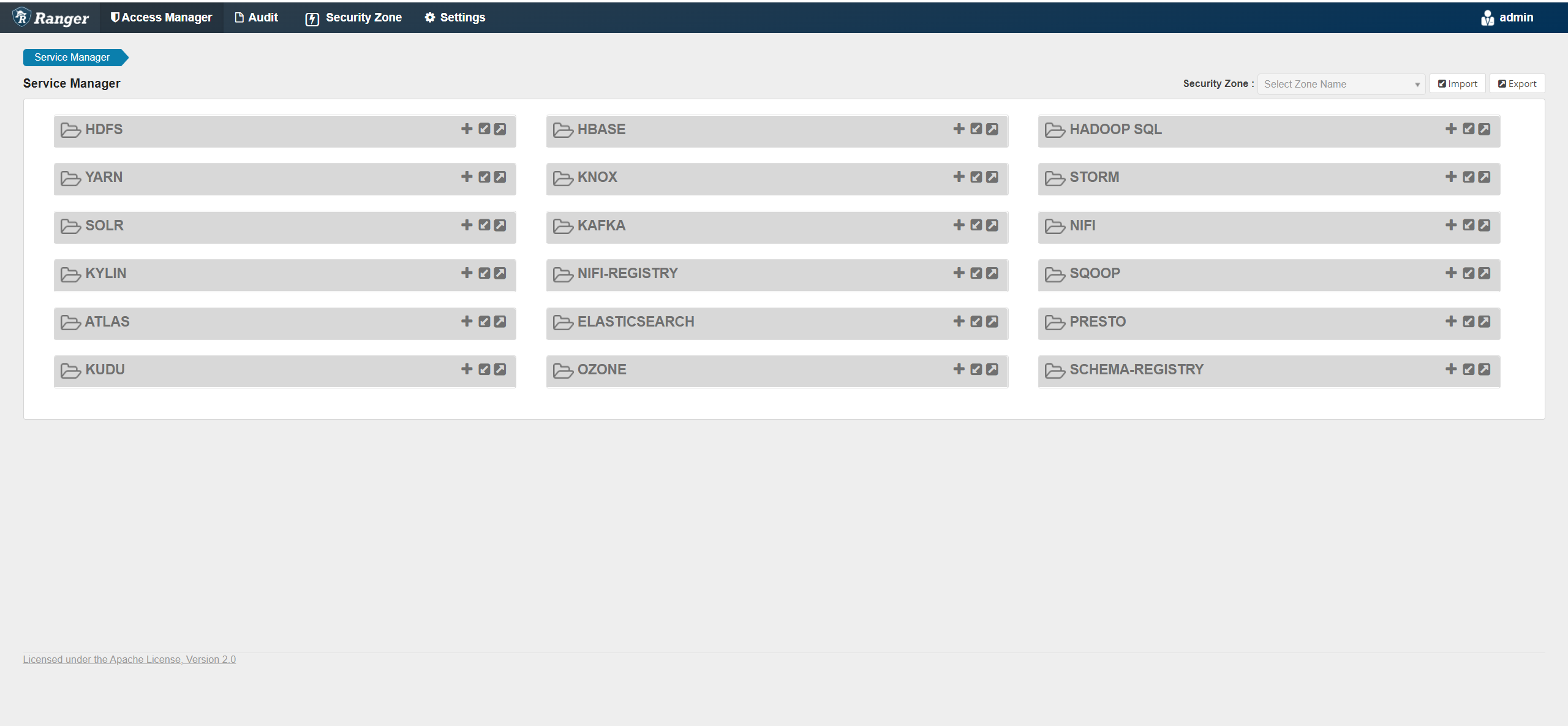

Return to the Ranger admin UI.

Under HADOOP_SQL, add a service named metastore (the name must match REPOSITORY_NAME above).

Initializing the Hive metastore schema

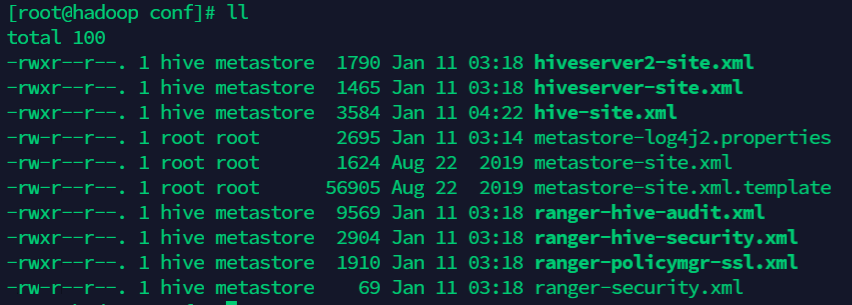

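After the ranger-metastore-plugin is enabled, the following files are generated under /data/apache-hive-metastore-3.1.2-bin/conf.

Now edit hive-site.xml in preparation for initializing the Hive metastore schema.

First create the /usr/hive/warehouse directory, and make sure the user that starts the Hive metastore service has write permission on it:

mkdir -p /usr/hive/warehouse

Configure hive-site.xml as follows, with MySQL as the backing store for the Hive metastore metadata: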

<configuration>

<property>

<name>hive.metastore.warehouse.dir</name>

<value>file:///usr/hive/warehouse</value>

</property>

<property>

<name>hive.metastore.local</name>

<value>true</value>

</property>

<property>

<name>hive.security.authorization.enabled</name>

<value>true</value>

</property>

<property>

<name>hive.security.authorization.manager</name>

<value>org.apache.ranger.authorization.hive.authorizer.RangerHiveAuthorizerFactory</value>

</property>

<property>

<name>hive.metastore.pre.event.listeners</name>

<value>org.apache.ranger.authorization.hive.authorizer.RangerHiveMetastoreAuthorizer</value>

</property>

<property>

<name>hive.metastore.event.listeners</name>

<value>org.apache.ranger.authorization.hive.authorizer.RangerHiveMetastorePrivilegeHandler</value>

</property>

<property>

<name>hive.conf.restricted.list</name>

<value>hive.security.authorization.enabled,hive.security.authorization.manager,hive.security.authenticator.manager</value>

</property>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://192.168.1.3:3306/metastore_2?useSSL=false&amp;serverTimezone=UTC</value>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>password</value>

</property>

<property>

<name>hive.metastore.event.db.notification.api.auth</name>

<value>false</value>

</property>

<property>

<name>metastore.thrift.uris</name>

<value>thrift://localhost:9083</value>

<description>Thrift URI for the remote metastore. Used by metastore client to connect to remote metastore.</description>

</property>

</configuration>
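One detail that is easy to miss in hive-site.xml: the file is XML, so a literal & inside a property value (typical in JDBC URLs with several parameters) has to be written as &amp;amp; or the file will not parse. A minimal sketch for escaping a value before pasting it in is shown below; xml_escape is a hypothetical helper of mine and covers only the three characters &, < and >.

```shell
# Escape the characters that are not allowed verbatim in XML text.
# The ampersand is replaced first so later entities are not double-escaped.
xml_escape() {
  # $1 = raw value
  printf '%s' "$1" | sed -e 's/&/\&amp;/g' -e 's/</\&lt;/g' -e 's/>/\&gt;/g'
}

# Example call (not executed here):
#   xml_escape 'jdbc:mysql://192.168.1.3:3306/metastore_2?useSSL=false&serverTimezone=UTC'
```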

Before running the initialization, create the metastore_2 database in MySQL.

Initialize the schema:

/data/apache-hive-metastore-3.1.2-bin/bin/schematool -initSchema -dbType mysql

With the steps above, the Hive metastore schema is initialized. The next step is to start the Hive metastore and verify the setup.

Start the Hive metastore:

/data/apache-hive-metastore-3.1.2-bin/bin/start-metastore

Verifying Ranger authorization

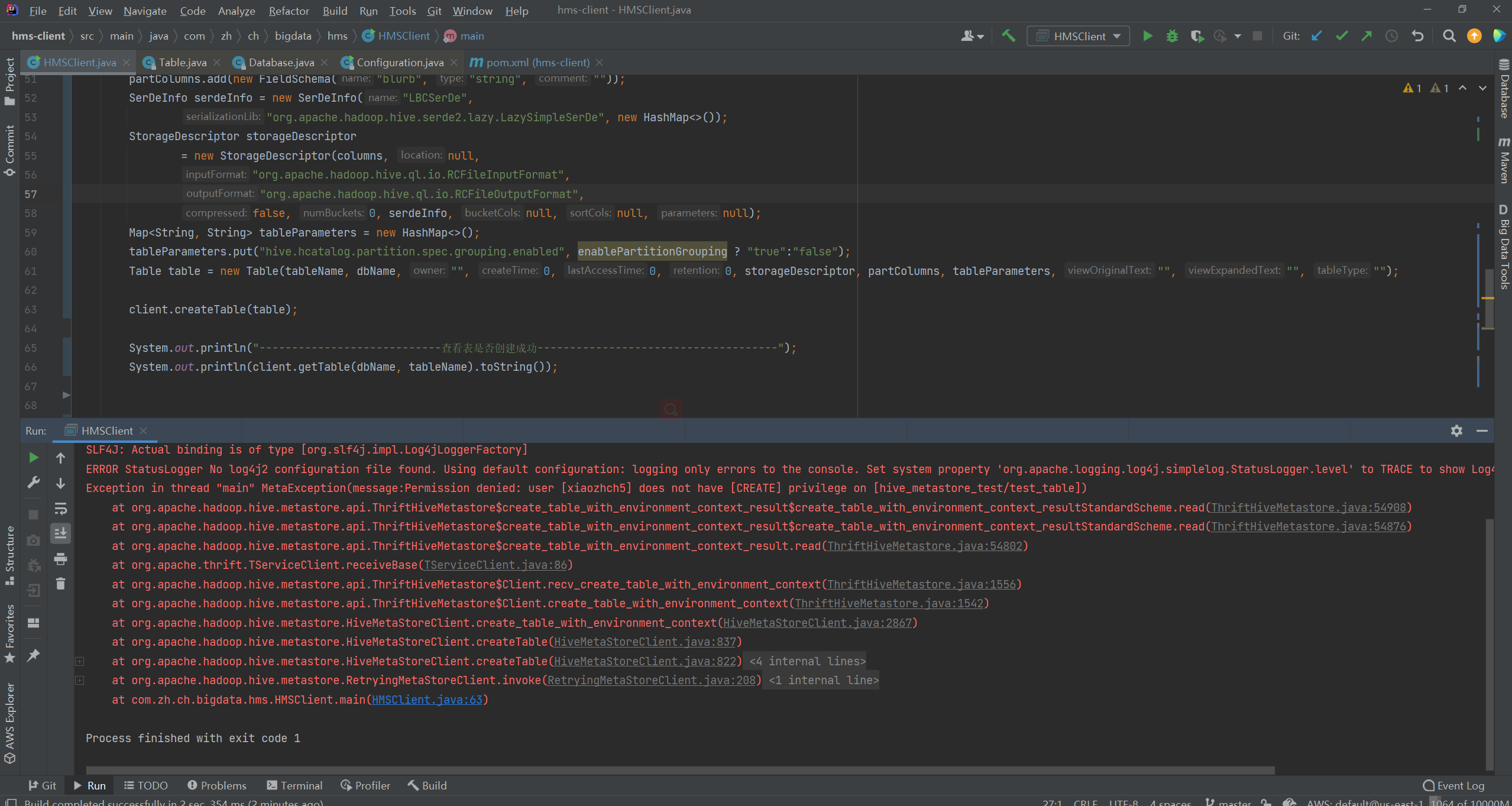

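Next, create a table in the Hive metastore through the Java API. For more on working with the Hive metastore from Java, see the article "Retrieving Metadata from Hive Metastore through the Java API".

The core code is as follows: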

package com.zh.ch.bigdata.hms;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.hive.metastore.IMetaStoreClient;

import org.apache.hadoop.hive.metastore.RetryingMetaStoreClient;

import org.apache.hadoop.hive.metastore.api.*;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import java.util.ArrayList;

import java.util.HashMap;

import java.util.List;

import java.util.Map;

public class HMSClient {

public static final Logger LOGGER = LoggerFactory.getLogger(HMSClient.class);

/**

* Initializes the HMS connection.

* @param conf org.apache.hadoop.conf.Configuration

* @return IMetaStoreClient

* @throws MetaException if the connection cannot be established

*/

public static IMetaStoreClient init(Configuration conf) throws MetaException {

try {

return RetryingMetaStoreClient.getProxy(conf, false);

} catch (MetaException e) {

LOGGER.error("Failed to connect to HMS", e);

throw e;

}

}

public static void main(String[] args) throws Exception {

Configuration conf = new Configuration();

conf.set("hive.metastore.uris", "thrift://192.168.241.134:9083");

IMetaStoreClient client = HMSClient.init(conf);

boolean enablePartitionGrouping = true;

String tableName = "test_table";

String dbName = "hive_metastore_test";

List<FieldSchema> columns = new ArrayList<>();

columns.add(new FieldSchema("foo", "string", ""));

columns.add(new FieldSchema("bar", "string", ""));

List<FieldSchema> partColumns = new ArrayList<>();

partColumns.add(new FieldSchema("dt", "string", ""));

partColumns.add(new FieldSchema("blurb", "string", ""));

SerDeInfo serdeInfo = new SerDeInfo("LBCSerDe",

"org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe", new HashMap<>());

StorageDescriptor storageDescriptor

= new StorageDescriptor(columns, null,

"org.apache.hadoop.hive.ql.io.RCFileInputFormat",

"org.apache.hadoop.hive.ql.io.RCFileOutputFormat",

false, 0, serdeInfo, null, null, null);

Map<String, String> tableParameters = new HashMap<>();

tableParameters.put("hive.hcatalog.partition.spec.grouping.enabled", enablePartitionGrouping ? "true":"false");

Table table = new Table(tableName, dbName, "", 0, 0, 0, storageDescriptor, partColumns, tableParameters, "", "", "");

client.createTable(table);

System.out.println("----------------------------Check whether the table was created-------------------------------------");

System.out.println(client.getTable(dbName, tableName).toString());

}

}

As the figure below shows, before any authorization is granted in Ranger, the xiaozhch5 user (my local OS user) cannot create the test_table table above.

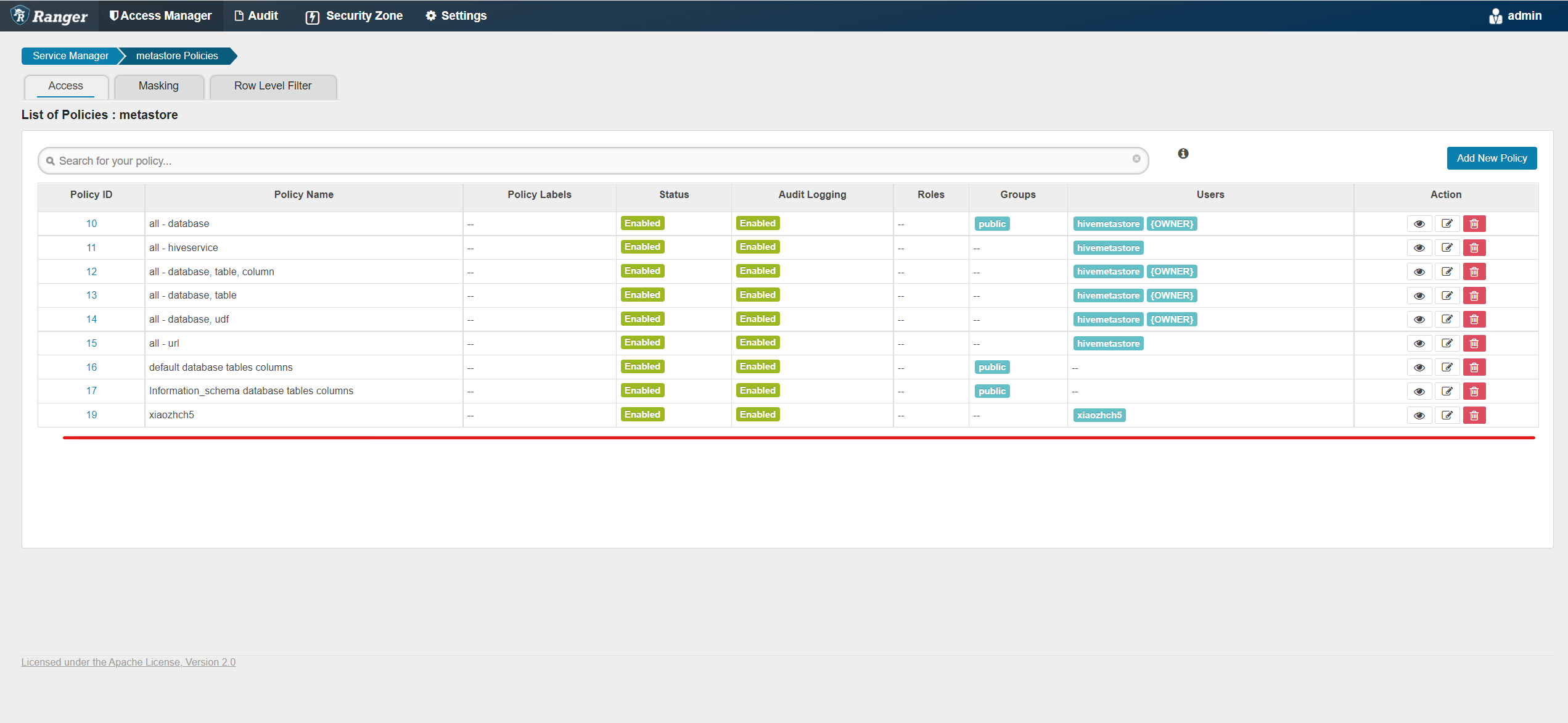

Now grant permissions to the xiaozhch5 user in Ranger:

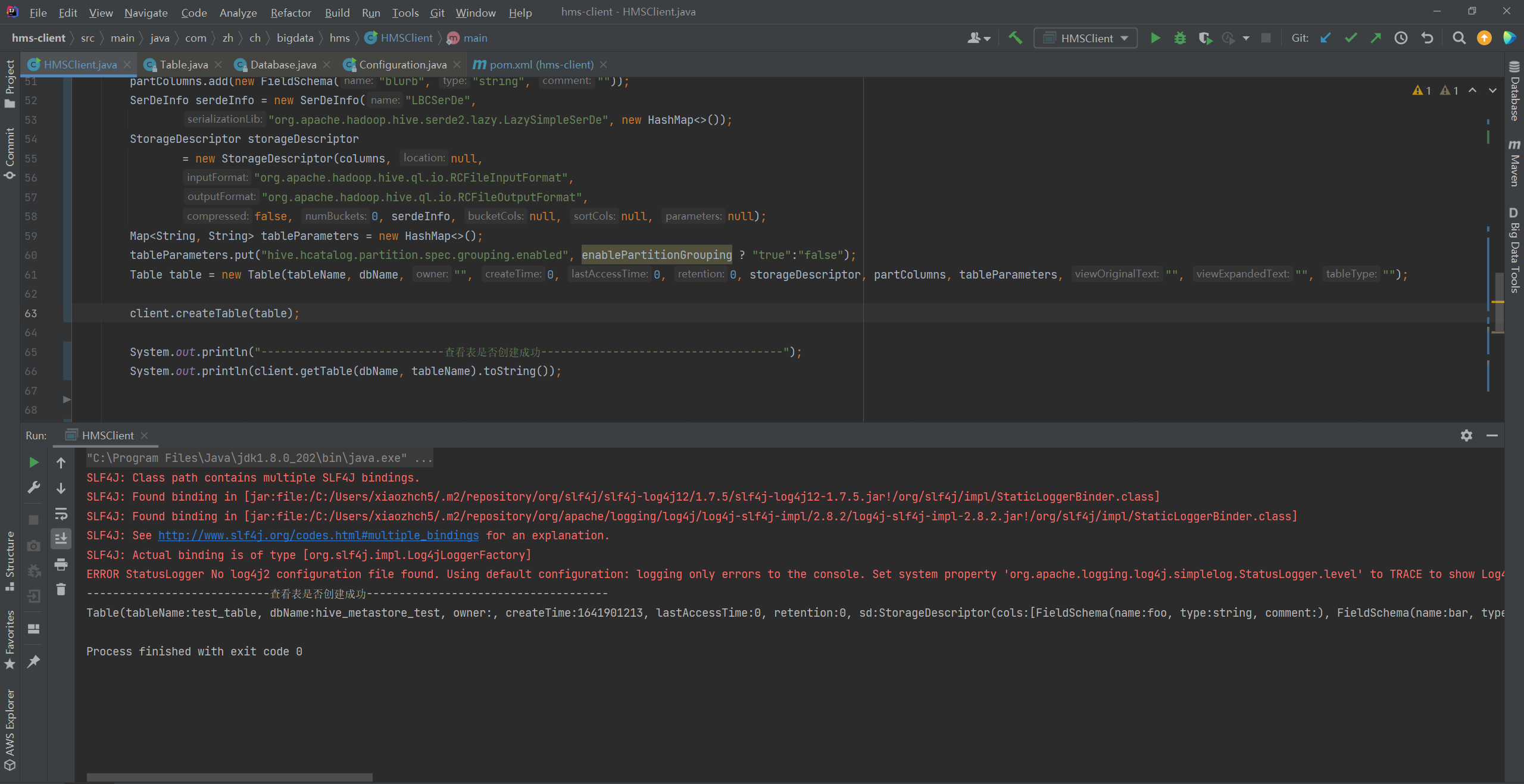

Rerun the Java code that creates the table.

With the Ranger policy in place, the table is created successfully.
