Notes on NetworkPolicy (Network Policies) in Kubernetes

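Preface

- Notes compiled from problems encountered while learning K8s

- This post mainly covers:

- A brief overview of Kubernetes network policy theory

- The two policy directions in K8s, with egress and ingress demos

- Demos of network policy rules using ipBlock, namespaceSelector, and podSelector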

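Muddling along, settling for modest comfort, or rising to great heights: each follows from its own fate, and none is necessarily better than the others. (烽火戏诸侯, 《剑来》)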

Kubernetes Network Policies

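To implement fine-grained network access isolation between containers (in effect, a firewall), Kubernetes introduced the Network Policy mechanism in version 1.3, with development led by SIG-Network. It has since been promoted to the stable networking.k8s.io/v1 API.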

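The main function of Network Policy is to restrict and gate network communication between Pods.

A policy uses Pod labels as query conditions to define which client Pods are allowed to access the target Pods. Rules are allow-lists: once a Pod is selected by a policy, any traffic not explicitly allowed is denied. The query conditions can work at both the Pod and the Namespace level.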

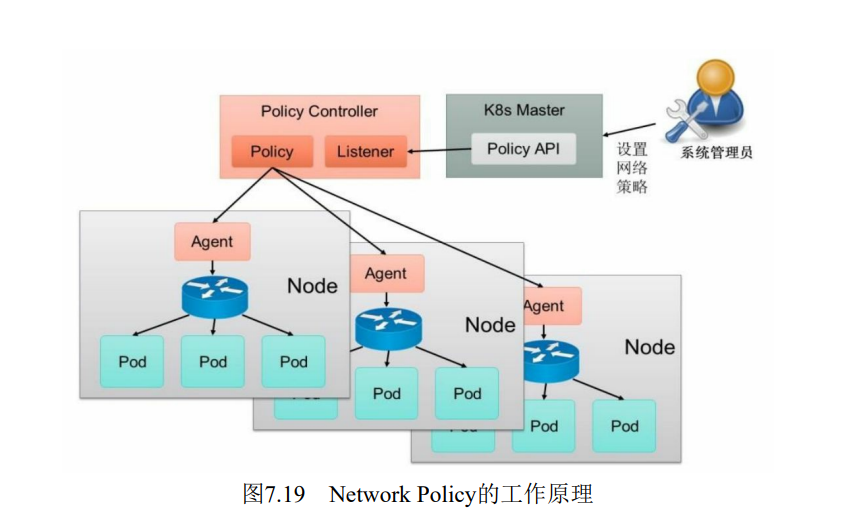

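To support Network Policy, Kubernetes introduced a new resource object, NetworkPolicy, which lets users define access policies between Pods. Defining a policy alone does not isolate anything, however: a policy controller (PolicyController) is also needed to enforce it.

The policy controller is provided by a third-party network component. Open-source projects such as Calico, Cilium, Kube-router, Romana, and Weave Net all implement network policies. The figure in this section illustrates how Network Policy works.

The policy controller has to implement an API listener that watches the NetworkPolicy definitions created by users, and then applies the resulting access rules through an agent on each Node (the agent in turn relies on the CNI network plugin).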

Network Policy Configuration

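Network policies restrict network access to target Pods. By default every Pod accepts all traffic; access to a Pod is restricted only after a NetworkPolicy that selects that Pod has been created. Note that network policies are applied per Pod.

A NetworkPolicy is a namespaced resource, so it only affects Pods in its own namespace. Rules come in two directions:

- ingress: an allow-list of inbound sources permitted to reach the target Pods

- egress: an allow-list of outbound destinations the target Pods may reach

Peers can be matched in three ways (note that separate entries in a from/to list are combined with OR; to combine conditions with AND, put namespaceSelector and podSelector in the same list entry instead of in separate entries):

- IP block (ipBlock)

- Namespace selector (namespaceSelector)

- Pod selector (podSelector)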
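The OR versus AND behaviour of peer selectors is easy to get wrong, so here is a minimal sketch of the two forms. These are fragments of spec, not complete manifests, and the labels (project: myproject, role: frontend) are illustrative assumptions.

```yaml
# Variant 1 (OR): two separate entries under "from".
# Allows any Pod in namespaces labeled project=myproject,
# plus Pods labeled role=frontend in the policy's own namespace.
ingress:
- from:
  - namespaceSelector:
      matchLabels:
        project: myproject
  - podSelector:
      matchLabels:
        role: frontend
---
# Variant 2 (AND): one entry containing both selectors.
# Allows only Pods labeled role=frontend that also live in
# a namespace labeled project=myproject.
ingress:
- from:
  - namespaceSelector:
      matchLabels:
        project: myproject
    podSelector:
      matchLabels:
        role: frontend
```

The only difference is the leading dash before podSelector. An ipBlock entry cannot be combined with the selectors in this way; it has to stand alone in its own list entry.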

Below is a demo manifest:

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: test-network-policy
  namespace: default
spec:
  podSelector:            # Pods this policy applies to
    matchLabels:
      role: db
  policyTypes:            # policy types: Ingress and/or Egress
  - Ingress
  - Egress
  ingress:                # allow-list of inbound traffic to the target Pods
  - from:                 # only clients matching a "from" entry may reach the ports below
    - ipBlock:            # IP restriction
        cidr: 172.17.0.0/16
        except:           # IP ranges to exclude
        - 172.17.1.0/24
    - namespaceSelector:  # namespace restriction
        matchLabels:
          project: myproject
    - podSelector:        # Pod selector restriction
        matchLabels:
          role: frontend
    ports:                # ports on the target Pods that may be accessed
    - protocol: TCP
      port: 6379
  egress:                 # allow-list of outbound traffic from the target Pods
  - to:                   # target Pods may only reach destinations matching "to", on the ports below
    - ipBlock:
        cidr: 10.0.0.0/24
    ports:                # destination ports that may be accessed
    - protocol: TCP
      port: 5978

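Setting Default Network Policies at the Namespace Level

Default policies can also be defined at the Namespace level, which lets administrators apply a uniform network policy to an entire Namespace.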

Deny all ingress traffic by default

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-ingress
spec:
  podSelector: {}
  policyTypes:
  - Ingress

Allow all ingress traffic by default

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-all-ingress
spec:
  podSelector: {}
  ingress:
  - {}
  policyTypes:
  - Ingress

Deny all egress traffic by default

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-egress
spec:
  podSelector: {}
  policyTypes:
  - Egress

Allow all egress traffic by default

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-all-egress
spec:
  podSelector: {}
  egress:
  - {}
  policyTypes:
  - Egress

Deny all ingress and all egress traffic by default

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-all
spec:
  podSelector: {}
  policyTypes:
  - Ingress
  - Egress

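The Evolution of NetworkPolicy

As a stable feature, SCTP support is enabled by default. To disable SCTP at the cluster level, you (or your cluster administrator) need to pass --feature-gates=SCTPSupport=false,… to the API server to turn off the SCTPSupport feature gate. While the feature gate is enabled, users can set the protocol field of a NetworkPolicy to SCTP (the details vary slightly between versions).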
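As a rough illustration of the protocol field, the sketch below allows inbound SCTP traffic on a single port. The policy name, the app: sctp-server label, and port 7777 are hypothetical, and the CNI plugin in use must itself support SCTP for the rule to have any effect.

```yaml
# Hypothetical example: name, label, and port are assumptions.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-sctp-ingress
spec:
  podSelector:
    matchLabels:
      app: sctp-server
  policyTypes:
  - Ingress
  ingress:
  - ports:              # no "from" entry, so any source may connect on this port
    - protocol: SCTP
      port: 7777
```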

NetworkPolicy in Practice

Environment Setup

First create two Services (SVCs) with no policies applied

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$d=k8s-network-create

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$mkdir $d;cd $d

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]

└─$kubectl create ns liruilong-network-create

namespace/liruilong-network-create created

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]

└─$kubectl config set-context $(kubectl config current-context) --namespace=liruilong-network-create

Context "kubernetes-admin@kubernetes" modified.

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]

└─$kubectl config view | grep namespace

namespace: liruilong-network-create

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]

└─$

First we create two Pods to back the two SVCs

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]

└─$kubectl run pod1 --image=nginx --image-pull-policy=IfNotPresent

pod/pod1 created

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]

└─$kubectl run pod2 --image=nginx --image-pull-policy=IfNotPresent

pod/pod2 created

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]

└─$kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod1 1/1 Running 0 35s 10.244.70.31 vms83.liruilongs.github.io <none> <none>

pod2 1/1 Running 0 21s 10.244.171.181 vms82.liruilongs.github.io <none> <none>

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]

└─$

Then we change the Nginx home page in each Pod

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]

└─$kubectl get pods --show-labels

NAME READY STATUS RESTARTS AGE LABELS

pod1 1/1 Running 0 100s run=pod1

pod2 1/1 Running 0 86s run=pod2

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]

└─$kubectl exec -it pod1 -- sh -c "echo pod1 >/usr/share/nginx/html/index.html"

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]

└─$kubectl exec -it pod2 -- sh -c "echo pod2 >/usr/share/nginx/html/index.html"

Create the two SVCs

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]

└─$kubectl expose --name=svc1 pod pod1 --port=80 --type=LoadBalancer

service/svc1 exposed

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]

└─$kubectl expose --name=svc2 pod pod2 --port=80 --type=LoadBalancer

service/svc2 exposed

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]

└─$kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

svc1 LoadBalancer 10.106.61.84 192.168.26.240 80:30735/TCP 14s

svc2 LoadBalancer 10.111.123.194 192.168.26.241 80:31034/TCP 5s

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]

└─$

Access test: both Services are reachable, whether from the current namespace or from another namespace

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]

└─$kubectl run testpod1 -it --rm --image=yauritux/busybox-curl --image-pull-policy=IfNotPresent

If you don't see a command prompt, try pressing enter.

/home # curl svc1

pod1

/home # curl svc2

pod2

/home # exit

From a specified namespace

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]

└─$kubectl run testpod2 -it --rm --image=yauritux/busybox-curl --image-pull-policy=IfNotPresent -n default

If you don't see a command prompt, try pressing enter.

/home # curl svc1.liruilong-network-create

pod1

/home # curl svc2.liruilong-network-create

pod2

/home #

Because the Services are of type LoadBalancer, the physical machine can reach them as well

PS E:\docker> curl 192.168.26.240

StatusCode : 200

StatusDescription : OK

Content : pod1

RawContent : HTTP/1.1 200 OK

Connection: keep-alive

Accept-Ranges: bytes

Content-Length: 5

Content-Type: text/html

Date: Mon, 03 Jan 2022 12:29:32 GMT

ETag: "61d27744-5"

Last-Modified: Mon, 03 Jan 2022 04:1...

Forms : {}

Headers : {[Connection, keep-alive], [Accept-Ranges, bytes], [Content-Lengt

h, 5], [Content-Type, text/html]...}

Images : {}

InputFields : {}

Links : {}

ParsedHtml : System.__ComObject

RawContentLength : 5

PS E:\docker> curl 192.168.26.241

StatusCode : 200

StatusDescription : OK

Content : pod2

RawContent : HTTP/1.1 200 OK

Connection: keep-alive

Accept-Ranges: bytes

Content-Length: 5

Content-Type: text/html

Date: Mon, 03 Jan 2022 12:29:49 GMT

ETag: "61d27752-5"

Last-Modified: Mon, 03 Jan 2022 04:1...

Forms : {}

Headers : {[Connection, keep-alive], [Accept-Ranges, bytes], [Content-Lengt

h, 5], [Content-Type, text/html]...}

Images : {}

InputFields : {}

Links : {}

ParsedHtml : System.__ComObject

RawContentLength : 5

PS E:\docker>

Ingress Policies

Let's look at ingress policies first

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]

└─$kubectl get pods --show-labels

NAME READY STATUS RESTARTS AGE LABELS

pod1 1/1 Running 2 (3d12h ago) 5d9h run=pod1

pod2 1/1 Running 2 (3d12h ago) 5d9h run=pod2

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]

└─$kubectl get svc -o wide

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

svc1 LoadBalancer 10.106.61.84 192.168.26.240 80:30735/TCP 5d9h run=pod1

svc2 LoadBalancer 10.111.123.194 192.168.26.241 80:31034/TCP 5d9h run=pod2

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]

└─$

IP address of the external physical machine used for testing

PS E:\docker> ipconfig

Windows IP Configuration

..........

Ethernet adapter VMware Network Adapter VMnet8:

   Connection-specific DNS Suffix  . :
   Link-local IPv6 Address . . . . . : fe80::f9c8:e941:4deb:698f%24
   IPv4 Address. . . . . . . . . . . : 192.168.26.1
   Subnet Mask . . . . . . . . . . . : 255.255.255.0
   Default Gateway . . . . . . . . . :

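IP Restrictions

We demonstrate network policies by changing the IP restriction and accessing the Service from the physical machine that hosts the cluster VMs. Once a CIDR is specified, clients inside that range can connect and clients outside it cannot.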

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]

└─$vim networkpolicy.yaml

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]

└─$kubectl apply -f networkpolicy.yaml

networkpolicy.networking.k8s.io/test-network-policy configured

Write the manifest so that only machines in the 172.17.0.0/16 range can connect

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: test-network-policy
  namespace: liruilong-network-create
spec:
  podSelector:
    matchLabels:
      run: pod1
  policyTypes:
  - Ingress
  ingress:
  - from:
    - ipBlock:
        cidr: 172.17.0.0/16   # only this range may connect
    ports:
    - protocol: TCP
      port: 80

Machines outside the cluster can no longer connect

PS E:\docker> curl 192.168.26.240

curl : Unable to connect to the remote server
At line:1 char:1
+ curl 192.168.26.240
+ ~~~~~~~~~~~~~~~~~~~
    + CategoryInfo          : InvalidOperation: (System.Net.HttpWebRequest:HttpWebRequest) [Invoke-WebRequest], WebException
    + FullyQualifiedErrorId : WebCmdletWebResponseException,Microsoft.PowerShell.Commands.InvokeWebRequestCommand

Allow IPs in the physical machine's subnet

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: test-network-policy
  namespace: liruilong-network-create
spec:
  podSelector:
    matchLabels:
      run: pod1
  policyTypes:
  - Ingress
  ingress:
  - from:
    - ipBlock:
        cidr: 192.168.26.0/24   # only this range may connect
    ports:
    - protocol: TCP
      port: 80

After the CIDR is changed, the external machine can connect normally

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]

└─$sed -i 's#172.17.0.0/16#192.168.26.0/24#' networkpolicy.yaml

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]

└─$kubectl apply -f networkpolicy.yaml

Test: the external machine can connect

PS E:\docker> curl 192.168.26.240

StatusCode : 200

StatusDescription : OK

Content : pod1

RawContent : HTTP/1.1 200 OK

Connection: keep-alive

Accept-Ranges: bytes

Content-Length: 5

Content-Type: text/html

Date: Sat, 08 Jan 2022 14:59:13 GMT

ETag: "61d9a663-5"

Last-Modified: Sat, 08 Jan 2022 14:5...

Forms : {}

Headers : {[Connection, keep-alive], [Accept-Ranges, bytes], [Content-Length, 5], [Content-T

ype, text/html]...}

Images : {}

InputFields : {}

Links : {}

ParsedHtml : System.__ComObject

RawContentLength : 5
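The ipBlock field also accepts an except list, as in the demo manifest near the top of this post. As a sketch for this environment, the variant below keeps 192.168.26.0/24 open but carves out the physical test machine. The /32 exception is an assumption chosen for illustration, and whether the policy sees the client's original IP depends on how the load balancer and the CNI plugin handle source address translation.

```yaml
# Sketch only: the /32 exception is an illustrative assumption.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: test-network-policy
  namespace: liruilong-network-create
spec:
  podSelector:
    matchLabels:
      run: pod1
  policyTypes:
  - Ingress
  ingress:
  - from:
    - ipBlock:
        cidr: 192.168.26.0/24   # allow this subnet
        except:
        - 192.168.26.1/32       # but not the physical test machine
    ports:
    - protocol: TCP
      port: 80
```

Every entry under except must fall inside the cidr range it belongs to.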

Namespace Restrictions

Allow traffic only from the default namespace

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]

└─$kubectl get ns --show-labels | grep default

default Active 26d kubernetes.io/metadata.name=default

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]

└─$vim networkpolicy-name.yaml

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]

└─$kubectl apply -f networkpolicy-name.yaml

networkpolicy.networking.k8s.io/test-network-policy configured

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: test-network-policy
  namespace: liruilong-network-create
spec:
  podSelector:
    matchLabels:
      run: pod1
  policyTypes:
  - Ingress
  ingress:
  - from:
    - namespaceSelector:
        matchLabels:
          kubernetes.io/metadata.name: default
    ports:
    - protocol: TCP
      port: 80

The physical machine hosting the VMs cannot connect

PS E:\docker> curl 192.168.26.240

curl : Unable to connect to the remote server
At line:1 char:1
+ curl 192.168.26.240
+ ~~~~~~~~~~~~~~~~~~~
    + CategoryInfo          : InvalidOperation: (System.Net.HttpWebRequest:HttpWebRequest) [Invoke-WebRequest], WebException
    + FullyQualifiedErrorId : WebCmdletWebResponseException,Microsoft.PowerShell.Commands.InvokeWebRequestCommand

PS E:\docker>

Pods in the current namespace cannot connect either

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]

└─$kubectl run testpod1 -it --rm --image=yauritux/busybox-curl --image-pull-policy=IfNotPresent

/home # curl --connect-timeout 10 -m 10 svc1

curl: (28) Connection timed out after 10413 milliseconds

Pods in the default namespace can connect

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]

└─$kubectl run testpod1 -it --rm --image=yauritux/busybox-curl --image-pull-policy=IfNotPresent --namespace=default

/home # curl --connect-timeout 10 -m 10 svc1.liruilong-network-create

pod1

/home #
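The policy above admits only the default namespace, which is why Pods in liruilong-network-create itself are locked out. One possible way to admit both namespaces with a single selector is matchExpressions with the In operator, sketched here as a fragment of spec; it relies on the kubernetes.io/metadata.name label shown in the kubectl get ns output earlier.

```yaml
# Fragment of spec: allow traffic from either of two namespaces.
ingress:
- from:
  - namespaceSelector:
      matchExpressions:
      - key: kubernetes.io/metadata.name
        operator: In
        values:
        - default
        - liruilong-network-create
  ports:
  - protocol: TCP
    port: 80
```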

Pod Selector Restrictions

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: test-network-policy
  namespace: liruilong-network-create
spec:
  podSelector:
    matchLabels:
      run: pod1
  policyTypes:
  - Ingress
  ingress:
  - from:
    - podSelector:
        matchLabels:
          run: testpod
    ports:
    - protocol: TCP
      port: 80

Create a policy that only allows access from Pods labeled run=testpod

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]

└─$kubectl apply -f networkpolicy-pod.yaml

networkpolicy.networking.k8s.io/test-network-policy created

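Create two Pods, both with --labels=run=testpod. Only the one in the current namespace can connect, because a podSelector without a namespaceSelector matches Pods only in the policy's own namespace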

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]

└─$kubectl run testpod1 -it --rm --image=yauritux/busybox-curl --image-pull-policy=IfNotPresent --labels=run=testpod --namespace=default

/home # curl --connect-timeout 10 -m 10 svc1.liruilong-network-create

curl: (28) Connection timed out after 10697 milliseconds

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]

└─$kubectl run testpod1 -it --rm --image=yauritux/busybox-curl --image-pull-policy=IfNotPresent --labels=run=testpod

/home # curl --connect-timeout 10 -m 10 svc1

pod1

/home #

The following configuration allows matching Pods from all namespaces

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: test-network-policy
  namespace: liruilong-network-create
spec:
  podSelector:
    matchLabels:
      run: pod1
  policyTypes:
  - Ingress
  ingress:
  - from:
    - namespaceSelector: {}   # empty selector: matches every namespace
      podSelector:
        matchLabels:
          run: testpod
    ports:
    - protocol: TCP
      port: 80

Allow access from the default namespace and the current namespace

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: test-network-policy
  namespace: liruilong-network-create
spec:
  podSelector:
    matchLabels:
      run: pod1
  policyTypes:
  - Ingress
  ingress:
  - from:
    - namespaceSelector:      # Pods labeled run=testpod in the default namespace
        matchLabels:
          kubernetes.io/metadata.name: default
      podSelector:
        matchLabels:
          run: testpod
    - podSelector:            # Pods labeled run=testpod in the current namespace
        matchLabels:
          run: testpod
    ports:
    - protocol: TCP
      port: 80

Finding the Network Policies That Apply to a Pod

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]

└─$kubectl get networkpolicies

NAME POD-SELECTOR AGE

test-network-policy run=pod1 13m

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]

└─$kubectl get pods --show-labels

NAME READY STATUS RESTARTS AGE LABELS

pod1 1/1 Running 2 (3d15h ago) 5d12h run=pod1

pod2 1/1 Running 2 (3d15h ago) 5d12h run=pod2

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]

└─$kubectl get networkpolicies | grep run=pod1

test-network-policy run=pod1 15m

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]

└─$

Egress Policies

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: test-network-policy
spec:
  podSelector:
    matchLabels:
      run: pod1
  policyTypes:
  - Egress
  egress:
  - to:
    - podSelector:
        matchLabels:
          run: pod2
    ports:
    - protocol: TCP
      port: 80

pod1 can only reach pod2, on TCP port 80

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]

└─$kubectl apply -f networkpolicy1.yaml

Reaching pod2 by IP works (here through the ClusterIP of svc2)

┌──[root@vms81.liruilongs.github.io]-[~]

└─$kubectl exec -it pod1 -- bash

root@pod1:/# curl 10.111.123.194

pod2

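The DNS Pods run in a different namespace (kube-system), and pod1 is only allowed to reach pod2, so name resolution fails and pod2 cannot be reached by domain name. An egress rule for that namespace has to be added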

┌──[root@vms81.liruilongs.github.io]-[~]

└─$kubectl exec -it pod1 -- bash

root@pod1:/# curl svc2

^C

Look up the labels needed for the rule

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]

└─$kubectl get ns --show-labels | grep kube-system

kube-system Active 27d kubernetes.io/metadata.name=kube-system

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]

└─$kubectl get pods --show-labels -n kube-system | grep dns

coredns-7f6cbbb7b8-ncd2s 1/1 Running 13 (3d19h ago) 24d k8s-app=kube-dns,pod-template-hash=7f6cbbb7b8

coredns-7f6cbbb7b8-pjnct 1/1 Running 13 (3d19h ago) 24d k8s-app=kube-dns,pod-template-hash=7f6cbbb7b8

Configure two egress rules, one to pod2 and one to kube-dns, each with its own port and protocol

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: test-network-policy
spec:
  podSelector:
    matchLabels:
      run: pod1
  policyTypes:
  - Egress
  egress:
  - to:
    - podSelector:
        matchLabels:
          run: pod2
    ports:
    - protocol: TCP
      port: 80
  - to:
    - namespaceSelector:
        matchLabels:
          kubernetes.io/metadata.name: kube-system
      podSelector:
        matchLabels:
          k8s-app: kube-dns
    ports:
    - protocol: UDP
      port: 53

Test: access by domain name now works

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]

└─$vim networkpolicy2.yaml

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]

└─$kubectl apply -f networkpolicy2.yaml

networkpolicy.networking.k8s.io/test-network-policy configured

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]

└─$kubectl get networkpolicies

NAME POD-SELECTOR AGE

test-network-policy run=pod1 3h38m

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]

└─$kubectl exec -it pod1 -- bash

root@pod1:/# curl svc2

pod2

root@pod1:/#
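The kube-dns rule above opens UDP port 53 only. DNS clients can fall back to TCP, for example when a response is too large for UDP, so a slightly more permissive variant of that rule might also open TCP port 53. This is a sketch of the second egress rule only, reusing the namespace and Pod labels found earlier; it was not part of the test run shown above.

```yaml
# Fragment of spec: DNS egress rule allowing both UDP and TCP on port 53.
egress:
- to:
  - namespaceSelector:
      matchLabels:
        kubernetes.io/metadata.name: kube-system
    podSelector:
      matchLabels:
        k8s-app: kube-dns
  ports:
  - protocol: UDP
    port: 53
  - protocol: TCP
    port: 53
```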

For lack of time, that is all I will share about network policies for now. Keep going, everyone!
