OrangePi AI Studio Pro: Training and Validating a YOLOv8 Model with MindYOLO


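OrangePi AI Studio Pro is a new-generation, high-performance inference card built on two Ascend 310P processors. It combines general-purpose compute with strong AI compute and integrates the complete low-level software stack for both training and inference, so one device can be used for both. Its AI compute is about 176 TFLOPS at FP16 (half precision) and up to 352 TOPS at INT8. This article shows how to train and validate a YOLOv8 model with MindYOLO on the Ascend 310P.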

1. Environment Setup

- First, check the NPU driver of the Ascend 310P. Run npu-smi info in a terminal. The output shows the AICore utilization and memory usage of both Ascend 310P chips:

+--------------------------------------------------------------------------------------------------------+
| npu-smi v1.0                                   Version: 24.1.rc4.b999                                  |
+-------------------------------+-----------------+------------------------------------------------------+
| NPU     Name                  | Health          | Power(W)     Temp(C)           Hugepages-Usage(page) |
| Chip    Device                | Bus-Id          | AICore(%)    Memory-Usage(MB)                        |
+===============================+=================+======================================================+
| 30208   310P1                 | OK              | NA           41                0     / 0             |
| 0       0                     | 0000:77:00.0    | 0            1416 / 89608                            |
+-------------------------------+-----------------+------------------------------------------------------+
| 30208   310P1                 | OK              | NA           40                0     / 0             |
| 1       1                     | 0000:77:00.0    | 0            1622 / 89085                            |
+===============================+=================+======================================================+

+-------------------------------+-----------------+------------------------------------------------------+
| NPU     Chip                  | Process id      | Process name             | Process memory(MB)        |
+===============================+=================+======================================================+
| No running processes found in NPU 30208                                                                |
+===============================+=================+======================================================+
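You may want to check NPU memory from a script, for example before launching training. In that case, the per-chip rows of the npu-smi info table can be parsed with a regular expression. The sketch below makes two assumptions. First, the table has the layout shown above, where the second row of each chip holds the Bus-Id, the AICore utilization and "used / total" memory in MB. Second, other npu-smi versions may format the table differently. The function name parse_npu_memory is hypothetical.

```python
import re
from typing import List, Tuple

# Matches the second row of each chip block in the npu-smi info table, e.g.
# "| 0       0        | 0000:77:00.0    | 0            1416 / 89608     |"
# Groups: chip id, device id, AICore utilization (%), used MB, total MB.
# Assumes the layout printed by npu-smi v1.0 shown above.
_CHIP_ROW = re.compile(
    r"^\|\s*(\d+)\s+(\d+)\s*\|\s*[0-9A-Fa-f:.]+\s*\|\s*(\d+)\s+(\d+)\s*/\s*(\d+)\s*\|"
)


def parse_npu_memory(smi_output: str) -> List[Tuple[int, ...]]:
    """Return (chip, device, aicore_pct, used_mb, total_mb) for each chip row."""
    rows = []
    for line in smi_output.splitlines():
        match = _CHIP_ROW.match(line.strip())
        if match:
            rows.append(tuple(int(g) for g in match.groups()))
    return rows
```

In practice the input would come from something like subprocess.run(["npu-smi", "info"], capture_output=True, text=True).stdout. The header rows and the "NA" power rows do not match the pattern, so only the memory rows are returned.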

- Next, upgrade CANN and update MindSpore. For details, see my other article, "How to upgrade CANN, PyTorch and MindSpore on OrangePi Studio Pro". After the upgrade, check the MindSpore installation. I am using version 2.7.0.

source /usr/local/Ascend/ascend-toolkit/set_env.sh

python3 -c "import mindspore;mindspore.set_context(device_target='Ascend');mindspore.run_check()"

[WARNING] ME(1621400:139701939115840,MainProcess):2025-09-24-10:46:21.978.000 [mindspore/context.py:1412] For 'context.set_context', the parameter 'device_target' will be deprecated and removed in a future version. Please use the api mindspore.set_device() instead.

MindSpore version: 2.7.0

[WARNING] GE_ADPT(1621400,7f0e18710640,python3):2025-09-24-10:46:23.323.570 [mindspore/ops/kernel/ascend/acl_ir/op_api_exec.cc:169] GetAscendDefaultCustomPath] Checking whether the so exists or if permission to access it is available: /usr/local/Ascend/ascend-toolkit/latest/opp/vendors/customize_vision/op_api/lib/libcust_opapi.so

The result of multiplication calculation is correct, MindSpore has been installed on platform [Ascend] successfully!

- Clone the mindyolo repository. To train and validate the model, we use VisDrone-Dataset, the drone-vision challenge dataset released by Tianjin University.

git clone https://github.com/mindspore-lab/mindyolo.git

Cloning into 'mindyolo'...

remote: Enumerating objects: 3505, done.

remote: Counting objects: 100% (157/157), done.

remote: Compressing objects: 100% (69/69), done.

remote: Total 3505 (delta 114), reused 88 (delta 88), pack-reused 3348 (from 2)

Receiving objects: 100% (3505/3505), 6.74 MiB | 8.91 MiB/s, done.
Resolving deltas: 100% (2048/2048), done.

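- First convert the downloaded dataset to YOLO format. Many public tutorials online describe this conversion in detail. After conversion, the visdrone dataset contains the following: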

visdrone
├── train
│   ├── images
│   │   ├── 000001.jpg
│   │   ├── 000002.jpg
│   │   ├── ...
│   │   └── ...
│   └── labels
│       ├── 000001.txt
│       ├── 000002.txt
│       ├── ...
│       └── ...
└── val
    ├── images
    │   ├── 000001.jpg
    │   ├── 000002.jpg
    │   ├── ...
    │   └── ...
    └── labels
        ├── 000001.txt
        ├── 000002.txt
        ├── ...
        └── ...
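Before converting, check that every image has a matching label file. The converter in the next section raises an error on a missing or empty label file, so catching these early saves a failed run. The minimal sketch below assumes the images/ and labels/ layout shown above, with .jpg images and .txt labels paired by file stem. The helper name find_unpaired is hypothetical.

```python
from pathlib import Path
from typing import Dict, List


def find_unpaired(split_dir: str) -> Dict[str, List[str]]:
    """Compare <split>/images/*.jpg with <split>/labels/*.txt by file stem."""
    root = Path(split_dir)
    label_files = list((root / "labels").glob("*.txt"))
    image_stems = {p.stem for p in (root / "images").glob("*.jpg")}
    label_stems = {p.stem for p in label_files}
    return {
        "images_without_labels": sorted(image_stems - label_stems),
        "labels_without_images": sorted(label_stems - image_stems),
        # Zero-byte label files would make the converter raise "is empty".
        "empty_labels": sorted(p.stem for p in label_files if p.stat().st_size == 0),
    }
```

For example, find_unpaired("/root/workspace/dataset/visdrone/train") should report three empty lists when the split is consistent.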

2. Data Format Conversion

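MindYOLO's training pipeline reads YOLO-format labels, while its evaluation uses COCO-style JSON annotations. We therefore also need the COCO-format annotation files instances_train2017.json and instances_val2017.json, plus the image lists train.txt and val.txt. The conversion also renames the images to zero-padded ids such as 000000000001.jpg. After conversion, the visdrone dataset contains the following: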

visdrone_COCO_format
├── train.txt
├── val.txt
├── train
│   ├── images
│   │   ├── 000000000001.jpg
│   │   ├── 000000000002.jpg
│   │   ├── ...
│   │   └── ...
│   └── labels
│       ├── 000000000001.txt
│       ├── 000000000002.txt
│       ├── ...
│       └── ...
├── annotations
│   ├── instances_train2017.json
│   └── instances_val2017.json
└── val
    ├── images
    │   ├── 000000000001.jpg
    │   ├── 000000000002.jpg
    │   ├── ...
    │   └── ...
    └── labels
        ├── 000000000001.txt
        ├── 000000000002.txt
        ├── ...
        └── ...

- First convert the YOLO-format dataset to COCO format. Create the script yolov5_yaml_to_coco.py in the mindyolo directory with the following code:

# -*- encoding: utf-8 -*-
# @Author: SWHL
# @Contact: liekkaskono@163.com
import argparse
import glob
import json
import os
import shutil
import time
from pathlib import Path

import cv2
import yaml
from tqdm import tqdm


def read_txt(txt_path):
    with open(str(txt_path), "r", encoding="utf-8") as f:
        data = list(map(lambda x: x.rstrip("\n"), f))
    return data


def mkdir(dir_path):
    Path(dir_path).mkdir(parents=True, exist_ok=True)


def verify_exists(file_path):
    file_path = Path(file_path).resolve()
    if not file_path.exists():
        raise FileNotFoundError(f"{file_path} does not exist")


class YOLOV5CFG2COCO:
    def __init__(self, yaml_path):
        verify_exists(yaml_path)
        with open(yaml_path, "r", encoding="UTF-8") as f:
            self.data_cfg = yaml.safe_load(f)

        self.root_dir = Path(yaml_path).parent.parent
        self.root_data_dir = Path(self.data_cfg.get("path"))
        self.train_path = self._get_data_dir("train")
        self.val_path = self._get_data_dir("val")

        nc = self.data_cfg["nc"]
        if "names" in self.data_cfg:
            names = self.data_cfg.get("names")
            # visdrone.yaml stores names as {index: name}; turn it into a list ordered by index
            if isinstance(names, dict):
                names = [names[k] for k in sorted(names)]
            self.names = names
        else:
            # assign class names if missing
            self.names = [f"class{i}" for i in range(nc)]
        assert (
            len(self.names) == nc
        ), f"{len(self.names)} names found for nc={nc} dataset in {yaml_path}"

        # Build the COCO-format directory layout
        self.dst = self.root_dir / f"{Path(self.root_data_dir).stem}_COCO_format"
        self.coco_train = "train/images"
        self.coco_val = "val/images"
        self.coco_annotation = "annotations"
        self.coco_train_json = self.dst / self.coco_annotation / "instances_train2017.json"
        self.coco_val_json = self.dst / self.coco_annotation / "instances_val2017.json"

        mkdir(self.dst)
        mkdir(self.dst / self.coco_train)
        mkdir(self.dst / self.coco_val)
        mkdir(self.dst / self.coco_annotation)

        # Build the JSON content structure
        self.type = "instances"
        self.categories = []
        self._get_category()
        self.annotation_id = 1

        cur_year = time.strftime("%Y", time.localtime(time.time()))
        self.info = {
            "year": int(cur_year),
            "version": "1.0",
            "description": "For object detection",
            "date_created": cur_year,
        }
        self.licenses = [
            {
                "id": 1,
                "name": "Apache License v2.0",
                "url": "https://choosealicense.com/licenses/apache-2.0/",
            }
        ]

    def _get_data_dir(self, mode):
        data_dir = self.data_cfg.get(mode)
        if data_dir:
            if isinstance(data_dir, str):
                full_path = [str(self.root_data_dir / data_dir)]
            elif isinstance(data_dir, list):
                full_path = [str(self.root_data_dir / one_dir) for one_dir in data_dir]
            else:
                raise TypeError(f"{data_dir} is not str or list.")
        else:
            raise ValueError(f"{mode} dir is not in the yaml.")
        return full_path

    def _get_category(self):
        # COCO category ids start at 1, so YOLO class i becomes category i + 1
        for i, category in enumerate(self.names, start=1):
            self.categories.append(
                {
                    "supercategory": category,
                    "id": i,
                    "name": category,
                }
            )

    def generate(self):
        self.train_files = self.get_files(self.train_path)
        self.valid_files = self.get_files(self.val_path)

        train_dest_dir = Path(self.dst) / self.coco_train
        self.gen_dataset(
            self.train_files, train_dest_dir, self.coco_train_json, mode="train"
        )

        val_dest_dir = Path(self.dst) / self.coco_val
        self.gen_dataset(self.valid_files, val_dest_dir, self.coco_val_json, mode="val")

        print(f"The output directory is: {self.dst}")

    def get_files(self, path):
        IMG_FORMATS = ["bmp", "dng", "jpeg", "jpg", "mpo", "png", "tif", "tiff", "webp"]
        f = []
        for p in path:
            p = Path(p)
            if p.is_dir():
                f += glob.glob(str(p / "**" / "*.*"), recursive=True)
            elif p.is_file():  # a list file with one image path per line
                with open(p, "r", encoding="utf-8") as t:
                    lines = t.read().strip().splitlines()
                parent = str(p.parent) + os.sep
                f += [x.replace("./", parent) if x.startswith("./") else x for x in lines]
            else:
                raise FileNotFoundError(f"{p} does not exist")
        im_files = sorted(
            x.replace("/", os.sep) for x in f if x.split(".")[-1].lower() in IMG_FORMATS
        )
        return im_files

    def gen_dataset(self, img_paths, target_img_path, target_json, mode):
        """
        https://cocodataset.org/#format-data
        """
        images = []
        annotations = []
        sa, sb = (
            os.sep + "images" + os.sep,
            os.sep + "labels" + os.sep,
        )  # /images/, /labels/ substrings

        for img_id, img_path in enumerate(tqdm(img_paths, desc=mode), 1):
            label_path = sb.join(img_path.rsplit(sa, 1)).rsplit(".", 1)[0] + ".txt"

            img_path = Path(img_path)
            verify_exists(img_path)

            imgsrc = cv2.imread(str(img_path))
            if imgsrc is None:
                raise ValueError(f"Failed to read image {img_path}")
            height, width = imgsrc.shape[:2]

            # Images are renamed to zero-padded ids, e.g. 000000000001.jpg
            dest_file_name = f"{img_id:012d}.jpg"
            save_img_path = target_img_path / dest_file_name

            if img_path.suffix.lower() == ".jpg":
                shutil.copyfile(img_path, save_img_path)
            else:
                cv2.imwrite(str(save_img_path), imgsrc)

            images.append(
                {
                    "date_captured": "2021",
                    "file_name": dest_file_name,
                    "id": img_id,
                    "height": height,
                    "width": width,
                }
            )

            if Path(label_path).exists():
                new_anno = self.read_annotation(label_path, img_id, height, width)
                if len(new_anno) > 0:
                    annotations.extend(new_anno)
                else:
                    raise ValueError(f"{label_path} is empty")
            else:
                raise FileNotFoundError(f"{label_path} does not exist")

        json_data = {
            "info": self.info,
            "images": images,
            "licenses": self.licenses,
            "type": self.type,
            "annotations": annotations,
            "categories": self.categories,
        }
        with open(target_json, "w", encoding="utf-8") as f:
            json.dump(json_data, f, ensure_ascii=False)

    def read_annotation(self, txt_file, img_id, height, width):
        annotation = []
        all_info = read_txt(txt_file)
        for label_info in all_info:
            # Iterate over the annotated objects in one image
            label_info = label_info.split()
            if len(label_info) < 5:
                continue

            # class index followed by normalized cx, cy, w, h
            category_id, vertex_info = label_info[0], label_info[1:5]
            segmentation, bbox, area = self._get_annotation(vertex_info, height, width)
            annotation.append(
                {
                    "segmentation": segmentation,
                    "area": area,
                    "iscrowd": 0,
                    "image_id": img_id,
                    "bbox": bbox,
                    "category_id": int(category_id) + 1,
                    "id": self.annotation_id,
                }
            )
            self.annotation_id += 1
        return annotation

    @staticmethod
    def _get_annotation(vertex_info, height, width):
        cx, cy, w, h = [float(i) for i in vertex_info]

        # De-normalize YOLO (cx, cy, w, h) to pixels
        cx = cx * width
        cy = cy * height
        box_w = w * width
        box_h = h * height

        # Clip to the image; the far edges are computed from the centre so that
        # boxes crossing the left/top border are clipped rather than shifted
        x0 = max(cx - box_w / 2, 0)
        y0 = max(cy - box_h / 2, 0)
        x1 = min(cx + box_w / 2, width)
        y1 = min(cy + box_h / 2, height)
        box_w = x1 - x0
        box_h = y1 - y0

        segmentation = [[x0, y0, x1, y0, x1, y1, x0, y1]]
        bbox = [x0, y0, box_w, box_h]  # COCO: top-left x, y, width, height
        area = box_w * box_h
        return segmentation, bbox, area


def main():
    parser = argparse.ArgumentParser("Datasets converter from YOLOV5 to COCO")
    parser.add_argument(
        "--yaml_path",
        type=str,
        default="dataset/YOLOV5_yaml/sample.yaml",
        help="Dataset cfg file",
    )
    args = parser.parse_args()

    converter = YOLOV5CFG2COCO(args.yaml_path)
    converter.generate()


if __name__ == "__main__":
    main()
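The core of the conversion is the box arithmetic in _get_annotation. A YOLO label stores the box centre and size normalized to [0, 1], whereas COCO stores the top-left corner and the size in pixels. The standalone sketch below mirrors that arithmetic, including clipping to the image border, so it can be checked in isolation. The name yolo_to_coco_bbox is hypothetical and is not part of mindyolo.

```python
from typing import List, Tuple


def yolo_to_coco_bbox(
    cx: float, cy: float, w: float, h: float, img_w: int, img_h: int
) -> Tuple[List[float], float]:
    """Convert a normalized YOLO box (cx, cy, w, h) to COCO [x, y, w, h] in pixels.

    The box is clipped to the image, and the returned area is that of the clipped box.
    """
    # De-normalize the centre and the size.
    cx, cy = cx * img_w, cy * img_h
    bw, bh = w * img_w, h * img_h
    # Top-left and bottom-right corners, both derived from the centre, then clipped.
    x0, y0 = max(cx - bw / 2, 0.0), max(cy - bh / 2, 0.0)
    x1, y1 = min(cx + bw / 2, img_w), min(cy + bh / 2, img_h)
    box_w, box_h = x1 - x0, y1 - y0
    return [x0, y0, box_w, box_h], box_w * box_h
```

For example, the label "0 0.5 0.5 0.2 0.4" on a 1000x500 image becomes the COCO box [400, 150, 200, 200] with area 40000. Both corners come from the centre rather than from the clipped x0/y0. As a result, a box that crosses the left or top border keeps its right and bottom edges and is not shifted.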

- Next, in the mindyolo directory, create the YOLO-format dataset config visdrone.yaml that the conversion script reads:

# Train/val/test sets as 1) dir: path/to/imgs, 2) file: path/to/imgs.txt, or 3) list: [path/to/imgs1, path/to/imgs2, ..]
path: /root/workspace/dataset/visdrone  # dataset root dir (absolute path)
train: train/images  # train images (relative to 'path')
val: val/images  # val images (relative to 'path')
test:  # test images (optional)

nc: 12

# Classes
names:
  0: ignored regions
  1: pedestrian
  2: people
  3: bicycle
  4: car
  5: van
  6: truck
  7: tricycle
  8: awning-tricycle
  9: bus
  10: motor
  11: others

Run the following command in a terminal to convert the YOLO-format dataset to COCO format:

python3 yolov5_yaml_to_coco.py --yaml_path visdrone.yaml

train: 100%|███████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 6471/6471 [01:13<00:00, 88.07it/s]

val: 100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 548/548 [00:03<00:00, 148.22it/s]

The output directory is: visdrone_COCO_format

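- Then create a Python script, coco2yolo.py, that exports the COCO-format .json annotations as YOLO-format .txt label files in each labels folder. This step is needed because yolov5_yaml_to_coco.py renames the images but does not write matching label files: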

import argparse
import json
import os

parser = argparse.ArgumentParser(description='Convert COCO JSON annotations to YOLO txt labels.')
parser.add_argument('-j', help='JSON file', dest='json', required=True)
parser.add_argument('-o', help='path to output folder', dest='out', required=True)
args = parser.parse_args()

json_file = args.json
output = args.out


class COCO2YOLO:
    def __init__(self):
        self._check_file_and_dir(json_file, output)
        with open(json_file, 'r', encoding='utf-8') as f:
            self.labels = json.load(f)
        self.coco_id_name_map = self._categories()
        # Categories are stored in id order, so the list index is the YOLO class index
        self.coco_name_list = list(self.coco_id_name_map.values())
        print("total images", len(self.labels['images']))
        print("total categories", len(self.labels['categories']))
        print("total labels", len(self.labels['annotations']))

    def _check_file_and_dir(self, file_path, dir_path):
        if not os.path.exists(file_path):
            raise ValueError("file not found")
        if not os.path.exists(dir_path):
            os.makedirs(dir_path)

    def _categories(self):
        categories = {}
        for cls in self.labels['categories']:
            categories[cls['id']] = cls['name']
        return categories

    def _load_images_info(self):
        images_info = {}
        for image in self.labels['images']:
            image_id = image['id']
            file_name = image['file_name']
            # Drop a Windows-style directory prefix if present
            if file_name.find('\\') > -1:
                file_name = file_name[file_name.index('\\') + 1:]
            w = image['width']
            h = image['height']
            images_info[image_id] = (file_name, w, h)
        return images_info

    def _bbox_2_yolo(self, bbox, img_w, img_h):
        # COCO [x, y, w, h] in pixels -> YOLO [cx, cy, w, h] normalized to [0, 1]
        x, y, w, h = bbox[0], bbox[1], bbox[2], bbox[3]
        centerx = x + w / 2
        centery = y + h / 2
        dw = 1 / img_w
        dh = 1 / img_h
        centerx *= dw
        w *= dw
        centery *= dh
        h *= dh
        return centerx, centery, w, h

    def _convert_anno(self, images_info):
        anno_dict = dict()
        for anno in self.labels['annotations']:
            bbox = anno['bbox']
            image_id = anno['image_id']
            category_id = anno['category_id']
            image_name, img_w, img_h = images_info.get(image_id)
            yolo_box = self._bbox_2_yolo(bbox, img_w, img_h)
            anno_info = (image_name, category_id, yolo_box)
            # Group annotations by image
            anno_dict.setdefault(image_id, []).append(anno_info)
        return anno_dict

    def save_classes(self):
        sorted_classes = list(map(lambda x: x['name'], sorted(self.labels['categories'], key=lambda x: x['id'])))
        print('coco names', sorted_classes)
        with open('coco.names', 'w', encoding='utf-8') as f:
            for cls in sorted_classes:
                f.write(cls + '\n')

    def coco2yolo(self):
        print("loading image info...")
        images_info = self._load_images_info()
        print("loading done, total images", len(images_info))

        print("start converting...")
        anno_dict = self._convert_anno(images_info)
        print("converting done, total labels", len(anno_dict))

        print("saving txt file...")
        self._save_txt(anno_dict)
        print("saving done")

    def _save_txt(self, anno_dict):
        for k, v in anno_dict.items():
            # One label file per image, named after the image, e.g. 000000000001.txt
            file_name = os.path.splitext(v[0][0])[0] + ".txt"
            with open(os.path.join(output, file_name), 'w', encoding='utf-8') as f:
                # print(k, v)  # per-image debug output, very verbose on large datasets
                for obj in v:
                    cat_name = self.coco_id_name_map.get(obj[1])
                    category_id = self.coco_name_list.index(cat_name)
                    box = ['{:.6f}'.format(x) for x in obj[2]]
                    box = ' '.join(box)
                    line = str(category_id) + ' ' + box
                    f.write(line + '\n')


if __name__ == '__main__':
    c2y = COCO2YOLO()
    c2y.coco2yolo()

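In a terminal, change to the mindyolo directory and run the following commands. They export the YOLO-format labels for instances_train2017.json and instances_val2017.json into the corresponding labels folders: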

python3 coco2yolo.py -j ./visdrone_COCO_format/annotations/instances_train2017.json -o ./visdrone_COCO_format/train/labels

python3 coco2yolo.py -j ./visdrone_COCO_format/annotations/instances_val2017.json -o ./visdrone_COCO_format/val/labels
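coco2yolo.py maps each COCO category_id back to a zero-based YOLO class index by looking up the category name. yolov5_yaml_to_coco.py writes category ids as class index + 1, so the round trip should return the original indices. The sketch below reproduces the reverse box conversion of _bbox_2_yolo. It also shows an id-based mapping, which sorts the category ids instead of looking up names; unlike the name lookup, this still works when two categories share a name. The helpers build_index_map and coco_to_yolo_line are hypothetical.

```python
from typing import Dict, List, Sequence


def build_index_map(categories: List[dict]) -> Dict[int, int]:
    """Map COCO category ids to zero-based YOLO class indices, ordered by id."""
    ordered = sorted(categories, key=lambda c: c["id"])
    return {cat["id"]: idx for idx, cat in enumerate(ordered)}


def coco_to_yolo_line(
    category_id: int,
    bbox: Sequence[float],
    img_w: int,
    img_h: int,
    index_map: Dict[int, int],
) -> str:
    """Format one COCO annotation as a YOLO label line: 'cls cx cy w h' (normalized)."""
    x, y, w, h = bbox
    values = [(x + w / 2) / img_w, (y + h / 2) / img_h, w / img_w, h / img_h]
    return " ".join([str(index_map[category_id])] + ["{:.6f}".format(v) for v in values])
```

With the VisDrone categories (ids 1 to 12), category 1 ("ignored regions") maps back to class 0 and category 12 ("others") to class 11.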

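- Finally, create a generate_txt.sh script. It generates train.txt and val.txt in the COCO dataset directory, listing the relative paths of the training and validation images within the dataset: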

#!/bin/bash

# Check that a dataset path argument was given
if [ $# -eq 0 ]; then
    echo "Usage: $0 <dataset_path>"
    echo "Example: $0 /path/to/visdrone"
    exit 1
fi

# Dataset path
DATASET_PATH="$1"

# Check that the dataset path exists
if [ ! -d "$DATASET_PATH" ]; then
    echo "Error: Dataset path '$DATASET_PATH' does not exist."
    exit 1
fi

# Training and validation image directories
TRAIN_DIR="$DATASET_PATH/train/images"
VAL_DIR="$DATASET_PATH/val/images"

# Check that the training and validation directories exist
if [ ! -d "$TRAIN_DIR" ]; then
    echo "Error: Train directory '$TRAIN_DIR' does not exist."
    exit 1
fi

if [ ! -d "$VAL_DIR" ]; then
    echo "Error: Validation directory '$VAL_DIR' does not exist."
    exit 1
fi

# Generate train.txt
TRAIN_TXT="$DATASET_PATH/train.txt"
ls "$TRAIN_DIR" | grep '\.jpg$' | sort | sed 's/^/\.\/train\/images\//' > "$TRAIN_TXT"
echo "Generated $TRAIN_TXT"

# Generate val.txt
VAL_TXT="$DATASET_PATH/val.txt"
ls "$VAL_DIR" | grep '\.jpg$' | sort | sed 's/^/\.\/val\/images\//' > "$VAL_TXT"
echo "Generated $VAL_TXT"

echo "Successfully generated train.txt and val.txt in $DATASET_PATH"

Run generate_txt.sh in a terminal, passing the path of the COCO-format dataset created above:

chmod +x generate_txt.sh

./generate_txt.sh visdrone_COCO_format

Generated visdrone_COCO_format/train.txt

Generated visdrone_COCO_format/val.txt

Successfully generated train.txt and val.txt in visdrone_COCO_format
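The same image lists can also be produced from Python, which avoids parsing ls output and does not require bash. The minimal sketch below assumes the dataset layout above and, like the shell script, includes only .jpg files. The name write_split_list is hypothetical.

```python
from pathlib import Path
from typing import List


def write_split_list(dataset_root: str, split: str) -> List[str]:
    """Write <root>/<split>.txt listing ./<split>/images/*.jpg sorted by name."""
    root = Path(dataset_root)
    image_dir = root / split / "images"
    if not image_dir.is_dir():
        raise FileNotFoundError(f"{image_dir} does not exist")
    # Same relative form as the shell script: ./train/images/000000000001.jpg
    lines = [f"./{split}/images/{p.name}" for p in sorted(image_dir.glob("*.jpg"))]
    (root / f"{split}.txt").write_text("".join(line + "\n" for line in lines), encoding="utf-8")
    return lines
```

For example, calling write_split_list("visdrone_COCO_format", "train") and then the same call with "val" should produce the same two files as generate_txt.sh.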

The final visdrone_COCO_format dataset is laid out as follows and can be used directly to train YOLOv8 with MindYOLO:

visdrone_COCO_format
├── train.txt
├── val.txt
├── train
│   ├── images
│   │   ├── 000000000001.jpg
│   │   ├── 000000000002.jpg
│   │   ├── ...
│   │   └── ...
│   └── labels
│       ├── 000000000001.txt
│       ├── 000000000002.txt
│       ├── ...
│       └── ...
├── annotations
│   ├── instances_train2017.json
│   └── instances_val2017.json
└── val
    ├── images
    │   ├── 000000000001.jpg
    │   ├── 000000000002.jpg
    │   ├── ...
    │   └── ...
    └── labels
        ├── 000000000001.txt
        ├── 000000000002.txt
        ├── ...
        └── ...

3. Model Training

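MindYOLO supports YAML config inheritance. A new config file therefore only needs to inherit the native YAML configs that ship with MindYOLO and override the settings that change: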

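- In the configs directory, write a MindYOLO dataset config, configs/visdrone.yaml. This is the file referenced as '../visdrone.yaml' in the next step. It specifies the training and validation image lists and the class labels: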

data:
  dataset_name: visdrone_COCO_format

  train_set: /root/workspace/mindyolo/visdrone_COCO_format/train.txt
  val_set: /root/workspace/mindyolo/visdrone_COCO_format/val.txt
  test_set: /root/workspace/mindyolo/visdrone_COCO_format/val.txt

  nc: 12

  # class names
  names: ['ignored regions', 'pedestrian', 'people', 'bicycle', 'car',
          'van', 'truck', 'tricycle', 'awning-tricycle', 'bus',
          'motor', 'others' ]

  train_transforms: []
  test_transforms: []
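This dataset file takes effect only through inheritance: the model config lists it under __BASE__ together with the hyperparameter and network files. The sketch below illustrates the idea on plain dicts. It assumes that later bases override earlier ones, that the inheriting file overrides all of its bases, and that nested mappings are merged key by key. It is a simplified illustration, not MindYOLO's actual config loader, whose details may differ. deep_merge and resolve_config are hypothetical names.

```python
import copy
from typing import Any, Dict, List


def deep_merge(base: Dict[str, Any], override: Dict[str, Any]) -> Dict[str, Any]:
    """Return a new dict where keys in `override` replace those in `base`.

    Nested dicts are merged recursively; any other value (lists included) is replaced whole.
    """
    merged = copy.deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


def resolve_config(bases: List[Dict[str, Any]], current: Dict[str, Any]) -> Dict[str, Any]:
    """Apply each base in order, then the inheriting file on top."""
    result: Dict[str, Any] = {}
    for base in bases:
        result = deep_merge(result, base)
    return deep_merge(result, current)
```

Under these assumptions, the model file's epochs: 10 wins over any epochs value set in a base file. The data keys from the dataset file (nc, names, train_set) are kept unless a later file overrides them.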

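- Modify configs/yolov8/yolov8s.yaml. Comment out the original coco.yaml base config, point to our own dataset config, and add custom training parameters such as epochs, img_size, per_batch_size and the multi-stage data augmentation: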

__BASE__: [
  # '../coco.yaml',
  '../visdrone.yaml',
  './hyp.scratch.low.yaml',
  './yolov8-base.yaml'
]

overflow_still_update: False
network:
  depth_multiple: 0.33  # scales module repeats
  width_multiple: 0.50  # scales convolution channels
  max_channels: 1024

epochs: 10
img_size: 1024
per_batch_size: 16

data:
  num_parallel_workers: 8

  # multi-stage data augment
  train_transforms: {
    stage_epochs: [ 5, 5 ],
    trans_list: [
      [
        { func_name: mosaic, prob: 1.0 },
        { func_name: resample_segments },
        { func_name: random_perspective, prob: 1.0, degrees: 0.0, translate: 0.1, scale: 0.5, shear: 0.0 },
        {func_name: albumentations},
        {func_name: hsv_augment, prob: 1.0, hgain: 0.015, sgain: 0.7, vgain: 0.4},
        {func_name: fliplr, prob: 0.5},
        {func_name: label_norm, xyxy2xywh_: True},
        {func_name: label_pad, padding_size: 160, padding_value: -1},
        {func_name: image_norm, scale: 255.},
        {func_name: image_transpose, bgr2rgb: True, hwc2chw: True}
      ],
      [
        {func_name: letterbox, scaleup: True},
        {func_name: resample_segments},
        {func_name: random_perspective, prob: 1.0, degrees: 0.0, translate: 0.1, scale: 0.5, shear: 0.0},
        {func_name: albumentations},
        {func_name: hsv_augment, prob: 1.0, hgain: 0.015, sgain: 0.7, vgain: 0.4},
        {func_name: fliplr, prob: 0.5},
        {func_name: label_norm, xyxy2xywh_: True},
        {func_name: label_pad, padding_size: 160, padding_value: -1},
        {func_name: image_norm, scale: 255.},
        {func_name: image_transpose, bgr2rgb: True, hwc2chw: True}
      ]]
  }

  test_transforms: [
    {func_name: letterbox, scaleup: False, only_image: True},
    {func_name: image_norm, scale: 255.},
    {func_name: image_transpose, bgr2rgb: True, hwc2chw: True}
  ]
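With stage_epochs: [ 5, 5 ], the intent is that the first list in trans_list (the mosaic-based pipeline) is used for epochs 1 to 5 and the second (the letterbox-based pipeline) for epochs 6 to 10. The minimal sketch below shows that epoch-to-stage lookup. It assumes 1-based epoch numbers and stage lengths that add up to the total number of epochs. stage_for_epoch is a hypothetical helper, not MindYOLO's code.

```python
from bisect import bisect_left
from itertools import accumulate
from typing import Sequence


def stage_for_epoch(epoch: int, stage_epochs: Sequence[int]) -> int:
    """Return the zero-based augmentation stage for a 1-based epoch number."""
    if not stage_epochs:
        raise ValueError("stage_epochs must not be empty")
    boundaries = list(accumulate(stage_epochs))  # [5, 5] -> [5, 10]
    if not 1 <= epoch <= boundaries[-1]:
        raise ValueError(f"epoch {epoch} is outside 1..{boundaries[-1]}")
    # The first cumulative boundary >= epoch identifies the stage: 1-5 -> 0, 6-10 -> 1.
    return bisect_left(boundaries, epoch)
```

Switching to letterbox without mosaic for the final epochs follows the usual YOLOv8 practice of training on undistorted images before finishing.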

- In a terminal, run train.py to train the model. Pass the model config file and select the Ascend NPU as the device target:

python3 train.py --config ./configs/yolov8/yolov8s.yaml --device_target Ascend

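By default, training runs on chip 0. To run it on chip 1 instead, set the DEVICE_ID environment variable. You can do this in the shell with export DEVICE_ID=1, or in Python before MindSpore sets up the device (for example at the top of train.py):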

import os

os.environ["DEVICE_ID"] = "1"  # environment variable values must be strings
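Environment variables are always strings, so the value must be converted to an integer before use. The minimal sketch below reads DEVICE_ID with a fallback and a range check. It assumes the card's two chips appear as device ids 0 and 1, as in the Device column of the npu-smi output above. resolve_device_id is a hypothetical helper.

```python
import os
from typing import Mapping, Optional


def resolve_device_id(
    env: Optional[Mapping[str, str]] = None, default: int = 0, device_count: int = 2
) -> int:
    """Read DEVICE_ID from the environment, falling back to `default`, and validate it."""
    env = os.environ if env is None else env
    raw = env.get("DEVICE_ID", str(default))
    try:
        device_id = int(raw)
    except ValueError:
        raise ValueError(f"DEVICE_ID must be an integer, got {raw!r}") from None
    if not 0 <= device_id < device_count:
        raise ValueError(f"DEVICE_ID={device_id} is outside 0..{device_count - 1}")
    return device_id
```

The result could be passed to ms.set_device("Ascend", resolve_device_id()), the same call that utils.py makes with int(os.getenv("DEVICE_ID", ...)). A mistyped value then fails early with a clear message instead of surfacing later as a device error.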

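If you would rather not set the environment variable, change the default DEVICE_ID value in mindyolo/mindyolo/utils/utils.py instead (set to 1 in the snippet below):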

import os
import random
import yaml
import cv2
from datetime import datetime
import numpy as np

import mindspore as ms
from mindspore import ops, Tensor, nn
from mindspore.communication.management import get_group_size, get_rank, init
from mindspore import ParallelMode

from mindyolo.utils import logger


def set_seed(seed=2):
    np.random.seed(seed)
    random.seed(seed)
    ms.set_seed(seed)


def set_default(args):
    # Set Context
    ms.set_context(mode=args.ms_mode)
    ms.set_recursion_limit(args.max_call_depth)
    if args.ms_mode == 0:
        ms.set_context(jit_config={"jit_level": "O2"})
    if args.device_target == "Ascend":
        # DEVICE_ID falls back to 1 here, i.e. the second 310P chip
        ms.set_device("Ascend", int(os.getenv("DEVICE_ID", 1)))
    ...

Running the training command above produces a log that begins as follows:

2025-10-23 14:48:02,364 [INFO] parse_args:

2025-10-23 14:48:02,364 [INFO] task detect

2025-10-23 14:48:02,364 [INFO] device_target Ascend

2025-10-23 14:48:02,364 [INFO] save_dir ./runs/2025.10.23-14.48.02

2025-10-23 14:48:02,364 [INFO] log_level INFO

2025-10-23 14:48:02,364 [INFO] is_parallel False

2025-10-23 14:48:02,364 [INFO] ms_mode 0

2025-10-23 14:48:02,364 [INFO] max_call_depth 2000

2025-10-23 14:48:02,364 [INFO] ms_amp_level O0

2025-10-23 14:48:02,364 [INFO] keep_loss_fp32 True

2025-10-23 14:48:02,364 [INFO] anchor_base False

2025-10-23 14:48:02,364 [INFO] ms_loss_scaler static

2025-10-23 14:48:02,364 [INFO] ms_loss_scaler_value 1024.0

2025-10-23 14:48:02,364 [INFO] ms_jit True

2025-10-23 14:48:02,364 [INFO] ms_enable_graph_kernel False

2025-10-23 14:48:02,364 [INFO] ms_datasink False

2025-10-23 14:48:02,364 [INFO] overflow_still_update False

2025-10-23 14:48:02,364 [INFO] clip_grad False

2025-10-23 14:48:02,364 [INFO] clip_grad_value 10.0

2025-10-23 14:48:02,364 [INFO] ema True

2025-10-23 14:48:02,364 [INFO] weight

2025-10-23 14:48:02,364 [INFO] ema_weight

2025-10-23 14:48:02,364 [INFO] freeze []

2025-10-23 14:48:02,364 [INFO] epochs 10

2025-10-23 14:48:02,364 [INFO] per_batch_size 16

2025-10-23 14:48:02,364 [INFO] img_size 1024

2025-10-23 14:48:02,364 [INFO] nbs 64

2025-10-23 14:48:02,364 [INFO] accumulate 1

2025-10-23 14:48:02,364 [INFO] auto_accumulate False

2025-10-23 14:48:02,364 [INFO] log_interval 100

2025-10-23 14:48:02,364 [INFO] single_cls False

2025-10-23 14:48:02,364 [INFO] sync_bn False

2025-10-23 14:48:02,364 [INFO] keep_checkpoint_max 100

2025-10-23 14:48:02,364 [INFO] run_eval False

2025-10-23 14:48:02,364 [INFO] run_eval_interval 1

2025-10-23 14:48:02,364 [INFO] conf_thres 0.001

2025-10-23 14:48:02,364 [INFO] iou_thres 0.7

2025-10-23 14:48:02,364 [INFO] conf_free True

2025-10-23 14:48:02,364 [INFO] rect False

2025-10-23 14:48:02,364 [INFO] nms_time_limit 20.0

2025-10-23 14:48:02,364 [INFO] recompute False

2025-10-23 14:48:02,364 [INFO] recompute_layers 0

2025-10-23 14:48:02,364 [INFO] seed 2

2025-10-23 14:48:02,364 [INFO] summary True

2025-10-23 14:48:02,364 [INFO] profiler False

2025-10-23 14:48:02,364 [INFO] profiler_step_num 1

2025-10-23 14:48:02,364 [INFO] opencv_threads_num 0

2025-10-23 14:48:02,364 [INFO] strict_load True

2025-10-23 14:48:02,364 [INFO] enable_modelarts False

2025-10-23 14:48:02,364 [INFO] data_url

2025-10-23 14:48:02,364 [INFO] ckpt_url

2025-10-23 14:48:02,364 [INFO] multi_data_url

2025-10-23 14:48:02,364 [INFO] pretrain_url

2025-10-23 14:48:02,364 [INFO] train_url

2025-10-23 14:48:02,364 [INFO] data_dir /cache/data/

2025-10-23 14:48:02,364 [INFO] ckpt_dir /cache/pretrain_ckpt/

2025-10-23 14:48:02,364 [INFO] data.dataset_name result

2025-10-23 14:48:02,364 [INFO] data.train_set /root/workspace/mindyolo/visdrone_COCO_format/train.txt

2025-10-23 14:48:02,364 [INFO] data.val_set /root/workspace/mindyolo/visdrone_COCO_format/val.txt

2025-10-23 14:48:02,364 [INFO] data.test_set /root/workspace/mindyolo/visdrone_COCO_format/val.txt

2025-10-23 14:48:02,364 [INFO] data.nc 12

2025-10-23 14:48:02,364 [INFO] data.names ['ignored regions', 'pedestrian', 'people', 'bicycle', 'car', 'van', 'truck', 'tricycle', 'awning-tricycle', 'bus', 'motor', 'others']

2025-10-23 14:48:02,364 [INFO] train_transforms.stage_epochs [5, 5]

2025-10-23 14:48:02,364 [INFO] train_transforms.trans_list [[{'func_name': 'mosaic', 'prob': 1.0}, {'func_name': 'resample_segments'}, {'func_name': 'random_perspective', 'prob': 1.0, 'degrees': 0.0, 'translate': 0.1, 'scale': 0.5, 'shear': 0.0}, {'func_name': 'albumentations'}, {'func_name': 'hsv_augment', 'prob': 1.0, 'hgain': 0.015, 'sgain': 0.7, 'vgain': 0.4}, {'func_name': 'fliplr', 'prob': 0.5}, {'func_name': 'label_norm', 'xyxy2xywh_': True}, {'func_name': 'label_pad', 'padding_size': 160, 'padding_value': -1}, {'func_name': 'image_norm', 'scale': 255.0}, {'func_name': 'image_transpose', 'bgr2rgb': True, 'hwc2chw': True}], [{'func_name': 'letterbox', 'scaleup': True}, {'func_name': 'resample_segments'}, {'func_name': 'random_perspective', 'prob': 1.0, 'degrees': 0.0, 'translate': 0.1, 'scale': 0.5, 'shear': 0.0}, {'func_name': 'albumentations'}, {'func_name': 'hsv_augment', 'prob': 1.0, 'hgain': 0.015, 'sgain': 0.7, 'vgain': 0.4}, {'func_name': 'fliplr', 'prob': 0.5}, {'func_name': 'label_norm', 'xyxy2xywh_': True}, {'func_name': 'label_pad', 'padding_size': 160, 'padding_value': -1}, {'func_name': 'image_norm', 'scale': 255.0}, {'func_name': 'image_transpose', 'bgr2rgb': True, 'hwc2chw': True}]]

2025-10-23 14:48:02,364 [INFO] data.test_transforms [{'func_name': 'letterbox', 'scaleup': False, 'only_image': True}, {'func_name': 'image_norm', 'scale': 255.0}, {'func_name': 'image_transpose', 'bgr2rgb': True, 'hwc2chw': True}]

2025-10-23 14:48:02,364 [INFO] data.num_parallel_workers 8

2025-10-23 14:48:02,364 [INFO] optimizer.optimizer momentum

2025-10-23 14:48:02,364 [INFO] optimizer.lr_init 0.01

2025-10-23 14:48:02,364 [INFO] optimizer.momentum 0.937

2025-10-23 14:48:02,364 [INFO] optimizer.nesterov True

2025-10-23 14:48:02,364 [INFO] optimizer.loss_scale 1.0

2025-10-23 14:48:02,364 [INFO] optimizer.warmup_epochs 3

2025-10-23 14:48:02,364 [INFO] optimizer.warmup_momentum 0.8

2025-10-23 14:48:02,364 [INFO] optimizer.warmup_bias_lr 0.1

2025-10-23 14:48:02,364 [INFO] optimizer.min_warmup_step 1000

2025-10-23 14:48:02,364 [INFO] optimizer.group_param yolov8

2025-10-23 14:48:02,364 [INFO] optimizer.gp_weight_decay 0.0005

2025-10-23 14:48:02,364 [INFO] optimizer.start_factor 1.0

2025-10-23 14:48:02,364 [INFO] optimizer.end_factor 0.01

2025-10-23 14:48:02,364 [INFO] optimizer.epochs 10

2025-10-23 14:48:02,364 [INFO] optimizer.nbs 64

2025-10-23 14:48:02,364 [INFO] optimizer.accumulate 1

2025-10-23 14:48:02,364 [INFO] optimizer.total_batch_size 16

2025-10-23 14:48:02,364 [INFO] loss.name YOLOv8Loss

2025-10-23 14:48:02,364 [INFO] loss.box 7.5

2025-10-23 14:48:02,364 [INFO] loss.cls 0.5

2025-10-23 14:48:02,364 [INFO] loss.dfl 1.5

2025-10-23 14:48:02,364 [INFO] loss.reg_max 16

2025-10-23 14:48:02,364 [INFO] network.model_name yolov8

2025-10-23 14:48:02,364 [INFO] network.nc 80

2025-10-23 14:48:02,364 [INFO] network.reg_max 16

2025-10-23 14:48:02,364 [INFO] network.stride [8, 16, 32]

2025-10-23 14:48:02,364 [INFO] network.backbone [[-1, 1, 'ConvNormAct', [64, 3, 2]], [-1, 1, 'ConvNormAct', [128, 3, 2]], [-1, 3, 'C2f', [128, True]], [-1, 1, 'ConvNormAct', [256, 3, 2]], [-1, 6, 'C2f', [256, True]], [-1, 1, 'ConvNormAct', [512, 3, 2]], [-1, 6, 'C2f', [512, True]], [-1, 1, 'ConvNormAct', [1024, 3, 2]], [-1, 3, 'C2f', [1024, True]], [-1, 1, 'SPPF', [1024, 5]]]

2025-10-23 14:48:02,364 [INFO] network.head [[-1, 1, 'Upsample', ['None', 2, 'nearest']], [[-1, 6], 1, 'Concat', [1]], [-1, 3, 'C2f', [512]], [-1, 1, 'Upsample', ['None', 2, 'nearest']], [[-1, 4], 1, 'Concat', [1]], [-1, 3, 'C2f', [256]], [-1, 1, 'ConvNormAct', [256, 3, 2]], [[-1, 12], 1, 'Concat', [1]], [-1, 3, 'C2f', [512]], [-1, 1, 'ConvNormAct', [512, 3, 2]], [[-1, 9], 1, 'Concat', [1]], [-1, 3, 'C2f', [1024]], [[15, 18, 21], 1, 'YOLOv8Head', ['nc', 'reg_max', 'stride']]]

2025-10-23 14:48:02,364 [INFO] network.depth_multiple 0.33

2025-10-23 14:48:02,364 [INFO] network.width_multiple 0.5

2025-10-23 14:48:02,364 [INFO] network.max_channels 1024

2025-10-23 14:48:02,364 [INFO] config ./configs/yolov8/yolov8s.yaml

2025-10-23 14:48:02,364 [INFO] rank 0

2025-10-23 14:48:02,364 [INFO] rank_size 1

2025-10-23 14:48:02,364 [INFO] total_batch_size 16

2025-10-23 14:48:02,364 [INFO] callback []

2025-10-23 14:48:02,364 [INFO]

2025-10-23 14:48:02,365 [INFO] Please check the above information for the configurations

2025-10-23 14:48:02,441 [WARNING] Parse Model, args: nearest, keep str type

2025-10-23 14:48:02,451 [WARNING] Parse Model, args: nearest, keep str type

2025-10-23 14:48:02,528 [INFO] number of network params, total: 11.160279M, trainable: 11.140228M

[WARNING] GE_ADPT(336686,7ff4350e8740,python3):2025-10-23-14:48:13.472.732 [mindspore/ops/kernel/ascend/acl_ir/op_api_exec.cc:169] GetAscendDefaultCustomPath] Checking whether the so exists or if permission to access it is available: /usr/local/Ascend/ascend-toolkit/latest/opp/vendors/customize_vision/op_api/lib/libcust_opapi.so

2025-10-23 14:48:14,547 [WARNING] Parse Model, args: nearest, keep str type

2025-10-23 14:48:14,558 [WARNING] Parse Model, args: nearest, keep str type

2025-10-23 14:48:14,646 [INFO] number of network params, total: 11.160279M, trainable: 11.140228M

.2025-10-23 14:48:30,416 [INFO] ema_weight not exist, default pretrain weight is currently used.

2025-10-23 14:48:30,421 [INFO] No dataset cache available, caching now...

Scanning images: 0%| | 0/6471 [00:00<?, ?it/s]WARNING ⚠️ /root/workspace/mindyolo/visdrone_COCO_format/train/images/000000000335.jpg: 1 duplicate labels removed

Scanning '/root/workspace/mindyolo/visdrone_COCO_format/train.cache' images and labels... 397 found, 0 missing, 0 empty, 0 corrupted: 6%|████ | 397/6471 [00:00<00:01, 3960.91it/s]WARNING ⚠️ /root/workspace/mindyolo/visdrone_COCO_format/train/images/000000000427.jpg: 1 duplicate labels removed

Scanning '/root/workspace/mindyolo/visdrone_COCO_format/train.cache' images and labels... 1261 found, 0 missing, 0 empty, 0 corrupted: 19%|████████████▋ | 1261/6471 [00:00<00:01, 4238.38it/s]WARNING ⚠️ /root/workspace/mindyolo/visdrone_COCO_format/train/images/000000001492.jpg: 1 duplicate labels removed

Scanning '/root/workspace/mindyolo/visdrone_COCO_format/train.cache' images and labels... 3866 found, 0 missing, 0 empty, 0 corrupted: 60%|██████████████████████████████████████▊ | 3866/6471 [00:00<00:00, 4332.85it/s]WARNING ⚠️ /root/workspace/mindyolo/visdrone_COCO_format/train/images/000000003868.jpg: 1 duplicate labels removed

Scanning '/root/workspace/mindyolo/visdrone_COCO_format/train.cache' images and labels... 5607 found, 0 missing, 0 empty, 0 corrupted: 87%|████████████████████████████████████████████████████████▎ | 5607/6471 [00:01<00:00, 4337.04it/s]WARNING ⚠️ /root/workspace/mindyolo/visdrone_COCO_format/train/images/000000005742.jpg: 1 duplicate labels removed

Scanning '/root/workspace/mindyolo/visdrone_COCO_format/train.cache' images and labels... 6471 found, 0 missing, 0 empty, 0 corrupted: 100%|█████████████████████████████████████████████████████████████████| 6471/6471 [00:01<00:00, 4307.45it/s]

2025-10-23 14:48:32,028 [INFO] New cache created: /root/workspace/mindyolo/visdrone_COCO_format/train.cache.npy

2025-10-23 14:48:32,029 [INFO] Dataset caching success.

2025-10-23 14:48:32,051 [INFO] Dataloader num parallel workers: [8]

2025-10-23 14:48:32,135 [INFO] Dataset Cache file hash/version check success.

2025-10-23 14:48:32,135 [INFO] Load dataset cache from [/root/workspace/mindyolo/visdrone_COCO_format/train.cache.npy] success.

Scanning '/root/workspace/mindyolo/visdrone_COCO_format/train.cache.npy' images and labels... 6471 found, 0 missing, 0 empty, 0 corrupted: 100%|███████████████████████████████████████████████████████████████████████| 6471/6471 [00:00<?, ?it/s]

2025-10-23 14:48:32,157 [INFO] Dataloader num parallel workers: [8]

2025-10-23 14:48:32,273 [INFO] Registry(name=callback, total=4)

2025-10-23 14:48:32,273 [INFO] (0): YoloxSwitchTrain in mindyolo/utils/callback.py

2025-10-23 14:48:32,273 [INFO] (1): EvalWhileTrain in mindyolo/utils/callback.py

2025-10-23 14:48:32,273 [INFO] (2): SummaryCallback in mindyolo/utils/callback.py

2025-10-23 14:48:32,273 [INFO] (3): ProfilerCallback in mindyolo/utils/callback.py

2025-10-23 14:48:32,273 [INFO]

2025-10-23 14:48:32,276 [INFO] got 1 active callback as follows:

2025-10-23 14:48:32,276 [INFO] SummaryCallback()

2025-10-23 14:48:32,276 [WARNING] The first epoch will be compiled for the graph, which may take a long time; You can come back later :).

albumentations: Blur(p=0.01, blur_limit=(3, 7)), MedianBlur(p=0.01, blur_limit=(3, 7)), ToGray(p=0.01), CLAHE(p=0.01, clip_limit=(1, 4.0), tile_grid_size=(8, 8))

albumentations: Blur(p=0.01, blur_limit=(3, 7)), MedianBlur(p=0.01, blur_limit=(3, 7)), ToGray(p=0.01), CLAHE(p=0.01, clip_limit=(1, 4.0), tile_grid_size=(8, 8))

albumentations: Blur(p=0.01, blur_limit=(3, 7)), MedianBlur(p=0.01, blur_limit=(3, 7)), ToGray(p=0.01), CLAHE(p=0.01, clip_limit=(1, 4.0), tile_grid_size=(8, 8))

albumentations: Blur(p=0.01, blur_limit=(3, 7)), MedianBlur(p=0.01, blur_limit=(3, 7)), ToGray(p=0.01), CLAHE(p=0.01, clip_limit=(1, 4.0), tile_grid_size=(8, 8))

[INFO] albumentations load success

albumentations: Blur(p=0.01, blur_limit=(3, 7)), MedianBlur(p=0.01, blur_limit=(3, 7)), ToGray(p=0.01), CLAHE(p=0.01, clip_limit=(1, 4.0), tile_grid_size=(8, 8))

[INFO] albumentations load success

albumentations: Blur(p=0.01, blur_limit=(3, 7)), MedianBlur(p=0.01, blur_limit=(3, 7)), ToGray(p=0.01), CLAHE(p=0.01, clip_limit=(1, 4.0), tile_grid_size=(8, 8))

albumentations: Blur(p=0.01, blur_limit=(3, 7)), MedianBlur(p=0.01, blur_limit=(3, 7)), ToGray(p=0.01), CLAHE(p=0.01, clip_limit=(1, 4.0), tile_grid_size=(8, 8))

albumentations: Blur(p=0.01, blur_limit=(3, 7)), MedianBlur(p=0.01, blur_limit=(3, 7)), ToGray(p=0.01), CLAHE(p=0.01, clip_limit=(1, 4.0), tile_grid_size=(8, 8))

[INFO] albumentations load success

[INFO] albumentations load success

[INFO] albumentations load success

[INFO] albumentations load success

[INFO] albumentations load success

[INFO] albumentations load success

albumentations: Blur(p=0.01, blur_limit=(3, 7)), MedianBlur(p=0.01, blur_limit=(3, 7)), ToGray(p=0.01), CLAHE(p=0.01, clip_limit=(1, 4.0), tile_grid_size=(8, 8))

[INFO] albumentations load success

albumentations: Blur(p=0.01, blur_limit=(3, 7)), MedianBlur(p=0.01, blur_limit=(3, 7)), ToGray(p=0.01), CLAHE(p=0.01, clip_limit=(1, 4.0), tile_grid_size=(8, 8))

[INFO] albumentations load success

albumentations: Blur(p=0.01, blur_limit=(3, 7)), MedianBlur(p=0.01, blur_limit=(3, 7)), ToGray(p=0.01), CLAHE(p=0.01, clip_limit=(1, 4.0), tile_grid_size=(8, 8))

[INFO] albumentations load success

albumentations: Blur(p=0.01, blur_limit=(3, 7)), MedianBlur(p=0.01, blur_limit=(3, 7)), ToGray(p=0.01), CLAHE(p=0.01, clip_limit=(1, 4.0), tile_grid_size=(8, 8))

[INFO] albumentations load success

albumentations: Blur(p=0.01, blur_limit=(3, 7)), MedianBlur(p=0.01, blur_limit=(3, 7)), ToGray(p=0.01), CLAHE(p=0.01, clip_limit=(1, 4.0), tile_grid_size=(8, 8))

[INFO] albumentations load success

albumentations: Blur(p=0.01, blur_limit=(3, 7)), MedianBlur(p=0.01, blur_limit=(3, 7)), ToGray(p=0.01), CLAHE(p=0.01, clip_limit=(1, 4.0), tile_grid_size=(8, 8))

[INFO] albumentations load success

albumentations: Blur(p=0.01, blur_limit=(3, 7)), MedianBlur(p=0.01, blur_limit=(3, 7)), ToGray(p=0.01), CLAHE(p=0.01, clip_limit=(1, 4.0), tile_grid_size=(8, 8))

[INFO] albumentations load success

albumentations: Blur(p=0.01, blur_limit=(3, 7)), MedianBlur(p=0.01, blur_limit=(3, 7)), ToGray(p=0.01), CLAHE(p=0.01, clip_limit=(1, 4.0), tile_grid_size=(8, 8))

[INFO] albumentations load success

.........2025-10-23 14:52:54,293 [INFO] Epoch 1/10, Step 100/404, imgsize (1024, 1024), loss: 5.5585, lbox: 3.2052, lcls: 0.4855, dfl: 1.8678, cur_lr: 0.09257426112890244

2025-10-23 14:52:55,203 [INFO] Epoch 1/10, Step 100/404, step time: 2629.27 ms

2025-10-23 14:55:40,115 [INFO] Epoch 1/10, Step 200/404, imgsize (1024, 1024), loss: 4.5693, lbox: 2.5884, lcls: 0.4230, dfl: 1.5578, cur_lr: 0.08514851331710815

2025-10-23 14:55:40,138 [INFO] Epoch 1/10, Step 200/404, step time: 1649.36 ms

2025-10-23 14:58:25,055 [INFO] Epoch 1/10, Step 300/404, imgsize (1024, 1024), loss: 3.9681, lbox: 2.1428, lcls: 0.3853, dfl: 1.4400, cur_lr: 0.07772277295589447

2025-10-23 14:58:25,078 [INFO] Epoch 1/10, Step 300/404, step time: 1649.39 ms

2025-10-23 15:01:10,020 [INFO] Epoch 1/10, Step 400/404, imgsize (1024, 1024), loss: 3.6795, lbox: 2.0528, lcls: 0.3339, dfl: 1.2929, cur_lr: 0.07029703259468079

2025-10-23 15:01:10,044 [INFO] Epoch 1/10, Step 400/404, step time: 1649.65 ms

2025-10-23 15:01:17,111 [INFO] Saving model to ./runs/2025.10.23-14.48.02/weights/yolov8s-1_404.ckpt

2025-10-23 15:01:17,111 [INFO] Epoch 1/10, epoch time: 12.75 min.

2025-10-23 15:04:02,010 [INFO] Epoch 2/10, Step 100/404, imgsize (1024, 1024), loss: 3.5361, lbox: 1.9678, lcls: 0.3183, dfl: 1.2500, cur_lr: 0.062162574380636215

2025-10-23 15:04:02,018 [INFO] Epoch 2/10, Step 100/404, step time: 1649.07 ms

2025-10-23 15:06:46,939 [INFO] Epoch 2/10, Step 200/404, imgsize (1024, 1024), loss: 3.3767, lbox: 1.8395, lcls: 0.3042, dfl: 1.2329, cur_lr: 0.05465514957904816

2025-10-23 15:06:46,947 [INFO] Epoch 2/10, Step 200/404, step time: 1649.28 ms

2025-10-23 15:09:31,885 [INFO] Epoch 2/10, Step 300/404, imgsize (1024, 1024), loss: 3.3604, lbox: 1.8753, lcls: 0.3134, dfl: 1.1718, cur_lr: 0.0471477210521698

2025-10-23 15:09:31,894 [INFO] Epoch 2/10, Step 300/404, step time: 1649.46 ms

2025-10-23 15:12:16,806 [INFO] Epoch 2/10, Step 400/404, imgsize (1024, 1024), loss: 3.2902, lbox: 1.8262, lcls: 0.2795, dfl: 1.1846, cur_lr: 0.03964029625058174

2025-10-23 15:12:16,814 [INFO] Epoch 2/10, Step 400/404, step time: 1649.20 ms

2025-10-23 15:12:23,860 [INFO] Saving model to ./runs/2025.10.23-14.48.02/weights/yolov8s-2_404.ckpt

2025-10-23 15:12:23,860 [INFO] Epoch 2/10, epoch time: 11.11 min.

2025-10-23 15:15:08,782 [INFO] Epoch 3/10, Step 100/404, imgsize (1024, 1024), loss: 3.3220, lbox: 1.7991, lcls: 0.3124, dfl: 1.2106, cur_lr: 0.031090890988707542

2025-10-23 15:15:08,791 [INFO] Epoch 3/10, Step 100/404, step time: 1649.30 ms

2025-10-23 15:17:53,703 [INFO] Epoch 3/10, Step 200/404, imgsize (1024, 1024), loss: 3.1162, lbox: 1.6879, lcls: 0.2824, dfl: 1.1460, cur_lr: 0.02350178174674511

2025-10-23 15:17:53,711 [INFO] Epoch 3/10, Step 200/404, step time: 1649.20 ms

2025-10-23 15:20:38,631 [INFO] Epoch 3/10, Step 300/404, imgsize (1024, 1024), loss: 3.0332, lbox: 1.6024, lcls: 0.2703, dfl: 1.1605, cur_lr: 0.015912672504782677

2025-10-23 15:20:38,639 [INFO] Epoch 3/10, Step 300/404, step time: 1649.28 ms

2025-10-23 15:23:23,580 [INFO] Epoch 3/10, Step 400/404, imgsize (1024, 1024), loss: 3.1371, lbox: 1.7095, lcls: 0.2808, dfl: 1.1469, cur_lr: 0.008323564194142818

2025-10-23 15:23:23,589 [INFO] Epoch 3/10, Step 400/404, step time: 1649.49 ms

2025-10-23 15:23:30,617 [INFO] Saving model to ./runs/2025.10.23-14.48.02/weights/yolov8s-3_404.ckpt

2025-10-23 15:23:30,617 [INFO] Epoch 3/10, epoch time: 11.11 min.

2025-10-23 15:26:15,527 [INFO] Epoch 4/10, Step 100/404, imgsize (1024, 1024), loss: 3.2965, lbox: 1.8179, lcls: 0.2614, dfl: 1.2172, cur_lr: 0.007029999978840351

2025-10-23 15:26:15,535 [INFO] Epoch 4/10, Step 100/404, step time: 1649.18 ms

2025-10-23 15:29:00,451 [INFO] Epoch 4/10, Step 200/404, imgsize (1024, 1024), loss: 3.1855, lbox: 1.7697, lcls: 0.2504, dfl: 1.1654, cur_lr: 0.007029999978840351

2025-10-23 15:29:00,459 [INFO] Epoch 4/10, Step 200/404, step time: 1649.24 ms

2025-10-23 15:31:45,369 [INFO] Epoch 4/10, Step 300/404, imgsize (1024, 1024), loss: 2.9900, lbox: 1.6270, lcls: 0.2307, dfl: 1.1323, cur_lr: 0.007029999978840351

2025-10-23 15:31:45,378 [INFO] Epoch 4/10, Step 300/404, step time: 1649.18 ms

2025-10-23 15:34:30,277 [INFO] Epoch 4/10, Step 400/404, imgsize (1024, 1024), loss: 3.1742, lbox: 1.7506, lcls: 0.2590, dfl: 1.1646, cur_lr: 0.007029999978840351

2025-10-23 15:34:30,285 [INFO] Epoch 4/10, Step 400/404, step time: 1649.07 ms

2025-10-23 15:34:37,315 [INFO] Saving model to ./runs/2025.10.23-14.48.02/weights/yolov8s-4_404.ckpt

2025-10-23 15:34:37,316 [INFO] Epoch 4/10, epoch time: 11.11 min.

2025-10-23 15:37:22,195 [INFO] Epoch 5/10, Step 100/404, imgsize (1024, 1024), loss: 2.9632, lbox: 1.6123, lcls: 0.2424, dfl: 1.1085, cur_lr: 0.006039999891072512

2025-10-23 15:37:22,204 [INFO] Epoch 5/10, Step 100/404, step time: 1648.88 ms

2025-10-23 15:40:07,094 [INFO] Epoch 5/10, Step 200/404, imgsize (1024, 1024), loss: 2.7776, lbox: 1.4777, lcls: 0.2025, dfl: 1.0975, cur_lr: 0.006039999891072512

2025-10-23 15:40:07,103 [INFO] Epoch 5/10, Step 200/404, step time: 1648.99 ms

2025-10-23 15:42:52,021 [INFO] Epoch 5/10, Step 300/404, imgsize (1024, 1024), loss: 2.7209, lbox: 1.4253, lcls: 0.2130, dfl: 1.0826, cur_lr: 0.006039999891072512

2025-10-23 15:42:52,029 [INFO] Epoch 5/10, Step 300/404, step time: 1649.26 ms

2025-10-23 15:45:36,965 [INFO] Epoch 5/10, Step 400/404, imgsize (1024, 1024), loss: 2.7360, lbox: 1.4817, lcls: 0.2157, dfl: 1.0387, cur_lr: 0.006039999891072512

2025-10-23 15:45:36,973 [INFO] Epoch 5/10, Step 400/404, step time: 1649.44 ms

2025-10-23 15:45:44,037 [INFO] Saving model to ./runs/2025.10.23-14.48.02/weights/yolov8s-5_404.ckpt

2025-10-23 15:45:44,037 [INFO] Epoch 5/10, epoch time: 11.11 min.

2025-10-23 15:48:28,914 [INFO] Epoch 6/10, Step 100/404, imgsize (1024, 1024), loss: 2.6675, lbox: 1.4472, lcls: 0.2042, dfl: 1.0161, cur_lr: 0.005049999803304672

2025-10-23 15:48:28,923 [INFO] Epoch 6/10, Step 100/404, step time: 1648.85 ms

2025-10-23 15:51:13,798 [INFO] Epoch 6/10, Step 200/404, imgsize (1024, 1024), loss: 2.7114, lbox: 1.4235, lcls: 0.1986, dfl: 1.0893, cur_lr: 0.005049999803304672

2025-10-23 15:51:13,807 [INFO] Epoch 6/10, Step 200/404, step time: 1648.84 ms

2025-10-23 15:53:58,688 [INFO] Epoch 6/10, Step 300/404, imgsize (1024, 1024), loss: 2.6783, lbox: 1.4169, lcls: 0.1985, dfl: 1.0629, cur_lr: 0.005049999803304672

2025-10-23 15:53:58,697 [INFO] Epoch 6/10, Step 300/404, step time: 1648.90 ms

2025-10-23 15:56:43,578 [INFO] Epoch 6/10, Step 400/404, imgsize (1024, 1024), loss: 2.7539, lbox: 1.4734, lcls: 0.2037, dfl: 1.0768, cur_lr: 0.005049999803304672

2025-10-23 15:56:43,586 [INFO] Epoch 6/10, Step 400/404, step time: 1648.89 ms

2025-10-23 15:56:50,613 [INFO] Saving model to ./runs/2025.10.23-14.48.02/weights/yolov8s-6_404.ckpt

2025-10-23 15:56:50,613 [INFO] Epoch 6/10, epoch time: 11.11 min.

2025-10-23 15:59:35,561 [INFO] Epoch 7/10, Step 100/404, imgsize (1024, 1024), loss: 2.9109, lbox: 1.6203, lcls: 0.2210, dfl: 1.0696, cur_lr: 0.00406000018119812

2025-10-23 15:59:35,569 [INFO] Epoch 7/10, Step 100/404, step time: 1649.56 ms

2025-10-23 16:02:20,470 [INFO] Epoch 7/10, Step 200/404, imgsize (1024, 1024), loss: 2.6941, lbox: 1.4727, lcls: 0.2068, dfl: 1.0147, cur_lr: 0.00406000018119812

2025-10-23 16:02:20,479 [INFO] Epoch 7/10, Step 200/404, step time: 1649.10 ms

2025-10-23 16:05:05,384 [INFO] Epoch 7/10, Step 300/404, imgsize (1024, 1024), loss: 2.8098, lbox: 1.4810, lcls: 0.2188, dfl: 1.1101, cur_lr: 0.00406000018119812

2025-10-23 16:05:05,391 [INFO] Epoch 7/10, Step 300/404, step time: 1649.12 ms

2025-10-23 16:07:50,302 [INFO] Epoch 7/10, Step 400/404, imgsize (1024, 1024), loss: 2.8426, lbox: 1.5529, lcls: 0.2108, dfl: 1.0788, cur_lr: 0.00406000018119812

2025-10-23 16:07:50,310 [INFO] Epoch 7/10, Step 400/404, step time: 1649.18 ms

2025-10-23 16:07:57,341 [INFO] Saving model to ./runs/2025.10.23-14.48.02/weights/yolov8s-7_404.ckpt

2025-10-23 16:07:57,342 [INFO] Epoch 7/10, epoch time: 11.11 min.

2025-10-23 16:10:42,225 [INFO] Epoch 8/10, Step 100/404, imgsize (1024, 1024), loss: 2.4095, lbox: 1.2257, lcls: 0.1704, dfl: 1.0134, cur_lr: 0.0030700000934302807

2025-10-23 16:10:42,233 [INFO] Epoch 8/10, Step 100/404, step time: 1648.92 ms

2025-10-23 16:13:27,126 [INFO] Epoch 8/10, Step 200/404, imgsize (1024, 1024), loss: 2.6034, lbox: 1.3788, lcls: 0.1872, dfl: 1.0374, cur_lr: 0.0030700000934302807

2025-10-23 16:13:27,134 [INFO] Epoch 8/10, Step 200/404, step time: 1649.00 ms

2025-10-23 16:16:12,032 [INFO] Epoch 8/10, Step 300/404, imgsize (1024, 1024), loss: 2.6074, lbox: 1.3916, lcls: 0.1787, dfl: 1.0371, cur_lr: 0.0030700000934302807

2025-10-23 16:16:12,041 [INFO] Epoch 8/10, Step 300/404, step time: 1649.07 ms

2025-10-23 16:18:56,946 [INFO] Epoch 8/10, Step 400/404, imgsize (1024, 1024), loss: 2.8867, lbox: 1.4981, lcls: 0.2189, dfl: 1.1697, cur_lr: 0.0030700000934302807

2025-10-23 16:18:56,954 [INFO] Epoch 8/10, Step 400/404, step time: 1649.13 ms

2025-10-23 16:19:03,973 [INFO] Saving model to ./runs/2025.10.23-14.48.02/weights/yolov8s-8_404.ckpt

2025-10-23 16:19:03,973 [INFO] Epoch 8/10, epoch time: 11.11 min.

2025-10-23 16:21:48,883 [INFO] Epoch 9/10, Step 100/404, imgsize (1024, 1024), loss: 2.8544, lbox: 1.6248, lcls: 0.2181, dfl: 1.0115, cur_lr: 0.0020800000056624413

2025-10-23 16:21:48,891 [INFO] Epoch 9/10, Step 100/404, step time: 1649.18 ms

2025-10-23 16:24:33,791 [INFO] Epoch 9/10, Step 200/404, imgsize (1024, 1024), loss: 2.9393, lbox: 1.6026, lcls: 0.2223, dfl: 1.1145, cur_lr: 0.0020800000056624413

2025-10-23 16:24:33,799 [INFO] Epoch 9/10, Step 200/404, step time: 1649.08 ms

2025-10-23 16:27:18,695 [INFO] Epoch 9/10, Step 300/404, imgsize (1024, 1024), loss: 2.4632, lbox: 1.2884, lcls: 0.1701, dfl: 1.0047, cur_lr: 0.0020800000056624413

2025-10-23 16:27:18,703 [INFO] Epoch 9/10, Step 300/404, step time: 1649.04 ms

2025-10-23 16:30:03,567 [INFO] Epoch 9/10, Step 400/404, imgsize (1024, 1024), loss: 2.7216, lbox: 1.4867, lcls: 0.2002, dfl: 1.0346, cur_lr: 0.0020800000056624413

2025-10-23 16:30:03,575 [INFO] Epoch 9/10, Step 400/404, step time: 1648.72 ms

2025-10-23 16:30:10,627 [INFO] Saving model to ./runs/2025.10.23-14.48.02/weights/yolov8s-9_404.ckpt

2025-10-23 16:30:10,627 [INFO] Epoch 9/10, epoch time: 11.11 min.

2025-10-23 16:32:55,537 [INFO] Epoch 10/10, Step 100/404, imgsize (1024, 1024), loss: 2.5899, lbox: 1.4239, lcls: 0.1668, dfl: 0.9992, cur_lr: 0.0010900000343099236

2025-10-23 16:32:55,545 [INFO] Epoch 10/10, Step 100/404, step time: 1649.18 ms

2025-10-23 16:35:40,433 [INFO] Epoch 10/10, Step 200/404, imgsize (1024, 1024), loss: 2.5535, lbox: 1.3745, lcls: 0.1813, dfl: 0.9976, cur_lr: 0.0010900000343099236

2025-10-23 16:35:40,441 [INFO] Epoch 10/10, Step 200/404, step time: 1648.95 ms

2025-10-23 16:38:25,358 [INFO] Epoch 10/10, Step 300/404, imgsize (1024, 1024), loss: 2.4509, lbox: 1.2441, lcls: 0.1717, dfl: 1.0351, cur_lr: 0.0010900000343099236

2025-10-23 16:38:25,366 [INFO] Epoch 10/10, Step 300/404, step time: 1649.25 ms

2025-10-23 16:41:10,260 [INFO] Epoch 10/10, Step 400/404, imgsize (1024, 1024), loss: 2.6832, lbox: 1.4217, lcls: 0.1896, dfl: 1.0719, cur_lr: 0.0010900000343099236

2025-10-23 16:41:10,268 [INFO] Epoch 10/10, Step 400/404, step time: 1649.02 ms

2025-10-23 16:41:17,324 [INFO] Saving model to ./runs/2025.10.23-14.48.02/weights/yolov8s-10_404.ckpt

2025-10-23 16:41:17,324 [INFO] Epoch 10/10, epoch time: 11.11 min.

2025-10-23 16:41:17,742 [INFO] End Train.

2025-10-23 16:41:18,446 [INFO] Training completed.
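A long log like the one above is easier to check with a script than by eye. The sketch below parses the per-step lines and checks two things. First, the printed loss equals lbox + lcls + dfl, up to the last printed digit, in the lines I checked. Second, cur_lr can be compared with a schedule I reconstructed from the optimizer settings, which test.py prints in the next section (lr_init 0.01, warmup_epochs 3, min_warmup_step 1000, warmup_bias_lr 0.1, start_factor 1.0, end_factor 0.01, epochs 10).

The schedule is an assumption inferred from the logged numbers, not copied from MindYolo's source. It has two parts:

- a linear per-epoch decay from lr_init to lr_init × end_factor;
- a linear warmup over max(3 × 404, 1000) = 1212 steps that starts at warmup_bias_lr.

The warmup part would explain why cur_lr falls, rather than rises, during epochs 1 to 3. All function names here are hypothetical.

```python
import re
from statistics import mean

# Per-step line, e.g. "Epoch 1/10, Step 100/404, imgsize (1024, 1024), loss: 5.5585, lbox: ..., cur_lr: 0.0925..."
STEP_RE = re.compile(
    r"Epoch (\d+)/(\d+), Step (\d+)/(\d+), imgsize \(\d+, \d+\), "
    r"loss: ([\d.]+), lbox: ([\d.]+), lcls: ([\d.]+), dfl: ([\d.]+), cur_lr: ([\d.eE+-]+)"
)
# End-of-epoch line, e.g. "Epoch 1/10, epoch time: 12.75 min."
EPOCH_TIME_RE = re.compile(r"Epoch (\d+)/\d+, epoch time: ([\d.]+) min")


def parse_train_log(lines):
    """Collect per-step records and per-epoch wall-clock minutes from log lines."""
    steps, epoch_minutes = [], {}
    for line in lines:
        m = STEP_RE.search(line)
        if m:
            epoch, epochs, step, steps_per_epoch = map(int, m.group(1, 2, 3, 4))
            loss, lbox, lcls, dfl, lr = map(float, m.group(5, 6, 7, 8, 9))
            steps.append({"epoch": epoch, "epochs": epochs, "step": step,
                          "steps_per_epoch": steps_per_epoch, "loss": loss,
                          "lbox": lbox, "lcls": lcls, "dfl": dfl, "lr": lr})
            continue
        m = EPOCH_TIME_RE.search(line)
        if m:
            epoch_minutes[int(m.group(1))] = float(m.group(2))
    return steps, epoch_minutes


def reconstructed_lr(epoch, step, steps_per_epoch, epochs,
                     lr_init=0.01, warmup_epochs=3, min_warmup_step=1000,
                     warmup_bias_lr=0.1, start_factor=1.0, end_factor=0.01):
    """ASSUMED schedule inferred from the log, not taken from MindYolo's code."""
    # Linear per-epoch decay from start_factor to end_factor (epoch is 1-based in the log).
    factor = start_factor + (end_factor - start_factor) * (epoch - 1) / epochs
    target = lr_init * factor
    warmup_steps = max(warmup_epochs * steps_per_epoch, min_warmup_step)
    global_step = (epoch - 1) * steps_per_epoch + step
    if global_step >= warmup_steps:
        return target
    # During warmup the logged lr moves linearly from warmup_bias_lr toward the target.
    return warmup_bias_lr + (target - warmup_bias_lr) * global_step / warmup_steps


def check_log(steps, epoch_minutes):
    """Return (max |loss - sum of parts|, max |cur_lr - reconstructed|, mean epoch minutes)."""
    loss_gap = max(abs(s["loss"] - (s["lbox"] + s["lcls"] + s["dfl"])) for s in steps)
    lr_gap = max(abs(s["lr"] - reconstructed_lr(s["epoch"], s["step"],
                                                s["steps_per_epoch"], s["epochs"]))
                 for s in steps)
    avg_min = mean(epoch_minutes.values()) if epoch_minutes else float("nan")
    return loss_gap, lr_gap, avg_min
```

For a saved log, call `check_log(*parse_train_log(open("train.log")))`. Here `train.log` is a placeholder for wherever you redirected the training output.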

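Each epoch took about 11 minutes on average. The first epoch took 12.75 min because it includes graph compilation, and every later epoch took 11.11 min. During training we can also check the AI Core utilization and memory usage: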

npu-smi info

+--------------------------------------------------------------------------------------------------------+

| npu-smi v1.0 Version: 24.1.rc4.b999 |

+-------------------------------+-----------------+------------------------------------------------------+

| NPU Name | Health | Power(W) Temp(C) Hugepages-Usage(page) |

| Chip Device | Bus-Id | AICore(%) Memory-Usage(MB) |

+===============================+=================+======================================================+

| 30208 310P1 | OK | NA 52 11372 / 11372 |

| 0 0 | 0000:77:00.0 | 99 24288/ 89608 |

+-------------------------------+-----------------+------------------------------------------------------+

| 30208 310P1 | OK | NA 42 0 / 0 |

| 1 1 | 0000:77:00.0 | 0 1576 / 89085 |

+===============================+=================+======================================================+

+-------------------------------+-----------------+------------------------------------------------------+

| NPU Chip | Process id | Process name | Process memory(MB) |

+===============================+=================+======================================================+

| 30208 0 | 336686 | python3 | 22835 |

+===============================+=================+======================================================+
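The AICore(%) and Memory-Usage(MB) columns can also be extracted from the table with a script instead of being read by eye. This is a minimal sketch that assumes the row layout shown above: the second row of each NPU block holds Chip, Device, Bus-Id, AICore % and used/total memory. Other npu-smi versions may format this row differently, and the function names are my own.

```python
import re
import subprocess
import time

# ASSUMED row layout: "| 0 0 | 0000:77:00.0 | 99 24288/ 89608 |"
#                      chip device | bus id | AICore%  used / total MB
CHIP_ROW_RE = re.compile(
    r"^\|\s*(\d+)\s+(\d+)\s*\|\s*([0-9A-Fa-f:.]+)\s*\|\s*(\d+)\s+(\d+)\s*/\s*(\d+)\s*\|"
)


def parse_npu_smi(text):
    """Return one dict per chip with AICore utilization and memory usage."""
    chips = []
    for line in text.splitlines():
        m = CHIP_ROW_RE.match(line.strip())
        if m:
            chip, device, bus_id, aicore, mem_used, mem_total = m.groups()
            chips.append({
                "chip": int(chip),
                "device": int(device),
                "bus_id": bus_id,
                "aicore_pct": int(aicore),
                "mem_used_mb": int(mem_used),
                "mem_total_mb": int(mem_total),
            })
    return chips


def poll_npu_smi(interval_s=10.0, rounds=6):
    """Print per-chip AICore % and memory every interval_s seconds (needs npu-smi on PATH)."""
    for _ in range(rounds):
        out = subprocess.run(["npu-smi", "info"], capture_output=True,
                             text=True, check=True).stdout
        for c in parse_npu_smi(out):
            print(f"chip {c['chip']} ({c['bus_id']}): AICore {c['aicore_pct']}%, "
                  f"memory {c['mem_used_mb']}/{c['mem_total_mb']} MB")
        time.sleep(interval_s)
```

Running `poll_npu_smi()` in a second terminal while train.py is running should show one chip near 99% AICore, as in the table above, and the other idle.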

IV. Model Validation

Here the model was trained for only 10 epochs before validation. The resulting precision (AP) and recall (AR) are as follows:

python3 test.py --config ./configs/yolov8/yolov8s.yaml --device_target Ascend --weight ./runs/2025.10.23-14.48.02/weights/yolov8s-10_404.ckpt

2025-10-23 16:46:18,824 [INFO] parse_args:

2025-10-23 16:46:18,824 [INFO] task detect

2025-10-23 16:46:18,824 [INFO] device_target Ascend

2025-10-23 16:46:18,824 [INFO] ms_mode 0

2025-10-23 16:46:18,824 [INFO] ms_amp_level O0

2025-10-23 16:46:18,824 [INFO] ms_enable_graph_kernel False

2025-10-23 16:46:18,824 [INFO] precision_mode None

2025-10-23 16:46:18,824 [INFO] weight ./runs/2025.10.23-14.48.02/weights/yolov8s-10_404.ckpt

2025-10-23 16:46:18,824 [INFO] per_batch_size 16

2025-10-23 16:46:18,824 [INFO] img_size 1024

2025-10-23 16:46:18,824 [INFO] single_cls False

2025-10-23 16:46:18,824 [INFO] rect False

2025-10-23 16:46:18,824 [INFO] exec_nms True

2025-10-23 16:46:18,824 [INFO] nms_time_limit 60.0

2025-10-23 16:46:18,824 [INFO] conf_thres 0.001

2025-10-23 16:46:18,824 [INFO] iou_thres 0.7

2025-10-23 16:46:18,824 [INFO] conf_free True

2025-10-23 16:46:18,824 [INFO] seed 2

2025-10-23 16:46:18,824 [INFO] log_level INFO

2025-10-23 16:46:18,824 [INFO] save_dir ./runs_test/2025.10.23-16.46.18

2025-10-23 16:46:18,824 [INFO] enable_modelarts False

2025-10-23 16:46:18,824 [INFO] data_url

2025-10-23 16:46:18,824 [INFO] ckpt_url

2025-10-23 16:46:18,824 [INFO] train_url

2025-10-23 16:46:18,824 [INFO] data_dir /cache/data/

2025-10-23 16:46:18,824 [INFO] is_parallel False

2025-10-23 16:46:18,824 [INFO] ckpt_dir /cache/pretrain_ckpt/

2025-10-23 16:46:18,824 [INFO] data.dataset_name result

2025-10-23 16:46:18,824 [INFO] data.train_set /root/workspace/mindyolo/visdrone_COCO_format/train.txt

2025-10-23 16:46:18,824 [INFO] data.val_set /root/workspace/mindyolo/visdrone_COCO_format/val.txt

2025-10-23 16:46:18,824 [INFO] data.test_set /root/workspace/mindyolo/visdrone_COCO_format/val.txt

2025-10-23 16:46:18,824 [INFO] data.nc 12

2025-10-23 16:46:18,824 [INFO] data.names ['ignored regions', 'pedestrian', 'people', 'bicycle', 'car', 'van', 'truck', 'tricycle', 'awning-tricycle', 'bus', 'motor', 'others']

2025-10-23 16:46:18,824 [INFO] train_transforms.stage_epochs [5, 5]

2025-10-23 16:46:18,824 [INFO] train_transforms.trans_list [[{'func_name': 'mosaic', 'prob': 1.0}, {'func_name': 'resample_segments'}, {'func_name': 'random_perspective', 'prob': 1.0, 'degrees': 0.0, 'translate': 0.1, 'scale': 0.5, 'shear': 0.0}, {'func_name': 'albumentations'}, {'func_name': 'hsv_augment', 'prob': 1.0, 'hgain': 0.015, 'sgain': 0.7, 'vgain': 0.4}, {'func_name': 'fliplr', 'prob': 0.5}, {'func_name': 'label_norm', 'xyxy2xywh_': True}, {'func_name': 'label_pad', 'padding_size': 160, 'padding_value': -1}, {'func_name': 'image_norm', 'scale': 255.0}, {'func_name': 'image_transpose', 'bgr2rgb': True, 'hwc2chw': True}], [{'func_name': 'letterbox', 'scaleup': True}, {'func_name': 'resample_segments'}, {'func_name': 'random_perspective', 'prob': 1.0, 'degrees': 0.0, 'translate': 0.1, 'scale': 0.5, 'shear': 0.0}, {'func_name': 'albumentations'}, {'func_name': 'hsv_augment', 'prob': 1.0, 'hgain': 0.015, 'sgain': 0.7, 'vgain': 0.4}, {'func_name': 'fliplr', 'prob': 0.5}, {'func_name': 'label_norm', 'xyxy2xywh_': True}, {'func_name': 'label_pad', 'padding_size': 160, 'padding_value': -1}, {'func_name': 'image_norm', 'scale': 255.0}, {'func_name': 'image_transpose', 'bgr2rgb': True, 'hwc2chw': True}]]

2025-10-23 16:46:18,824 [INFO] data.test_transforms [{'func_name': 'letterbox', 'scaleup': False, 'only_image': True}, {'func_name': 'image_norm', 'scale': 255.0}, {'func_name': 'image_transpose', 'bgr2rgb': True, 'hwc2chw': True}]

2025-10-23 16:46:18,824 [INFO] data.num_parallel_workers 8

2025-10-23 16:46:18,824 [INFO] optimizer.optimizer momentum

2025-10-23 16:46:18,824 [INFO] optimizer.lr_init 0.01

2025-10-23 16:46:18,824 [INFO] optimizer.momentum 0.937

2025-10-23 16:46:18,824 [INFO] optimizer.nesterov True

2025-10-23 16:46:18,824 [INFO] optimizer.loss_scale 1.0

2025-10-23 16:46:18,824 [INFO] optimizer.warmup_epochs 3

2025-10-23 16:46:18,824 [INFO] optimizer.warmup_momentum 0.8

2025-10-23 16:46:18,824 [INFO] optimizer.warmup_bias_lr 0.1

2025-10-23 16:46:18,824 [INFO] optimizer.min_warmup_step 1000

2025-10-23 16:46:18,824 [INFO] optimizer.group_param yolov8

2025-10-23 16:46:18,824 [INFO] optimizer.gp_weight_decay 0.0005

2025-10-23 16:46:18,824 [INFO] optimizer.start_factor 1.0

2025-10-23 16:46:18,824 [INFO] optimizer.end_factor 0.01

2025-10-23 16:46:18,824 [INFO] loss.name YOLOv8Loss

2025-10-23 16:46:18,824 [INFO] loss.box 7.5

2025-10-23 16:46:18,824 [INFO] loss.cls 0.5

2025-10-23 16:46:18,824 [INFO] loss.dfl 1.5

2025-10-23 16:46:18,824 [INFO] loss.reg_max 16

2025-10-23 16:46:18,824 [INFO] epochs 10

2025-10-23 16:46:18,824 [INFO] sync_bn True

2025-10-23 16:46:18,824 [INFO] anchor_base False

2025-10-23 16:46:18,824 [INFO] opencv_threads_num 0

2025-10-23 16:46:18,824 [INFO] network.model_name yolov8

2025-10-23 16:46:18,824 [INFO] network.nc 80

2025-10-23 16:46:18,824 [INFO] network.reg_max 16

2025-10-23 16:46:18,824 [INFO] network.stride [8, 16, 32]

2025-10-23 16:46:18,824 [INFO] network.backbone [[-1, 1, 'ConvNormAct', [64, 3, 2]], [-1, 1, 'ConvNormAct', [128, 3, 2]], [-1, 3, 'C2f', [128, True]], [-1, 1, 'ConvNormAct', [256, 3, 2]], [-1, 6, 'C2f', [256, True]], [-1, 1, 'ConvNormAct', [512, 3, 2]], [-1, 6, 'C2f', [512, True]], [-1, 1, 'ConvNormAct', [1024, 3, 2]], [-1, 3, 'C2f', [1024, True]], [-1, 1, 'SPPF', [1024, 5]]]

2025-10-23 16:46:18,824 [INFO] network.head [[-1, 1, 'Upsample', ['None', 2, 'nearest']], [[-1, 6], 1, 'Concat', [1]], [-1, 3, 'C2f', [512]], [-1, 1, 'Upsample', ['None', 2, 'nearest']], [[-1, 4], 1, 'Concat', [1]], [-1, 3, 'C2f', [256]], [-1, 1, 'ConvNormAct', [256, 3, 2]], [[-1, 12], 1, 'Concat', [1]], [-1, 3, 'C2f', [512]], [-1, 1, 'ConvNormAct', [512, 3, 2]], [[-1, 9], 1, 'Concat', [1]], [-1, 3, 'C2f', [1024]], [[15, 18, 21], 1, 'YOLOv8Head', ['nc', 'reg_max', 'stride']]]

2025-10-23 16:46:18,824 [INFO] network.depth_multiple 0.33

2025-10-23 16:46:18,824 [INFO] network.width_multiple 0.5

2025-10-23 16:46:18,824 [INFO] network.max_channels 1024

2025-10-23 16:46:18,824 [INFO] overflow_still_update False

2025-10-23 16:46:18,824 [INFO] config ./configs/yolov8/yolov8s.yaml

2025-10-23 16:46:18,824 [INFO] rank 0

2025-10-23 16:46:18,824 [INFO] rank_size 1

2025-10-23 16:46:18,824 [INFO]

2025-10-23 16:46:18,898 [WARNING] Parse Model, args: nearest, keep str type

2025-10-23 16:46:18,909 [WARNING] Parse Model, args: nearest, keep str type

2025-10-23 16:46:18,984 [INFO] number of network params, total: 11.160279M, trainable: 11.140228M

[WARNING] GE_ADPT(540183,7efcd8e26740,python3):2025-10-23-16:46:22.493.658 [mindspore/ops/kernel/ascend/acl_ir/op_api_exec.cc:169] GetAscendDefaultCustomPath] Checking whether the so exists or if permission to access it is available: /usr/local/Ascend/ascend-toolkit/latest/opp/vendors/customize_vision/op_api/lib/libcust_opapi.so

2025-10-23 16:46:23,434 [INFO] Load checkpoint from [./runs/2025.10.23-14.48.02/weights/yolov8s-10_404.ckpt] success.

2025-10-23 16:46:23,437 [INFO] No dataset cache available, caching now...

Scanning '/root/workspace/mindyolo/visdrone_COCO_format/val.cache' images and labels... 548 found, 0 missing, 0 empty, 0 corrupted: 100%|█████████████████████████████████████████████████████████████████████████████| 548/548 [00:00<00:00, 3754.44it/s]

2025-10-23 16:46:23,595 [INFO] New cache created: /root/workspace/mindyolo/visdrone_COCO_format/val.cache.npy

2025-10-23 16:46:23,595 [INFO] Dataset caching success.

2025-10-23 16:46:23,597 [INFO] Dataloader num parallel workers: [8]

2025-10-23 16:46:23,607 [WARNING] unable to load fast_coco_eval api, use normal one instead

Warning: tiling offset out of range, index: 32

..2025-10-23 16:46:55,297 [INFO] Sample 35/1, time cost: 30512.14 ms.

2025-10-23 16:46:57,108 [INFO] Sample 35/2, time cost: 1722.38 ms.

2025-10-23 16:46:58,628 [INFO] Sample 35/3, time cost: 1420.95 ms.

2025-10-23 16:47:00,538 [INFO] Sample 35/4, time cost: 1809.91 ms.

2025-10-23 16:47:02,502 [INFO] Sample 35/5, time cost: 1865.30 ms.

2025-10-23 16:47:04,321 [INFO] Sample 35/6, time cost: 1718.46 ms.

2025-10-23 16:47:06,724 [INFO] Sample 35/7, time cost: 2303.35 ms.

2025-10-23 16:47:08,940 [INFO] Sample 35/8, time cost: 2117.25 ms.

2025-10-23 16:47:11,018 [INFO] Sample 35/9, time cost: 1978.46 ms.

2025-10-23 16:47:13,101 [INFO] Sample 35/10, time cost: 1982.41 ms.

2025-10-23 16:47:14,871 [INFO] Sample 35/11, time cost: 1671.05 ms.

2025-10-23 16:47:17,112 [INFO] Sample 35/12, time cost: 2140.79 ms.

2025-10-23 16:47:19,142 [INFO] Sample 35/13, time cost: 1930.53 ms.

2025-10-23 16:47:20,984 [INFO] Sample 35/14, time cost: 1741.35 ms.

2025-10-23 16:47:23,393 [INFO] Sample 35/15, time cost: 2307.50 ms.

2025-10-23 16:47:25,557 [INFO] Sample 35/16, time cost: 2060.89 ms.

2025-10-23 16:47:27,324 [INFO] Sample 35/17, time cost: 1664.00 ms.

2025-10-23 16:47:29,254 [INFO] Sample 35/18, time cost: 1824.31 ms.

2025-10-23 16:47:31,281 [INFO] Sample 35/19, time cost: 1921.78 ms.

2025-10-23 16:47:33,331 [INFO] Sample 35/20, time cost: 1942.85 ms.

2025-10-23 16:47:35,806 [INFO] Sample 35/21, time cost: 2368.87 ms.

2025-10-23 16:47:38,165 [INFO] Sample 35/22, time cost: 2255.00 ms.

2025-10-23 16:47:40,453 [INFO] Sample 35/23, time cost: 2182.96 ms.

2025-10-23 16:47:42,588 [INFO] Sample 35/24, time cost: 2029.14 ms.

2025-10-23 16:47:44,490 [INFO] Sample 35/25, time cost: 1796.02 ms.

2025-10-23 16:47:46,804 [INFO] Sample 35/26, time cost: 2207.91 ms.

2025-10-23 16:47:49,181 [INFO] Sample 35/27, time cost: 2270.69 ms.

2025-10-23 16:47:50,926 [INFO] Sample 35/28, time cost: 1638.70 ms.

2025-10-23 16:47:53,079 [INFO] Sample 35/29, time cost: 2046.37 ms.

2025-10-23 16:47:55,061 [INFO] Sample 35/30, time cost: 1875.28 ms.

2025-10-23 16:47:57,140 [INFO] Sample 35/31, time cost: 1972.00 ms.

2025-10-23 16:47:59,895 [INFO] Sample 35/32, time cost: 2647.24 ms.

2025-10-23 16:48:02,196 [INFO] Sample 35/33, time cost: 2191.50 ms.

2025-10-23 16:48:04,739 [INFO] Sample 35/34, time cost: 2434.77 ms.

..2025-10-23 16:48:20,509 [INFO] Sample 35/35, time cost: 15723.18 ms.

2025-10-23 16:48:20,509 [INFO] loading annotations into memory...

2025-10-23 16:48:20,639 [INFO] Done (t=0.13s)

2025-10-23 16:48:20,639 [INFO] creating index...

2025-10-23 16:48:20,650 [INFO] index created!

2025-10-23 16:48:20,650 [INFO] Loading and preparing results...

2025-10-23 16:48:21,106 [INFO] DONE (t=0.46s)

2025-10-23 16:48:21,106 [INFO] creating index...

2025-10-23 16:48:21,134 [INFO] index created!

2025-10-23 16:48:21,135 [INFO] Running per image evaluation...

2025-10-23 16:48:21,135 [INFO] Evaluate annotation type *bbox*

2025-10-23 16:48:31,087 [INFO] DONE (t=9.95s).

2025-10-23 16:48:31,087 [INFO] Accumulating evaluation results...

2025-10-23 16:48:31,996 [INFO] DONE (t=0.91s).

2025-10-23 16:48:31,996 [INFO] Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.019

2025-10-23 16:48:31,996 [INFO] Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.036

2025-10-23 16:48:31,996 [INFO] Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=100 ] = 0.019

2025-10-23 16:48:31,996 [INFO] Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.016

2025-10-23 16:48:31,997 [INFO] Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.024

2025-10-23 16:48:31,997 [INFO] Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.056

2025-10-23 16:48:31,997 [INFO] Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 1 ] = 0.009

2025-10-23 16:48:31,997 [INFO] Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 10 ] = 0.049

2025-10-23 16:48:31,997 [INFO] Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.076

2025-10-23 16:48:31,997 [INFO] Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.057

2025-10-23 16:48:31,997 [INFO] Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.106

2025-10-23 16:48:31,997 [INFO] Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.161

2025-10-23 16:48:31,997 [INFO] Speed: 99.0/100.3/199.3 ms inference/NMS/total per 1024x1024 image at batch-size 16;

2025-10-23 16:48:31,997 [INFO] Testing completed, cost 133.18s.
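Two IoU settings shape the numbers above:

- The test run uses conf_thres 0.001 and iou_thres 0.7 for NMS. A very low confidence threshold keeps almost every candidate, so the precision/recall curve covers the full recall range.
- The headline "AP @[ IoU=0.50:0.95 ]" is the COCO convention: AP averaged over 10 IoU thresholds from 0.50 to 0.95 in steps of 0.05.

The sketch below illustrates both in plain Python. It is a single-class greedy NMS written for clarity, not MindYolo's implementation, which is vectorized and class-aware. The function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes in xyxy format."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: Sequence[Box], scores: Sequence[float],
        conf_thres: float = 0.001, iou_thres: float = 0.7) -> List[int]:
    """Greedy single-class NMS; returns indices of kept boxes, highest score first."""
    # Drop low-confidence candidates, then visit the rest from highest score down.
    candidates = [i for i, s in enumerate(scores) if s > conf_thres]
    candidates.sort(key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in candidates:
        # Keep a box only if it does not overlap any already-kept box by more than iou_thres.
        if all(iou(boxes[i], boxes[k]) <= iou_thres for k in keep):
            keep.append(i)
    return keep


# The 10 IoU thresholds averaged in "AP @[ IoU=0.50:0.95 ]".
COCO_IOU_THRESHOLDS = [round(0.5 + 0.05 * k, 2) for k in range(10)]
```

For example, two heavily overlapping boxes with IoU 0.82 are merged at iou_thres 0.7 but both survive at 0.9. This is why raising iou_thres tends to keep more duplicates in crowded VisDrone scenes.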

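Use predict.py to run the trained weights on an image and visualize the detections. Note that the checkpoint in this command, yolov8s-120_404.ckpt, comes from training for 120 epochs, not from the 10-epoch run above, so point --weight at your own checkpoint. Run it as follows: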

python3 examples/finetune_visdrone/predict.py --config ./configs/yolov8/yolov8s.yaml --weight=./runs/2025.10.23-14.48.02/weights/yolov8s-120_404.ckpt --image_path ./visdrone_COCO_format/val/images/000000000001.jpg

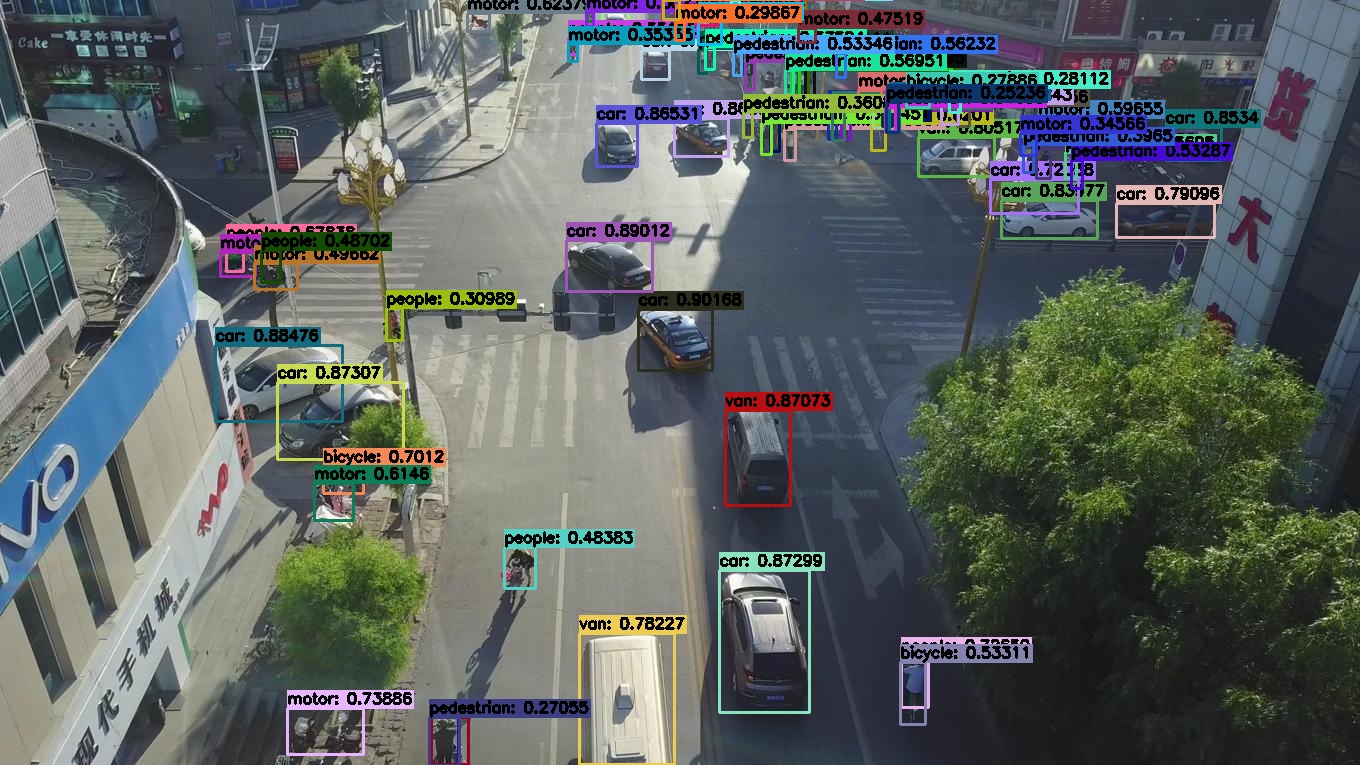

训练120个epoch后,模型的推理效果如下:

V. Summary

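This article walked through the complete workflow for training and validating a YOLOv8 model with the MindYolo framework on the Ascend 310P-based OrangePi AI Studio Pro. It covered environment preparation, dataset format conversion, training parameter configuration, and performance evaluation.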
