Hands-On Object Detection: M2Det


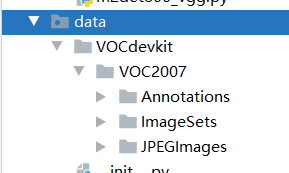

Create a VOCdevkit folder under the data folder and import your dataset in VOC format, as shown below:

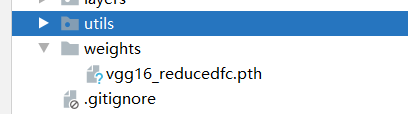

Download the weight file and place it in the weights folder (create the folder in the project root if it does not exist).
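Before moving on, it can help to confirm that the folders described above are in place. The following is a minimal sketch, not part of the M2Det repository: the helper name `missing_paths` is hypothetical, and the `VOC2007/Annotations`, `JPEGImages`, and `ImageSets/Main` sub-folders are assumed from the standard Pascal VOC layout rather than stated in this article.

```python
import os

# Standard Pascal VOC sub-folders (an assumption; adjust to your dataset).
VOC_SUBDIRS = ("Annotations", "JPEGImages", os.path.join("ImageSets", "Main"))


def missing_paths(project_root, year="2007"):
    """Return the expected folders that do not exist under project_root."""
    voc_root = os.path.join(project_root, "data", "VOCdevkit", "VOC" + year)
    expected = [os.path.join(voc_root, sub) for sub in VOC_SUBDIRS]
    expected.append(os.path.join(project_root, "weights"))
    return [path for path in expected if not os.path.isdir(path)]
```

Calling `missing_paths(".")` from the project root returns an empty list once everything is in place; any path it does return still needs to be created.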

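Model Introduction

M2Det is a single-shot object detection model proposed by Peking University and Alibaba DAMO Academy that uses multi-level features. On the MS-COCO benchmark, the single-scale and multi-scale versions of M2Det reach an AP of 41.0 and 44.2, respectively.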

该模型的特点:

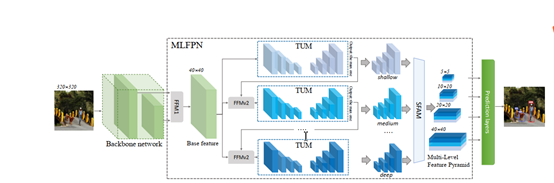

- 提出多级特征金字塔网络MLFPN。MLFPN的结构如下:

- 基于提出的MLFPN,结合SSD,提出一种新的Single-shot目标检测模型M2Det

Using the Model

Download the M2Det code from https://github.com/qijiezhao/M2Det.

Update the class list to match your dataset. Change:

VOC_CLASSES = ( '__background__', # always index 0
    'aeroplane', 'bicycle', 'bird', 'boat',
    'bottle', 'bus', 'car', 'cat', 'chair',
    'cow', 'diningtable', 'dog', 'horse',
    'motorbike', 'person', 'pottedplant',
    'sheep', 'sofa', 'train', 'tvmonitor')

to:

VOC_CLASSES = ( '__background__', # always index 0
    'aircraft', 'oiltank')
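If you are unsure which class names your annotations contain, you can derive the tuple from the XML files. This is a sketch under assumptions: the helpers `collect_class_names` and `build_voc_classes` are hypothetical, and the annotations are assumed to use the standard VOC `<object><name>` element.

```python
import glob
import os
import xml.etree.ElementTree as ET


def collect_class_names(annotation_dir):
    """Return the sorted class names found in <object><name> elements."""
    names = set()
    for xml_path in glob.glob(os.path.join(annotation_dir, "*.xml")):
        root = ET.parse(xml_path).getroot()
        for obj in root.iter("object"):
            name = obj.findtext("name")
            if name:
                names.add(name.strip())
    return sorted(names)


def build_voc_classes(annotation_dir):
    """Prepend the background entry, mirroring the VOC_CLASSES layout."""
    return ("__background__",) + tuple(collect_class_names(annotation_dir))
```

For the two-class dataset in this example, the resulting tuple has three entries, which is the value that num_classes in the configuration file must match (classes plus background). Keep the order fixed once training has started, since class indices depend on it.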

Choose a configuration file.

This example uses the configs/m2det512_vgg.py configuration file:

model = dict(
    type = 'm2det',
    input_size = 512,
    init_net = True,
    pretrained = 'weights/vgg16_reducedfc.pth',
    m2det_config = dict(
        backbone = 'vgg16',
        net_family = 'vgg', # vgg includes ['vgg16','vgg19'], res includes ['resnetxxx','resnextxxx']
        base_out = [22,34], # [22,34] for vgg, [2,4] or [3,4] for res families
        planes = 256,
        num_levels = 8,
        num_scales = 6,
        sfam = False,
        smooth = True,
        num_classes = 3, # change this: number of classes in your dataset + 1 (background)
        ),
    rgb_means = (104, 117, 123),
    p = 0.6,
    anchor_config = dict(
        step_pattern = [8, 16, 32, 64, 128, 256],
        size_pattern = [0.06, 0.15, 0.33, 0.51, 0.69, 0.87, 1.05],
        ),
    save_eposhs = 10,
    weights_save = 'weights/' # directory where weight files are saved
    )

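train_cfg = dict(
    cuda = True, # whether to use CUDA
    warmup = 5,
    per_batch_size = 2, # batch size; adjust to what your GPU can handle
    lr = [0.004, 0.002, 0.0004, 0.00004, 0.000004], # learning-rate schedule; the rate changes at the epochs listed in step_lr
    gamma = 0.1,
    end_lr = 1e-6,
    step_lr = dict(
        COCO = [90, 110, 130, 150, 160],
        VOC = [100, 150, 200, 250, 300], # unsolve
        ),
    print_epochs = 10, # log-printing interval (the checkpoint interval is save_eposhs in model)
    num_workers= 2, # number of data-loading workers; adjust to your CPU
    )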

test_cfg = dict(
    cuda = True,
    topk = 0,
    iou = 0.45,
    soft_nms = True,
    score_threshold = 0.1,
    keep_per_class = 50,
    save_folder = 'eval'
    )

loss = dict(overlap_thresh = 0.5,
            prior_for_matching = True,
            bkg_label = 0,
            neg_mining = True,
            neg_pos = 3,
            neg_overlap = 0.5,
            encode_target = False)

optimizer = dict(type='SGD', momentum=0.9, weight_decay=0.0005) # optimizer settings

# Modify dataset: this example uses the VOC2007 dataset, so delete the COCO entry and the VOC2012 sets.
dataset = dict(
    VOC = dict(
        train_sets = [('2007', 'trainval')],
        eval_sets = [('2007', 'test')],
        )
    )

import os
home = "" # home path; the default targets Linux. This example runs on Windows 10, so it is set to ""
VOCroot = os.path.join(home,"data/VOCdevkit/")
COCOroot = os.path.join(home,"data/coco/")
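To get a feel for what the anchor_config values mean, the sketch below derives per-scale feature-map sizes and box-size ranges from input_size, step_pattern, and size_pattern. It assumes the SSD-style convention in which each scale spans two consecutive size_pattern entries; this has not been checked against the repository's anchor code, and the function name `anchor_layout` is hypothetical.

```python
import math


def anchor_layout(input_size, step_pattern, size_pattern):
    """Derive feature-map sizes and (min, max) box sizes per scale.

    Assumes an SSD-style layout: scale i uses size_pattern[i] as its
    minimum ratio and size_pattern[i + 1] as its maximum ratio.
    """
    if len(size_pattern) != len(step_pattern) + 1:
        raise ValueError("size_pattern needs one more entry than step_pattern")
    feature_maps = [math.ceil(input_size / step) for step in step_pattern]
    min_sizes = [input_size * ratio for ratio in size_pattern[:-1]]
    max_sizes = [input_size * ratio for ratio in size_pattern[1:]]
    return feature_maps, min_sizes, max_sizes


feature_maps, min_sizes, max_sizes = anchor_layout(
    512,
    [8, 16, 32, 64, 128, 256],
    [0.06, 0.15, 0.33, 0.51, 0.69, 0.87, 1.05],
)
print(feature_maps)  # six scales, matching num_scales = 6
```

With the values from this configuration, the six strides give feature maps of 64, 32, 16, 8, 4, and 2 cells per side, so the number of entries in step_pattern lines up with num_scales.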

With pycocotools already installed, delete the pycocotools package bundled with the project.

Modify coco.py.

Change:

from utils.pycocotools.coco import COCO
from utils.pycocotools.cocoeval import COCOeval
from utils.pycocotools import mask as COCOmask

to:

from pycocotools.coco import COCO
from pycocotools.cocoeval import COCOeval
from pycocotools import mask as COCOmask
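Because the new imports only work when pycocotools is installed in your environment, a quick check before deleting the bundled copy can save a failed run. This is a small sketch using the standard library; the helper name `pycocotools_installed` is hypothetical.

```python
import importlib.util


def pycocotools_installed():
    """Return True if a top-level pycocotools package can be located."""
    return importlib.util.find_spec("pycocotools") is not None


if not pycocotools_installed():
    print("pycocotools not found; install it before removing the bundled copy")
```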

Next, replace the NMS dispatch code. Change:

from .nms.cpu_nms import cpu_nms, cpu_soft_nms
from .nms.gpu_nms import gpu_nms

# def nms(dets, thresh, force_cpu=False):
#     """Dispatch to either CPU or GPU NMS implementations."""
#     if dets.shape[0] == 0:
#         return []
#     if cfg.USE_GPU_NMS and not force_cpu:
#         return gpu_nms(dets, thresh, device_id=cfg.GPU_ID)
#     else:
#         return cpu_nms(dets, thresh)

def nms(dets, thresh, force_cpu=False):
    """Dispatch to either CPU or GPU NMS implementations."""
    if dets.shape[0] == 0:
        return []
    if force_cpu:
        return cpu_soft_nms(dets, thresh, method = 1)
        #return cpu_nms(dets, thresh)
    return gpu_nms(dets, thresh)

to:

from .nms.py_cpu_nms import py_cpu_nms

def nms(dets, thresh, force_cpu=False):
    """Dispatch to either CPU or GPU NMS implementations."""
    if dets.shape[0] == 0:
        return []
    if force_cpu:
        # Assumes your py_cpu_nms accepts a `method` argument; if it only takes (dets, thresh), drop it.
        return py_cpu_nms(dets, thresh, method = 1)
    return py_cpu_nms(dets, thresh)
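For readers unfamiliar with what the pure-Python NMS does, here is an illustrative greedy implementation. It is a sketch, not the repository's py_cpu_nms: it works on plain Python sequences rather than NumPy arrays, and the names `iou` and `greedy_nms` are chosen for this example only.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(dets, thresh):
    """Return indices of kept detections; each det is (x1, y1, x2, y2, score)."""
    # Visit detections from highest to lowest score.
    order = sorted(range(len(dets)), key=lambda i: dets[i][4], reverse=True)
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        # Drop every remaining box that overlaps the kept one too much.
        order = [i for i in order if iou(dets[best][:4], dets[i][:4]) <= thresh]
    return keep
```

With the `iou = 0.45` threshold from test_cfg, two boxes that overlap by more than 45% collapse to the higher-scoring one, while well-separated boxes are all kept.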

Set the default configuration file:

parser.add_argument('-c', '--config', default='configs/m2det512_vgg.py')

Set the dataset format:

parser.add_argument('-d', '--dataset', default='VOC', help='VOC or COCO dataset')

You can then start training.
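During training, the lr list and the step_lr milestones from the configuration file together determine the learning rate. The sketch below shows one plausible reading of that relationship: lr[i] is used until the epoch passes step_lr[i]. It is an assumption rather than the repository's exact logic, it ignores the warmup phase, and the function name `learning_rate` is hypothetical.

```python
def learning_rate(epoch, lrs, milestones):
    """Return the rate for `epoch`, assuming lrs[i] applies up to milestones[i]."""
    for rate, milestone in zip(lrs, milestones):
        if epoch <= milestone:
            return rate
    # Past the last milestone: stay at the final rate.
    return lrs[-1]


lrs = [0.004, 0.002, 0.0004, 0.00004, 0.000004]
voc_milestones = [100, 150, 200, 250, 300]
for epoch in (1, 120, 180, 260):
    print(epoch, learning_rate(epoch, lrs, voc_milestones))
```

Under this reading, a run that stops at epoch 30 (as in the checkpoint name used later) never leaves the first rate of 0.004.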

For testing, set the arguments as follows:

parser = argparse.ArgumentParser(description='M2Det Testing')
parser.add_argument('-c', '--config', default='configs/m2det512_vgg.py', type=str) # configuration file; must match the one used for training
parser.add_argument('-d', '--dataset', default='VOC', help='VOC or COCO version')
parser.add_argument('-m', '--trained_model', default='weights/M2Det_VOC_size512_netvgg16_epoch30.pth', type=str, help='Trained state_dict file path to open')
parser.add_argument('--test', action='store_true', help='to submit a test file')
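The --trained_model default above points at one specific checkpoint. If you would rather pick the most recent one automatically, a helper along these lines could work. It is a sketch that assumes checkpoint names end in `_epoch<N>.pth`, as in the file name shown above; `latest_checkpoint` is a hypothetical function, not part of the repository.

```python
import os
import re

# Matches names such as M2Det_VOC_size512_netvgg16_epoch30.pth
CHECKPOINT_RE = re.compile(r"_epoch(\d+)\.pth$")


def latest_checkpoint(weights_dir):
    """Return the path of the checkpoint with the highest epoch, or None."""
    best_epoch, best_path = -1, None
    for name in os.listdir(weights_dir):
        match = CHECKPOINT_RE.search(name)
        if match and int(match.group(1)) > best_epoch:
            best_epoch = int(match.group(1))
            best_path = os.path.join(weights_dir, name)
    return best_path
```

Files that do not follow the pattern, such as the pretrained vgg16_reducedfc.pth, are ignored.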

Change the XML path at line 282 of voc0712.py. Change:

annopath = os.path.join(
    rootpath,
    'Annotations',
    '{:s}.xml')

to:

annopath = rootpath+'/Annotations/{:s}.xml'
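The article does not say why this change is needed. A likely explanation is that, under Windows path rules, the template `'{:s}.xml'` has a colon as its second character, so `os.path.join` treats `{:` as a drive specifier and discards the components before it. The sketch below reproduces this with `ntpath` (the Windows implementation of `os.path`, importable on any platform); the `rootpath` value is a hypothetical example.

```python
import ntpath      # Windows path rules, usable on any platform
import posixpath   # POSIX path rules, for comparison

rootpath = "data/VOCdevkit/VOC2007"  # hypothetical value for illustration

# Windows rules: "{:" is parsed as a drive, so rootpath is dropped.
windows_join = ntpath.join(rootpath, "Annotations", "{:s}.xml")
# POSIX rules: the components are joined as expected.
posix_join = posixpath.join(rootpath, "Annotations", "{:s}.xml")
# Plain concatenation behaves the same on every platform.
concatenated = rootpath + "/Annotations/{:s}.xml"

print(repr(windows_join))
print(repr(posix_join))
print(concatenated.format("000001"))
```

On Windows the joined template collapses to just `'{:s}.xml'`, so the annotation files cannot be found, whereas the concatenated string keeps the full path.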

Test results:

Modify the default arguments in demo.py:

parser.add_argument('-c', '--config', default='configs/m2det512_vgg.py', type=str)
parser.add_argument('-f', '--directory', default='imgs/', help='the path to demo images')
parser.add_argument('-m', '--trained_model', default='weights/M2Det_VOC_size512_netvgg16_epoch30.pth', type=str, help='Trained state_dict file path to open')

Then put some test images in the imgs folder and run demo.py.

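Example code for this article: https://download.csdn.net/download/hhhhhhhhhhwwwwwwwwww/12428492

Source: wanghao.blog.csdn.net. Author: AI浩. Copyright belongs to the original author; please contact the author before reposting.

Original link: wanghao.blog.csdn.net/article/details/106160814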
