[Image Classification] ResNet from Scratch: Reproducing ResNet (Keras, TensorFlow 2.x)


Summary

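ResNet (Residual Neural Network) was proposed by four Chinese researchers at Microsoft Research: Kaiming He, Xiangyu Zhang, Shaoqing Ren and Jian Sun. By stacking residual units they successfully trained a 152-layer network, which won ILSVRC 2015 with a top-5 error rate of 3.57% while having fewer parameters than VGGNet. The improvement was substantial.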

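The key innovation of the model is residual learning. Shortcut connections are added to the network so that the original input is passed directly to later layers, as shown in the figure below: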

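In traditional convolutional or fully connected networks, some information is lost or degraded as it passes from layer to layer. These networks also suffer from vanishing or exploding gradients, which makes very deep networks hard to train. ResNet alleviates this problem to a degree. It routes the input around a stack of layers directly to that stack's output, which keeps the information intact. The stacked layers then only have to learn the difference between input and output (the residual), which makes the learning target simpler and easier. The figures below compare VGGNet and ResNet. The biggest difference in ResNet is its many bypass paths that connect the input directly to later layers. These paths are called shortcut connections or skip connections.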
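The idea of "learning only the difference" can be shown with a small example. Below is a minimal NumPy sketch, not taken from the original article, of a residual unit y = F(x) + x. The residual branch F is assumed to be two small fully connected layers with a ReLU between them. The names `residual_unit` and `plain_unit` and the toy sizes are illustrative assumptions. When the branch outputs zero, the residual unit reduces exactly to the identity mapping. A deep stack of such units can therefore fall back to passing its input through unchanged, whereas a plain stack would have to learn the identity explicitly.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


def residual_unit(x, w1, w2):
    """Hypothetical toy residual unit: y = F(x) + x with F(x) = w2 @ relu(w1 @ x)."""
    f = w2 @ relu(w1 @ x)  # residual branch F(x)
    return f + x           # identity shortcut adds the input back


def plain_unit(x, w1, w2):
    """The same two layers without the shortcut: y = F(x)."""
    return w2 @ relu(w1 @ x)


rng = np.random.default_rng(0)
x = rng.standard_normal(8)
zeros = np.zeros((8, 8))

# With an all-zero residual branch, 50 stacked residual units still return x ...
y_res = x
for _ in range(50):
    y_res = residual_unit(y_res, zeros, zeros)

# ... while 50 stacked plain units with the same weights collapse to zero.
y_plain = x
for _ in range(50):
    y_plain = plain_unit(y_plain, zeros, zeros)

print(np.allclose(y_res, x), np.allclose(y_plain, 0.0))  # prints: True True
```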

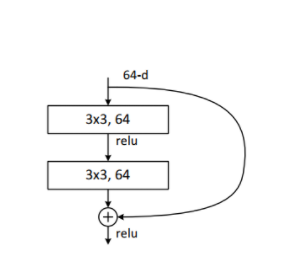

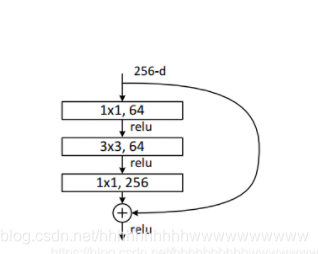

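The ResNet architecture uses two kinds of residual modules. The first chains two 3×3 convolutional layers. The second chains three convolutional layers of size 1×1, 3×3 and 1×1 (the bottleneck design). See the figure below: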
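A quick parameter count helps explain why the deeper models use the 1×1, 3×3, 1×1 bottleneck. This is an illustrative sketch: it counts only convolution weights and ignores biases and BatchNormalization, and the helper names are hypothetical. On a 256-channel feature map with a 64-channel middle width, a bottleneck has slightly fewer weights than a two-3×3 basic block on a 64-channel map. It has far fewer than a basic block running at the full 256 channels.

```python
def conv_params(k, c_in, c_out):
    """Weights of a k x k convolution (biases and BatchNormalization ignored)."""
    return k * k * c_in * c_out


def basic_block_params(c):
    # Two 3x3 convolutions: c -> c -> c
    return conv_params(3, c, c) + conv_params(3, c, c)


def bottleneck_params(c_out, width):
    # 1x1 reduce (c_out -> width), 3x3 (width -> width), 1x1 expand (width -> c_out)
    return (conv_params(1, c_out, width)
            + conv_params(3, width, width)
            + conv_params(1, width, c_out))


print(basic_block_params(64))      # prints: 73728
print(bottleneck_params(256, 64))  # prints: 69632
print(basic_block_params(256))     # prints: 1179648
```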

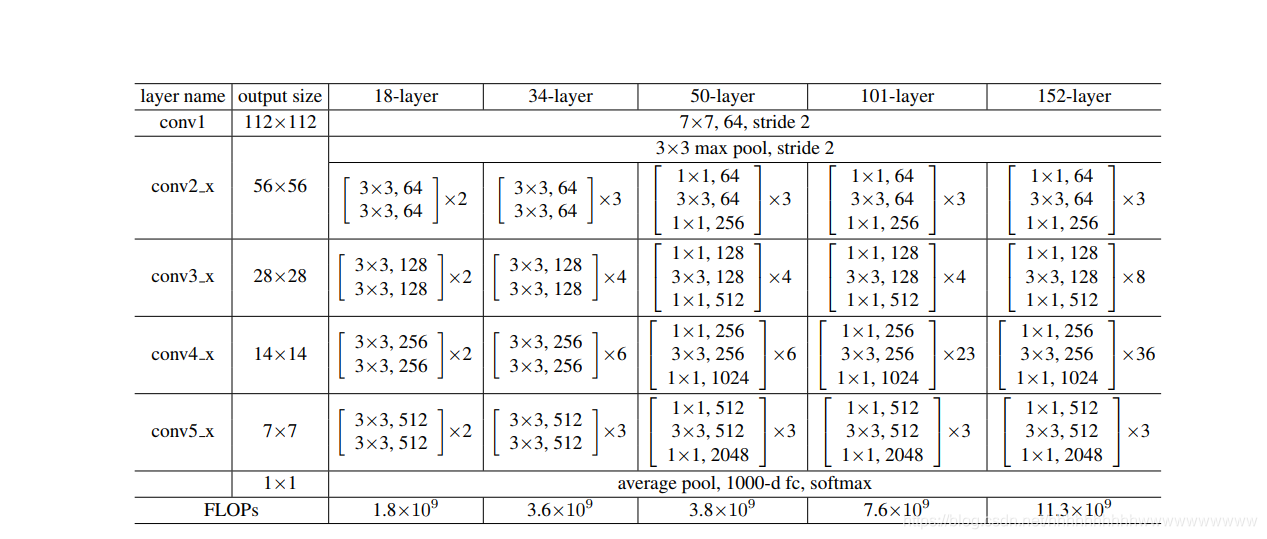

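ResNet comes in several depths. The most common are 18, 34, 50, 101 and 152 layers, and all of them are built by stacking the residual modules described above. The figure below shows the different ResNet models.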
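The number in each model's name follows from how many blocks are stacked in each of the four stages. The plain-Python sketch below is not part of the original code. It counts weighted layers from the per-stage block counts used by the model constructors later in this article. By the usual convention, it counts the 7×7 stem convolution, every convolution inside the residual blocks and the final fully connected layer. The 1×1 projection shortcuts are left out.

```python
# Blocks per stage and convolutions per block for each depth
CONFIGS = {
    18: ([2, 2, 2, 2], 2),    # basic block: two 3x3 convs per block
    34: ([3, 4, 6, 3], 2),
    50: ([3, 4, 6, 3], 3),    # bottleneck block: 1x1, 3x3, 1x1 convs per block
    101: ([3, 4, 23, 3], 3),
    152: ([3, 8, 36, 3], 3),
}


def resnet_depth(stage_blocks, convs_per_block):
    # 7x7 stem conv + convs inside all residual blocks + final fully connected layer
    return 1 + convs_per_block * sum(stage_blocks) + 1


for name, (blocks, convs) in CONFIGS.items():
    print(name, resnet_depth(blocks, convs))  # each depth equals its name
```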

Implementing the residual modules

The first residual module (BasicBlock)

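```python
import tensorflow as tf
from tensorflow.keras import layers, Sequential


# First residual module: two 3x3 convolutions (used by ResNet18/34)
class BasicBlock(layers.Layer):
    def __init__(self, filter_num, stride=1):
        super(BasicBlock, self).__init__()
        # Main path: 3x3 conv (carries the stride) -> BN -> ReLU -> 3x3 conv -> BN
        self.conv1 = layers.Conv2D(filter_num, (3, 3), strides=stride, padding='same')
        self.bn1 = layers.BatchNormalization()
        self.relu = layers.Activation('relu')
        self.conv2 = layers.Conv2D(filter_num, (3, 3), strides=1, padding='same')
        self.bn2 = layers.BatchNormalization()
        if stride != 1:
            # Projection shortcut: a strided 1x1 conv so the shortcut matches
            # the main path's spatial size and channel count
            self.downsample = Sequential()
            self.downsample.add(layers.Conv2D(filter_num, (1, 1), strides=stride))
        else:
            # Identity shortcut (assumes the input already has filter_num channels)
            self.downsample = lambda x: x

    def call(self, inputs, training=None):
        out = self.conv1(inputs)
        out = self.bn1(out, training=training)
        out = self.relu(out)
        out = self.conv2(out)
        out = self.bn2(out, training=training)
        identity = self.downsample(inputs)
        # Add the shortcut to the residual branch, then apply the final ReLU
        output = layers.add([out, identity])
        output = tf.nn.relu(output)
        return output
```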
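Why is a strided 1×1 convolution enough for the shortcut? With `padding='same'`, a strided Keras convolution produces ceil(H / stride) outputs along each spatial axis. The shortcut's 1×1 convolution uses the default `padding='valid'`, which gives floor((H - 1) / stride) + 1. The plain-Python sketch below (the helper names are hypothetical) checks that the two formulas always agree, so `layers.add` receives tensors with matching spatial shapes. The shortcut's `filter_num` output channels also match the main path.

```python
import math


def same_out(size, stride):
    # Output size of a strided conv with padding='same'
    return math.ceil(size / stride)


def valid_out(size, kernel, stride):
    # Output size of a conv with padding='valid'
    return (size - kernel) // stride + 1


# Main path: 3x3 conv with padding='same'; shortcut: 1x1 conv with padding='valid'
mismatches = [
    (h, s)
    for h in range(1, 257)
    for s in (1, 2, 3, 4)
    if same_out(h, s) != valid_out(h, 1, s)
]
print(mismatches)  # prints: []
```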

The second residual module (bottleneck Block)

```python
import tensorflow as tf
from tensorflow.keras import layers, Sequential


# Second residual module: 1x1 -> 3x3 -> 1x1 bottleneck (used by ResNet50/101/152)
class Block(layers.Layer):
    def __init__(self, filters, downsample=False, stride=1):
        super(Block, self).__init__()
        self.downsample = downsample
        # 1x1 conv reduces the channels to `filters` (and carries the stride)
        self.conv1 = layers.Conv2D(filters, (1, 1), strides=stride, padding='same')
        self.bn1 = layers.BatchNormalization()
        self.relu = layers.Activation('relu')
        # 3x3 conv at the reduced width
        self.conv2 = layers.Conv2D(filters, (3, 3), strides=1, padding='same')
        self.bn2 = layers.BatchNormalization()
        # 1x1 conv expands the channels to 4 * filters
        self.conv3 = layers.Conv2D(4 * filters, (1, 1), strides=1, padding='same')
        self.bn3 = layers.BatchNormalization()
        if self.downsample:
            # Projection shortcut: 1x1 conv + BN to match spatial size and channels
            self.shortcut = Sequential()
            self.shortcut.add(layers.Conv2D(4 * filters, (1, 1), strides=stride))
            self.shortcut.add(layers.BatchNormalization(axis=3))

    def call(self, inputs, training=None):
        out = self.conv1(inputs)
        out = self.bn1(out, training=training)
        out = self.relu(out)
        out = self.conv2(out)
        out = self.bn2(out, training=training)
        out = self.relu(out)
        out = self.conv3(out)
        out = self.bn3(out, training=training)
        if self.downsample:
            shortcut = self.shortcut(inputs, training=training)
        else:
            # Identity shortcut (input must already have 4 * filters channels)
            shortcut = inputs
        output = layers.add([out, shortcut])
        output = tf.nn.relu(output)
        return output
```
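The bottleneck block outputs `4 * filters` channels. Its shortcut can therefore be a plain identity only when the input already has that many channels and the stride is 1. The plain-Python sketch below (the helper is hypothetical) traces the channel counts through the four stages used later for ResNet50/101/152: `filters` = 64, 128, 256, 512 after a 64-channel stem. The first block of every stage needs the projection shortcut, including the stride-1 first stage. The remaining blocks in each stage can use the identity.

```python
def trace_bottleneck_stages(stage_filters=(64, 128, 256, 512), stem_channels=64, expansion=4):
    """Return (in_channels, out_channels, needs_projection) for the first block of each stage."""
    in_ch = stem_channels
    rows = []
    for filters in stage_filters:
        out_ch = expansion * filters
        # A projection is needed whenever the channel counts differ
        # (stages 2-4 additionally change the spatial size via stride 2)
        rows.append((in_ch, out_ch, in_ch != out_ch))
        # Later blocks in this stage, and the next stage, see out_ch input channels
        in_ch = out_ch
    return rows


for row in trace_bottleneck_stages():
    print(row)
```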

ResNet18, ResNet34

```python
import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers, Sequential


# First residual module: two 3x3 convolutions
class BasicBlock(layers.Layer):
    def __init__(self, filter_num, stride=1):
        super(BasicBlock, self).__init__()
        self.conv1 = layers.Conv2D(filter_num, (3, 3), strides=stride, padding='same')
        self.bn1 = layers.BatchNormalization()
        self.relu = layers.Activation('relu')
        self.conv2 = layers.Conv2D(filter_num, (3, 3), strides=1, padding='same')
        self.bn2 = layers.BatchNormalization()
        if stride != 1:
            # Projection shortcut: strided 1x1 conv
            self.downsample = Sequential()
            self.downsample.add(layers.Conv2D(filter_num, (1, 1), strides=stride))
        else:
            # Identity shortcut
            self.downsample = lambda x: x

    def call(self, inputs, training=None):
        out = self.conv1(inputs)
        out = self.bn1(out, training=training)
        out = self.relu(out)
        out = self.conv2(out)
        out = self.bn2(out, training=training)
        identity = self.downsample(inputs)
        output = layers.add([out, identity])
        output = tf.nn.relu(output)
        return output


class ResNet(keras.Model):
    def __init__(self, layer_dims, num_classes=10):
        super(ResNet, self).__init__()
        # Stem: zero-pad, 7x7/2 conv -> BN -> ReLU -> 3x3/2 max pool
        self.padding = keras.layers.ZeroPadding2D((3, 3))
        self.stem = Sequential([
            layers.Conv2D(64, (7, 7), strides=(2, 2)),
            layers.BatchNormalization(),
            layers.Activation('relu'),
            layers.MaxPool2D(pool_size=(3, 3), strides=(2, 2), padding='same')
        ])
        # Four stages of residual blocks; stages 2-4 halve the spatial size
        self.layer1 = self.build_resblock(64, layer_dims[0])
        self.layer2 = self.build_resblock(128, layer_dims[1], stride=2)
        self.layer3 = self.build_resblock(256, layer_dims[2], stride=2)
        self.layer4 = self.build_resblock(512, layer_dims[3], stride=2)
        # Global average pooling: [b, h, w, c] -> [b, c]
        self.avgpool = layers.GlobalAveragePooling2D()
        # Fully connected classifier
        self.fc = layers.Dense(num_classes, activation=tf.keras.activations.softmax)

    def call(self, inputs, training=None):
        x = self.padding(inputs)
        x = self.stem(x, training=training)
        x = self.layer1(x, training=training)
        x = self.layer2(x, training=training)
        x = self.layer3(x, training=training)
        x = self.layer4(x, training=training)
        x = self.avgpool(x)  # [b, c]
        x = self.fc(x)
        return x

    def build_resblock(self, filter_num, blocks, stride=1):
        res_blocks = Sequential()
        # Only the first block of a stage may downsample
        res_blocks.add(BasicBlock(filter_num, stride))
        for _ in range(1, blocks):
            res_blocks.add(BasicBlock(filter_num, stride=1))
        return res_blocks


def ResNet18(num_classes=10):
    return ResNet([2, 2, 2, 2], num_classes=num_classes)


def ResNet34(num_classes=10):
    return ResNet([3, 4, 6, 3], num_classes=num_classes)


model = ResNet34(num_classes=1000)
model.build(input_shape=(1, 224, 224, 3))
model.summary()  # print the per-layer parameter counts
```
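The stem and the stride-2 stages shrink a 224×224 input step by step. The plain-Python sketch below traces the spatial sizes implied by the layer settings above, as a sanity check that does not depend on TensorFlow; the helper is hypothetical. The traced sizes 112, 56, 28, 14 and 7 match the output sizes in the ResNet paper's architecture table. `GlobalAveragePooling2D` then averages the final 7×7 map.

```python
import math


def trace_spatial(size=224):
    """Hypothetical helper: feature-map height/width after each part of the model above."""
    shapes = {"input": size}
    size += 2 * 3                    # ZeroPadding2D((3, 3)): 224 -> 230
    size = (size - 7) // 2 + 1       # 7x7 conv, stride 2, padding='valid'
    shapes["stem conv"] = size
    size = math.ceil(size / 2)       # 3x3 max pool, stride 2, padding='same'
    shapes["layer1"] = size          # layer1 uses stride 1
    for name in ("layer2", "layer3", "layer4"):
        size = math.ceil(size / 2)   # first block of each stage uses stride 2
        shapes[name] = size
    return shapes


print(trace_spatial())
# prints: {'input': 224, 'stem conv': 112, 'layer1': 56, 'layer2': 28, 'layer3': 14, 'layer4': 7}
```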

ResNet50, ResNet101, ResNet152

```python
import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers, Sequential


# Bottleneck residual module: 1x1 -> 3x3 -> 1x1
class Block(layers.Layer):
    def __init__(self, filters, downsample=False, stride=1):
        super(Block, self).__init__()
        self.downsample = downsample
        self.conv1 = layers.Conv2D(filters, (1, 1), strides=stride, padding='same')
        self.bn1 = layers.BatchNormalization()
        self.relu = layers.Activation('relu')
        self.conv2 = layers.Conv2D(filters, (3, 3), strides=1, padding='same')
        self.bn2 = layers.BatchNormalization()
        self.conv3 = layers.Conv2D(4 * filters, (1, 1), strides=1, padding='same')
        self.bn3 = layers.BatchNormalization()
        if self.downsample:
            # Projection shortcut: 1x1 conv + BN
            self.shortcut = Sequential()
            self.shortcut.add(layers.Conv2D(4 * filters, (1, 1), strides=stride))
            self.shortcut.add(layers.BatchNormalization(axis=3))

    def call(self, inputs, training=None):
        out = self.conv1(inputs)
        out = self.bn1(out, training=training)
        out = self.relu(out)
        out = self.conv2(out)
        out = self.bn2(out, training=training)
        out = self.relu(out)
        out = self.conv3(out)
        out = self.bn3(out, training=training)
        if self.downsample:
            shortcut = self.shortcut(inputs, training=training)
        else:
            shortcut = inputs
        output = layers.add([out, shortcut])
        output = tf.nn.relu(output)
        return output


class ResNet(keras.Model):
    def __init__(self, layer_dims, num_classes=10):
        super(ResNet, self).__init__()
        # Stem: zero-pad, 7x7/2 conv -> BN -> ReLU -> 3x3/2 max pool
        self.padding = keras.layers.ZeroPadding2D((3, 3))
        self.stem = Sequential([
            layers.Conv2D(64, (7, 7), strides=(2, 2)),
            layers.BatchNormalization(),
            layers.Activation('relu'),
            layers.MaxPool2D(pool_size=(3, 3), strides=(2, 2), padding='same')
        ])
        # Four stages of bottleneck blocks
        self.layer1 = self.build_resblock(64, layer_dims[0], stride=1)
        self.layer2 = self.build_resblock(128, layer_dims[1], stride=2)
        self.layer3 = self.build_resblock(256, layer_dims[2], stride=2)
        self.layer4 = self.build_resblock(512, layer_dims[3], stride=2)
        # Global average pooling: [b, h, w, c] -> [b, c]
        self.avgpool = layers.GlobalAveragePooling2D()
        # Fully connected classifier
        self.fc = layers.Dense(num_classes, activation=tf.keras.activations.softmax)

    def call(self, inputs, training=None):
        x = self.padding(inputs)
        x = self.stem(x, training=training)
        x = self.layer1(x, training=training)
        x = self.layer2(x, training=training)
        x = self.layer3(x, training=training)
        x = self.layer4(x, training=training)
        x = self.avgpool(x)  # [b, c]
        x = self.fc(x)
        return x

    def build_resblock(self, filter_num, blocks, stride=1):
        res_blocks = Sequential()
        # The first block of every stage changes the channel count
        # (64 -> 256, 256 -> 512, ...), so it always needs a projection shortcut.
        # The original `if` guard was always true here, and had it been false
        # the stage would have silently lost its first block.
        res_blocks.add(Block(filter_num, downsample=True, stride=stride))
        for _ in range(1, blocks):
            res_blocks.add(Block(filter_num, stride=1))
        return res_blocks


def ResNet50(num_classes=10):
    return ResNet([3, 4, 6, 3], num_classes=num_classes)


def ResNet101(num_classes=10):
    return ResNet([3, 4, 23, 3], num_classes=num_classes)


def ResNet152(num_classes=10):
    return ResNet([3, 8, 36, 3], num_classes=num_classes)


model = ResNet50(num_classes=1000)
model.build(input_shape=(1, 224, 224, 3))
model.summary()  # print the per-layer parameter counts
```
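The two families also differ in the classifier size that `model.summary()` reports. After `GlobalAveragePooling2D`, the feature vector has 512 channels for ResNet18/34, because the last `BasicBlock` stage uses `filter_num=512`. For ResNet50/101/152 it has 4 × 512 = 2048 channels. The small arithmetic sketch below computes the resulting `Dense` parameter count for 1000 classes. The helper name is hypothetical.

```python
def dense_params(in_features, units):
    # A Dense layer has an in_features x units kernel plus one bias per unit
    return in_features * units + units


print(dense_params(512, 1000))      # ResNet18/34 classifier, prints: 513000
print(dense_params(4 * 512, 1000))  # ResNet50/101/152 classifier, prints: 2049000
```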

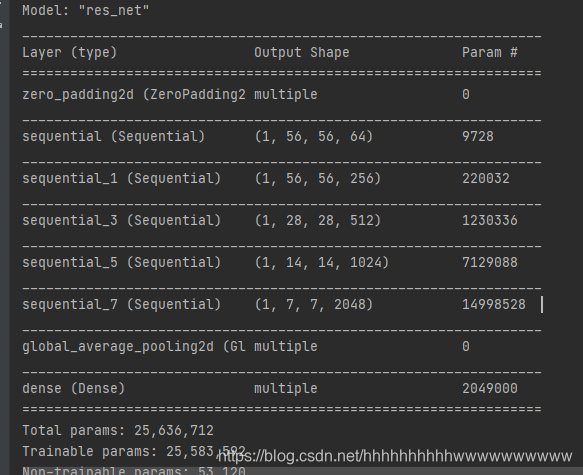

Run results:

Source: wanghao.blog.csdn.net, author: AI浩. Copyright belongs to the original author; please contact the author before reposting.

Original link: wanghao.blog.csdn.net/article/details/117420186
