Reproducing ResNeXt50 and ResNet50 in PyTorch


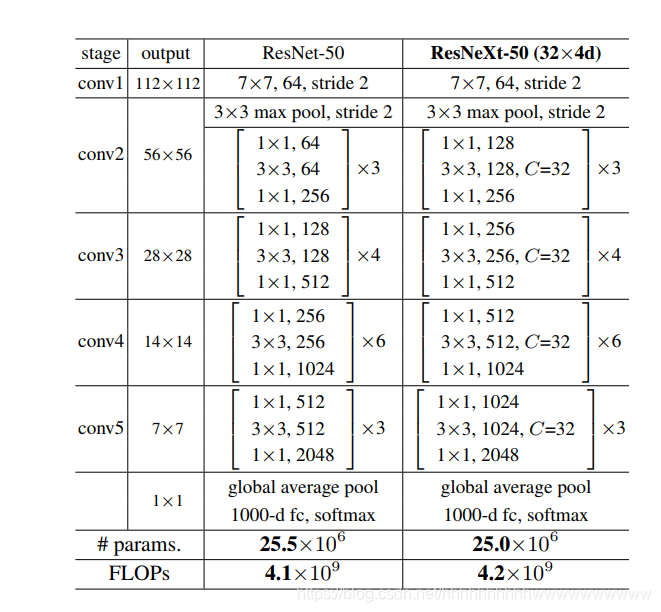

ResNet50 and ResNeXt50 network diagrams
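Both networks implemented below share the same stem (7x7/2 conv plus 3x3/2 max pool) and the same per-stage strides (1, 2, 2, 2), so their feature maps shrink identically. As a quick sanity check on the final `AvgPool2d(7)` layers, the minimal pure-Python sketch below applies the standard conv/pool output-size formula, out = floor((in + 2p - k) / s) + 1, to a square input. The helpers `out_size` and `trace_resnet_shapes` are illustrative names, not part of the original code, and the trace assumes the stride-2 downsampling happens in a 3x3 conv with padding 1 (a 1x1 conv with stride 2 and no padding gives the same sizes here).

```python
def out_size(size, kernel, stride, padding):
    """Output length of a conv/pool layer along one spatial axis."""
    return (size + 2 * padding - kernel) // stride + 1


def trace_resnet_shapes(size=224):
    """Return (layer name, spatial size) pairs for the ResNet/ResNeXt-50 layout."""
    trace = []
    size = out_size(size, kernel=7, stride=2, padding=3)   # 7x7/2 stem conv
    trace.append(("stem conv", size))
    size = out_size(size, kernel=3, stride=2, padding=1)   # 3x3/2 max pool
    trace.append(("max pool", size))
    # Stage 1 keeps the resolution; the first block of stages 2-4 uses stride 2
    for stage, stride in enumerate([1, 2, 2, 2], start=1):
        size = out_size(size, kernel=3, stride=stride, padding=1)
        trace.append((f"stage {stage}", size))
    size = out_size(size, kernel=7, stride=1, padding=0)   # AvgPool2d(7, stride=1)
    trace.append(("avg pool", size))
    return trace


for name, size in trace_resnet_shapes():
    print(f"{name}: {size}x{size}")
```

With a 224x224 input the trace ends at 1x1 (112, 56, 56, 28, 14, 7, 1). With a 256x256 input it ends at 2x2, which is why an `AvgPool2d(7)` head followed by `Linear(2048, ...)` only fits 224x224 inputs; `nn.AdaptiveAvgPool2d(1)` is the usual way to remove that restriction.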

ResNet50/101/152: PyTorch implementation


```python
import torch
import torch.nn as nn
import torchvision
import numpy as np

print("PyTorch Version: ", torch.__version__)
print("Torchvision Version: ", torchvision.__version__)

__all__ = ['ResNet50', 'ResNet101', 'ResNet152']


def Conv1(in_planes, places, stride=2):
    # Stem: 7x7/2 conv + BN + ReLU + 3x3/2 max pool (224x224 -> 56x56)
    return nn.Sequential(
        nn.Conv2d(in_channels=in_planes, out_channels=places, kernel_size=7, stride=stride, padding=3, bias=False),
        nn.BatchNorm2d(places),
        nn.ReLU(inplace=True),
        nn.MaxPool2d(kernel_size=3, stride=2, padding=1)
    )


class Bottleneck(nn.Module):
    def __init__(self, in_places, places, stride=1, downsampling=False, expansion=4):
        super(Bottleneck, self).__init__()
        self.expansion = expansion
        self.downsampling = downsampling

        # 1x1 reduce -> 3x3 (carries the stride) -> 1x1 expand to places * expansion channels
        self.bottleneck = nn.Sequential(
            nn.Conv2d(in_channels=in_places, out_channels=places, kernel_size=1, stride=1, bias=False),
            nn.BatchNorm2d(places),
            nn.ReLU(inplace=True),
            nn.Conv2d(in_channels=places, out_channels=places, kernel_size=3, stride=stride, padding=1, bias=False),
            nn.BatchNorm2d(places),
            nn.ReLU(inplace=True),
            nn.Conv2d(in_channels=places, out_channels=places * self.expansion, kernel_size=1, stride=1, bias=False),
            nn.BatchNorm2d(places * self.expansion),
        )

        # Projection shortcut, needed when the channel count or spatial size changes
        if self.downsampling:
            self.downsample = nn.Sequential(
                nn.Conv2d(in_channels=in_places, out_channels=places * self.expansion, kernel_size=1, stride=stride, bias=False),
                nn.BatchNorm2d(places * self.expansion)
            )
        self.relu = nn.ReLU(inplace=True)

    def forward(self, x):
        residual = x
        out = self.bottleneck(x)

        if self.downsampling:
            residual = self.downsample(x)

        out += residual
        out = self.relu(out)
        return out


class ResNet(nn.Module):
    def __init__(self, blocks, num_classes=1000, expansion=4):
        super(ResNet, self).__init__()
        self.expansion = expansion

        self.conv1 = Conv1(in_planes=3, places=64)

        # blocks[i] is the number of Bottleneck blocks in stage i
        self.layer1 = self.make_layer(in_places=64, places=64, block=blocks[0], stride=1)
        self.layer2 = self.make_layer(in_places=256, places=128, block=blocks[1], stride=2)
        self.layer3 = self.make_layer(in_places=512, places=256, block=blocks[2], stride=2)
        self.layer4 = self.make_layer(in_places=1024, places=512, block=blocks[3], stride=2)

        # A 7x7 average pool assumes a 224x224 input (7x7 feature map after layer4)
        self.avgpool = nn.AvgPool2d(7, stride=1)
        self.fc = nn.Linear(2048, num_classes)

        # Kaiming init for convs; BN starts as the identity (weight 1, bias 0)
        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')
            elif isinstance(m, nn.BatchNorm2d):
                nn.init.constant_(m.weight, 1)
                nn.init.constant_(m.bias, 0)

    def make_layer(self, in_places, places, block, stride):
        layers = []
        # The first block of each stage changes the channel count (and stride), so it gets the projection shortcut
        layers.append(Bottleneck(in_places, places, stride, downsampling=True))
        for i in range(1, block):
            layers.append(Bottleneck(places * self.expansion, places))
        return nn.Sequential(*layers)

    def forward(self, x):
        x = self.conv1(x)

        x = self.layer1(x)
        x = self.layer2(x)
        x = self.layer3(x)
        x = self.layer4(x)

        x = self.avgpool(x)
        x = x.view(x.size(0), -1)
        x = self.fc(x)
        return x


def ResNet50():
    return ResNet([3, 4, 6, 3])


def ResNet101():
    return ResNet([3, 4, 23, 3])


def ResNet152():
    return ResNet([3, 8, 36, 3])


if __name__ == '__main__':
    # model = torchvision.models.resnet50()
    model = ResNet50()
    print(model)

    input = torch.randn(1, 3, 224, 224)
    out = model(input)
    print(out.shape)  # torch.Size([1, 1000])
```
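One way to check that the `ResNet` above matches the standard ResNet-50/101/152 is to count its parameters by hand. The plain-Python sketch below mirrors the structure of `Conv1`, `Bottleneck` and `make_layer` (bias-free convs, two learnable parameters per BatchNorm channel, a projection shortcut only in the first block of each stage, and a `Linear(2048, num_classes)` head). The helpers `bn`, `bottleneck_params` and `resnet_params` are illustrative names, not part of the original code. For 1000 classes it yields 25,557,032 parameters for ResNet-50, the same figure torchvision lists for its `resnet50`; with PyTorch installed, `sum(p.numel() for p in ResNet50().parameters())` should print the same number.

```python
def bn(c):
    # BatchNorm2d learns a weight and a bias per channel (running stats are buffers, not parameters)
    return 2 * c


def bottleneck_params(in_places, places, downsampling, expansion=4):
    out = places * expansion
    total = in_places * places + bn(places)        # 1x1 reduce
    total += places * places * 9 + bn(places)      # 3x3
    total += places * out + bn(out)                # 1x1 expand
    if downsampling:
        total += in_places * out + bn(out)         # 1x1 projection shortcut
    return total


def resnet_params(blocks, num_classes=1000, expansion=4):
    total = 3 * 64 * 7 * 7 + bn(64)                # stem conv + BN
    in_places = 64
    for places, n in zip([64, 128, 256, 512], blocks):
        total += bottleneck_params(in_places, places, True, expansion)
        in_places = places * expansion
        total += (n - 1) * bottleneck_params(in_places, places, False, expansion)
    total += 2048 * num_classes + num_classes      # fc weight + bias
    return total


for name, blocks in [("ResNet50", [3, 4, 6, 3]),
                     ("ResNet101", [3, 4, 23, 3]),
                     ("ResNet152", [3, 8, 36, 3])]:
    print(name, f"{resnet_params(blocks):,}")
```

The same helper gives 44,549,160 for ResNet-101 and 60,192,808 for ResNet-152; the extra depth is almost entirely in stage 3, where each added block costs about 1.1M parameters.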

ResNeXt50 PyTorch code

```python
import torch
import torch.nn as nn


class Block(nn.Module):
    # ResNeXt bottleneck: 1x1 reduce to out_channels // 2, 3x3 grouped conv (cardinality 32), 1x1 expand
    def __init__(self, in_channels, out_channels, stride=1, is_shortcut=False):
        super(Block, self).__init__()
        self.relu = nn.ReLU(inplace=True)
        self.is_shortcut = is_shortcut
        # Here the stride sits on the first 1x1 conv (torchvision puts it on the 3x3; output shapes are the same)
        self.conv1 = nn.Sequential(
            nn.Conv2d(in_channels, out_channels // 2, kernel_size=1, stride=stride, bias=False),
            nn.BatchNorm2d(out_channels // 2),
            nn.ReLU()
        )
        self.conv2 = nn.Sequential(
            nn.Conv2d(out_channels // 2, out_channels // 2, kernel_size=3, stride=1, padding=1, groups=32,
                      bias=False),
            nn.BatchNorm2d(out_channels // 2),
            nn.ReLU()
        )
        self.conv3 = nn.Sequential(
            nn.Conv2d(out_channels // 2, out_channels, kernel_size=1, stride=1, bias=False),
            nn.BatchNorm2d(out_channels),
        )
        if is_shortcut:
            # Projection shortcut; no conv bias because BatchNorm follows
            self.shortcut = nn.Sequential(
                nn.Conv2d(in_channels, out_channels, kernel_size=1, stride=stride, bias=False),
                nn.BatchNorm2d(out_channels)
            )

    def forward(self, x):
        x_shortcut = x
        x = self.conv1(x)
        x = self.conv2(x)
        x = self.conv3(x)
        if self.is_shortcut:
            x_shortcut = self.shortcut(x_shortcut)
        x = x + x_shortcut
        x = self.relu(x)
        return x


class Resnext(nn.Module):
    def __init__(self, num_classes, layer=(3, 4, 6, 3)):
        super(Resnext, self).__init__()
        # Stem, identical to ResNet (224x224 -> 56x56)
        self.conv1 = nn.Sequential(
            nn.Conv2d(3, 64, kernel_size=7, stride=2, padding=3, bias=False),
            nn.BatchNorm2d(64),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=3, stride=2, padding=1)
        )
        # Four stages: (in_channels, out_channels, stride, number of blocks)
        self.conv2 = self._make_layer(64, 256, 1, num=layer[0])
        self.conv3 = self._make_layer(256, 512, 2, num=layer[1])
        self.conv4 = self._make_layer(512, 1024, 2, num=layer[2])
        self.conv5 = self._make_layer(1024, 2048, 2, num=layer[3])
        # A 7x7 average pool assumes a 224x224 input
        self.global_average_pool = nn.AvgPool2d(kernel_size=7, stride=1)
        self.fc = nn.Linear(2048, num_classes)

    def forward(self, x):
        x = self.conv1(x)
        x = self.conv2(x)
        x = self.conv3(x)
        x = self.conv4(x)
        x = self.conv5(x)
        x = self.global_average_pool(x)
        x = torch.flatten(x, 1)
        x = self.fc(x)
        return x

    def _make_layer(self, in_channels, out_channels, stride, num):
        layers = []
        # Only the first block of a stage changes channels/resolution, so only it needs a projection shortcut
        block_1 = Block(in_channels, out_channels, stride=stride, is_shortcut=True)
        layers.append(block_1)
        for i in range(1, num):
            layers.append(Block(out_channels, out_channels, stride=1, is_shortcut=False))
        return nn.Sequential(*layers)


if __name__ == '__main__':
    net = Resnext(10)
    x = torch.rand((10, 3, 224, 224))
    # Feed the input through each top-level child in order and print its output shape
    for name, layer in net.named_children():
        if name != "fc":
            x = layer(x)
            print(name, 'output shape:', x.shape)
        else:
            x = x.view(x.size(0), -1)
            x = layer(x)
            print(name, 'output shape:', x.shape)
    # conv1 -> [10, 64, 56, 56], conv2 -> [10, 256, 56, 56], conv3 -> [10, 512, 28, 28],
    # conv4 -> [10, 1024, 14, 14], conv5 -> [10, 2048, 7, 7],
    # global_average_pool -> [10, 2048, 1, 1], fc -> [10, 10]
```
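The structural change from the ResNet `Bottleneck` is the 3x3 convolution: `Block` doubles the bottleneck width to `out_channels // 2` but splits that conv into `groups=32`, so each filter only sees `(out_channels // 2) / 32` input channels. A grouped `Conv2d(c_in, c_out, k, groups=g)` holds `c_out * (c_in // g) * k * k` weights. The plain-Python sketch below uses that formula to count the parameters of `Resnext`, assuming the shortcut convolutions carry no bias (a biased 1x1 shortcut conv would add 256 + 512 + 1024 + 2048 = 3,840 parameters). The helpers `conv_weights`, `bn`, `block_params` and `resnext_params` are illustrative names, not part of the original code. With `num_classes=1000` it yields 25,028,904, the figure torchvision lists for `resnext50_32x4d`.

```python
def conv_weights(c_in, c_out, k, groups=1):
    # Each of the c_out filters only sees c_in // groups input channels (no bias)
    return c_out * (c_in // groups) * k * k


def bn(c):
    return 2 * c  # BatchNorm2d weight + bias per channel


def block_params(in_channels, out_channels, is_shortcut, groups=32):
    mid = out_channels // 2
    total = conv_weights(in_channels, mid, 1) + bn(mid)             # conv1: 1x1 reduce
    total += conv_weights(mid, mid, 3, groups) + bn(mid)            # conv2: grouped 3x3
    total += conv_weights(mid, out_channels, 1) + bn(out_channels)  # conv3: 1x1 expand
    if is_shortcut:
        # Assumption: bias-free 1x1 projection shortcut
        total += conv_weights(in_channels, out_channels, 1) + bn(out_channels)
    return total


def resnext_params(num_classes=1000, layer=(3, 4, 6, 3)):
    total = conv_weights(3, 64, 7) + bn(64)                           # stem conv + BN
    in_channels = 64
    for out_channels, num in zip([256, 512, 1024, 2048], layer):
        total += block_params(in_channels, out_channels, is_shortcut=True)
        total += (num - 1) * block_params(out_channels, out_channels, is_shortcut=False)
        in_channels = out_channels
    return total + 2048 * num_classes + num_classes                  # fc weight + bias


# Stage-1 3x3 conv: ResNet uses a dense 64->64 conv, ResNeXt a 128->128 conv in 32 groups
print(conv_weights(64, 64, 3), conv_weights(128, 128, 3, groups=32))
print(f"{resnext_params():,}", f"{resnext_params(num_classes=10):,}")
```

Even with the doubled width, the grouped 3x3 conv in stage 1 has 4,608 weights against 36,864 for ResNet's dense one, which is why ResNeXt50 ends up with about 2% fewer parameters than ResNet50's 25.6M. The 10-class model built in the test loop above comes to 23,000,394 parameters by the same count.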

Source: wanghao.blog.csdn.net, author: AI浩. Copyright belongs to the original author; please contact the author before reposting.

Original article: wanghao.blog.csdn.net/article/details/115477386

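[Copyright notice] This article was reposted by a Huawei Cloud community user. If you find content in this community that you suspect is plagiarized, please report it by email with supporting evidence; once verified, the community will remove the infringing content immediately. Report email: cloudbbs@huaweicloud.com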
