Cat vs. Dog Classification with a Custom Model in PyTorch

Abstract

This example uses a subset of the Dogs vs. Cats data as its dataset and a custom-defined model.

训练

1、构建数据集

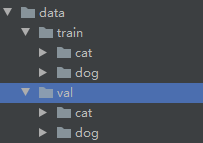

在data文件夹下面新家train和val文件夹,分别在train和val文件夹下面新家cat和dog文件夹,并将图片放进去。如图:
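The folder layout described above can also be created by a script. The following is a minimal sketch, assuming the raw images sit in one flat folder and that their file names start with the class name (for example `cat.0.jpg` or `dog.12.jpg`). The helper `split_dataset`, the `val_ratio` of 0.2, and the `seed` are illustrative assumptions, not part of the original article.

```python
import random
import shutil
from pathlib import Path


def split_dataset(src_dir, dst_dir="data", val_ratio=0.2, seed=0):
    """Copy images from a flat folder into dst_dir/{train,val}/{cat,dog}.

    Assumes file names start with the class name, e.g. cat.0.jpg or dog.12.jpg.
    Returns a dict mapping (split, class) to the number of copied files.
    """
    src = Path(src_dir)
    dst = Path(dst_dir)
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    counts = {}
    for cls in ("cat", "dog"):
        files = sorted(p for p in src.iterdir()
                       if p.is_file() and p.name.startswith(cls + "."))
        rng.shuffle(files)
        n_val = int(len(files) * val_ratio)
        splits = {"val": files[:n_val], "train": files[n_val:]}
        for split, members in splits.items():
            target = dst / split / cls
            target.mkdir(parents=True, exist_ok=True)
            for p in members:
                shutil.copy(p, target / p.name)
            counts[(split, cls)] = len(members)
    return counts
```

Calling `split_dataset("raw_images")` (where `raw_images` is a hypothetical source folder) would then produce `data/train/cat`, `data/train/dog`, `data/val/cat` and `data/val/dog`.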

2. Import libraries

    # Import libraries
    import torch.nn.functional as F
    import torch.optim as optim
    import torch
    import torch.nn as nn
    import torch.nn.parallel
    import torch.optim
    import torch.utils.data
    import torch.utils.data.distributed
    import torchvision.transforms as transforms
    import torchvision.datasets as datasets

    # Set the hyperparameters
    BATCH_SIZE = 20
    EPOCHS = 10
    DEVICE = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

    # Data preprocessing
    transform = transforms.Compose([
        transforms.Resize(100),
        transforms.RandomVerticalFlip(),
        transforms.RandomCrop(50),
        transforms.RandomResizedCrop(150),  # final image size is 150x150
        transforms.ColorJitter(brightness=0.5, contrast=0.5, hue=0.5),
        transforms.ToTensor(),
        transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])
    ])
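The last two steps of the pipeline are plain arithmetic: `ToTensor` scales pixel values to the range [0, 1], and `Normalize` with mean 0.5 and standard deviation 0.5 then computes `(value - 0.5) / 0.5` per channel, which maps that range to [-1, 1]. A minimal sketch of this arithmetic in plain Python follows; `normalize` and `denormalize` are illustrative helpers, not torchvision functions.

```python
def normalize(value, mean=0.5, std=0.5):
    """Per-channel arithmetic of transforms.Normalize: (value - mean) / std."""
    return (value - mean) / std


def denormalize(value, mean=0.5, std=0.5):
    """Inverse mapping, useful when displaying a normalized image again."""
    return value * std + mean


# A value already scaled to [0, 1] by ToTensor ends up in [-1, 1].
for v in (0.0, 0.5, 1.0):
    print(v, "->", normalize(v))
```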

    # Read the data
    dataset_train = datasets.ImageFolder('data/train', transform)
    print(dataset_train.imgs)
    # Label assigned to each folder
    print(dataset_train.class_to_idx)
    dataset_test = datasets.ImageFolder('data/val', transform)
    # Label assigned to each folder
    print(dataset_test.class_to_idx)
    # Wrap the datasets in data loaders
    train_loader = torch.utils.data.DataLoader(dataset_train, batch_size=BATCH_SIZE, shuffle=True)
    test_loader = torch.utils.data.DataLoader(dataset_test, batch_size=BATCH_SIZE, shuffle=True)
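`ImageFolder` derives the labels from the sub-folder names in sorted order, so with `cat` and `dog` folders the printed `class_to_idx` should be `{'cat': 0, 'dog': 1}`. The sketch below mimics that rule with the standard library only, which makes it easy to check a folder before training; `folder_class_to_idx` is a hypothetical helper, not a torchvision function.

```python
import os


def folder_class_to_idx(root):
    """Mimic ImageFolder's labeling rule: sorted sub-folder names -> 0, 1, ..."""
    classes = sorted(entry.name for entry in os.scandir(root) if entry.is_dir())
    return {name: idx for idx, name in enumerate(classes)}
```

Because the model has a single sigmoid output, label 1 (here `dog`) is the class whose probability the network predicts.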

    # Define the network
    class ConvNet(nn.Module):
        def __init__(self):
            super(ConvNet, self).__init__()
            self.conv1 = nn.Conv2d(3, 32, 3)
            self.max_pool1 = nn.MaxPool2d(2)
            self.conv2 = nn.Conv2d(32, 64, 3)
            self.max_pool2 = nn.MaxPool2d(2)
            self.conv3 = nn.Conv2d(64, 64, 3)
            self.conv4 = nn.Conv2d(64, 64, 3)
            self.max_pool3 = nn.MaxPool2d(2)
            self.conv5 = nn.Conv2d(64, 128, 3)
            self.conv6 = nn.Conv2d(128, 128, 3)
            self.max_pool4 = nn.MaxPool2d(2)
            self.fc1 = nn.Linear(4608, 512)  # 128 channels * 6 * 6 for a 150x150 input
            self.fc2 = nn.Linear(512, 1)

        def forward(self, x):
            in_size = x.size(0)
            x = self.conv1(x)
            x = F.relu(x)
            x = self.max_pool1(x)
            x = self.conv2(x)
            x = F.relu(x)
            x = self.max_pool2(x)
            x = self.conv3(x)
            x = F.relu(x)
            x = self.conv4(x)
            x = F.relu(x)
            x = self.max_pool3(x)
            x = self.conv5(x)
            x = F.relu(x)
            x = self.conv6(x)
            x = F.relu(x)
            x = self.max_pool4(x)
            # Flatten
            x = x.view(in_size, -1)
            x = self.fc1(x)
            x = F.relu(x)
            x = self.fc2(x)
            x = torch.sigmoid(x)
            return x


    modellr = 1e-4
    # Instantiate the model and move it to the GPU (or the CPU if CUDA is unavailable)
    model = ConvNet().to(DEVICE)
    # Use the simple, brute-force Adam optimizer with a lowered learning rate
    optimizer = optim.Adam(model.parameters(), lr=modellr)
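The input size of `fc1` (4608) follows from the 150x150 images produced by the preprocessing step: each 3x3 convolution without padding shrinks the side length by 2, and each `MaxPool2d(2)` halves it (rounding down). A small sketch of that bookkeeping in plain Python is shown below; the helper names are illustrative.

```python
def conv_out(size, kernel=3, stride=1, padding=0):
    """Output side length of a convolution (same formula as for nn.Conv2d)."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, kernel=2):
    """Output side length of MaxPool2d(kernel) with its default stride."""
    return size // kernel


def flattened_features(input_size=150):
    """Number of values reaching fc1 for a square input of the given size."""
    size = input_size
    size = pool_out(conv_out(size))            # conv1, max_pool1: 150 -> 148 -> 74
    size = pool_out(conv_out(size))            # conv2, max_pool2: 74 -> 72 -> 36
    size = pool_out(conv_out(conv_out(size)))  # conv3, conv4, max_pool3: 36 -> 34 -> 32 -> 16
    size = pool_out(conv_out(conv_out(size)))  # conv5, conv6, max_pool4: 16 -> 14 -> 12 -> 6
    return 128 * size * size                   # conv6 has 128 output channels


print(flattened_features(150))  # 4608
```

If the image size in the preprocessing step is changed, the first argument of `fc1` has to be changed to the value this function returns.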

    def adjust_learning_rate(optimizer, epoch):
        """Sets the learning rate to the initial LR decayed by 10 every 5 epochs"""
        modellrnew = modellr * (0.1 ** (epoch // 5))
        print("lr:", modellrnew)
        for param_group in optimizer.param_groups:
            param_group['lr'] = modellrnew
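The schedule is a step decay: the learning rate is multiplied by 0.1 each time `epoch // 5` increases. Since the training loop counts epochs from 1, epochs 1 to 4 run at 1e-4, epochs 5 to 9 at 1e-5, and epoch 10 at 1e-6. The sketch below reproduces just the arithmetic; `step_decay_lr` is an illustrative name.

```python
def step_decay_lr(base_lr, epoch, step=5, gamma=0.1):
    """Learning rate after step decay: base_lr * gamma ** (epoch // step)."""
    return base_lr * (gamma ** (epoch // step))


# Print the learning rate used in each of the 10 epochs.
for epoch in range(1, 11):
    print(epoch, step_decay_lr(1e-4, epoch))
```

PyTorch also ships `torch.optim.lr_scheduler.StepLR` for this kind of schedule; its epoch counting starts at 0, so the decay points may be offset relative to the hand-written function.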

    # Define the training procedure
    def train(model, device, train_loader, optimizer, epoch):
        model.train()
        for batch_idx, (data, target) in enumerate(train_loader):
            # Labels become float tensors of shape (batch, 1) to match the model output
            data, target = data.to(device), target.to(device).float().unsqueeze(1)
            optimizer.zero_grad()
            output = model(data)
            # print(output)
            loss = F.binary_cross_entropy(output, target)
            loss.backward()
            optimizer.step()
            if (batch_idx + 1) % 10 == 0:
                print('Train Epoch: {} [{}/{} ({:.0f}%)]\tLoss: {:.6f}'.format(
                    epoch, (batch_idx + 1) * len(data), len(train_loader.dataset),
                    100. * (batch_idx + 1) / len(train_loader), loss.item()))


    # Define the evaluation procedure
    def val(model, device, test_loader):
        model.eval()
        test_loss = 0
        correct = 0
        with torch.no_grad():
            for data, target in test_loader:
                data, target = data.to(device), target.to(device).float().unsqueeze(1)
                output = model(data)
                # print(output)
                test_loss += F.binary_cross_entropy(output, target, reduction='mean').item()  # add up the per-batch mean losses
                pred = (output >= 0.5).long()  # threshold the sigmoid output at 0.5
                correct += pred.eq(target.long()).sum().item()
        test_loss /= len(test_loader)  # divide by the number of batches to get an average
        print('\nTest set: Average loss: {:.4f}, Accuracy: {}/{} ({:.0f}%)\n'.format(
            test_loss, correct, len(test_loader.dataset),
            100. * correct / len(test_loader.dataset)))
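Because `val` accumulates one mean loss per batch, the accumulated sum only becomes an average once it is divided by the number of batches. When the last batch is smaller than the others, the exact per-sample average additionally weights each batch mean by its batch size. The sketch below contrasts the two with made-up numbers; both helper names and the sample values are illustrative.

```python
def mean_of_batch_means(batch_means):
    """Average of the per-batch mean losses (each batch counts equally)."""
    return sum(batch_means) / len(batch_means)


def sample_weighted_mean(batch_means, batch_sizes):
    """Exact per-sample average: weight each batch mean by its batch size."""
    total = sum(m * n for m, n in zip(batch_means, batch_sizes))
    return total / sum(batch_sizes)


# Hypothetical numbers: two full batches of 20 and a final batch of 10.
means = [0.5, 0.5, 1.0]
sizes = [20, 20, 10]
print(mean_of_batch_means(means))          # about 0.667
print(sample_weighted_mean(means, sizes))  # 0.6
```

The two values coincide whenever all batches have the same size.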

9. Train and save the model

    # Train
    for epoch in range(1, EPOCHS + 1):
        adjust_learning_rate(optimizer, epoch)
        train(model, DEVICE, train_loader, optimizer, epoch)
        val(model, DEVICE, test_loader)
    # Save the whole model object (architecture and weights) after training
    torch.save(model, 'model.pth')
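Saving the whole model object pickles the `ConvNet` class along with the weights, which is why a script that loads `model.pth` needs the same class definition. A common alternative is to save only the `state_dict`. The following is a minimal sketch of that alternative, not the approach used in this article; `save_weights`, `load_weights`, and the file name in the usage comment are assumptions.

```python
import torch
import torch.nn as nn


def save_weights(model, path):
    """Save only the parameters and buffers of the model."""
    torch.save(model.state_dict(), path)


def load_weights(model, path, device="cpu"):
    """Load saved weights into an already constructed model of the same architecture."""
    state = torch.load(path, map_location=device)
    model.load_state_dict(state)
    return model.to(device).eval()


# Hypothetical usage with the network from this article:
#   save_weights(model, 'model_weights.pth')
#   model = load_weights(ConvNet(), 'model_weights.pth', DEVICE)
```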

Complete code:

    # Import libraries
    import torch.nn.functional as F
    import torch.optim as optim
    import torch
    import torch.nn as nn
    import torch.nn.parallel

    import torch.optim
    import torch.utils.data
    import torch.utils.data.distributed
    import torchvision.transforms as transforms
    import torchvision.datasets as datasets

    # Set the hyperparameters
    BATCH_SIZE = 20
    EPOCHS = 10
    DEVICE = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

    # Data preprocessing
    transform = transforms.Compose([
        transforms.Resize(100),
        transforms.RandomVerticalFlip(),
        transforms.RandomCrop(50),
        transforms.RandomResizedCrop(150),  # final image size is 150x150
        transforms.ColorJitter(brightness=0.5, contrast=0.5, hue=0.5),
        transforms.ToTensor(),
        transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])
    ])

    # Read the data
    dataset_train = datasets.ImageFolder('data/train', transform)
    print(dataset_train.imgs)
    # Label assigned to each folder
    print(dataset_train.class_to_idx)
    dataset_test = datasets.ImageFolder('data/val', transform)
    # Label assigned to each folder
    print(dataset_test.class_to_idx)
    # Wrap the datasets in data loaders
    train_loader = torch.utils.data.DataLoader(dataset_train, batch_size=BATCH_SIZE, shuffle=True)
    test_loader = torch.utils.data.DataLoader(dataset_test, batch_size=BATCH_SIZE, shuffle=True)


    # Define the network
    class ConvNet(nn.Module):
        def __init__(self):
            super(ConvNet, self).__init__()
            self.conv1 = nn.Conv2d(3, 32, 3)
            self.max_pool1 = nn.MaxPool2d(2)
            self.conv2 = nn.Conv2d(32, 64, 3)
            self.max_pool2 = nn.MaxPool2d(2)
            self.conv3 = nn.Conv2d(64, 64, 3)
            self.conv4 = nn.Conv2d(64, 64, 3)
            self.max_pool3 = nn.MaxPool2d(2)
            self.conv5 = nn.Conv2d(64, 128, 3)
            self.conv6 = nn.Conv2d(128, 128, 3)
            self.max_pool4 = nn.MaxPool2d(2)
            self.fc1 = nn.Linear(4608, 512)  # 128 channels * 6 * 6 for a 150x150 input
            self.fc2 = nn.Linear(512, 1)

        def forward(self, x):
            in_size = x.size(0)
            x = self.conv1(x)
            x = F.relu(x)
            x = self.max_pool1(x)
            x = self.conv2(x)
            x = F.relu(x)
            x = self.max_pool2(x)
            x = self.conv3(x)
            x = F.relu(x)
            x = self.conv4(x)
            x = F.relu(x)
            x = self.max_pool3(x)
            x = self.conv5(x)
            x = F.relu(x)
            x = self.conv6(x)
            x = F.relu(x)
            x = self.max_pool4(x)
            # Flatten
            x = x.view(in_size, -1)
            x = self.fc1(x)
            x = F.relu(x)
            x = self.fc2(x)
            x = torch.sigmoid(x)
            return x


    modellr = 1e-4
    # Instantiate the model and move it to the GPU (or the CPU if CUDA is unavailable)
    model = ConvNet().to(DEVICE)
    # Use the simple, brute-force Adam optimizer with a lowered learning rate
    optimizer = optim.Adam(model.parameters(), lr=modellr)


    def adjust_learning_rate(optimizer, epoch):
        """Sets the learning rate to the initial LR decayed by 10 every 5 epochs"""
        modellrnew = modellr * (0.1 ** (epoch // 5))
        print("lr:", modellrnew)
        for param_group in optimizer.param_groups:
            param_group['lr'] = modellrnew


    # Define the training procedure
    def train(model, device, train_loader, optimizer, epoch):
        model.train()
        for batch_idx, (data, target) in enumerate(train_loader):
            # Labels become float tensors of shape (batch, 1) to match the model output
            data, target = data.to(device), target.to(device).float().unsqueeze(1)
            optimizer.zero_grad()
            output = model(data)
            # print(output)
            loss = F.binary_cross_entropy(output, target)
            loss.backward()
            optimizer.step()
            if (batch_idx + 1) % 10 == 0:
                print('Train Epoch: {} [{}/{} ({:.0f}%)]\tLoss: {:.6f}'.format(
                    epoch, (batch_idx + 1) * len(data), len(train_loader.dataset),
                    100. * (batch_idx + 1) / len(train_loader), loss.item()))


    # Define the evaluation procedure
    def val(model, device, test_loader):
        model.eval()
        test_loss = 0
        correct = 0
        with torch.no_grad():
            for data, target in test_loader:
                data, target = data.to(device), target.to(device).float().unsqueeze(1)
                output = model(data)
                # print(output)
                test_loss += F.binary_cross_entropy(output, target, reduction='mean').item()  # add up the per-batch mean losses
                pred = (output >= 0.5).long()  # threshold the sigmoid output at 0.5
                correct += pred.eq(target.long()).sum().item()
        test_loss /= len(test_loader)  # divide by the number of batches to get an average
        print('\nTest set: Average loss: {:.4f}, Accuracy: {}/{} ({:.0f}%)\n'.format(
            test_loss, correct, len(test_loader.dataset),
            100. * correct / len(test_loader.dataset)))


    # Train
    for epoch in range(1, EPOCHS + 1):
        adjust_learning_rate(optimizer, epoch)
        train(model, DEVICE, train_loader, optimizer, epoch)
        val(model, DEVICE, test_loader)
    # Save the whole model object (architecture and weights) after training
    torch.save(model, 'model.pth')

测试

完整代码:

-

from __future__ import print_function, division

-

-

from PIL import Image

-

-

from torchvision import transforms

-

import torch.nn.functional as F

-

-

import torch

-

import torch.nn as nn

-

import torch.nn.parallel

-

# 定义网络

-

class ConvNet(nn.Module):

-

def __init__(self):

-

super(ConvNet, self).__init__()

-

self.conv1 = nn.Conv2d(3, 32, 3)

-

self.max_pool1 = nn.MaxPool2d(2)

-

self.conv2 = nn.Conv2d(32, 64, 3)

-

self.max_pool2 = nn.MaxPool2d(2)

-

self.conv3 = nn.Conv2d(64, 64, 3)

-

self.conv4 = nn.Conv2d(64, 64, 3)

-

self.max_pool3 = nn.MaxPool2d(2)

-

self.conv5 = nn.Conv2d(64, 128, 3)

-

self.conv6 = nn.Conv2d(128, 128, 3)

-

self.max_pool4 = nn.MaxPool2d(2)

-

self.fc1 = nn.Linear(4608, 512)

-

self.fc2 = nn.Linear(512, 1)

-

-

def forward(self, x):

-

in_size = x.size(0)

-

x = self.conv1(x)

-

x = F.relu(x)

-

x = self.max_pool1(x)

-

x = self.conv2(x)

-

x = F.relu(x)

-

x = self.max_pool2(x)

-

x = self.conv3(x)

-

x = F.relu(x)

-

x = self.conv4(x)

-

x = F.relu(x)

-

x = self.max_pool3(x)

-

x = self.conv5(x)

-

x = F.relu(x)

-

x = self.conv6(x)

-

x = F.relu(x)

-

x = self.max_pool4(x)

-

# 展开

-

x = x.view(in_size, -1)

-

x = self.fc1(x)

-

x = F.relu(x)

-

x = self.fc2(x)

-

x = torch.sigmoid(x)

-

return x

-

# 模型存储路径

-

model_save_path = 'model.pth'

-

-

# ------------------------ 加载数据 --------------------------- #

-

# Data augmentation and normalization for training

-

# Just normalization for validation

-

# 定义预训练变换

-

# 数据预处理

-

transform_test = transforms.Compose([

-

transforms.Resize(100),

-

transforms.RandomVerticalFlip(),

-

transforms.RandomCrop(50),

-

transforms.RandomResizedCrop(150),

-

transforms.ColorJitter(brightness=0.5, contrast=0.5, hue=0.5),

-

transforms.ToTensor(),

-

transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])

-

])

-

-

-

class_names = ['cat', 'dog'] # 这个顺序很重要,要和训练时候的类名顺序一致

-

-

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

-

-

# ------------------------ 载入模型并且训练 --------------------------- #

-

model = torch.load(model_save_path)

-

model.eval()

-

# print(model)

-

-

image_PIL = Image.open('dog.12.jpg')

-

#

-

image_tensor = transform_test(image_PIL)

-

# 以下语句等效于 image_tensor = torch.unsqueeze(image_tensor, 0)

-

image_tensor.unsqueeze_(0)

-

# 没有这句话会报错

-

image_tensor = image_tensor.to(device)

-

-

out = model(image_tensor)

-

pred = torch.tensor([[1] if num[0] >= 0.5 else [0] for num in out]).to(device)

-

print(class_names[pred])

-

-

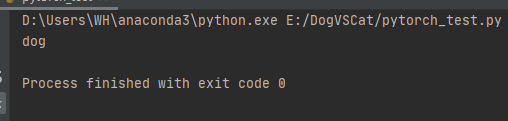

运行结果:
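The single sigmoid output can be read as the probability of the class with label 1, which is `dog` when the labels come from the sorted folder names `cat` and `dog`. The sketch below turns such a probability into a class name together with the confidence for the chosen class; `label_from_probability` is an illustrative helper, and the 0.5 threshold mirrors the one used in the script above.

```python
def label_from_probability(prob, class_names=("cat", "dog"), threshold=0.5):
    """Map a sigmoid output (probability of class index 1) to (name, confidence)."""
    index = 1 if prob >= threshold else 0
    confidence = prob if index == 1 else 1.0 - prob
    return class_names[index], confidence


print(label_from_probability(0.75))  # ('dog', 0.75)
print(label_from_probability(0.25))  # ('cat', 0.75)
```

In the test script, the probability would be `out.item()`.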

Source: wanghao.blog.csdn.net. Author: AI浩. Copyright belongs to the original author; please contact the author before reposting.

Original link: wanghao.blog.csdn.net/article/details/110428926
