Certified Kubernetes Administrator (CKA) Exam Notes (Part 2)

Preface

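- I'm preparing for the CKA certification. I signed up for a course that cost a month's salary, which hurts, so I have to pass.
- This post collects the notes I organized after attending the course; it is meant for review.
- The series covers docker and k8s. It grew long because of the pasted code, so it is published in several parts.
- This part covers k8s multi-cluster switching, k8s version upgrades, etcd, and pod-related topics.
- If you need any of the images used in these posts, leave a comment.

The meaning of living is learning to live truthfully; the meaning of life is searching for the meaning of living. -- 山河已无恙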

Create a second cluster: set up passwordless SSH, update the inventory, then adapt the playbook used earlier.

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$cat inventory

[node]

192.168.26.82

192.168.26.83

[master]

192.168.26.81

[temp]

192.168.26.91

192.168.26.92

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$cat init_c2_playbook.yml

- name: init k8s
  hosts: temp
  tasks:
    # Open the firewall by setting the default zone to trusted
    - shell: firewall-cmd --set-default-zone=trusted
    # Disable SELinux
    - shell: getenforce
      register: out
    - debug: msg="{{out}}"
    - shell: setenforce 0
      when: out.stdout != "Disabled"
    - replace:
        path: /etc/selinux/config
        regexp: "SELINUX=enforcing"
        replace: "SELINUX=disabled"
    - shell: cat /etc/selinux/config
      register: out
    - debug: msg="{{out}}"
    - copy:
        src: ./hosts_c2
        dest: /etc/hosts
        force: yes
    # Disable swap
    - shell: swapoff -a
    - shell: sed -i '/swap/d' /etc/fstab
    - shell: cat /etc/fstab
      register: out
    - debug: msg="{{out}}"
    # Configure the yum repositories (back up the old ones first)
    - shell: tar -cvf /etc/yum.tar /etc/yum.repos.d/
    - shell: rm -rf /etc/yum.repos.d/*
    - shell: wget ftp://ftp.rhce.cc/k8s/* -P /etc/yum.repos.d/
    # Install docker-ce
    - yum:
        name: docker-ce
        state: present
    # Configure the docker registry mirror (-p keeps the task from failing if the directory exists)
    - shell: mkdir -p /etc/docker
    - copy:
        src: ./daemon.json
        dest: /etc/docker/daemon.json
    - shell: systemctl daemon-reload
    - shell: systemctl restart docker
    # Configure kernel parameters and install the k8s packages
    - copy:
        src: ./k8s.conf
        dest: /etc/sysctl.d/k8s.conf
    - shell: yum install -y kubelet-1.21.1-0 kubeadm-1.21.1-0 kubectl-1.21.1-0 --disableexcludes=kubernetes
    # Load the missing coredns image
    - copy:
        src: ./coredns-1.21.tar
        dest: /root/coredns-1.21.tar
    - shell: docker load -i /root/coredns-1.21.tar
    # Start the service
    - shell: systemctl restart kubelet
    - shell: systemctl enable kubelet

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$

The second cluster has one master node and one worker node.

[root@vms91 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

vms91.liruilongs.github.io Ready control-plane,master 139m v1.21.1

vms92.liruilongs.github.io Ready <none> 131m v1.21.1

[root@vms91 ~]# kubectl config view

apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: DATA+OMITTED
    server: https://192.168.26.91:6443
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: kubernetes-admin
  name: kubernetes-admin@kubernetes
current-context: kubernetes-admin@kubernetes
kind: Config
preferences: {}
users:
- name: kubernetes-admin
  user:
    client-certificate-data: REDACTED
    client-key-data: REDACTED

[root@vms91 ~]#

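| Managing multiple clusters from one console (multi-cluster switching) |
|---|
| One console manages multiple clusters |
| A kubeconfig file has three parts: |
| cluster: cluster information |
| context: binds a cluster to a user, plus attributes such as the default namespace |
| user: user credentials (certificate and key) |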

To do this, merge the kubeconfig files of the clusters into a single config file.

┌──[root@vms81.liruilongs.github.io]-[~/.kube]

└─$pwd;ls

/root/.kube

cache config

config

apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: LS0.........0tCg==
    server: https://192.168.26.81:6443
  name: cluster1
- cluster:
    certificate-authority-data: LS0.........0tCg==
    server: https://192.168.26.91:6443
  name: cluster2
contexts:
- context:
    cluster: cluster1
    namespace: kube-public
    user: kubernetes-admin1
  name: context1
- context:
    cluster: cluster2
    namespace: kube-system
    user: kubernetes-admin2
  name: context2
current-context: context2
kind: Config
preferences: {}
users:
- name: kubernetes-admin1
  user:
    client-certificate-data: LS0.......0tCg==
    client-key-data: LS0......LQo=
- name: kubernetes-admin2
  user:
    client-certificate-data: LS0.......0tCg==
    client-key-data: LS0......0tCg==
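The merged file above was edited by hand. As an alternative sketch, kubectl can merge several kubeconfig files listed in the KUBECONFIG variable and write them out with `kubectl config view --flatten`. The file names below are hypothetical, and `join_kubeconfig_paths` is a helper made up for this example. Note that kubectl keeps only the first entry when names collide, so clusters, users, and contexts that share a name (both clusters here default to `kubernetes` and `kubernetes-admin`) would still need renaming first, as was done above.

```shell
#!/bin/sh
# Join kubeconfig paths with ':' (the separator KUBECONFIG uses on Linux).
join_kubeconfig_paths() {
    joined=""
    for p in "$@"; do
        if [ -z "$joined" ]; then
            joined="$p"
        else
            joined="$joined:$p"
        fi
    done
    printf '%s\n' "$joined"
}

# Hypothetical per-cluster files, one copied from each cluster's master.
c1="$HOME/.kube/cluster1.conf"
c2="$HOME/.kube/cluster2.conf"
merged_path=$(join_kubeconfig_paths "$c1" "$c2")
echo "KUBECONFIG=$merged_path"

# Flatten into one self-contained file, but only when kubectl and both inputs exist.
if command -v kubectl >/dev/null 2>&1 && [ -f "$c1" ] && [ -f "$c2" ]; then
    KUBECONFIG="$merged_path" kubectl config view --flatten > "$HOME/.kube/config.merged"
fi
```

The result is written to a separate file (`config.merged`, also a made-up name) so the existing `~/.kube/config` is not overwritten before the merge has been checked.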

Switching clusters: kubectl config use-context context2

┌──[root@vms81.liruilongs.github.io]-[~/.kube]

└─$kubectl config get-contexts

CURRENT NAME CLUSTER AUTHINFO NAMESPACE

* context1 cluster1 kubernetes-admin1 kube-public

context2 cluster2 kubernetes-admin2 kube-system

┌──[root@vms81.liruilongs.github.io]-[~/.kube]

└─$kubectl get nodes

NAME STATUS ROLES AGE VERSION

vms81.liruilongs.github.io Ready control-plane,master 23h v1.21.1

vms82.liruilongs.github.io Ready <none> 23h v1.21.1

vms83.liruilongs.github.io Ready <none> 23h v1.21.1

┌──[root@vms81.liruilongs.github.io]-[~/.kube]

└─$kubectl config use-context context2

Switched to context "context2".

┌──[root@vms81.liruilongs.github.io]-[~/.kube]

└─$kubectl config get-contexts

CURRENT NAME CLUSTER AUTHINFO NAMESPACE

context1 cluster1 kubernetes-admin1 kube-public

* context2 cluster2 kubernetes-admin2 kube-system

┌──[root@vms81.liruilongs.github.io]-[~/.kube]

└─$kubectl get nodes

NAME STATUS ROLES AGE VERSION

vms91.liruilongs.github.io Ready control-plane,master 8h v1.21.1

vms92.liruilongs.github.io Ready <none> 8h v1.21.1

┌──[root@vms81.liruilongs.github.io]-[~/.kube]

└─$

┌──[root@liruilongs.github.io]-[~]

└─$ yum -y install etcd

┌──[root@liruilongs.github.io]-[~]

└─$ rpm -qc etcd

/etc/etcd/etcd.conf

┌──[root@liruilongs.github.io]-[~]

└─$ vim $(rpm -qc etcd)

┌──[root@liruilongs.github.io]-[~]

└─$

#[Member]

# Data directory

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

# Peer (data synchronization) port

ETCD_LISTEN_PEER_URLS="http://192.168.26.91:2380,http://localhost:2380"

# Client (read/write) port

ETCD_LISTEN_CLIENT_URLS="http://192.168.26.91:2379,http://localhost:2379"

ETCD_NAME="default"

#[Clustering]

ETCD_ADVERTISE_CLIENT_URLS="http://localhost:2379"

┌──[root@liruilongs.github.io]-[~]

└─$ systemctl enable etcd --now

┌──[root@liruilongs.github.io]-[~]

└─$ etcdctl member list

8e9e05c52164694d: name=default peerURLs=http://localhost:2380 clientURLs=http://localhost:2379 isLeader=true

┌──[root@liruilongs.github.io]-[~]

└─$ etcdctl cluster-health

member 8e9e05c52164694d is healthy: got healthy result from http://localhost:2379

cluster is healthy

┌──[root@liruilongs.github.io]-[~]

└─$ etcdctl ls /

┌──[root@liruilongs.github.io]-[~]

└─$ etcdctl mkdir cka

┌──[root@liruilongs.github.io]-[~]

└─$ etcdctl ls /

/cka

┌──[root@liruilongs.github.io]-[~]

└─$ etcdctl rmdir /cka

┌──[root@liruilongs.github.io]-[~]

└─$ etcdctl ls /

┌──[root@liruilongs.github.io]-[~]

└─$

Switching between API versions 2 and 3

┌──[root@liruilongs.github.io]-[~]

└─$ etcdctl -v

etcdctl version: 3.3.11

API version: 2

┌──[root@liruilongs.github.io]-[~]

└─$ export ETCDCTL_API=3

┌──[root@liruilongs.github.io]-[~]

└─$ etcdctl version

etcdctl version: 3.3.11

API version: 3.3

┌──[root@liruilongs.github.io]-[~]

└─$

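- An etcd cluster is a distributed system that uses the Raft protocol to keep the state of its nodes consistent.
- Each node is in one of three states: Leader, Follower, or Candidate.
- When the cluster initializes, every node starts as a Follower and stays in sync with the other nodes through heartbeats.
- When a Follower receives no heartbeat from the Leader within a certain time, it changes its role to Candidate and starts a leader election.
- When configuring an etcd cluster, prefer an odd number of nodes over an even number: an extra even-numbered node raises the required majority without tolerating any more failures.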
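The odd-number recommendation follows from Raft's majority rule: a cluster of n members needs floor(n/2)+1 of them to agree. As a small illustration (the function names are made up for this sketch), the arithmetic shows that a fourth member raises the quorum without tolerating an additional failure:

```shell
#!/bin/sh
# Majority (quorum) needed in a Raft cluster of n members: floor(n/2) + 1.
quorum() {
    echo $(( $1 / 2 + 1 ))
}

# Number of members that may fail while a quorum can still be formed.
fault_tolerance() {
    echo $(( $1 - $1 / 2 - 1 ))
}

for n in 1 2 3 4 5; do
    printf 'members=%d quorum=%d tolerated_failures=%d\n' \
        "$n" "$(quorum "$n")" "$(fault_tolerance "$n")"
done
```

Three and four members both tolerate one failure, while five tolerate two, so the even sizes add cost without adding resilience.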

Environment preparation

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$cat inventory

......

[etcd]

192.168.26.100

192.168.26.101

192.168.26.102

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible etcd -m ping

192.168.26.100 | SUCCESS => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python"

},

"changed": false,

"ping": "pong"

}

192.168.26.102 | SUCCESS => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python"

},

"changed": false,

"ping": "pong"

}

192.168.26.101 | SUCCESS => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python"

},

"changed": false,

"ping": "pong"

}

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible etcd -m yum -a "name=etcd state=installed"

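Editing the configuration file

The first two hosts (192.168.26.100 and 192.168.26.101) bootstrap the cluster; the third (192.168.26.102) joins afterwards as an added member.

Write the configuration file on the control host.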

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$cat etcd.conf

ETCD_DATA_DIR="/var/lib/etcd/cluster.etcd"

ETCD_LISTEN_PEER_URLS="http://192.168.26.100:2380,http://localhost:2380"

ETCD_LISTEN_CLIENT_URLS="http://192.168.26.100:2379,http://localhost:2379"

ETCD_NAME="etcd-100"

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.26.100:2380"

ETCD_ADVERTISE_CLIENT_URLS="http://localhost:2379,http://192.168.26.100:2379"

ETCD_INITIAL_CLUSTER="etcd-100=http://192.168.26.100:2380,etcd-101=http://192.168.26.101:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

Copy the configuration file to 192.168.26.100 and 192.168.26.101

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible 192.168.26.100,192.168.26.101 -m copy -a "src=./etcd.conf dest=/etc/etcd/etcd.conf force=yes"

192.168.26.101 | CHANGED => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python"

},

"changed": true,

"checksum": "bae3b8bc6636bf7304cce647b7068aa45ced859b",

"dest": "/etc/etcd/etcd.conf",

"gid": 0,

"group": "root",

"md5sum": "5f2a3fbe27515f85b7f9ed42a206c2a6",

"mode": "0644",

"owner": "root",

"size": 533,

"src": "/root/.ansible/tmp/ansible-tmp-1633800905.88-59602-39965601417441/source",

"state": "file",

"uid": 0

}

192.168.26.100 | CHANGED => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python"

},

"changed": true,

"checksum": "bae3b8bc6636bf7304cce647b7068aa45ced859b",

"dest": "/etc/etcd/etcd.conf",

"gid": 0,

"group": "root",

"md5sum": "5f2a3fbe27515f85b7f9ed42a206c2a6",

"mode": "0644",

"owner": "root",

"size": 533,

"src": "/root/.ansible/tmp/ansible-tmp-1633800905.9-59600-209338664801782/source",

"state": "file",

"uid": 0

}

Check the configuration file

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible 192.168.26.100,192.168.26.101 -m shell -a "cat /etc/etcd/etcd.conf"

192.168.26.101 | CHANGED | rc=0 >>

ETCD_DATA_DIR="/var/lib/etcd/cluster.etcd"

ETCD_LISTEN_PEER_URLS="http://192.168.26.100:2380,http://localhost:2380"

ETCD_LISTEN_CLIENT_URLS="http://192.168.26.100:2379,http://localhost:2379"

ETCD_NAME="etcd-100"

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.26.100:2380"

ETCD_ADVERTISE_CLIENT_URLS="http://localhost:2379,http://192.168.26.100:2379"

ETCD_INITIAL_CLUSTER="etcd-100=http://192.168.26.100:2380,etcd-101=http://192.168.26.101:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

192.168.26.100 | CHANGED | rc=0 >>

ETCD_DATA_DIR="/var/lib/etcd/cluster.etcd"

ETCD_LISTEN_PEER_URLS="http://192.168.26.100:2380,http://localhost:2380"

ETCD_LISTEN_CLIENT_URLS="http://192.168.26.100:2379,http://localhost:2379"

ETCD_NAME="etcd-100"

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.26.100:2380"

ETCD_ADVERTISE_CLIENT_URLS="http://localhost:2379,http://192.168.26.100:2379"

ETCD_INITIAL_CLUSTER="etcd-100=http://192.168.26.100:2380,etcd-101=http://192.168.26.101:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

Adjust the configuration file on 101

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible 192.168.26.101 -m shell -a "sed -i '1,9s/100/101/g' /etc/etcd/etcd.conf"

192.168.26.101 | CHANGED | rc=0 >>

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible 192.168.26.100,192.168.26.101 -m shell -a "cat -n /etc/etcd/etcd.conf"

192.168.26.100 | CHANGED | rc=0 >>

1 ETCD_DATA_DIR="/var/lib/etcd/cluster.etcd"

2

3 ETCD_LISTEN_PEER_URLS="http://192.168.26.100:2380,http://localhost:2380"

4 ETCD_LISTEN_CLIENT_URLS="http://192.168.26.100:2379,http://localhost:2379"

5

6 ETCD_NAME="etcd-100"

7 ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.26.100:2380"

8

9 ETCD_ADVERTISE_CLIENT_URLS="http://localhost:2379,http://192.168.26.100:2379"

10

11 ETCD_INITIAL_CLUSTER="etcd-100=http://192.168.26.100:2380,etcd-101=http://192.168.26.101:2380"

12 ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

13 ETCD_INITIAL_CLUSTER_STATE="new"

192.168.26.101 | CHANGED | rc=0 >>

1 ETCD_DATA_DIR="/var/lib/etcd/cluster.etcd"

2

3 ETCD_LISTEN_PEER_URLS="http://192.168.26.101:2380,http://localhost:2380"

4 ETCD_LISTEN_CLIENT_URLS="http://192.168.26.101:2379,http://localhost:2379"

5

6 ETCD_NAME="etcd-101"

7 ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.26.101:2380"

8

9 ETCD_ADVERTISE_CLIENT_URLS="http://localhost:2379,http://192.168.26.101:2379"

10

11 ETCD_INITIAL_CLUSTER="etcd-100=http://192.168.26.100:2380,etcd-101=http://192.168.26.101:2380"

12 ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

13 ETCD_INITIAL_CLUSTER_STATE="new"

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$
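Editing by line range with sed works, but it depends on the exact layout of the file. As an alternative sketch, assuming the same addresses and the naming scheme used above (etcd-<last octet of the IP>), a hypothetical helper named `render_etcd_conf` could generate each node's file from its IP:

```shell
#!/bin/sh
# Print an etcd.conf for one node.
#   $1: node IP
#   $2: cluster state, "new" (default) or "existing"
#   $3: ETCD_INITIAL_CLUSTER value (defaults to the two bootstrap members)
render_etcd_conf() {
    ip="$1"
    state="${2:-new}"
    cluster="${3:-etcd-100=http://192.168.26.100:2380,etcd-101=http://192.168.26.101:2380}"
    name="etcd-${ip##*.}"   # last octet of the IP, e.g. etcd-101
    cat <<EOF
ETCD_DATA_DIR="/var/lib/etcd/cluster.etcd"

ETCD_LISTEN_PEER_URLS="http://${ip}:2380,http://localhost:2380"
ETCD_LISTEN_CLIENT_URLS="http://${ip}:2379,http://localhost:2379"

ETCD_NAME="${name}"
ETCD_INITIAL_ADVERTISE_PEER_URLS="http://${ip}:2380"

ETCD_ADVERTISE_CLIENT_URLS="http://localhost:2379,http://${ip}:2379"

ETCD_INITIAL_CLUSTER="${cluster}"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="${state}"
EOF
}

render_etcd_conf 192.168.26.101
```

For example, `render_etcd_conf 192.168.26.101 > etcd-101.conf` would produce the file for the second node, which could then be pushed with the copy module as before.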

List the etcd cluster members (etcd must already be running on both nodes)

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible 192.168.26.100,192.168.26.101 -m shell -a "etcdctl member list"

192.168.26.100 | CHANGED | rc=0 >>

6f2038a018db1103: name=etcd-100 peerURLs=http://192.168.26.100:2380 clientURLs=http://192.168.26.100:2379,http://localhost:2379 isLeader=false

bd330576bb637f25: name=etcd-101 peerURLs=http://192.168.26.101:2380 clientURLs=http://192.168.26.101:2379,http://localhost:2379 isLeader=true

192.168.26.101 | CHANGED | rc=0 >>

6f2038a018db1103: name=etcd-100 peerURLs=http://192.168.26.100:2380 clientURLs=http://192.168.26.100:2379,http://localhost:2379 isLeader=false

bd330576bb637f25: name=etcd-101 peerURLs=http://192.168.26.101:2380 clientURLs=http://192.168.26.101:2379,http://localhost:2379 isLeader=true

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$

Add 192.168.26.102 as an etcd member

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible 192.168.26.100 -m shell -a "etcdctl member add etcd-102 http://192.168.26.102:2380"

192.168.26.100 | CHANGED | rc=0 >>

Added member named etcd-102 with ID 2fd4f9ba70a04579 to cluster

ETCD_NAME="etcd-102"

ETCD_INITIAL_CLUSTER="etcd-102=http://192.168.26.102:2380,etcd-100=http://192.168.26.100:2380,etcd-101=http://192.168.26.101:2380"

ETCD_INITIAL_CLUSTER_STATE="existing"

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$

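Adapt the configuration file written earlier for 102. Note that the range 1,8 leaves line 9 (ETCD_ADVERTISE_CLIENT_URLS) pointing at 192.168.26.100, which is why etcd-102 advertises that client URL in the member list further down; a range of 1,9 would update it as well.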

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$sed -i '1,8s/100/102/g' etcd.conf

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$sed -i '13s/new/existing/' etcd.conf

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$sed -i 's#ETCD_INITIAL_CLUSTER="#ETCD_INITIAL_CLUSTER="etcd-102=http://192.168.26.102:2380,#' etcd.conf

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$cat -n etcd.conf

1 ETCD_DATA_DIR="/var/lib/etcd/cluster.etcd"

2

3 ETCD_LISTEN_PEER_URLS="http://192.168.26.102:2380,http://localhost:2380"

4 ETCD_LISTEN_CLIENT_URLS="http://192.168.26.102:2379,http://localhost:2379"

5

6 ETCD_NAME="etcd-102"

7 ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.26.102:2380"

8

9 ETCD_ADVERTISE_CLIENT_URLS="http://localhost:2379,http://192.168.26.100:2379"

10

11 ETCD_INITIAL_CLUSTER="etcd-102=http://192.168.26.102:2380,etcd-100=http://192.168.26.100:2380,etcd-101=http://192.168.26.101:2380"

12 ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

13 ETCD_INITIAL_CLUSTER_STATE="existing"

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$

Copy the configuration file over and start etcd

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible 192.168.26.102 -m copy -a "src=./etcd.conf dest=/etc/etcd/etcd.conf force=yes"

192.168.26.102 | CHANGED => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python"

},

"changed": true,

"checksum": "2d8fa163150e32da563f5e591134b38cc356d237",

"dest": "/etc/etcd/etcd.conf",

"gid": 0,

"group": "root",

"md5sum": "389c2850d434478e2d4d57a7798196de",

"mode": "0644",

"owner": "root",

"size": 574,

"src": "/root/.ansible/tmp/ansible-tmp-1633803533.57-102177-227527368141930/source",

"state": "file",

"uid": 0

}

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible 192.168.26.102 -m shell -a "systemctl enable etcd --now"

192.168.26.102 | CHANGED | rc=0 >>

Created symlink from /etc/systemd/system/multi-user.target.wants/etcd.service to /usr/lib/systemd/system/etcd.service.

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$

Verify that the member joined the cluster

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible etcd -m shell -a "etcdctl member list"

192.168.26.101 | CHANGED | rc=0 >>

2fd4f9ba70a04579: name=etcd-102 peerURLs=http://192.168.26.102:2380 clientURLs=http://192.168.26.100:2379,http://localhost:2379 isLeader=false

6f2038a018db1103: name=etcd-100 peerURLs=http://192.168.26.100:2380 clientURLs=http://192.168.26.100:2379,http://localhost:2379 isLeader=false

bd330576bb637f25: name=etcd-101 peerURLs=http://192.168.26.101:2380 clientURLs=http://192.168.26.101:2379,http://localhost:2379 isLeader=true

192.168.26.102 | CHANGED | rc=0 >>

2fd4f9ba70a04579: name=etcd-102 peerURLs=http://192.168.26.102:2380 clientURLs=http://192.168.26.100:2379,http://localhost:2379 isLeader=false

6f2038a018db1103: name=etcd-100 peerURLs=http://192.168.26.100:2380 clientURLs=http://192.168.26.100:2379,http://localhost:2379 isLeader=false

bd330576bb637f25: name=etcd-101 peerURLs=http://192.168.26.101:2380 clientURLs=http://192.168.26.101:2379,http://localhost:2379 isLeader=true

192.168.26.100 | CHANGED | rc=0 >>

2fd4f9ba70a04579: name=etcd-102 peerURLs=http://192.168.26.102:2380 clientURLs=http://192.168.26.100:2379,http://localhost:2379 isLeader=false

6f2038a018db1103: name=etcd-100 peerURLs=http://192.168.26.100:2380 clientURLs=http://192.168.26.100:2379,http://localhost:2379 isLeader=false

bd330576bb637f25: name=etcd-101 peerURLs=http://192.168.26.101:2380 clientURLs=http://192.168.26.101:2379,http://localhost:2379 isLeader=true

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$

Set the ETCDCTL_API environment variable on every node; this takes a little extra work.

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible etcd -m shell -a "echo 'export ETCDCTL_API=3' >> ~/.bashrc"

192.168.26.100 | CHANGED | rc=0 >>

192.168.26.102 | CHANGED | rc=0 >>

192.168.26.101 | CHANGED | rc=0 >>

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible etcd -m shell -a "cat ~/.bashrc"

192.168.26.100 | CHANGED | rc=0 >>

# .bashrc

# User specific aliases and functions

alias rm='rm -i'

alias cp='cp -i'

alias mv='mv -i'

# Source global definitions

if [ -f /etc/bashrc ]; then

. /etc/bashrc

fi

export ETCDCTL_API=3

192.168.26.102 | CHANGED | rc=0 >>

# .bashrc

# User specific aliases and functions

alias rm='rm -i'

alias cp='cp -i'

alias mv='mv -i'

# Source global definitions

if [ -f /etc/bashrc ]; then

. /etc/bashrc

fi

export ETCDCTL_API=3

192.168.26.101 | CHANGED | rc=0 >>

# .bashrc

# User specific aliases and functions

alias rm='rm -i'

alias cp='cp -i'

alias mv='mv -i'

# Source global definitions

if [ -f /etc/bashrc ]; then

. /etc/bashrc

fi

export ETCDCTL_API=3

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible etcd -m shell -a "etcdctl version"

192.168.26.100 | CHANGED | rc=0 >>

etcdctl version: 3.3.11

API version: 3.3

192.168.26.102 | CHANGED | rc=0 >>

etcdctl version: 3.3.11

API version: 3.3

192.168.26.101 | CHANGED | rc=0 >>

etcdctl version: 3.3.11

API version: 3.3

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$

Replication test

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible 192.168.26.100 -a "etcdctl put name liruilong"

192.168.26.100 | CHANGED | rc=0 >>

OK

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible etcd -a "etcdctl get name"

192.168.26.100 | CHANGED | rc=0 >>

name

liruilong

192.168.26.101 | CHANGED | rc=0 >>

name

liruilong

192.168.26.102 | CHANGED | rc=0 >>

name

liruilong

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$

Prepare the data

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible 192.168.26.100 -a "etcdctl put name liruilong"

192.168.26.100 | CHANGED | rc=0 >>

OK

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible etcd -a "etcdctl get name"

192.168.26.102 | CHANGED | rc=0 >>

name

liruilong

192.168.26.100 | CHANGED | rc=0 >>

name

liruilong

192.168.26.101 | CHANGED | rc=0 >>

name

liruilong

Take a snapshot of etcd on any one host

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible 192.168.26.101 -a "etcdctl snapshot save snap20211010.db"

192.168.26.101 | CHANGED | rc=0 >>

Snapshot saved at snap20211010.db

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$# This snapshot contains the data just written (name=liruilong); next, copy the snapshot file to all nodes

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible 192.168.26.101 -a "scp /root/snap20211010.db root@192.168.26.100:/root/"

192.168.26.101 | CHANGED | rc=0 >>

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible 192.168.26.101 -a "scp /root/snap20211010.db root@192.168.26.102:/root/"

192.168.26.101 | CHANGED | rc=0 >>

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$

Delete the data

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible etcd -a "etcdctl del name"

192.168.26.101 | CHANGED | rc=0 >>

1

192.168.26.102 | CHANGED | rc=0 >>

0

192.168.26.100 | CHANGED | rc=0 >>

0

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$

Stop etcd on all nodes and delete everything under /var/lib/etcd/:

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible etcd -a "systemctl stop etcd"

192.168.26.100 | CHANGED | rc=0 >>

192.168.26.102 | CHANGED | rc=0 >>

192.168.26.101 | CHANGED | rc=0 >>

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible etcd -m shell -a "rm -rf /var/lib/etcd/*"

[WARNING]: Consider using the file module with state=absent rather than running 'rm'. If you need to

use command because file is insufficient you can add 'warn: false' to this command task or set

'command_warnings=False' in ansible.cfg to get rid of this message.

192.168.26.102 | CHANGED | rc=0 >>

192.168.26.100 | CHANGED | rc=0 >>

192.168.26.101 | CHANGED | rc=0 >>

On all nodes, set the owner and group of the snapshot file to etcd:

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible etcd -a "chown etcd.etcd /root/snap20211010.db"

[WARNING]: Consider using the file module with owner rather than running 'chown'. If you need to use

command because file is insufficient you can add 'warn: false' to this command task or set

'command_warnings=False' in ansible.cfg to get rid of this message.

192.168.26.100 | CHANGED | rc=0 >>

192.168.26.102 | CHANGED | rc=0 >>

192.168.26.101 | CHANGED | rc=0 >>

Restore the data on each node:

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible 192.168.26.100 -m script -a "./snapshot_restore.sh"

192.168.26.100 | CHANGED => {

"changed": true,

"rc": 0,

"stderr": "Shared connection to 192.168.26.100 closed.\r\n",

"stderr_lines": [

"Shared connection to 192.168.26.100 closed."

],

"stdout": "2021-10-10 12:14:30.726021 I | etcdserver/membership: added member 6f2038a018db1103 [http://192.168.26.100:2380] to cluster af623437f584d792\r\n2021-10-10 12:14:30.726234 I | etcdserver/membership: added member bd330576bb637f25 [http://192.168.26.101:2380] to cluster af623437f584d792\r\n",

"stdout_lines": [

"2021-10-10 12:14:30.726021 I | etcdserver/membership: added member 6f2038a018db1103 [http://192.168.26.100:2380] to cluster af623437f584d792",

"2021-10-10 12:14:30.726234 I | etcdserver/membership: added member bd330576bb637f25 [http://192.168.26.101:2380] to cluster af623437f584d792"

]

}

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$cat -n ./snapshot_restore.sh

1 #!/bin/bash

2

3 # Restore the snapshot on each node

4

5 etcdctl snapshot restore /root/snap20211010.db \

6 --name etcd-100 \

7 --initial-advertise-peer-urls="http://192.168.26.100:2380" \

8 --initial-cluster="etcd-100=http://192.168.26.100:2380,etcd-101=http://192.168.26.101:2380" \

9 --data-dir="/var/lib/etcd/cluster.etcd"

10

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$sed '6,7s/100/101/g' ./snapshot_restore.sh

#!/bin/bash

# Restore the snapshot on each node

etcdctl snapshot restore /root/snap20211010.db \

--name etcd-101 \

--initial-advertise-peer-urls="http://192.168.26.101:2380" \

--initial-cluster="etcd-100=http://192.168.26.100:2380,etcd-101=http://192.168.26.101:2380" \

--data-dir="/var/lib/etcd/cluster.etcd"

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$sed -i '6,7s/100/101/g' ./snapshot_restore.sh

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$cat ./snapshot_restore.sh

#!/bin/bash

# Restore the snapshot on each node

etcdctl snapshot restore /root/snap20211010.db \

--name etcd-101 \

--initial-advertise-peer-urls="http://192.168.26.101:2380" \

--initial-cluster="etcd-100=http://192.168.26.100:2380,etcd-101=http://192.168.26.101:2380" \

--data-dir="/var/lib/etcd/cluster.etcd"

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible 192.168.26.101 -m script -a "./snapshot_restore.sh"

192.168.26.101 | CHANGED => {

"changed": true,

"rc": 0,

"stderr": "Shared connection to 192.168.26.101 closed.\r\n",

"stderr_lines": [

"Shared connection to 192.168.26.101 closed."

],

"stdout": "2021-10-10 12:20:26.032754 I | etcdserver/membership: added member 6f2038a018db1103 [http://192.168.26.100:2380] to cluster af623437f584d792\r\n2021-10-10 12:20:26.032930 I | etcdserver/membership: added member bd330576bb637f25 [http://192.168.26.101:2380] to cluster af623437f584d792\r\n",

"stdout_lines": [

"2021-10-10 12:20:26.032754 I | etcdserver/membership: added member 6f2038a018db1103 [http://192.168.26.100:2380] to cluster af623437f584d792",

"2021-10-10 12:20:26.032930 I | etcdserver/membership: added member bd330576bb637f25 [http://192.168.26.101:2380] to cluster af623437f584d792"

]

}

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$
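Rather than editing snapshot_restore.sh with sed between runs, the per-node command could be generated. A minimal sketch, assuming the snapshot path, data directory, and member names shown above; `restore_cmd` is a hypothetical helper that only prints the command so it can be reviewed before anything is run:

```shell
#!/bin/sh
# Print the snapshot restore command for one node (dry run, nothing is executed).
restore_cmd() {
    ip="$1"
    name="etcd-${ip##*.}"   # last octet of the IP, e.g. etcd-100
    printf '%s\n' "etcdctl snapshot restore /root/snap20211010.db --name $name --initial-advertise-peer-urls=http://$ip:2380 --initial-cluster=etcd-100=http://192.168.26.100:2380,etcd-101=http://192.168.26.101:2380 --data-dir=/var/lib/etcd/cluster.etcd"
}

for host in 192.168.26.100 192.168.26.101; do
    restore_cmd "$host"
done
```

Only `--name` and `--initial-advertise-peer-urls` differ between nodes, which matches the two lines the sed command above rewrites.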

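On all nodes, change the owner and group of /var/lib/etcd and its contents to etcd, then start etcd on each node. 192.168.26.102 fails to start here because the snapshot was restored only on 100 and 101; it is added back as a member in the next step.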

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible etcd -a "chown -R etcd.etcd /var/lib/etcd/"

[WARNING]: Consider using the file module with owner rather than running 'chown'. If you need to use

command because file is insufficient you can add 'warn: false' to this command task or set

'command_warnings=False' in ansible.cfg to get rid of this message.

192.168.26.100 | CHANGED | rc=0 >>

192.168.26.101 | CHANGED | rc=0 >>

192.168.26.102 | CHANGED | rc=0 >>

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible etcd -a "systemctl start etcd"

192.168.26.102 | FAILED | rc=1 >>

Job for etcd.service failed because the control process exited with error code. See "systemctl status etcd.service" and "journalctl -xe" for details.non-zero return code

192.168.26.101 | CHANGED | rc=0 >>

192.168.26.100 | CHANGED | rc=0 >>

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$

Add the remaining node to the cluster

# etcdctl member add etcd_name --peer-urls="https://peerURLs"

[root@vms100 cluster.etcd]# etcdctl member add etcd-102 --peer-urls="http://192.168.26.102:2380"

Member fbd8a96cbf1c004d added to cluster af623437f584d792

ETCD_NAME="etcd-102"

ETCD_INITIAL_CLUSTER="etcd-100=http://192.168.26.100:2380,etcd-101=http://192.168.26.101:2380,etcd-102=http://192.168.26.102:2380"

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.26.102:2380"

ETCD_INITIAL_CLUSTER_STATE="existing"

[root@vms100 cluster.etcd]#

Verify the restore result

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible 192.168.26.102 -m copy -a "src=./etcd.conf dest=/etc/etcd/etcd.conf force=yes"

192.168.26.102 | SUCCESS => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python"

},

"changed": false,

"checksum": "2d8fa163150e32da563f5e591134b38cc356d237",

"dest": "/etc/etcd/etcd.conf",

"gid": 0,

"group": "root",

"mode": "0644",

"owner": "root",

"path": "/etc/etcd/etcd.conf",

"size": 574,

"state": "file",

"uid": 0

}

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible 192.168.26.102 -m shell -a "systemctl enable etcd --now"

192.168.26.102 | CHANGED | rc=0 >>

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible etcd -m shell -a "etcdctl member list"

192.168.26.101 | CHANGED | rc=0 >>

6f2038a018db1103, started, etcd-100, http://192.168.26.100:2380, http://192.168.26.100:2379,http://localhost:2379

bd330576bb637f25, started, etcd-101, http://192.168.26.101:2380, http://192.168.26.101:2379,http://localhost:2379

fbd8a96cbf1c004d, started, etcd-102, http://192.168.26.102:2380, http://192.168.26.100:2379,http://localhost:2379

192.168.26.100 | CHANGED | rc=0 >>

6f2038a018db1103, started, etcd-100, http://192.168.26.100:2380, http://192.168.26.100:2379,http://localhost:2379

bd330576bb637f25, started, etcd-101, http://192.168.26.101:2380, http://192.168.26.101:2379,http://localhost:2379

fbd8a96cbf1c004d, started, etcd-102, http://192.168.26.102:2380, http://192.168.26.100:2379,http://localhost:2379

192.168.26.102 | CHANGED | rc=0 >>

6f2038a018db1103, started, etcd-100, http://192.168.26.100:2380, http://192.168.26.100:2379,http://localhost:2379

bd330576bb637f25, started, etcd-101, http://192.168.26.101:2380, http://192.168.26.101:2379,http://localhost:2379

fbd8a96cbf1c004d, started, etcd-102, http://192.168.26.102:2380, http://192.168.26.100:2379,http://localhost:2379

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible etcd -a "etcdctl get name"

192.168.26.102 | CHANGED | rc=0 >>

name

liruilong

192.168.26.101 | CHANGED | rc=0 >>

name

liruilong

192.168.26.100 | CHANGED | rc=0 >>

name

liruilong

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$

In k8s, etcd runs as a pod

┌──[root@vms81.liruilongs.github.io]-[~]

└─$kubectl get pods

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-78d6f96c7b-79xx4 1/1 Running 57 3d1h

calico-node-ntm7v 1/1 Running 16 3d11h

calico-node-skzjp 0/1 Running 42 3d11h

calico-node-v7pj5 1/1 Running 9 3d11h

coredns-545d6fc579-9h2z4 1/1 Running 9 3d1h

coredns-545d6fc579-xgn8x 1/1 Running 10 3d1h

etcd-vms81.liruilongs.github.io 1/1 Running 8 3d11h

kube-apiserver-vms81.liruilongs.github.io 1/1 Running 20 3d11h

kube-controller-manager-vms81.liruilongs.github.io 1/1 Running 26 3d11h

kube-proxy-rbhgf 1/1 Running 4 3d11h

kube-proxy-vm2sf 1/1 Running 3 3d11h

kube-proxy-zzbh9 1/1 Running 2 3d11h

kube-scheduler-vms81.liruilongs.github.io 1/1 Running 24 3d11h

metrics-server-bcfb98c76-6q5mb 1/1 Terminating 0 43h

metrics-server-bcfb98c76-9ptf4 1/1 Terminating 0 27h

metrics-server-bcfb98c76-bbr6n 0/1 Pending 0 12h

┌──[root@vms81.liruilongs.github.io]-[~]

└─$

Upgrades cannot skip minor versions

| The basic upgrade workflow |
|---|
| Upgrade the primary control plane node |
| Upgrade the worker nodes |
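The no-skipping rule can be checked before installing anything. The sketch below uses made-up helper names and assumes version strings of the form v1.21.1 or 1.21.1. It accepts a target only when the major version matches and the minor version is the same or exactly one higher; it does not compare patch levels.

```shell
#!/bin/sh
# Extract the major and minor numbers from a version such as v1.22.2.
major_of() {
    v="${1#v}"
    echo "${v%%.*}"
}

minor_of() {
    v="${1#v}"
    v="${v#*.}"
    echo "${v%%.*}"
}

# Succeed when upgrading from $1 to $2 stays within one minor version.
can_upgrade() {
    cur="$1"
    tgt="$2"
    [ "$(major_of "$cur")" -eq "$(major_of "$tgt")" ] || return 1
    diff=$(( $(minor_of "$tgt") - $(minor_of "$cur") ))
    [ "$diff" -ge 0 ] && [ "$diff" -le 1 ]
}

for target in v1.21.5 v1.22.2 v1.23.0; do
    if can_upgrade v1.21.1 "$target"; then
        echo "v1.21.1 -> $target: allowed"
    else
        echo "v1.21.1 -> $target: not allowed"
    fi
done
```

Going from v1.21.1 to v1.23.0 would therefore take two upgrades, first to a 1.22 release and then to 1.23.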

┌──[root@vms81.liruilongs.github.io]-[~]

└─$yum list --showduplicates kubeadm --disableexcludes=kubernetes

# Find the latest 1.22 version in the list

# It should look like 1.22.x-0, where x is the latest patch version

Current environment

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$kubectl get nodes

NAME STATUS ROLES AGE VERSION

vms81.liruilongs.github.io NotReady control-plane,master 11m v1.21.1

vms82.liruilongs.github.io NotReady <none> 12s v1.21.1

vms83.liruilongs.github.io NotReady <none> 11s v1.21.1

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$

Upgrade the control plane nodes one at a time. Choose the control plane node to upgrade first; it must have the /etc/kubernetes/admin.conf file.

# Replace x in 1.22.x-0 with the latest patch version

┌──[root@vms81.liruilongs.github.io]-[~]

└─$yum install -y kubeadm-1.22.2-0 --disableexcludes=kubernetes

┌──[root@vms81.liruilongs.github.io]-[~]

└─$kubeadm version

kubeadm version: &version.Info{Major:"1", Minor:"22", GitVersion:"v1.22.2", GitCommit:"8b5a19147530eaac9476b0ab82980b4088bbc1b2", GitTreeState:"clean", BuildDate:"2021-09-15T21:37:34Z", GoVersion:"go1.16.8", Compiler:"gc", Platform:"linux/amd64"}

┌──[root@vms81.liruilongs.github.io]-[~]

└─$kubeadm upgrade plan

[upgrade/config] Making sure the configuration is correct:

[upgrade/config] Reading configuration from the cluster...

[upgrade/config] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[preflight] Running pre-flight checks.

[upgrade] Running cluster health checks

[upgrade] Fetching available versions to upgrade to

[upgrade/versions] Cluster version: v1.21.1

[upgrade/versions] kubeadm version: v1.22.2

[upgrade/versions] Target version: v1.22.2

[upgrade/versions] Latest version in the v1.21 series: v1.21.5

................

┌──[root@vms81.liruilongs.github.io]-[~]

└─$sudo kubeadm upgrade apply v1.22.2

............

[upgrade/successful] SUCCESS! Your cluster was upgraded to "v1.22.2". Enjoy!

[upgrade/kubelet] Now that your control plane is upgraded, please proceed with upgrading your kubelets if you haven't already done so.

┌──[root@vms81.liruilongs.github.io]-[~]

└─$

Prepare the node for the upgrade by marking it unschedulable and draining it:

# Replace <node-to-drain> with the name of the control plane node you are draining

#kubectl drain <node-to-drain> --ignore-daemonsets

┌──[root@vms81.liruilongs.github.io]-[~]

└─$kubectl drain vms81.liruilongs.github.io --ignore-daemonsets

┌──[root@vms81.liruilongs.github.io]-[~]

└─$

# Replace x in 1.22.x-0 with the latest patch version

#yum install -y kubelet-1.22.x-0 kubectl-1.22.x-0 --disableexcludes=kubernetes

┌──[root@vms81.liruilongs.github.io]-[~]

└─$yum install -y kubelet-1.22.2-0 kubectl-1.22.2-0 --disableexcludes=kubernetes

┌──[root@vms81.liruilongs.github.io]-[~]

└─$sudo systemctl daemon-reload

┌──[root@vms81.liruilongs.github.io]-[~]

└─$sudo systemctl restart kubelet

┌──[root@vms81.liruilongs.github.io]-[~]

└─$kubectl uncordon vms81.liruilongs.github.io

node/vms81.liruilongs.github.io uncordoned

┌──[root@vms81.liruilongs.github.io]-[~]

└─$kubectl get nodes

NAME STATUS ROLES AGE VERSION

vms81.liruilongs.github.io Ready control-plane,master 11d v1.22.2

vms82.liruilongs.github.io NotReady <none> 11d v1.21.1

vms83.liruilongs.github.io Ready <none> 11d v1.21.1

┌──[root@vms81.liruilongs.github.io]-[~]

└─$

Worker nodes should be upgraded one at a time, or a few at a time, without dropping below the minimum capacity needed to run your workloads.

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible node -a "yum install -y kubeadm-1.22.2-0 --disableexcludes=kubernetes"

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible node -a "sudo kubeadm upgrade node" # On worker nodes, "kubeadm upgrade node" upgrades the local kubelet configuration

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$kubectl get nodes

NAME STATUS ROLES AGE VERSION

vms81.liruilongs.github.io Ready control-plane,master 12d v1.22.2

vms82.liruilongs.github.io Ready <none> 12d v1.21.1

vms83.liruilongs.github.io Ready,SchedulingDisabled <none> 12d v1.22.2

Drain the node and put it into maintenance mode

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$kubectl drain vms82.liruilongs.github.io --ignore-daemonsets

node/vms82.liruilongs.github.io cordoned

WARNING: ignoring DaemonSet-managed Pods: kube-system/calico-node-ntm7v, kube-system/kube-proxy-nzm24

node/vms82.liruilongs.github.io drained

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible 192.168.26.82 -a "yum install -y kubelet-1.22.2-0 kubectl-1.22.2-0 --disableexcludes=kubernetes"

Restart the kubelet

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible 192.168.26.82 -a "systemctl daemon-reload"

192.168.26.82 | CHANGED | rc=0 >>

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible 192.168.26.82 -a "systemctl restart kubelet"

192.168.26.82 | CHANGED | rc=0 >>

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$kubectl get nodes

NAME STATUS ROLES AGE VERSION

vms81.liruilongs.github.io Ready control-plane,master 13d v1.22.2

vms82.liruilongs.github.io Ready,SchedulingDisabled <none> 13d v1.22.2

vms83.liruilongs.github.io Ready,SchedulingDisabled <none> 13d v1.22.2

Make the nodes schedulable again

┌──[root@vms81.liruilongs.github.io]-[~]

└─$kubectl uncordon vms82.liruilongs.github.io

node/vms82.liruilongs.github.io uncordoned

┌──[root@vms81.liruilongs.github.io]-[~]

└─$kubectl uncordon vms83.liruilongs.github.io

node/vms83.liruilongs.github.io uncordoned

┌──[root@vms81.liruilongs.github.io]-[~]

└─$kubectl get nodes

NAME STATUS ROLES AGE VERSION

vms81.liruilongs.github.io Ready control-plane,master 13d v1.22.2

vms82.liruilongs.github.io Ready <none> 13d v1.22.2

vms83.liruilongs.github.io Ready <none> 13d v1.22.2

┌──[root@vms81.liruilongs.github.io]-[~]

└─$

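kubeadm upgrade apply does the following:

- Checks that your cluster is in an upgradeable state:

  - The API server is reachable

  - All nodes are in the Ready state

  - The control plane is healthy

- Enforces the version skew policies.

- Makes sure the control plane images are available or can be pulled to the machine.

- Generates replacement configuration and/or uses user-supplied overrides if component configs require a version upgrade.

- Upgrades the control plane components, or rolls back if any of them fails to come up.

- Applies the new CoreDNS and kube-proxy manifests and makes sure that all required RBAC rules are created.

- Creates new certificate and key files for the API server and backs up the old files if they are about to expire within 180 days.

kubeadm upgrade node does the following on the other control plane nodes:

- Fetches the kubeadm ClusterConfiguration from the cluster.

- Optionally backs up the kube-apiserver certificate.

- Upgrades the static Pod manifests for the control plane components.

- Upgrades the kubelet configuration for this node.

kubeadm upgrade node does the following on worker nodes:

- Fetches the kubeadm ClusterConfiguration from the cluster.

- Upgrades the kubelet configuration for this node.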
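The worker-node steps used above can be collected into one helper. This is a minimal sketch rather than the author's script: `upgrade_worker`, `run` and the `DRY_RUN` switch are hypothetical names, and by default the function only prints the commands in order so the sequence can be reviewed before anything is executed.

```shell
#!/bin/sh
# Hypothetical helper: print (or run) the worker-node upgrade steps in order.
# DRY_RUN=1 (the default) only echoes each command; DRY_RUN=0 executes it.
# Note: the echoed form drops the quotes around the ansible -a argument.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# upgrade_worker NODE_NAME NODE_IP VERSION   (VERSION looks like 1.22.2-0)
upgrade_worker() {
  node=$1
  ip=$2
  ver=$3
  run ansible "$ip" -a "yum install -y kubeadm-$ver --disableexcludes=kubernetes"
  run ansible "$ip" -a "kubeadm upgrade node"
  run kubectl drain "$node" --ignore-daemonsets
  run ansible "$ip" -a "yum install -y kubelet-$ver kubectl-$ver --disableexcludes=kubernetes"
  run ansible "$ip" -a "systemctl daemon-reload"
  run ansible "$ip" -a "systemctl restart kubelet"
  run kubectl uncordon "$node"
}

# Review the plan for one node (nothing is executed in dry-run mode).
upgrade_worker vms82.liruilongs.github.io 192.168.26.82 1.22.2-0
```

Running it with `DRY_RUN=0` would execute the same steps one node at a time, which keeps the remaining workers available for the drained workloads.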

Environment check

┌──[root@vms81.liruilongs.github.io]-[~]

└─$kubectl get nodes

NAME STATUS ROLES AGE VERSION

vms81.liruilongs.github.io Ready control-plane,master 7d23h v1.21.1

vms82.liruilongs.github.io Ready <none> 7d23h v1.21.1

vms83.liruilongs.github.io NotReady <none> 7d23h v1.21.1

┌──[root@vms81.liruilongs.github.io]-[~]

└─$cd ansible/

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible node -m ping

192.168.26.82 | SUCCESS => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python"

},

"changed": false,

"ping": "pong"

}

192.168.26.83 | SUCCESS => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python"

},

"changed": false,

"ping": "pong"

}

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible node -m shell -a "systemctl is-active docker"

192.168.26.83 | FAILED | rc=3 >>

unknownnon-zero return code

192.168.26.82 | CHANGED | rc=0 >>

active

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible 192.168.26.83 -m shell -a "systemctl enable docker --now"

192.168.26.83 | CHANGED | rc=0 >>

Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$kubectl get nodes

NAME STATUS ROLES AGE VERSION

vms81.liruilongs.github.io Ready control-plane,master 7d23h v1.21.1

vms82.liruilongs.github.io Ready <none> 7d23h v1.21.1

vms83.liruilongs.github.io Ready <none> 7d23h v1.21.1

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$kubectl explain --help

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$kubectl explain pods

KIND: Pod

VERSION: v1

DESCRIPTION:

Pod is a collection of containers that can run on a host. This resource is

created by clients and scheduled onto hosts.

FIELDS:

apiVersion <string>

....

kind <string>

.....

metadata <Object>

.....

spec <Object>

.....

status <Object>

....

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$kubectl explain pods.metadata

KIND: Pod

VERSION: v1

kubectl config set-context context1 --namespace=liruilong-pod-create

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$mkdir k8s-pod-create

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$cd k8s-pod-create/

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$kubectl create ns liruilong-pod-create

namespace/liruilong-pod-create created

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$kubectl config view

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: DATA+OMITTED

server: https://192.168.26.81:6443

name: cluster1

contexts:

- context:

cluster: cluster1

namespace: kube-system

user: kubernetes-admin1

name: context1

current-context: context1

kind: Config

preferences: {}

users:

- name: kubernetes-admin1

user:

client-certificate-data: REDACTED

client-key-data: REDACTED

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$kubectl get ns

NAME STATUS AGE

default Active 8d

kube-node-lease Active 8d

kube-public Active 8d

kube-system Active 8d

liruilong Active 7d10h

liruilong-pod-create Active 4m18s

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$kubectl config set-context context1 --namespace=liruilong-pod-create

Context "context1" modified.

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$docker pull nginx

Using default tag: latest

latest: Pulling from library/nginx

b380bbd43752: Pull complete

fca7e12d1754: Pull complete

745ab57616cb: Pull complete

a4723e260b6f: Pull complete

1c84ebdff681: Pull complete

858292fd2e56: Pull complete

Digest: sha256:644a70516a26004c97d0d85c7fe1d0c3a67ea8ab7ddf4aff193d9f301670cf36

Status: Downloaded newer image for nginx:latest

docker.io/library/nginx:latest

kubectl run podcommon --image=nginx --image-pull-policy=IfNotPresent --labels="name=liruilong" --env="name=liruilong"

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$kubectl run podcommon --image=nginx --image-pull-policy=IfNotPresent --labels="name=liruilong" --env="name=liruilong"

pod/podcommon created

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$kubectl get pods

NAME READY STATUS RESTARTS AGE

podcommon 0/1 ContainerCreating 0 12s

kubectl get pods -o wide

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$kubectl run pod-demo --image=nginx --labels=name=nginx --env="user=liruilong" --port=8888 --image-pull-policy=IfNotPresent

pod/pod-demo created

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$kubectl get pods | grep pod-

pod-demo 1/1 Running 0 73s

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

mysql-577h7 1/1 Running 0 19m 10.244.70.39 vms83.liruilongs.github.io <none> <none>

myweb-4xlc5 1/1 Running 0 18m 10.244.70.40 vms83.liruilongs.github.io <none> <none>

myweb-ltqdt 1/1 Running 0 18m 10.244.171.148 vms82.liruilongs.github.io <none> <none>

pod-demo 1/1 Running 0 94s 10.244.171.149 vms82.liruilongs.github.io <none> <none>

poddemo 1/1 Running 0 8m22s 10.244.70.41 vms83.liruilongs.github.io <none> <none>

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$

kubectl delete pod pod-demo --force

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$kubectl delete pod pod-demo --force

warning: Immediate deletion does not wait for confirmation that the running resource has been terminated. The resource may continue to run on the cluster indefinitely.

pod "pod-demo" force deleted

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$kubectl get pods | grep pod-

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$

kubectl run pod-demo --image=nginx --image-pull-policy=IfNotPresent --dry-run=client -o yaml >pod-demo.yaml

How to obtain the YAML file:

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$kubectl run pod-demo --image=nginx --image-pull-policy=IfNotPresent --dry-run=client -o yaml

apiVersion: v1

kind: Pod

metadata:

creationTimestamp: null

labels:

run: pod-demo

name: pod-demo

spec:

containers:

- image: nginx

imagePullPolicy: IfNotPresent

name: pod-demo

resources: {}

dnsPolicy: ClusterFirst

restartPolicy: Always

status: {}

Create a pod from a YAML file

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$kubectl run pod-demo --image=nginx --image-pull-policy=IfNotPresent --dry-run=client -o yaml >pod-demo.yaml

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$cat pod-demo.yaml

apiVersion: v1

kind: Pod

metadata:

creationTimestamp: null

labels:

run: pod-demo

name: pod-demo

spec:

containers:

- image: nginx

imagePullPolicy: IfNotPresent

name: pod-demo

resources: {}

dnsPolicy: ClusterFirst

restartPolicy: Always

status: {}

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$kubectl apply -f pod-demo.yaml

pod/pod-demo created

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$kubectl get pods

NAME READY STATUS RESTARTS AGE

pod-demo 1/1 Running 0 12s

podcommon 1/1 Running 0 13m

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod-demo 1/1 Running 0 27s 10.244.70.4 vms83.liruilongs.github.io <none> <none>

podcommon 1/1 Running 0 13m 10.244.70.3 vms83.liruilongs.github.io <none> <none>

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$kubectl delete pod pod-demo

pod "pod-demo" deleted

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

podcommon 1/1 Running 0 14m 10.244.70.3 vms83.liruilongs.github.io <none> <none>

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$

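Specify the command to run when creating a pod; it replaces the CMD of the image

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$# Specify the command to run when creating a pod; it replaces the CMD of the image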

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$kubectl run comm-pod --image=nginx --image-pull-policy=IfNotPresent --dry-run=client -o yaml -- "echo liruilong"

apiVersion: v1

kind: Pod

metadata:

creationTimestamp: null

labels:

run: comm-pod

name: comm-pod

spec:

containers:

- args:

- echo liruilong

image: nginx

imagePullPolicy: IfNotPresent

name: comm-pod

resources: {}

dnsPolicy: ClusterFirst

restartPolicy: Always

status: {}

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$kubectl run comm-pod --image=nginx --image-pull-policy=IfNotPresent --dry-run=client -o yaml -- sh -c "echo liruilong"

apiVersion: v1

kind: Pod

metadata:

creationTimestamp: null

labels:

run: comm-pod

name: comm-pod

spec:

containers:

- args:

- sh

- -c

- echo liruilong

image: nginx

imagePullPolicy: IfNotPresent

name: comm-pod

resources: {}

dnsPolicy: ClusterFirst

restartPolicy: Always

status: {}

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$

kubectl delete -f comm-pod.yaml deletes the pod

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$kubectl run comm-pod --image=nginx --image-pull-policy=IfNotPresent --dry-run=client -o yaml -- sh -c "echo liruilong" > comm-pod.yaml

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$kubectl apply -f comm-pod.yaml

pod/comm-pod created

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$kubectl get pods

NAME READY STATUS RESTARTS AGE

comm-pod 0/1 CrashLoopBackOff 3 (27s ago) 72s

mysql-577h7 1/1 Running 0 54m

myweb-4xlc5 1/1 Running 0 53m

myweb-ltqdt 1/1 Running 0 52m

poddemo 1/1 Running 0 42m

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$kubectl delete -f comm-pod.yaml

pod "comm-pod" deleted

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$

Create pods in batches

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$sed 's/demo/demo1/' demo.yaml | kubectl apply -f -

pod/demo1 created

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$sed 's/demo/demo2/' demo.yaml | kubectl create -f -

pod/demo2 created

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$kubectl get pods

NAME READY STATUS RESTARTS AGE

demo 0/1 CrashLoopBackOff 7 (4m28s ago) 18m

demo1 1/1 Running 0 49s

demo2 1/1 Running 0 26s

mysql-d4n6j 1/1 Running 0 23m

myweb-85kf8 1/1 Running 0 22m

myweb-z4qnz 1/1 Running 0 22m

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

demo1 1/1 Running 0 3m29s 10.244.70.32 vms83.liruilongs.github.io <none> <none>

demo2 1/1 Running 0 3m6s 10.244.70.33 vms83.liruilongs.github.io <none> <none>

mysql-d4n6j 1/1 Running 0 25m 10.244.171.137 vms82.liruilongs.github.io <none> <none>

myweb-85kf8 1/1 Running 0 25m 10.244.171.138 vms82.liruilongs.github.io <none> <none>

myweb-z4qnz 1/1 Running 0 25m 10.244.171.139 vms82.liruilongs.github.io <none> <none>

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$
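The two sed commands above generalize to a loop. The following is a minimal sketch, assuming a template manifest in which the pod name is the literal string `demo`; `gen_pods` is a hypothetical helper that only writes manifests to stdout, so the result can be inspected before it is piped to kubectl.

```shell
#!/bin/sh
# gen_pods TEMPLATE NAME...
# Emit one copy of TEMPLATE per NAME, replacing the first "demo" on each line
# with NAME (the same substitution as the sed commands in the notes), and
# separating the documents with "---" so they form one multi-document stream.
gen_pods() {
  template=$1
  shift
  for name in "$@"; do
    echo "---"
    sed "s/demo/$name/" "$template"
  done
}

# Usage (requires a cluster and a demo.yaml template, so it is left commented):
# gen_pods demo.yaml demo1 demo2 demo3 | kubectl apply -f -
```

Generating the stream first and applying it in one call keeps the substitution easy to check, since the same output can be redirected to a file and read before use.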

Containers share the pod's network namespace, that is, they use the same IP address

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible 192.168.26.83 -m shell -a "docker ps | grep demo1"

192.168.26.83 | CHANGED | rc=0 >>

0d644ad550f5 87a94228f133 "/docker-entrypoint.…" 8 minutes ago Up 8 minutes k8s_demo1_demo1_liruilong-pod-create_b721b109-a656-4379-9d3c-26710dadbf70_0

0bcffe0f8e2d registry.aliyuncs.com/google_containers/pause:3.4.1 "/pause" 8 minutes ago Up 8 minutes k8s_POD_demo1_liruilong-pod-create_b721b109-a656-4379-9d3c-26710dadbf70_0

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible 192.168.26.83 -m shell -a "docker inspect 0d644ad550f5 | grep -i ipaddress "

192.168.26.83 | CHANGED | rc=0 >>

"SecondaryIPAddresses": null,

"IPAddress": "",

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$# Containers share the pod's network namespace.

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$

Create multiple containers in one pod

Writing the YAML file

apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: comm-pod
  name: comm-pod
spec:
  containers:
  - args:
    - sh
    - -c
    - echo liruilong;sleep 10000
    image: nginx
    imagePullPolicy: IfNotPresent
    name: comm-pod0
    resources: {}
  - name: comm-pod1
    image: nginx
    imagePullPolicy: IfNotPresent
  dnsPolicy: ClusterFirst
  restartPolicy: Always
status: {}

Create the pod

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$kubectl delete -f comm-pod.yaml

pod "comm-pod" deleted

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$vim comm-pod.yaml

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$kubectl apply -f comm-pod.yaml

pod/comm-pod created

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$kubectl get pods

NAME READY STATUS RESTARTS AGE

comm-pod 2/2 Running 0 20s

mysql-577h7 1/1 Running 0 89m

myweb-4xlc5 1/1 Running 0 87m

myweb-ltqdt 1/1 Running 0 87m

View labels and filter by label

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$kubectl get pods --show-labels

NAME READY STATUS RESTARTS AGE LABELS

comm-pod 2/2 Running 0 4m43s run=comm-pod

mysql-577h7 1/1 Running 0 93m app=mysql

myweb-4xlc5 1/1 Running 0 92m app=myweb

myweb-ltqdt 1/1 Running 0 91m app=myweb

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$kubectl get pods -l run=comm-pod

NAME READY STATUS RESTARTS AGE

comm-pod 2/2 Running 0 5m12s

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$

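--image-pull-policy

- Always: contact the registry every time a container starts, and pull the image unless an identical copy is already cached locally

- Never: only use a local image, never pull

- IfNotPresent: pull only when the image is not present locally

restartPolicy

- Always: always restart the container

- OnFailure: restart only when the container exits abnormally

- Never: never restart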
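To see where the two policies sit in a manifest, here is a small sketch. `pod_manifest` is a hypothetical helper, not part of kubectl: it checks the two values against the lists above and prints a minimal Pod manifest. Note that imagePullPolicy is set per container while restartPolicy applies to the whole pod.

```shell
#!/bin/sh
# pod_manifest NAME IMAGE PULL_POLICY RESTART_POLICY
# Print a minimal Pod manifest; reject policy values outside the allowed sets.
pod_manifest() {
  case $3 in
    Always|Never|IfNotPresent) ;;
    *) echo "invalid imagePullPolicy: $3" >&2; return 1 ;;
  esac
  case $4 in
    Always|OnFailure|Never) ;;
    *) echo "invalid restartPolicy: $4" >&2; return 1 ;;
  esac
  cat <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: $1
spec:
  restartPolicy: $4
  containers:
  - name: $1
    image: $2
    imagePullPolicy: $3
EOF
}

# Print a manifest for an nginx pod that is restarted only on failure.
pod_manifest demo nginx IfNotPresent OnFailure
```

The output can be saved to a file or piped to `kubectl apply -f -` in the same way as the manifests produced with `--dry-run=client -o yaml`.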

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$# Every object has labels

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$kubectl get nodes --show-labels

NAME STATUS ROLES AGE VERSION LABELS

vms81.liruilongs.github.io Ready control-plane,master 8d v1.21.1 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=vms81.liruilongs.github.io,kubernetes.io/os=linux,node-role.kubernetes.io/control-plane=,node-role.kubernetes.io/master=,node.kubernetes.io/exclude-from-external-load-balancers=

vms82.liruilongs.github.io Ready <none> 8d v1.21.1 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=vms82.liruilongs.github.io,kubernetes.io/os=linux

vms83.liruilongs.github.io Ready <none> 8d v1.21.1 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=vms83.liruilongs.github.io,kubernetes.io/os=linux

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$kubectl get pods --show-labels

NAME READY STATUS RESTARTS AGE LABELS

podcommon 1/1 Running 0 87s name=liruilong

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible 192.168.26.83 -m shell -a "docker ps | grep podcomm"

192.168.26.83 | CHANGED | rc=0 >>

c04e155aa25d nginx "/docker-entrypoint.…" 21 minutes ago Up 21 minutes k8s_podcommon_podcommon_liruilong-pod-create_dbfc4fcd-d62b-4339-9f15-0a48802f60ad_0

309925812d42 registry.aliyuncs.com/google_containers/pause:3.4.1 "/pause" 21 minutes ago Up 21 minutes k8s_POD_podcommon_liruilong-pod-create_dbfc4fcd-d62b-4339-9f15-0a48802f60ad_0

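| Pod status | Description |
|---|---|
| Pending | For some reason the pod is about to be created but has not been created yet (it is stuck) |
| Running | The pod has been scheduled to a node and its containers are working normally |
| Completed | All containers in the pod have exited normally |
| Error/CrashLoopBackOff | The pod fails right at creation because of an internal error |
| ImagePullBackOff | The image could not be pulled when the pod was created |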

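kubectl exec commands

kubectl exec -it pod -- sh # if the pod has multiple containers, the command runs in the first container

kubectl exec -it demo -c demo1 -- sh # specify the container

kubectl describe pod <pod-name>

kubectl logs <pod-name> -c <container-name> # view logs; use -c when the pod has multiple containers

kubectl edit pod <pod-name> # some fields can be modified, others cannot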

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$kubectl describe pod demo1

Name: demo1

Namespace: liruilong-pod-create

Priority: 0

Node: vms83.liruilongs.github.io/192.168.26.83

Start Time: Wed, 20 Oct 2021 22:27:15 +0800

Labels: run=demo1

Annotations: cni.projectcalico.org/podIP: 10.244.70.32/32

cni.projectcalico.org/podIPs: 10.244.70.32/32

Status: Running

IP: 10.244.70.32

IPs:

IP: 10.244.70.32

Containers:

demo1:

Container ID: docker://0d644ad550f59029036fd73d420d4d2c651801dd12814bb26ad8e979dc0b59c1

Image: nginx

Image ID: docker-pullable://nginx@sha256:644a70516a26004c97d0d85c7fe1d0c3a67ea8ab7ddf4aff193d9f301670cf36

Port: <none>

Host Port: <none>

State: Running

Started: Wed, 20 Oct 2021 22:27:20 +0800

Ready: True

Restart Count: 0

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-scc89 (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

kube-api-access-scc89:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 13m default-scheduler Successfully assigned liruilong-pod-create/demo1 to vms83.liruilongs.github.io

Normal Pulled 13m kubelet Container image "nginx" already present on machine

Normal Created 13m kubelet Created container demo1

Normal Started 13m kubelet Started container demo1

With multiple containers, specify one with -c

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$kubectl exec -it demo1 -- ls /tmp

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$kubectl exec -it demo1 -- sh

# ls

bin dev docker-entrypoint.sh home lib64 mnt proc run srv tmp var

boot docker-entrypoint.d etc lib media opt root sbin sys usr

# exit

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$kubectl exec -it demo1 -- bash

root@demo1:/# ls

bin dev docker-entrypoint.sh home lib64 mnt proc run srv tmp var

boot docker-entrypoint.d etc lib media opt root sbin sys usr

root@demo1:/# exit

exit

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$kubectl exec comm-pod -c comm-pod1 -- echo liruilong

liruilong

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$kubectl exec -it comm-pod -c comm-pod1 -- sh

# ls

bin boot dev docker-entrypoint.d docker-entrypoint.sh etc home lib lib64 media mnt opt proc root run sbin srv sys tmp usr var

# exit

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$#

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$kubectl logs demo1

/docker-entrypoint.sh: /docker-entrypoint.d/ is not empty, will attempt to perform configuration

/docker-entrypoint.sh: Looking for shell scripts in /docker-entrypoint.d/

/docker-entrypoint.sh: Launching /docker-entrypoint.d/10-listen-on-ipv6-by-default.sh

10-listen-on-ipv6-by-default.sh: info: Getting the checksum of /etc/nginx/conf.d/default.conf

10-listen-on-ipv6-by-default.sh: info: Enabled listen on IPv6 in /etc/nginx/conf.d/default.conf

/docker-entrypoint.sh: Launching /docker-entrypoint.d/20-envsubst-on-templates.sh

/docker-entrypoint.sh: Launching /docker-entrypoint.d/30-tune-worker-processes.sh

/docker-entrypoint.sh: Configuration complete; ready for start up

2021/10/20 14:27:21 [notice] 1#1: using the "epoll" event method

2021/10/20 14:27:21 [notice] 1#1: nginx/1.21.3

2021/10/20 14:27:21 [notice] 1#1: built by gcc 8.3.0 (Debian 8.3.0-6)

2021/10/20 14:27:21 [notice] 1#1: OS: Linux 3.10.0-693.el7.x86_64

2021/10/20 14:27:21 [notice] 1#1: getrlimit(RLIMIT_NOFILE): 1048576:1048576

2021/10/20 14:27:21 [notice] 1#1: start worker processes

2021/10/20 14:27:21 [notice] 1#1: start worker process 32

2021/10/20 14:27:21 [notice] 1#1: start worker process 33

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$

As with docker, files can be copied in both directions

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$kubectl cp /etc/hosts comm-pod:/usr/share/nginx/html -c comm-pod1

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$kubectl exec comm-pod -c comm-pod1 -- ls /usr/share/nginx/html

50x.html

hosts

index.html

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$

apiVersion: v1
kind: Pod
metadata:
  name: myapp-pod
  labels:
    app: myapp
spec:
  containers:
  - name: myapp-container
    image: busybox
    command: ['sh', '-c', 'echo OK! && sleep 60']

apiVersion: v1
kind: Pod
metadata:
  name: myapp-pod
  labels:
    app: myapp
spec:
  containers:
  - name: myapp-container
    image: busybox
    command:
    - sh
    - -c
    - echo OK! && sleep 60

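Kubernetes does not delete a pod immediately: deletion goes through a grace period, which defaults to 30s. If the --force option is added to the delete command, the pod is deleted immediately.

During the grace period the pod's status is shown as Terminating, and the pod is removed once the period ends. The grace period is set with the terminationGracePeriodSeconds field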

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$kubectl explain pod.spec

....

terminationGracePeriodSeconds <integer>

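Optional duration in seconds the pod needs to terminate gracefully. May be decreased in delete request. Value must be non-negative integer.

The value zero indicates stop immediately via the kill signal (no opportunity to shut down). If this value is nil, the default grace period will be used instead.

The grace period is the duration in seconds after the processes running in the pod are sent a termination signal and the time when the processes are forcibly halted with a kill signal.

Set this value longer than the expected cleanup time for your process. Defaults to 30 seconds.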

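This does not help when the pod runs nginx. nginx treats the termination signal as a request for a fast shutdown, so in a pod created from the nginx image the nginx process exits almost at once and the pod is deleted right after, without using the Kubernetes grace period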
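The grace period mechanism can be imitated with plain processes. This is only an analogy in shell, not kubelet code, and `terminate_with_grace` is a hypothetical name: it sends SIGTERM, waits up to the grace period for the process to exit, and falls back to SIGKILL. A process that exits on SIGTERM straight away, as nginx does, never gets near the deadline.

```shell
#!/bin/sh
# terminate_with_grace PID GRACE_SECONDS
# Send SIGTERM, wait up to GRACE_SECONDS for the process to exit, then SIGKILL.
# Returns 0 if the process exited within the grace period, 1 if it was killed.
terminate_with_grace() {
  pid=$1
  grace=$2
  kill -TERM "$pid" 2>/dev/null || return 0   # process is already gone
  waited=0
  while kill -0 "$pid" 2>/dev/null; do        # still alive?
    if [ "$waited" -ge "$grace" ]; then
      kill -KILL "$pid" 2>/dev/null           # grace period used up
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done
  return 0
}

# A process that exits on SIGTERM finishes well inside the grace period.
sleep 30 &
terminate_with_grace $! 5 && echo "exited within the grace period"
```

A process that ignores SIGTERM would instead run until the deadline and then be killed, which corresponds to a pod staying in Terminating for the whole terminationGracePeriodSeconds.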

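When a pod that is in use is shut down abruptly, we may still want to do some work first. Pod hooks serve this purpose

A hook is a common feature, sometimes called a callback: an operation triggered when an expected event occurs, such as the lifecycle callbacks of the front-end framework Vue, or the shutdown hook threads the JVM runs when the process ends.

Two hooks are available over the lifetime of a pod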

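| The two available hooks |
|---|
| postStart: called when the pod is created; it runs in parallel with the main process in the pod, with no guaranteed order between the two |
| preStop: called when the pod is deleted; the preStop handler runs first and the pod is shut down afterwards. preStop must finish within the pod's grace period; if it does not, the pod is force-deleted anyway |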

Next we create a pod with hooks

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$cat demo.yaml

apiVersion: v1

kind: Pod

metadata:

creationTimestamp: null

labels:

run: demo

name: demo

spec:

containers:

- image: nginx

imagePullPolicy: IfNotPresent

name: demo

resources: {}

dnsPolicy: ClusterFirst

restartPolicy: Always

status: {}

Look up the grace period field in the built-in documentation

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$kubectl explain pod.spec | grep termin*

terminationGracePeriodSeconds <integer>

Optional duration in seconds the pod needs to terminate gracefully. May be

the pod are sent a termination signal and the time when the processes are

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$

Modify the YAML file

demo.yaml

apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: demo
  name: demo
spec:
  terminationGracePeriodSeconds: 600
  containers:
  - image: nginx
    imagePullPolicy: IfNotPresent
    name: demo
    resources: {}
    lifecycle:
      postStart:
        exec:
          command: ["/bin/sh", "-c", "echo liruilong`date` >> /liruilong"]
      preStop:
        exec:
          command: ["/bin/sh", "-c", "/usr/sbin/nginx -s quit"]
  dnsPolicy: ClusterFirst
  restartPolicy: Always
status: {}

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$vim demo.yaml

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$kubectl apply -f demo.yaml

pod/demo created

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$kubectl get pods

NAME READY STATUS RESTARTS AGE

demo 1/1 Running 0 21s

mysql-cp7qd 1/1 Running 0 2d13h

myweb-bh9g7 1/1 Running 0 2d4h

myweb-zdc4q 1/1 Running 0 2d13h

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$kubectl exec -it demo -- bin/bash

root@demo:/# ls

bin dev docker-entrypoint.sh home lib64 media opt root sbin sys usr

boot docker-entrypoint.d etc lib liruilong mnt proc run srv tmp var

root@demo:/# cat liruilong

liruilongSun Nov 14 05:10:51 UTC 2021

root@demo:/#

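When the pod is deleted here, the main process does not wait for the grace period to end: nginx shuts down as soon as it receives the stop signal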

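An init container can be compared to constructs in Java: if the pod's creation command is like a Java constructor, then an init container is like an initializer block, a block of statements that runs before the constructor. Init containers are the steps executed before the main container is started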

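| Init container rules |
|---|
| They always run to completion. |
| Each one must complete successfully before the next one starts. |
| If a Pod's init container fails, Kubernetes restarts the Pod repeatedly until the init container succeeds. However, if the Pod's restartPolicy is Never, it is not restarted. |
| Init containers support all the fields and features of app containers, except readiness probes, because they must run to completion before the Pod can be ready. |
| If a Pod specifies multiple init containers, they run one at a time, in order. Each must succeed before the next can run. |
| Because init containers can be restarted, retried, or re-executed, their code should be idempotent. In particular, code that writes files to an emptyDir volume should be prepared for the output file to already exist. |
| Use activeDeadlineSeconds on the Pod and livenessProbe on the container to keep init containers from failing forever; this sets a deadline for how long the init containers may stay active. |
| The name of every app container and init container in a Pod must be unique; sharing a name with another container raises a validation error. |
| Changes to an init container's spec are limited to the image field. Changing the image field of an init container is equivalent to restarting the Pod. |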

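Init containers are defined under initContainers in the pod resource file, at the same level as containers

Create an init container. Here the init container sets the kernel parameter vm.swappiness to 0, so that swap is used as little as possible

Alpine is a security-oriented, lightweight Linux distribution. Unlike typical Linux distributions, Alpine uses musl libc and busybox to reduce system size and runtime resource consumption, while offering far more functionality than busybox alone, which has made it increasingly popular in the open source community. While staying small, Alpine also provides its own package manager, apk; package information can be looked up at https://pkgs.alpinelinux.org/packages, and packages can be queried and installed directly with the apk command

Writing the YAML file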

apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: pod-init
  name: pod-init
spec:
  containers:
  - image: nginx
    imagePullPolicy: IfNotPresent
    name: pod1-init
    resources: {}
  dnsPolicy: ClusterFirst
  restartPolicy: Always
  initContainers:
  - image: alpine
    name: init
    imagePullPolicy: IfNotPresent
    command: ["/bin/sh","-c","/sbin/sysctl -w vm.swappiness=0"]
    securityContext:
      privileged: true
status: {}

Check the system default, then run the pod

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$cat /proc/sys/vm/swappiness

30

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$vim pod_init.yaml

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$kubectl apply -f pod_init.yaml

pod/pod-init created

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$kubectl get pods

NAME READY STATUS RESTARTS AGE

mysql-hhjnk 1/1 Running 0 3d9h

myweb-bn5h4 1/1 Running 0 3d9h

myweb-h8jkc 1/1 Running 0 3d9h

pod-init 0/1 PodInitializing 0 7s

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$kubectl get pods

NAME READY STATUS RESTARTS AGE

mysql-hhjnk 1/1 Running 0 3d9h

myweb-bn5h4 1/1 Running 0 3d9h

myweb-h8jkc 1/1 Running 0 3d9h

pod-init 1/1 Running 0 14s

The pod was created successfully; now verify the result

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

mysql-hhjnk 1/1 Running 0 3d9h 10.244.171.162 vms82.liruilongs.github.io <none> <none>

myweb-bn5h4 1/1 Running 0 3d9h 10.244.171.163 vms82.liruilongs.github.io <none> <none>

myweb-h8jkc 1/1 Running 0 3d9h 10.244.171.160 vms82.liruilongs.github.io <none> <none>

pod-init 1/1 Running 0 11m 10.244.70.54 vms83.liruilongs.github.io <none> <none>

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$cd ..

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible 192.168.26.83 -m ping

192.168.26.83 | SUCCESS => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python"

},

"changed": false,

"ping": "pong"

}

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible 192.168.26.83 -m shell -a "cat /proc/sys/vm/swappiness"

192.168.26.83 | CHANGED | rc=0 >>

0

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$

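Writing the configuration file

Here we configure a shared volume, and the init container then syncs data through it to the regular container.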
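The contents of pod_init1.yaml are not shown in these notes. The following is a hypothetical reconstruction, not the author's file: it assumes an emptyDir volume named `workdir` mounted at /2021 in both containers and an init container that creates liruilong.txt there. Only the pod name, the container names and the /2021/liruilong.txt path are taken from the transcript.

```shell
#!/bin/sh
# Hypothetical sketch of a manifest like pod_init1.yaml (the real file is not shown).
# Assumptions: the volume name "workdir", the emptyDir type, and the touch command.
init_volume_manifest() {
  cat <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: pod-init1
  labels:
    run: pod-init1
spec:
  volumes:
  - name: workdir
    emptyDir: {}
  initContainers:
  - name: init
    image: alpine
    imagePullPolicy: IfNotPresent
    # Runs to completion before the main container starts.
    command: ["/bin/sh", "-c", "touch /2021/liruilong.txt"]
    volumeMounts:
    - name: workdir
      mountPath: /2021
  containers:
  - name: pod1-init
    image: nginx
    imagePullPolicy: IfNotPresent
    volumeMounts:
    - name: workdir
      mountPath: /2021
EOF
}

# Print the manifest; pipe it to "kubectl apply -f -" to try it on a cluster.
init_volume_manifest
```

Because both containers mount the same volume, whatever the init container writes under /2021 is visible to the main container once it starts.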

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$cp pod_init.yaml pod_init1.yaml

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$vim pod_init1.yaml

31L, 604C written

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$kubectl apply -f pod_init1.yaml

pod/pod-init1 created

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$kubectl get pods

NAME READY STATUS RESTARTS AGE

mysql-hhjnk 1/1 Running 0 3d9h

myweb-bn5h4 1/1 Running 0 3d9h

myweb-h8jkc 1/1 Running 0 3d9h

pod-init 1/1 Running 0 31m

pod-init1 1/1 Running 0 10s

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$kubectl get pods pod-init1

NAME READY STATUS RESTARTS AGE

pod-init1 1/1 Running 0 30s

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-pod-create]

└─$kubectl exec -it pod-init1 /bin/sh

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

Defaulted container "pod1-init" out of: pod1-init, init (init)

# ls

2021 boot docker-entrypoint.d etc lib media opt root sbin sys usr

bin dev docker-entrypoint.sh home lib64 mnt proc run srv tmp var

# cd 2021;ls

liruilong.txt

#

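Normally, pods are managed centrally from the master. A static pod, by contrast, is not created or scheduled by the master: it belongs to the node itself, and the kubelet creates it automatically as soon as it starts on that node. One way to think about it is by analogy with static fields and static methods in Java: static pods are the pods a node needs to create when it initializes.

For example, when k8s is installed with kubeadm, all of the services run as containers, which is much more convenient than a binary installation. That raises the question of how the master's components can run, and how the master node gets built, before any k8s environment exists. This is where static pods come in.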

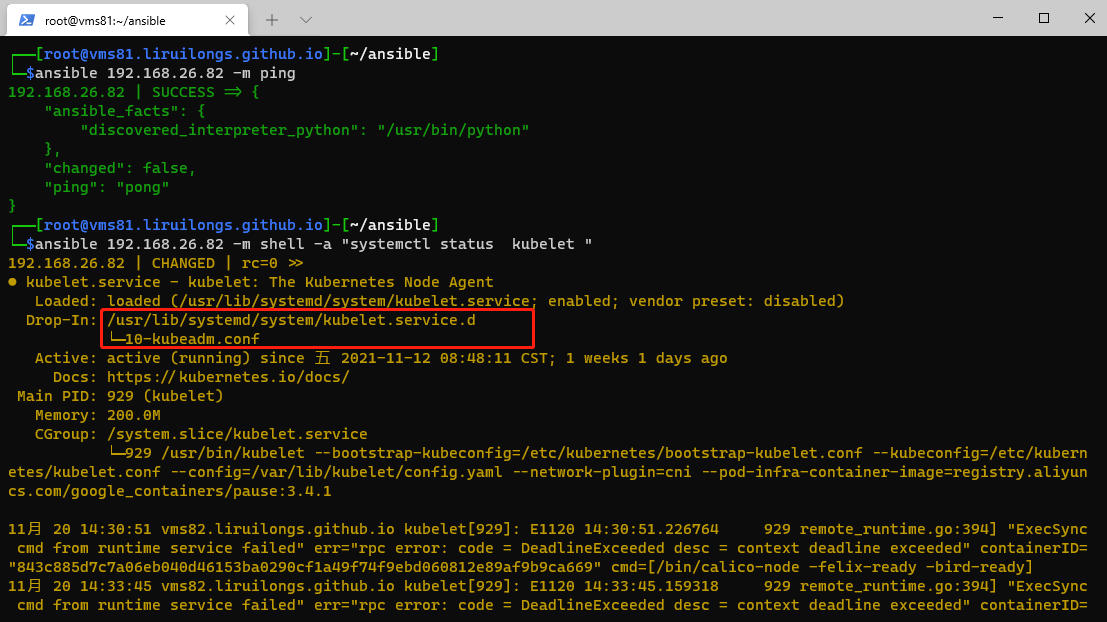
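To see this on a kubeadm-built master, one can look up where the kubelet reads static pod manifests from. The sketch below assumes the kubelet config file is at /var/lib/kubelet/config.yaml (the path used by the `--config` flag shown later in this post) and that it contains a `staticPodPath` entry; the helper name `static_pod_path` is made up for this example.

```shell
# Print the staticPodPath value from a kubelet config file.
# Defaults to /var/lib/kubelet/config.yaml when no argument is given.
static_pod_path() {
  awk '$1 == "staticPodPath:" { print $2 }' "${1:-/var/lib/kubelet/config.yaml}"
}

# On a kubeadm master the directory typically holds etcd.yaml,
# kube-apiserver.yaml, kube-controller-manager.yaml and kube-scheduler.yaml.
if [ -r /var/lib/kubelet/config.yaml ]; then
  dir=$(static_pod_path)
  if [ -n "$dir" ] && [ -d "$dir" ]; then
    ls "$dir"
  fi
fi
```

The kubelet starts whatever manifests it finds in that directory without talking to an API server first, which is how the control-plane components can come up before the cluster exists.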

View the kubelet startup parameter configuration file on the worker node

| /usr/lib/systemd/system/kubelet.service.d/10-kubeadm.conf |
|---|
| --pod-manifest-path=/etc/kubernetes/kubelet.d |
| Environment="KUBELET_KUBECONFIG_ARGS=--bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf --pod-manifest-path=/etc/kubernetes/kubelet.d" |
| mkdir -p /etc/kubernetes/kubelet.d |

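First, add the location of the static pod YAML files to the configuration file.

Edit the configuration file locally first, then use ansible to send it to the worker nodes: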

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$vim /usr/lib/systemd/system/kubelet.service.d/10-kubeadm.conf

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$cat /usr/lib/systemd/system/kubelet.service.d/10-kubeadm.conf

# Note: This dropin only works with kubeadm and kubelet v1.11+

[Service]

Environment="KUBELET_KUBECONFIG_ARGS=--bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf --pod-manifest-path=/etc/kubernetes/kubelet.d"

Environment="KUBELET_CONFIG_ARGS=--config=/var/lib/kubelet/config.yaml"

# This is a file that "kubeadm init" and "kubeadm join" generates at runtime, populating the KUBELET_KUBEADM_ARGS variable dynamically

EnvironmentFile=-/var/lib/kubelet/kubeadm-flags.env

# This is a file that the user can use for overrides of the kubelet args as a last resort. Preferably, the user should use

# the .NodeRegistration.KubeletExtraArgs object in the configuration files instead. KUBELET_EXTRA_ARGS should be sourced from this file.

EnvironmentFile=-/etc/sysconfig/kubelet

ExecStart=

ExecStart=/usr/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_CONFIG_ARGS $KUBELET_KUBEADM_ARGS $KUBELET_EXTRA_ARGS

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$mkdir -p /etc/kubernetes/kubelet.d
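The edit above was made by hand in vim. As a rough sketch, the same change could be scripted so that it is repeatable. The function name `add_manifest_path` is hypothetical, and the sketch assumes GNU sed and a `KUBELET_KUBECONFIG_ARGS` line that ends with a closing double quote, as in the file shown above.

```shell
# add_manifest_path CONF DIR
# Append --pod-manifest-path=DIR to the KUBELET_KUBECONFIG_ARGS line of CONF.
add_manifest_path() {
  # Do nothing if the flag is already present, so re-running is harmless.
  if grep -q -- '--pod-manifest-path=' "$1"; then
    return 0
  fi
  # Replace the closing quote of the matching line with " <flag>" plus the quote.
  sed -i "/^Environment=\"KUBELET_KUBECONFIG_ARGS=/ s|\"\$| --pod-manifest-path=$2\"|" "$1"
}

# Example call (left commented out so the sketch does not touch a real system):
# add_manifest_path /usr/lib/systemd/system/kubelet.service.d/10-kubeadm.conf /etc/kubernetes/kubelet.d
```

The function only covers the simple case shown here; a directory containing `|` or `&` would need escaping before being passed to sed.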

Once the configuration has been modified, copy it to the nodes, reload the systemd configuration, and restart kubelet

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible node -m copy -a "src=/usr/lib/systemd/system/kubelet.service.d/10-kubeadm.conf dest=/usr/lib/systemd/system/kubelet.service.d/10-kubeadm.conf force=yes"

192.168.26.82 | CHANGED => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python"

},

"changed": true,

"checksum": "13994d828e831f4aa8760c2de36e100e7e255526",

"dest": "/usr/lib/systemd/system/kubelet.service.d/10-kubeadm.conf",

"gid": 0,

"group": "root",

"md5sum": "0cfe0f899ea24596f95aa2e175f0dd08",

"mode": "0644",

"owner": "root",

"size": 946,

"src": "/root/.ansible/tmp/ansible-tmp-1637403640.92-32296-63660481173900/source",

"state": "file",

"uid": 0

}

192.168.26.83 | CHANGED => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python"

},

"changed": true,

"checksum": "13994d828e831f4aa8760c2de36e100e7e255526",

"dest": "/usr/lib/systemd/system/kubelet.service.d/10-kubeadm.conf",

"gid": 0,

"group": "root",

"md5sum": "0cfe0f899ea24596f95aa2e175f0dd08",

"mode": "0644",

"owner": "root",

"size": 946,

"src": "/root/.ansible/tmp/ansible-tmp-1637403640.89-32297-164984088437265/source",

"state": "file",

"uid": 0

}

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible node -m shell -a "mkdir -p /etc/kubernetes/kubelet.d"

192.168.26.83 | CHANGED | rc=0 >>

192.168.26.82 | CHANGED | rc=0 >>

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible node -m shell -a "systemctl daemon-reload"

192.168.26.82 | CHANGED | rc=0 >>

192.168.26.83 | CHANGED | rc=0 >>

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible node -m shell -a "systemctl restart kubelet"

192.168.26.83 | CHANGED | rc=0 >>

192.168.26.82 | CHANGED | rc=0 >>

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$
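The four ad-hoc commands above (copy, mkdir, daemon-reload, restart) can be gathered into one small wrapper. This is a sketch, not what the author ran: the names `run` and `rollout_kubelet_conf` are made up, it uses the ansible `file` and `systemd` modules instead of `shell`, and by default it only prints the commands (set `DRY_RUN=0` to execute them).

```shell
# Print commands by default; execute them only when DRY_RUN=0.
DRY_RUN="${DRY_RUN:-1}"

run() {
  if [ "$DRY_RUN" = 1 ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# rollout_kubelet_conf GROUP CONF
# Push CONF to every host in the inventory GROUP, make sure the static pod
# directory exists, then reload systemd and restart kubelet.
rollout_kubelet_conf() {
  run ansible "$1" -m copy -a "src=$2 dest=$2 force=yes"
  run ansible "$1" -m file -a "path=/etc/kubernetes/kubelet.d state=directory"
  run ansible "$1" -m systemd -a "name=kubelet state=restarted daemon_reload=yes"
}

rollout_kubelet_conf node /usr/lib/systemd/system/kubelet.service.d/10-kubeadm.conf
```

Using `state=directory` and `daemon_reload=yes` keeps the steps idempotent, so the wrapper can be re-run after every change to the drop-in file.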

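Now we need to create a YAML file in /etc/kubernetes/kubelet.d on the node; the kubelet then creates a pod from that file. A pod created this way is not managed by the master. We again use ansible to do this.

Create two static pods in the default namespace, one on each node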

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$cat static-pod.yaml

apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: pod-static
  name: pod-static
  namespace: default
spec:
  containers:
  - image: nginx
    imagePullPolicy: IfNotPresent
    name: pod-demo
    resources: {}
  dnsPolicy: ClusterFirst
  restartPolicy: Always
status: {}

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible node -m copy -a "src=./static-pod.yaml dest=/etc/kubernetes/kubelet.d/static-pod.yaml"

192.168.26.83 | CHANGED => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python"

},

"changed": true,

"checksum": "9b059b0acb4cd99272809d1785926092816f8771",

"dest": "/etc/kubernetes/kubelet.d/static-pod.yaml",

"gid": 0,

"group": "root",

"md5sum": "41515d4c5c116404cff9289690cdcc20",

"mode": "0644",

"owner": "root",

"size": 302,

"src": "/root/.ansible/tmp/ansible-tmp-1637474358.05-72240-139405051351544/source",

"state": "file",

"uid": 0

}

192.168.26.82 | CHANGED => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python"

},

"changed": true,

"checksum": "9b059b0acb4cd99272809d1785926092816f8771",

"dest": "/etc/kubernetes/kubelet.d/static-pod.yaml",

"gid": 0,

"group": "root",

"md5sum": "41515d4c5c116404cff9289690cdcc20",

"mode": "0644",

"owner": "root",

"size": 302,

"src": "/root/.ansible/tmp/ansible-tmp-1637474357.94-72238-185516913523170/source",

"state": "file",

"uid": 0

}
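Once the file is in place, the kubelet on each node starts the pod on its own and registers a read-only "mirror pod" with the API server, named after the pod plus the node name. The sketch below just computes the names one would expect to see in `kubectl get pods`; the helper `mirror_pod_name` is made up for this example, and the node names are taken from the earlier output.

```shell
# mirror_pod_name POD_NAME NODE_NAME
# A static pod shows up in the API server as "<pod name>-<node name>".
mirror_pod_name() {
  printf '%s-%s\n' "$1" "$2"
}

for node in vms82.liruilongs.github.io vms83.liruilongs.github.io; do
  mirror_pod_name pod-static "$node"
done
```

Deleting a mirror pod with kubectl does not remove the static pod, because the kubelet recreates the mirror; to remove it, delete the YAML file from /etc/kubernetes/kubelet.d on the node.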

Check the configuration file on the nodes

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible node -m shell -a " cat /etc/kubernetes/kubelet.d/static-pod.yaml"

192.168.26.83 | CHANGED | rc=0 >>

apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: pod-static
  name: pod-static
  namespace: default
spec:
  containers:
  - image: nginx
    imagePullPolicy: IfNotPresent
    name: pod-demo
    resources: {}
  dnsPolicy: ClusterFirst
  restartPolicy: Always