Apache IoTDB System Development: Writing Data on HDFS

Shared Storage Architecture

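TsFiles and their related data files can currently be stored either on the local file system or on the Hadoop Distributed File System (HDFS). Switching the storage file system takes only a few configuration changes.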

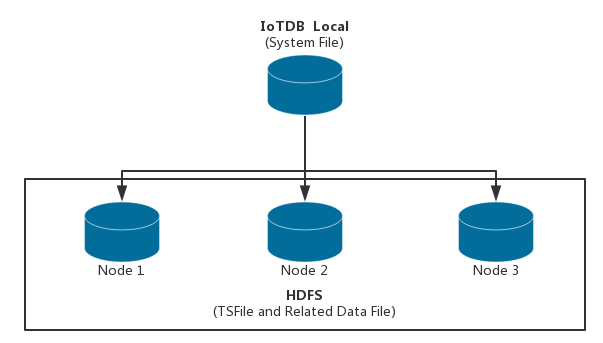

系统架构

当您配置将 TSFile 存储在 HDFS 上时,您的数据文件将位于分布式存储中。系统架构如下:

Configuration and Usage

To store TsFiles and related data files in HDFS, follow these steps:

First, download the source release from the website or git clone the repository. Release versions are tagged release/x.x.x.

Build the server and Hadoop modules with: `mvn clean package -pl server,hadoop -am -Dmaven.test.skip=true`

Then, copy the Hadoop module's target jar `hadoop-tsfile-0.10.0-jar-with-dependencies.jar` into the server's target lib folder `.../server/target/iotdb-server-0.10.0/lib`.
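The build and copy steps above can be scripted. The following is a minimal sketch, not an official tool: the function names and the source-root argument are hypothetical, and it assumes the Hadoop module jar lands in `hadoop/target/` under the source root (the usual Maven layout for a module named `hadoop`).

```shell
#!/usr/bin/env bash
# Sketch only: function names and the source-root argument are assumptions.
VERSION="0.10.0"

build_modules() {
  # $1: root of the IoTDB source tree.
  # Builds the server and hadoop modules, skipping tests (command from the text).
  (cd "$1" && mvn clean package -pl server,hadoop -am -Dmaven.test.skip=true)
}

copy_hadoop_jar() {
  # $1: root of the IoTDB source tree.
  local src="$1"
  # Assumed jar location: standard Maven output folder of the hadoop module.
  local jar="$src/hadoop/target/hadoop-tsfile-${VERSION}-jar-with-dependencies.jar"
  local lib="$src/server/target/iotdb-server-${VERSION}/lib"
  [ -f "$jar" ] || { echo "missing jar: $jar" >&2; return 1; }
  [ -d "$lib" ] || { echo "missing lib folder: $lib" >&2; return 1; }
  cp "$jar" "$lib/"
}
```

Calling `build_modules /path/to/iotdb && copy_hadoop_jar /path/to/iotdb` would run both steps in order; the copy step fails early if the build did not produce the expected files.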

Edit the user configuration in `iotdb-engine.properties`. The related settings are:

- tsfile_storage_fs

| Name | tsfile_storage_fs |
|---|---|
| Description | The storage file system of TsFile and related data files. Currently the LOCAL file system and HDFS are supported. |
| Type | String |
| Default | LOCAL |
| Effective | After restarting the system |

- core_site_path

| Name | core_site_path |
|---|---|
| Description | Absolute file path of core-site.xml if TsFile and related data files are stored in HDFS. |
| Type | String |
| Default | /etc/hadoop/conf/core-site.xml |
| Effective | After restarting the system |

- hdfs_site_path

| Name | hdfs_site_path |
|---|---|
| Description | Absolute file path of hdfs-site.xml if TsFile and related data files are stored in HDFS. |
| Type | String |
| Default | /etc/hadoop/conf/hdfs-site.xml |
| Effective | After restarting the system |

- hdfs_ip

| Name | hdfs_ip |
|---|---|
| Description | IP of HDFS if TsFile and related data files are stored in HDFS. If more than one hdfs_ip is configured, Hadoop HA is used. |
| Type | String |
| Default | localhost |
| Effective | After restarting the system |

- hdfs_port

| Name | hdfs_port |
|---|---|
| Description | Port of HDFS if TsFile and related data files are stored in HDFS. |
| Type | String |
| Default | 9000 |
| Effective | After restarting the system |

- dfs_nameservices

| Name | dfs_nameservices |
|---|---|
| Description | Nameservices of HDFS HA if using Hadoop HA |
| Type | String |
| Default | hdfsnamespace |
| Effective | After restarting the system |

- dfs_ha_namenodes

| Name | dfs_ha_namenodes |
|---|---|
| Description | NameNodes under the DFS nameservices of HDFS HA if using Hadoop HA |
| Type | String |
| Default | nn1,nn2 |
| Effective | After restarting the system |

- dfs_ha_automatic_failover_enabled

| Name | dfs_ha_automatic_failover_enabled |
|---|---|
| Description | Whether to use automatic failover if using Hadoop HA |
| Type | Boolean |
| Default | true |
| Effective | After restarting the system |

- dfs_client_failover_proxy_provider

| Name | dfs_client_failover_proxy_provider |
|---|---|
| Description | Proxy provider if using Hadoop HA with automatic failover enabled |
| Type | String |
| Default | org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider |
| Effective | After restarting the system |

- hdfs_use_kerberos

| Name | hdfs_use_kerberos |
|---|---|
| Description | Whether to use Kerberos to authenticate with HDFS |
| Type | String |
| Default | false |
| Effective | After restarting the system |

- kerberos_keytab_file_path

| Name | kerberos_keytab_file_path |
|---|---|
| Description | Full path of the Kerberos keytab file |
| Type | String |
| Default | /path |
| Effective | After restarting the system |

- kerberos_principal

| Name | kerberos_principal |
|---|---|
| Description | Kerberos principal |
| Type | String |
| Default | your principal |
| Effective | After restarting the system |
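As an illustration of applying the settings listed above, here is a small sketch of a helper that sets one `key=value` line in a properties file. The helper name `set_prop`, the `CONF` placeholder, and the example IP address are hypothetical; the value `HDFS` for `tsfile_storage_fs` is taken from the description of that setting.

```shell
#!/usr/bin/env bash
# Sketch only: set_prop is a hypothetical helper, not part of IoTDB.
set_prop() {
  # $1: key, $2: value, $3: path to the properties file.
  local key="$1" value="$2" file="$3"
  if grep -q "^${key}=" "$file"; then
    # Replace the existing line. A temp file is used instead of `sed -i`
    # because in-place flags differ between sed implementations.
    # Note: values containing `|`, `&`, or `\` would need escaping first.
    sed "s|^${key}=.*|${key}=${value}|" "$file" > "$file.tmp" && mv "$file.tmp" "$file"
  else
    # The key is not present as an active line, so append it.
    echo "${key}=${value}" >> "$file"
  fi
}

# Example (CONF is a placeholder for your iotdb-engine.properties path):
#   set_prop tsfile_storage_fs HDFS "$CONF"
#   set_prop hdfs_ip 192.168.1.10 "$CONF"   # hypothetical NameNode address
#   set_prop hdfs_port 9000 "$CONF"
```

The Hadoop HA and Kerberos settings can be written the same way. All of these settings take effect only after the server is restarted.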

Start the server, and TsFiles will be stored on HDFS.

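To switch the storage file system back to local, set `tsfile_storage_fs` to `LOCAL`. If some data files already exist on HDFS, either download them and move them into your configured data folder (`../server/target/iotdb-server-0.10.0/data/data` by default), or restart the process and import the data into IoTDB.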
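The download option could look like the following sketch. `hdfs dfs -get` is part of the standard Hadoop command line; the function name `fetch_tsfiles`, the staging folder, and the HDFS source directory are assumptions, since the text does not say where on HDFS the files are written.

```shell
#!/usr/bin/env bash
# Sketch only: fetch_tsfiles and both of its arguments are hypothetical.
fetch_tsfiles() {
  # $1: HDFS directory that holds the data files (depends on your setup).
  # $2: local staging folder to download into.
  local hdfs_dir="$1" staging="$2"
  mkdir -p "$staging"
  # `hdfs dfs -get` copies a file or directory from HDFS to the local file system.
  hdfs dfs -get "$hdfs_dir" "$staging/"
}
```

After the download, the files would still need to be moved from the staging folder into the configured data folder before the server is restarted with `tsfile_storage_fs` set to `LOCAL`.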

When starting the server or trying to create a timeseries, you may encounter the following error:

ERROR org.apache.iotdb.tsfile.fileSystem.fsFactory.HDFSFactory:62 - Failed to get Hadoop file system. Please check your dependency of Hadoop module.

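This error indicates that the Hadoop module dependency is missing from the IoTDB server. To fix it:

1. Build the Hadoop module: `mvn clean package -pl hadoop -am -Dmaven.test.skip=true`

2. Copy the Hadoop module's target jar `hadoop-tsfile-0.10.0-jar-with-dependencies.jar` into the server's target lib folder `.../server/target/iotdb-server-0.10.0/lib`.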
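Before rebuilding, it can help to confirm that the jar really is absent from the lib folder. A minimal sketch, where `has_hadoop_jar` is a hypothetical helper and the file name pattern is derived from the jar name given in the text:

```shell
#!/usr/bin/env bash
# Sketch only: has_hadoop_jar is a hypothetical helper, not part of IoTDB.
has_hadoop_jar() {
  # $1: the server lib folder to inspect.
  # Succeeds if a hadoop-tsfile jar-with-dependencies of any version is present.
  ls "$1"/hadoop-tsfile-*-jar-with-dependencies.jar >/dev/null 2>&1
}

# Example (LIB_DIR is a placeholder for your server lib folder):
#   has_hadoop_jar "$LIB_DIR" || echo "Hadoop module jar not found in $LIB_DIR"
```

If the jar is present and the error persists, the cause lies elsewhere and rebuilding the module is unlikely to help.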
