Hyperparameter Optimization Algorithms: BO-SMAC

Method Description

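SMAC (Sequential Model-based Algorithm Configuration), introduced in the paper "Sequential Model-Based Optimization for General Algorithm Configuration", is a sequential model-based optimization (SMBO) method. The algorithm:

- Uses a random forest (RF) as the surrogate model. The mean and variance of the individual trees' outputs are used as the surrogate model's output.
- Uses EI (expected improvement) or TS (Thompson sampling) as the acquisition function to generate new sample points. Guided by the surrogate model, the acquisition function selects points with a small predicted mean or a large variance.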

For algorithm details, see the paper.
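To make the two ingredients above concrete, here is a minimal NumPy-only sketch. It is not the SMAC implementation: the helper names `forest_mean_std` and `expected_improvement` are hypothetical, the per-tree predictions are made-up illustrative numbers, and the standard closed-form EI for minimization is assumed.

```python
import math
import numpy as np

# Element-wise error function; otypes is set so empty inputs are also accepted.
_erf = np.vectorize(math.erf, otypes=[float])

def forest_mean_std(tree_predictions):
    """Collapse per-tree predictions of shape (n_trees, n_points) into mean and std."""
    preds = np.asarray(tree_predictions, dtype=float)
    return preds.mean(axis=0), preds.std(axis=0)

def expected_improvement(mean, std, best_so_far):
    """Closed-form EI for minimization, given the surrogate's mean and std."""
    mean = np.asarray(mean, dtype=float)
    std = np.asarray(std, dtype=float)
    improvement = best_so_far - mean
    # Where the trees agree exactly (std == 0), EI reduces to max(improvement, 0).
    ei = np.maximum(improvement, 0.0)
    positive = std > 0
    z = improvement[positive] / std[positive]
    cdf = 0.5 * (1.0 + _erf(z / math.sqrt(2.0)))
    pdf = np.exp(-0.5 * z ** 2) / math.sqrt(2.0 * math.pi)
    ei[positive] = improvement[positive] * cdf + std[positive] * pdf
    return ei

# Illustrative predictions of 3 trees at 3 candidate points (made-up values).
tree_predictions = [
    [1.0, 0.5, 2.0],
    [1.0, 0.7, 0.0],
    [1.0, 0.6, 4.0],
]
mean, std = forest_mean_std(tree_predictions)
ei = expected_improvement(mean, std, best_so_far=0.8)
print(mean, std, ei)
```

In this toy example the first candidate gets zero EI (all trees agree and predict a value worse than the best so far), while the other two get positive EI, one because its mean is low and one because its variance is high.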

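Method Characteristics

Random forests handle discrete inputs well, so SMAC is well suited to optimization problems with many discrete parameters. The trees in a random forest are highly diverse and yield a large variance at points that have been explored only a few times, which gives SMAC strong exploration ability.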

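Performance Tests

This section tests the performance of the SMAC algorithm on four functions: Sphere, Ackley, Himmelblau, and Rastrigin.

Introduction to the Sphere Function

The function is bowl-shaped, with a single global optimum and no other local optima.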

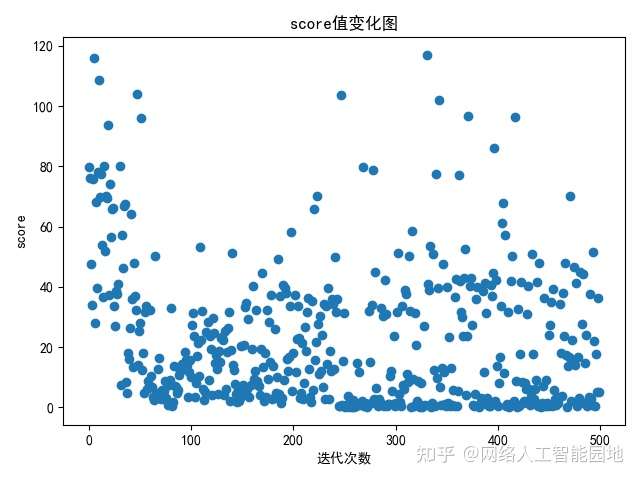

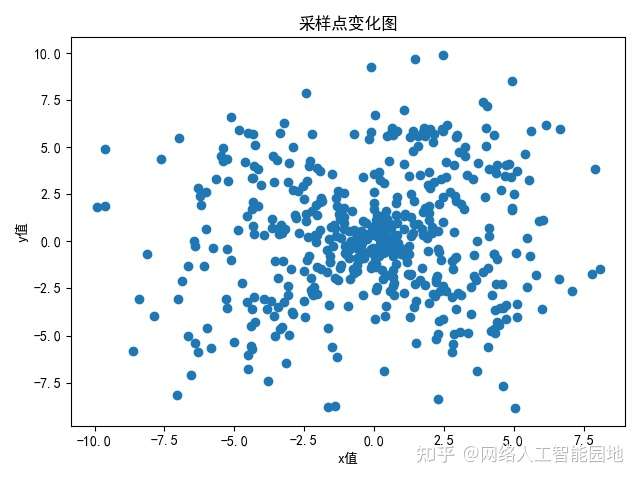

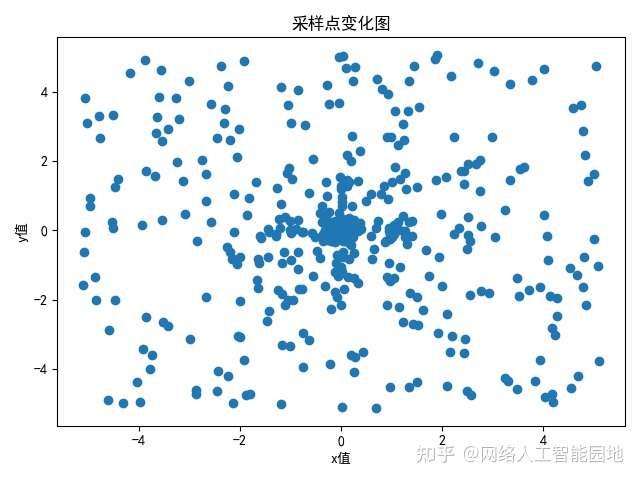

SMAC Test Results on the Sphere Function

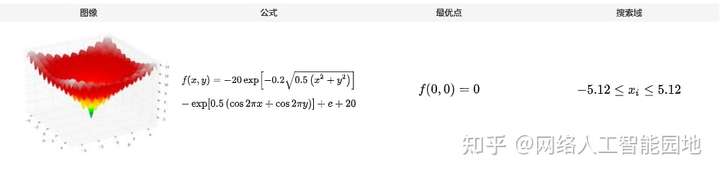

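Introduction to the Ackley Function

The function resembles stalactites in a karst cave, with a single global optimum and a large number of local optima.

SMAC Test Results on the Ackley Function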
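As a quick sanity check of this description, the sketch below evaluates a plain-NumPy version of the two-dimensional Ackley function. It uses the same formula as the test code later in this article, but takes `x` and `y` as ordinary arguments; the name `ackley_2d` is a hypothetical helper, not part of any library.

```python
import numpy as np

def ackley_2d(x, y):
    """Two-dimensional Ackley function, written as a plain function of x and y."""
    return (
        -20 * np.exp(-0.2 * np.sqrt(0.5 * (x ** 2 + y ** 2)))
        - np.exp(0.5 * (np.cos(2 * np.pi * x) + np.cos(2 * np.pi * y)))
        + np.e
        + 20
    )

value_at_origin = ackley_2d(0.0, 0.0)   # global optimum, approximately 0
value_elsewhere = ackley_2d(1.0, 1.0)   # a point away from the origin, well above 0
print(value_at_origin, value_elsewhere)
```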

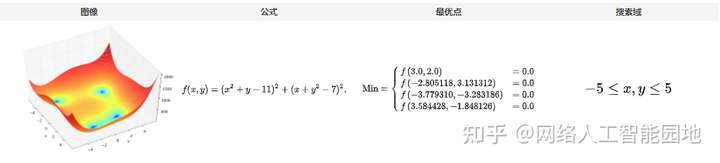

Introduction to the Himmelblau Function

The function is valley-shaped, with four global optima and no other local optima.

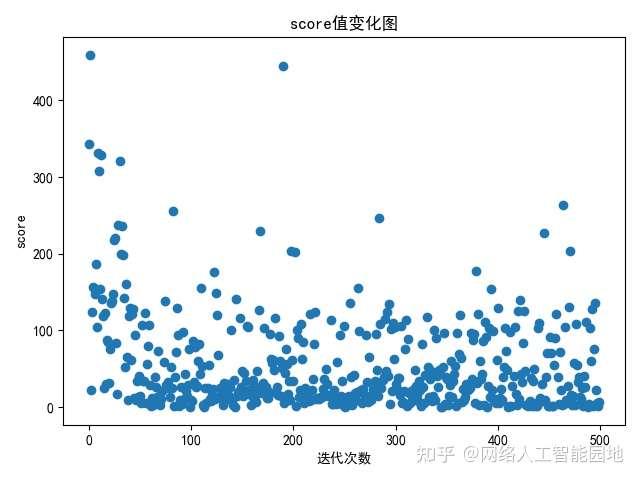

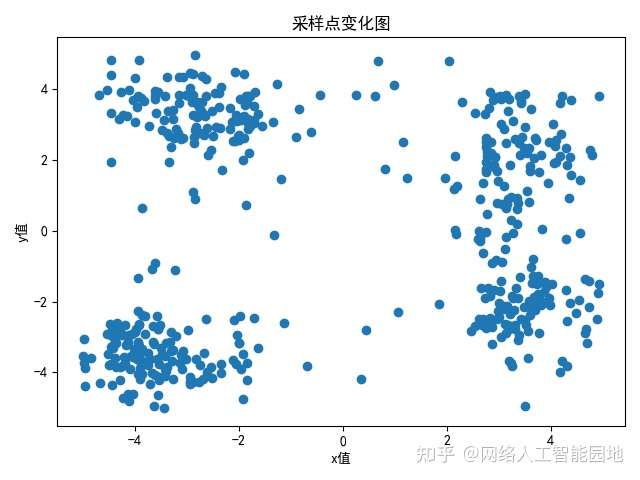
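The four global optima can be checked numerically. The sketch below is a plain-NumPy version of the Himmelblau function (hypothetical helper name `himmelblau_2d`) evaluated at the commonly cited minimizer coordinates, rounded to six decimals; these coordinates are not taken from this article.

```python
import numpy as np

def himmelblau_2d(x, y):
    """Himmelblau function, written as a plain function of x and y."""
    return (x ** 2 + y - 11) ** 2 + (x + y ** 2 - 7) ** 2

# Commonly cited minimizers, rounded to six decimals (assumed, not from the article).
minimizers = [
    (3.0, 2.0),
    (-2.805118, 3.131312),
    (-3.779310, -3.283186),
    (3.584428, -1.848126),
]
values = [himmelblau_2d(x, y) for x, y in minimizers]
for point, value in zip(minimizers, values):
    print(point, value)  # each value is approximately 0
```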

SMAC Test Results on the Himmelblau Function

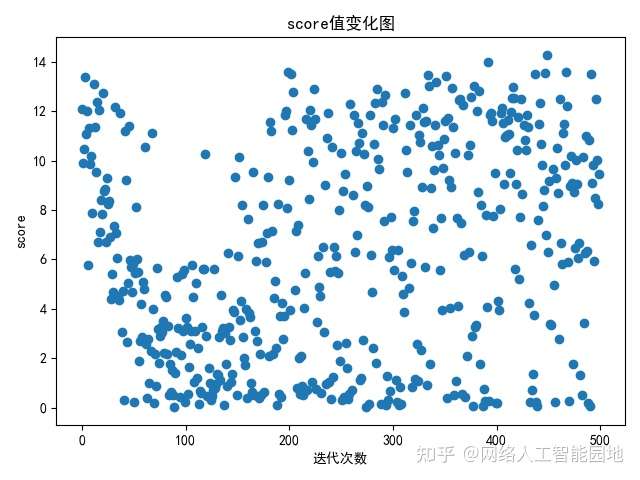

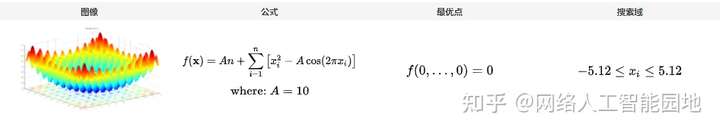

Introduction to the Rastrigin Function

The function resembles a forest, with a single global optimum and a large number of local optima.

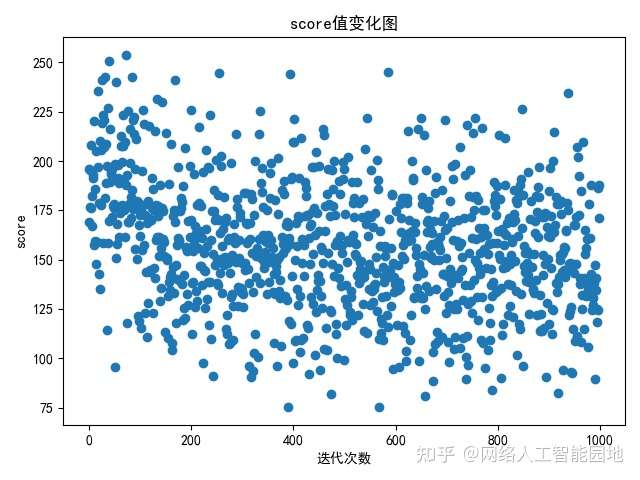
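For reference, here is a plain-NumPy sketch of the n-dimensional Rastrigin function with A = 10, matching the formula used in the test code later in this article. The name `rastrigin_nd` is a hypothetical helper. The two evaluations show the global optimum at the origin and the value at a point whose coordinates are all 1.

```python
import numpy as np

def rastrigin_nd(args, A=10):
    """n-dimensional Rastrigin function for a sequence of coordinates."""
    args = np.asarray(args, dtype=float)
    n = args.size
    return A * n + np.sum(np.square(args)) - A * np.sum(np.cos(2 * np.pi * args))

value_at_origin = rastrigin_nd(np.zeros(10))  # global optimum, 0
value_at_ones = rastrigin_nd(np.ones(10))     # approximately 10 in 10 dimensions
print(value_at_origin, value_at_ones)
```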

SMAC Test Results on the Rastrigin Function

Run time: 265.02 s (4.42 min)

Best result: 75.40

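Discussion of Results

SMAC converges quickly in the early iterations. After a small number of exploitation iterations, it goes on to explore extensively across the whole search space.

SMAC therefore shows good convergence and good exploration ability.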

Performance Test Code

Because SMAC cannot cold-start, the hyperparameter optimizer runs 5 random-search trials by default before running SMAC.
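The idea behind this warm-up can be sketched as follows: draw a few uniformly random points from the search space and evaluate them, so that the surrogate model has data to fit before model-based sampling begins. This is only an illustration under assumptions; `random_warmup` is a hypothetical helper and does not reflect how the `naie` optimizer implements its warm-up internally.

```python
import numpy as np

def random_warmup(bounds, n_init=5, seed=0):
    """Draw n_init uniformly random points inside the given (low, high) bounds."""
    rng = np.random.default_rng(seed)
    lows = np.array([low for low, _ in bounds], dtype=float)
    highs = np.array([high for _, high in bounds], dtype=float)
    return rng.uniform(lows, highs, size=(n_init, len(bounds)))

# Same search range as the Sphere test: x and y in [-10, 10].
points = random_warmup([(-10, 10), (-10, 10)])
values = np.sum(np.square(points), axis=1)  # Sphere objective at each warm-up point
print(points)
print(values)
```

The resulting `(points, values)` pairs would form the initial history on which a surrogate model could be trained.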

Sphere Function Test Code

    import numpy as np
    from naie.context import Context
    from naie.model_selection import HyperparameterOptimization

    # Define the Sphere function (x and y are read from Context)
    def sphere():
        x, y = Context.get("x"), Context.get("y")
        return np.square(x) + np.square(y)

    # Define the search configuration
    config = {
        "goal": "min",
        "trial_iter": 500,
        "method": "bo-smac",
        "domain_spaces": {
            "sphere": {
                "hyper_parameters": [
                    {"name": "x", "range": [-10, 10], "type": "FLOAT"},
                    {"name": "y", "range": [-10, 10], "type": "FLOAT"},
                ]
            }
        },
    }

    # Run BO-SMAC
    opt = HyperparameterOptimization(sphere, configuration=config)
    opt.start_trials()

Ackley Function Test Code

    import numpy as np
    from naie.context import Context
    from naie.model_selection import HyperparameterOptimization

    # Define the Ackley function (x and y are read from Context)
    def ackley():
        x, y = Context.get("x"), Context.get("y")
        return (
            -20 * np.exp(-0.2 * np.sqrt(0.5 * (np.square(x) + np.square(y))))
            - np.exp(0.5 * (np.cos(2 * np.pi * x) + np.cos(2 * np.pi * y)))
            + np.e
            + 20
        )

    # Define the search configuration
    config = {
        "goal": "min",
        "trial_iter": 500,
        "method": "bo-smac",
        "domain_spaces": {
            "ackley": {
                "hyper_parameters": [
                    {"name": "x", "range": [-5.12, 5.12], "type": "FLOAT"},
                    {"name": "y", "range": [-5.12, 5.12], "type": "FLOAT"},
                ]
            }
        },
    }

    # Run BO-SMAC
    opt = HyperparameterOptimization(ackley, configuration=config)
    opt.start_trials()

Himmelblau Function Test Code

    import numpy as np
    from naie.context import Context
    from naie.model_selection import HyperparameterOptimization

    # Define the Himmelblau function (x and y are read from Context)
    def himmelblau():
        x, y = Context.get("x"), Context.get("y")
        return np.square(np.square(x) + y - 11) + np.square(x + np.square(y) - 7)

    # Define the search configuration
    config = {
        "goal": "min",
        "trial_iter": 500,
        "method": "bo-smac",
        "domain_spaces": {
            "himmelblau": {
                "hyper_parameters": [
                    {"name": "x", "range": [-5, 5], "type": "FLOAT"},
                    {"name": "y", "range": [-5, 5], "type": "FLOAT"},
                ]
            }
        },
    }

    # Run BO-SMAC
    opt = HyperparameterOptimization(himmelblau, configuration=config)
    opt.start_trials()

Rastrigin Function Test Code

A 10-dimensional Rastrigin function is used to test the performance of BO-SMAC on a high-dimensional problem.

    import numpy as np
    from naie.context import Context
    from naie.model_selection import HyperparameterOptimization

    def _square_sum(args):
        args = np.asarray(args)
        return np.sum(np.square(args))

    def _cos_sum(args):
        args = np.asarray(args)
        return np.sum(np.cos(2 * np.pi * args))

    # Define the Rastrigin function (the 10 coordinates a..j are read from Context)
    def rastrigin():
        args = (
            Context.get("a"), Context.get("b"), Context.get("c"), Context.get("d"),
            Context.get("e"), Context.get("f"), Context.get("g"), Context.get("h"),
            Context.get("i"), Context.get("j"),
        )
        A = 10
        n = len(args)
        return A * n + _square_sum(args) - A * _cos_sum(args)

    # Define the search configuration
    config = {
        "goal": "min",
        # Run 1000 hyperparameter optimization trials
        "trial_iter": 1000,
        "method": "bo-smac",
        "domain_spaces": {
            # The original listing reused the key "himmelblau" here
            "rastrigin": {
                "hyper_parameters": [
                    {"name": "a", "range": [-5.12, 5.12], "type": "FLOAT"},
                    {"name": "b", "range": [-5.12, 5.12], "type": "FLOAT"},
                    {"name": "c", "range": [-5.12, 5.12], "type": "FLOAT"},
                    {"name": "d", "range": [-5.12, 5.12], "type": "FLOAT"},
                    {"name": "e", "range": [-5.12, 5.12], "type": "FLOAT"},
                    {"name": "f", "range": [-5.12, 5.12], "type": "FLOAT"},
                    {"name": "g", "range": [-5.12, 5.12], "type": "FLOAT"},
                    {"name": "h", "range": [-5.12, 5.12], "type": "FLOAT"},
                    {"name": "i", "range": [-5.12, 5.12], "type": "FLOAT"},
                    {"name": "j", "range": [-5.12, 5.12], "type": "FLOAT"},
                ]
            }
        },
    }

    # Run BO-SMAC
    opt = HyperparameterOptimization(rastrigin, configuration=config)
    opt.start_trials()

Source: zhuanlan.zhihu.com. Author: 网络人工智能园地. Copyright belongs to the original author; please contact the author for permission to repost.

Original link: zhuanlan.zhihu.com/p/379350567
