Tungsten Fabric SDN — within AWS EKS


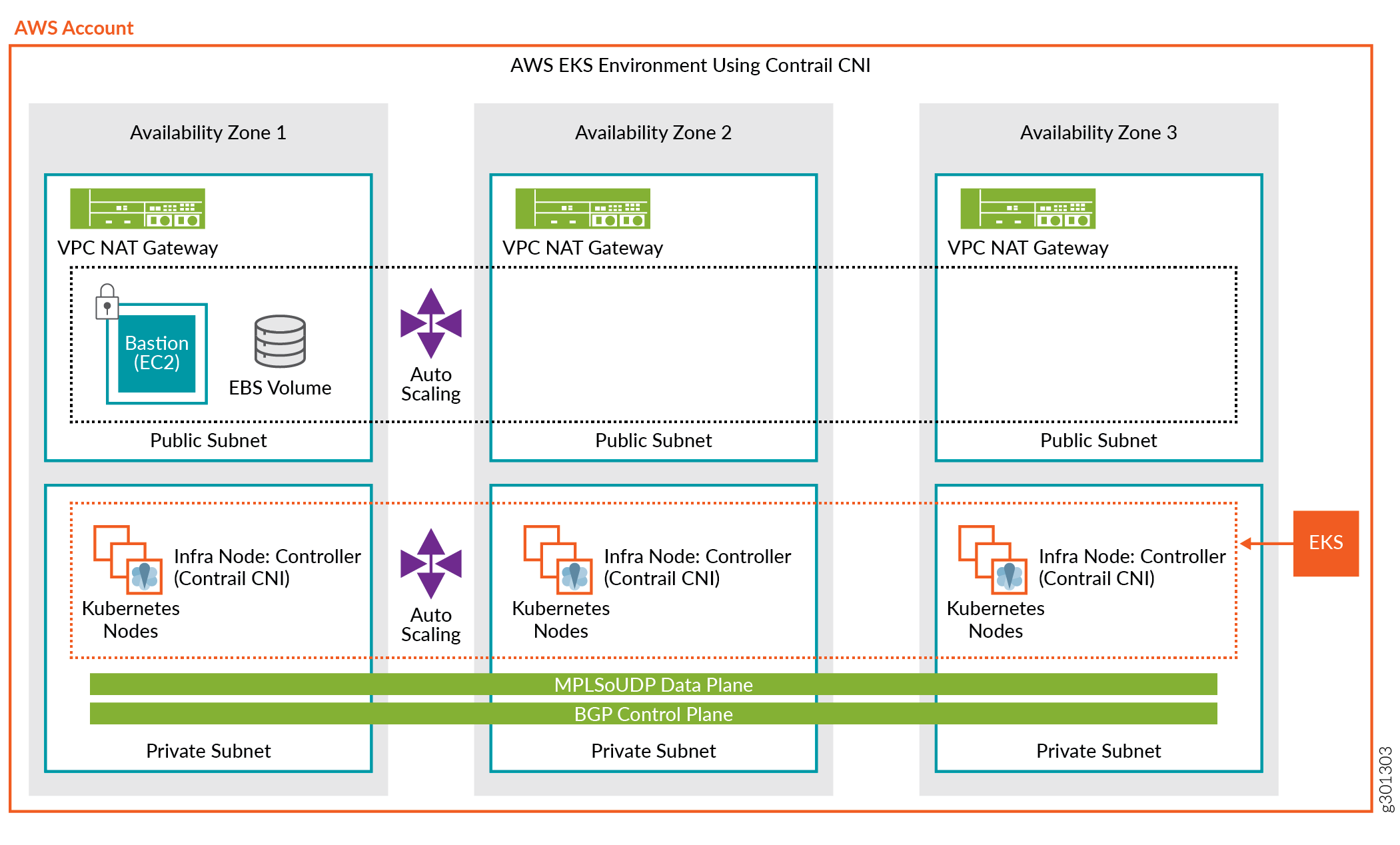

Contrail within AWS EKS

The default CNI on AWS EKS is the Amazon VPC CNI (amazon-vpc-cni-k8s), which supports native Amazon VPC networking. It automatically creates elastic network interfaces and attaches them to the Amazon EC2 nodes. It also assigns each Pod and Service a private IPv4/IPv6 address from the VPC.

Contrail within AWS EKS installs Contrail Networking as the CNI in an AWS EKS environment.

Software versions:

- AWS CLI 1.16.156 or later

- EKS 1.16 or later

- Kubernetes 1.18 or later

- Contrail 2008 or later

Official documentation: https://www.juniper.net/documentation/en_US/contrail20/topics/task/installation/how-to-install-contrail-aws-eks.html

Video tutorial: https://www.youtube.com/watch?v=gVL31tJZwvQ&list=PLBO-FXA5nIK_Xi-FbfxLFDCUx4EvIy6_d&index=1

Install Contrail Networking as the CNI for EKS

- Install the AWS CLI (documentation: https://docs.aws.amazon.com/zh_cn/cli/latest/userguide/cli-chap-welcome.html)

$ curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip"

$ unzip awscliv2.zip

$ sudo ./aws/install

$ aws --version

aws-cli/2.7.23 Python/3.9.11 Linux/3.10.0-1160.66.1.el7.x86_64 exe/x86_64.centos.7 prompt/off

$ aws configure

AWS Access Key ID [None]: XX

AWS Secret Access Key [None]: XX

Default region name [None]: us-east-1

Default output format [None]: json

This walkthrough uses us-east-1 as the default Region. Note that, depending on your account, the ap-east-1 Region may not be available.
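Whether a Region such as ap-east-1 can be used depends on its opt-in status for the account. The sketch below is one way to check this before choosing a default Region. It relies on the `OptInStatus` field returned by `aws ec2 describe-regions --all-regions`; the helper names `region_usable` and `check_region` are hypothetical and not part of the deployer scripts.

```shell
# region_usable STATUS
# Hypothetical helper: succeeds when an OptInStatus value means the Region
# can be used by this account.
region_usable() {
  case "$1" in
    opt-in-not-required|opted-in) return 0 ;;
    *) return 1 ;;
  esac
}

# check_region REGION
# Hypothetical helper: looks up the Region's OptInStatus with the AWS CLI
# and reports whether the Region can be used.
check_region() {
  status=$(aws ec2 describe-regions --all-regions \
    --query "Regions[?RegionName=='$1'].OptInStatus" --output text)
  if region_usable "$status"; then
    echo "$1 is usable ($status)"
  else
    echo "$1 is not usable (${status:-unknown})"
  fi
}
```

For example, `check_region ap-east-1` prints the status reported for that Region once `aws configure` has been completed.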

- Install kubectl (documentation: https://kubernetes.io/zh-cn/docs/tasks/tools/install-kubectl-linux/)

$ cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-\$basearch

enabled=1

gpgcheck=1

gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg

EOF

$ sudo yum install -y kubectl

$ kubectl version --client

Client Version: version.Info{Major:"1", Minor:"24", GitVersion:"v1.24.3", GitCommit:"aef86a93758dc3cb2c658dd9657ab4ad4afc21cb", GitTreeState:"clean", BuildDate:"2022-07-13T14:30:46Z", GoVersion:"go1.18.3", Compiler:"gc", Platform:"linux/amd64"}

Kustomize Version: v4.5.4

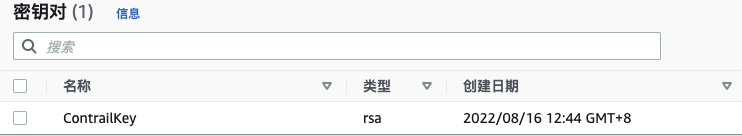

- Create an EC2 key pair (documentation: https://docs.aws.amazon.com/zh_cn/AWSEC2/latest/UserGuide/ec2-key-pairs.html)

$ aws ec2 create-key-pair \

--key-name ContrailKey \

--key-type rsa \

--key-format pem \

--query "KeyMaterial" \

--output text > ./ContrailKey.pem

$ chmod 400 ContrailKey.pem
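Before moving on, it can help to confirm that the private key file exists and is readable only by its owner, since SSH refuses keys with looser permissions. The following is a minimal sketch; `key_mode_ok` is a hypothetical helper, and it assumes GNU `stat` as found on the CentOS host used here.

```shell
# key_mode_ok FILE
# Hypothetical helper: succeeds when FILE exists and its mode is 400 or 600
# (accessible by the owner only).
key_mode_ok() {
  [ -f "$1" ] || return 1
  mode=$(stat -c '%a' "$1") || return 1   # GNU stat, as on Linux
  [ "$mode" = "400" ] || [ "$mode" = "600" ]
}
```

A possible follow-up is `key_mode_ok ./ContrailKey.pem && aws ec2 describe-key-pairs --key-names ContrailKey`, which also confirms that the key pair is registered in the current Region.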

- Download the EKS deployer:

wget https://s3-eu-central-1.amazonaws.com/contrail-one-click-deployers/EKS-Scripts.zip -O EKS-Scripts.zip

unzip EKS-Scripts.zip

cd contrail-as-the-cni-for-aws-eks/

- Edit the variables in the variables.sh file.

- CLOUDFORMATIONREGION: the AWS Region for CloudFormation; this walkthrough uses us-east-1, the same Region as EC2. CloudFormation uses the Quickstart tools to deploy EKS into this Region.

- S3QUICKSTARTREGION: the AWS Region of the S3 bucket; this walkthrough uses us-east-1, the same Region as EC2.

- JUNIPERREPONAME: the username that is allowed to access the Contrail image repository.

- JUNIPERREPOPASS: the password that is allowed to access the Contrail image repository.

- RELEASE: the Contrail release, used as the Contrail container image tag.

- EC2KEYNAME: the name of an existing key pair in the AWS Region.

- BASTIONSSHKEYPATH: the local path where the AWS EC2 SSH private key is stored.

###############################################################################

#complete the below variables for your setup and run the script

###############################################################################

#this is the aws region you are connected to and want to deploy EKS and Contrail into

export CLOUDFORMATIONREGION="us-east-1"

#this is the region for my quickstart, only change if you plan to deploy your own quickstart

export S3QUICKSTARTREGION="us-east-1"

export LOGLEVEL="SYS_DEBUG"

#example Juniper docker login, change to yours

export JUNIPERREPONAME="XX"

export JUNIPERREPOPASS="XX"

export RELEASE="R21.3"

export K8SAPIPORT="443"

export PODSN="10.0.1.0/24"

export SERVICESN="10.0.2.0/24"

export FABRICSN="10.0.3.0/24"

export ASN="64513"

export MYEMAIL="example@mail.com"

#example key, change these two to your existing ec2 ssh key name and private key file for the region

#also don't forget to chmod 0400 [your private key]

export EC2KEYNAME="ContrailKey"

export BASTIONSSHKEYPATH="/root/aws/ContrailKey.pem"

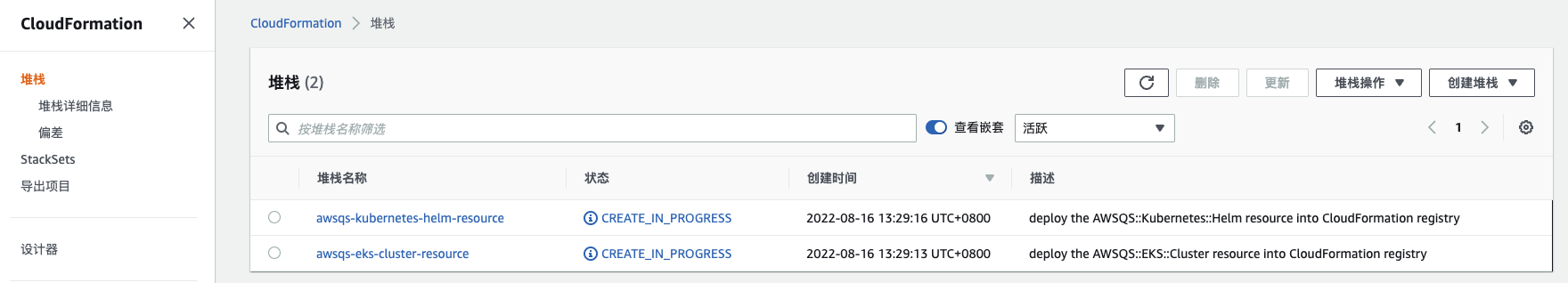
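Because the later scripts all source variables.sh, a forgotten placeholder tends to surface only deep into the deployment. The sketch below flags variables that are empty or still set to the "XX" placeholder used in this article. `unset_or_placeholder` is a hypothetical helper, not something shipped with the deployer.

```shell
# unset_or_placeholder NAME...
# Hypothetical helper: prints each variable name whose value is empty or
# still the "XX" placeholder. Returns non-zero if any name was printed.
unset_or_placeholder() {
  bad=0
  for name in "$@"; do
    # Indirect lookup of the variable called $name (POSIX-compatible).
    eval "value=\${$name:-}"
    if [ -z "$value" ] || [ "$value" = "XX" ]; then
      echo "$name"
      bad=1
    fi
  done
  return "$bad"
}
```

It could be run as `. ./variables.sh && unset_or_placeholder CLOUDFORMATIONREGION S3QUICKSTARTREGION JUNIPERREPONAME JUNIPERREPOPASS RELEASE EC2KEYNAME BASTIONSSHKEYPATH`; any name it prints still needs a real value.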

- Deploy the cloudformation-resources.sh file:

$ vi cloudformation-resources.sh

...

if [ $(aws iam list-roles --query "Roles[].RoleName" | grep CloudFormation-Kubernetes-VPC | sed 's/"//g' | sed 's/,//g' | xargs) = "CloudFormation-Kubernetes-VPC" ]; then

#export ADDROLE="false"

export ADDROLE="Disabled"

else

#export ADDROLE="true"

export ADDROLE="Enabled"

fi

...

$ ./cloudformation-resources.sh

./cloudformation-resources.sh: line 2: [: =: unary operator expected

{

"StackId": "arn:aws:cloudformation:us-east-1:805369193666:stack/awsqs-eks-cluster-resource/8aedfea0-1d22-11ed-8904-0a73b9f64f57"

}

{

"StackId": "arn:aws:cloudformation:us-east-1:805369193666:stack/awsqs-kubernetes-helm-resource/5e6503e0-1d24-11ed-90da-12f2079f0ffd"

}

waiting for cloudformation stack awsqs-kubernetes-helm-resource to complete

waiting for cloudformation stack awsqs-kubernetes-helm-resource to complete

waiting for cloudformation stack awsqs-kubernetes-helm-resource to complete

waiting for cloudformation stack awsqs-kubernetes-helm-resource to complete

waiting for cloudformation stack awsqs-kubernetes-helm-resource to complete

waiting for cloudformation stack awsqs-kubernetes-helm-resource to complete

waiting for cloudformation stack awsqs-kubernetes-helm-resource to complete

All Done
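The `[: =:` error near the top of the output above is what bash prints ("unary operator expected") when an unquoted expansion inside `[ ... ]` turns out to be empty, so the test is left with nothing on the left of `=`. One common cause in a check like the role lookup shown earlier is a command substitution that returns no text. The sketch below shows the quoted form of the same comparison; `role_flag` is a hypothetical helper, and whether this is the exact line that triggered the message here is an assumption.

```shell
# role_flag FOUND
# Hypothetical helper: FOUND is the (possibly empty) role name produced by
# the list-roles lookup. Prints "Disabled" when the role already exists and
# "Enabled" otherwise.
role_flag() {
  # Quoting "$1" keeps the test well-formed even when the lookup is empty.
  if [ "$1" = "CloudFormation-Kubernetes-VPC" ]; then
    echo "Disabled"
  else
    echo "Enabled"
  fi
}
```

In the script, the same effect comes from wrapping the command substitution in double quotes, as in `[ "$(aws iam list-roles ... | xargs)" = "CloudFormation-Kubernetes-VPC" ]`, where `...` stands for the unchanged pipeline.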

- Create the S3 bucket for the Amazon-EKS-Contrail-CNI CloudFormation template.

$ vi mk-s3-bucket.sh

...

#S3REGION="eu-west-1"

S3REGION="us-east-1"

$ ./mk-s3-bucket.sh

************************************************************************************

This script is for the admins, you do not need to run it.

It creates a public s3 bucket if needed and pushed the quickstart git repo up to it

************************************************************************************

ok lets get started...

Are you an admin on the SRE aws account and want to push up the latest quickstart to S3? [y/n] y

ok then lets proceed...

Creating the s3 bucket

make_bucket: aws-quickstart-XX

...

...

********************************************

Your quickstart bucket name will be

********************************************

https://s3-us-east-1.amazonaws.com/aws-quickstart-XX

********************************************************************************************************************

**I recommend going to the console, highlighting your quickstart folder directory and clicking action->make public**

**otherwise you may see permissions errors when running from other accounts **

********************************************************************************************************************

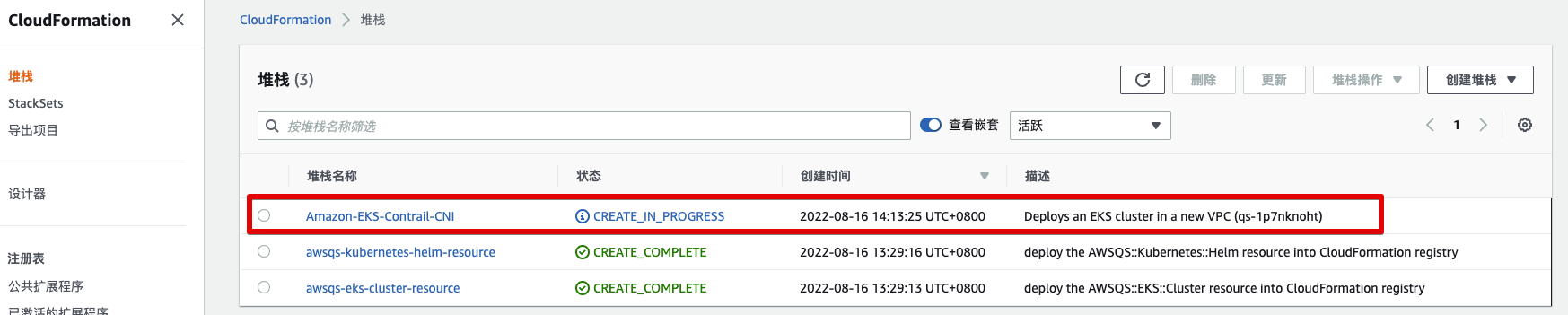

- From the AWS CLI, deploy the EKS quickstart stack.

$ ll quickstart-amazon-eks/

$ ll *.patch

-rw-r--r-- 1 root root 2163 Oct 6 2020 patch1-amazon-eks-iam.patch

-rw-r--r-- 1 root root 1610 Oct 6 2020 patch2-amazon-eks-master.patch

-rw-r--r-- 1 root root 12204 Oct 6 2020 patch3-amazon-eks-nodegroup.patch

-rw-r--r-- 1 root root 381 Oct 6 2020 patch4-amazon-eks.patch

$ vi eks-ubuntu.sh

source ./variables.sh

aws cloudformation create-stack \

--capabilities CAPABILITY_IAM \

--stack-name Amazon-EKS-Contrail-CNI \

--disable-rollback \

--template-url https://aws-quickstart-XX.s3.amazonaws.com/quickstart-amazon-eks/templates/amazon-eks-master.template.yaml \

--parameters \

ParameterKey=AvailabilityZones,ParameterValue="${CLOUDFORMATIONREGION}a\,${CLOUDFORMATIONREGION}b\,${CLOUDFORMATIONREGION}c" \

ParameterKey=KeyPairName,ParameterValue=$EC2KEYNAME \

ParameterKey=RemoteAccessCIDR,ParameterValue="0.0.0.0/0" \

ParameterKey=NodeInstanceType,ParameterValue="m4.xlarge" \

ParameterKey=NodeVolumeSize,ParameterValue="100" \

ParameterKey=NodeAMIOS,ParameterValue="UBUNTU-EKS-HVM" \

ParameterKey=QSS3BucketRegion,ParameterValue=${S3QUICKSTARTREGION} \

ParameterKey=QSS3BucketName,ParameterValue="aws-quickstart-XX" \

ParameterKey=QSS3KeyPrefix,ParameterValue="quickstart-amazon-eks/" \

ParameterKey=VPCCIDR,ParameterValue="100.72.0.0/16" \

ParameterKey=PrivateSubnet1CIDR,ParameterValue="100.72.0.0/25" \

ParameterKey=PrivateSubnet2CIDR,ParameterValue="100.72.0.128/25" \

ParameterKey=PrivateSubnet3CIDR,ParameterValue="100.72.1.0/25" \

ParameterKey=PublicSubnet1CIDR,ParameterValue="100.72.1.128/25" \

ParameterKey=PublicSubnet2CIDR,ParameterValue="100.72.2.0/25" \

ParameterKey=PublicSubnet3CIDR,ParameterValue="100.72.2.128/25" \

ParameterKey=NumberOfNodes,ParameterValue="3" \

ParameterKey=MaxNumberOfNodes,ParameterValue="3" \

ParameterKey=EKSPrivateAccessEndpoint,ParameterValue="Enabled" \

ParameterKey=EKSPublicAccessEndpoint,ParameterValue="Enabled"

while [[ $(aws cloudformation describe-stacks --stack-name Amazon-EKS-Contrail-CNI --query "Stacks[].StackStatus" --output text) != "CREATE_COMPLETE" ]];

do

echo "waiting for cloudformation stack Amazon-EKS-Contrail-CNI to complete. This can take up to 45 minutes"

sleep 60

done

echo "All Done"

$ ./eks-ubuntu.sh

{

"StackId": "arn:aws:cloudformation:us-east-1:805369193666:stack/Amazon-EKS-Contrail-CNI/3e6b2c80-1d25-11ed-9c50-0ae926948d21"

}

waiting for cloudformation stack Amazon-EKS-Contrail-CNI to complete. This can take up to 45 minutes

NOTE: The quickstart-amazon-eks (https://github.com/aws-quickstart/quickstart-amazon-eks) bundled with contrail-as-the-cni-for-aws-eks has been modified for Contrail and comes with 4 patch files.

quickstart-amazon-eks provides a large number of CloudFormation EKS template files; the one used here is amazon-eks-master.template.yaml.
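The wait loop in eks-ubuntu.sh only exits on CREATE_COMPLETE. Because the stack is created with `--disable-rollback`, a failed creation stays in CREATE_FAILED and the loop would keep waiting. The sketch below is one way to stop on failure as well. `classify_stack_status` and `wait_for_stack` are hypothetical helpers, and the grouping of statuses into "failed" is an assumption about what counts as terminal for a first-time create.

```shell
# classify_stack_status STATUS
# Hypothetical helper: prints "complete", "failed", or "pending" for a
# CloudFormation stack status seen while creating a stack.
classify_stack_status() {
  case "$1" in
    CREATE_COMPLETE) echo "complete" ;;
    *FAILED|ROLLBACK_*|DELETE_*) echo "failed" ;;
    *) echo "pending" ;;
  esac
}

# wait_for_stack NAME
# Polls once a minute; returns 0 on success and 1 on a terminal failure.
wait_for_stack() {
  while :; do
    status=$(aws cloudformation describe-stacks --stack-name "$1" \
      --query "Stacks[0].StackStatus" --output text) || return 1
    case "$(classify_stack_status "$status")" in
      complete) echo "All Done"; return 0 ;;
      failed)   echo "stack $1 ended in $status" >&2; return 1 ;;
    esac
    echo "waiting for cloudformation stack $1 to complete ($status)"
    sleep 60
  done
}
```

Usage would be `wait_for_stack Amazon-EKS-Contrail-CNI`. The AWS CLI also ships a built-in waiter, `aws cloudformation wait stack-create-complete --stack-name Amazon-EKS-Contrail-CNI`, which may be enough when progress messages are not needed.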

- You can monitor the status of the deployment using this command:

aws cloudformation describe-stacks --stack-name Amazon-EKS-Contrail-CNI --output table | grep StackStatus

|| StackStatus | CREATE_COMPLETE

- Install aws-iam-authenticator and register the cluster in your kubeconfig:

aws sts get-caller-identity

export CLUSTER=$(aws eks list-clusters --output text | awk -F ' ' '{print $2}')

export REGION=$CLOUDFORMATIONREGION

aws eks --region $REGION update-kubeconfig --name $CLUSTER

- From the Kubernetes CLI, verify your cluster parameters

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

ip-100-72-0-19.eu-west-1.compute.internal Ready (none) 19m v1.14.8

ip-100-72-0-210.eu-west-1.compute.internal Ready (none) 19m v1.14.8

ip-100-72-0-44.eu-west-1.compute.internal Ready (none) 19m v1.14.8

ip-100-72-1-124.eu-west-1.compute.internal Ready (none) 19m v1.14.8

ip-100-72-1-53.eu-west-1.compute.internal Ready (none) 19m v1.14.8

$ kubectl get pods -A -o wide

NAMESPACE NAME READY STATUS AGE IP NODE

kube-system aws-node-7gh94 1/1 Running 21m 100.72.1.124 ip-100-72-1-124.eu-west-1.compute.internal

kube-system aws-node-bq2x9 1/1 Running 21m 100.72.1.53 ip-100-72-1-53.eu-west-1.compute.internal

kube-system aws-node-gtdz7 1/1 Running 21m 100.72.0.44 ip-100-72-0-44.eu-west-1.compute.internal

kube-system aws-node-jr4gn 1/1 Running 21m 100.72.0.19 ip-100-72-0-19.eu-west-1.compute.internal

kube-system aws-node-zlrbj 1/1 Running 21m 100.72.0.210 ip-100-72-0-210.eu-west-1.compute.internal

kube-system coredns-6987776bbd-ggsjt 1/1 Running 33m 100.72.0.5 ip-100-72-0-44.eu-west-1.compute.internal

kube-system coredns-6987776bbd-v7ckc 1/1 Running 33m 100.72.1.77 ip-100-72-1-53.eu-west-1.compute.internal

kube-system kube-proxy-k6hdc 1/1 Running 21m 100.72.0.210 ip-100-72-0-210.eu-west-1.compute.internal

kube-system kube-proxy-m59sb 1/1 Running 21m 100.72.0.44 ip-100-72-0-44.eu-west-1.compute.internal

kube-system kube-proxy-qrrqn 1/1 Running 21m 100.72.0.19 ip-100-72-0-19.eu-west-1.compute.internal

kube-system kube-proxy-r2vqw 1/1 Running 21m 100.72.1.53 ip-100-72-1-53.eu-west-1.compute.internal

kube-system kube-proxy-vzkcd 1/1 Running 21m 100.72.1.124 ip-100-72-1-124.eu-west-1.compute.internal

- Upgrade the worker nodes to the latest EKS version:

kubectl apply -f upgrade-nodes.yaml

- After confirming that the EKS version is updated on all nodes, delete the upgrade pods:

kubectl delete -f upgrade-nodes.yaml
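The step above asks you to confirm the version on all nodes before deleting the upgrade pods. A minimal sketch of that check follows. `versions_uniform` is a hypothetical helper; it only verifies that every node reports the same kubelet version and does not know which version is expected.

```shell
# versions_uniform
# Hypothetical helper: reads one kubelet version per line on stdin and
# succeeds only when at least one line is present and all lines are equal.
versions_uniform() {
  count=$(sort -u | grep -c . || true)
  [ "$count" -eq 1 ]
}
```

It can be fed from kubectl, for example `kubectl get nodes -o jsonpath='{range .items[*]}{.status.nodeInfo.kubeletVersion}{"\n"}{end}' | versions_uniform && kubectl delete -f upgrade-nodes.yaml`, so the delete only runs once the nodes agree.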

- Apply the OS fixes for the EC2 worker nodes for Contrail Networking:

kubectl apply -f cni-patches.yaml

- Deploy Contrail Networking as the CNI for EKS

./deploy-me.sh

- Deploy the setup bastion to provide SSH access for worker nodes

. ./setup-bastion.sh

- Run the Contrail setup file to provide a base Contrail Networking configuration:

. ./setup-contrail.sh

- Check Contrail status

$ . ./contrail-status.sh

**************************************

******node is 100.72.0.19

**************************************

###############################################################################

# ___ ______ ___ _ _ ____ _ _ #

# / \ \ / / ___| / _ \ _ _(_) ___| | __ / ___|| |_ __ _ _ __| |_ #

# / _ \ \ /\ / /\___ \ | | | | | | | |/ __| |/ / \___ \| __/ _` | '__| __| #

# / ___ \ V V / ___) | | |_| | |_| | | (__| < ___) | || (_| | | | |_ #

# /_/ \_\_/\_/ |____/ \__\_\\__,_|_|\___|_|\_\ |____/ \__\__,_|_| \__| #

#-----------------------------------------------------------------------------#

# Amazon EKS Quick Start bastion host #

# https://docs.aws.amazon.com/quickstart/latest/amazon-eks-architecture/ #

###############################################################################

Unable to find image 'hub.juniper.net/contrail/contrail-status:2008.121' locally

2008.121: Pulling from contrail/contrail-status

f34b00c7da20: Already exists

5a390a7d68be: Already exists

07ca884ff4ba: Already exists

0d7531696e74: Already exists

eda9dec1319f: Already exists

c52247bf208e: Already exists

a5dc1d3a1a1f: Already exists

0297580c16ad: Already exists

e341bea3e3e5: Pulling fs layer

12584a95f49f: Pulling fs layer

367eed12f241: Pulling fs layer

367eed12f241: Download complete

12584a95f49f: Download complete

e341bea3e3e5: Verifying Checksum

e341bea3e3e5: Download complete

e341bea3e3e5: Pull complete

12584a95f49f: Pull complete

367eed12f241: Pull complete

Digest: sha256:54ba0b280811a45f846d673addd38d4495eec0e7c3a7156e5c0cd556448138a7

Status: Downloaded newer image for hub.juniper.net/contrail/contrail-status:2008.121

Pod Service Original Name Original Version State Id Status

redis contrail-external-redis 2008-121 running bf3a68e58446 Up 9 minutes

analytics api contrail-analytics-api 2008-121 running 4d394a8fa343 Up 9 minutes

analytics collector contrail-analytics-collector 2008-121 running 1772e258b8b4 Up 9 minutes

analytics nodemgr contrail-nodemgr 2008-121 running f7cb3d64ff2d Up 9 minutes

analytics provisioner contrail-provisioner 2008-121 running 4f73934a4744 Up 7 minutes

analytics-alarm alarm-gen contrail-analytics-alarm-gen 2008-121 running 472b5d2fd7dd Up 9 minutes

analytics-alarm kafka contrail-external-kafka 2008-121 running 88641415d540 Up 9 minutes

analytics-alarm nodemgr contrail-nodemgr 2008-121 running 35e75ddd5b6e Up 9 minutes

analytics-alarm provisioner contrail-provisioner 2008-121 running e82526c4d835 Up 7 minutes

analytics-snmp nodemgr contrail-nodemgr 2008-121 running 6883986527fa Up 9 minutes

analytics-snmp provisioner contrail-provisioner 2008-121 running 91c7be2f4ac9 Up 7 minutes

analytics-snmp snmp-collector contrail-analytics-snmp-collector 2008-121 running 342a11ca471e Up 9 minutes

analytics-snmp topology contrail-analytics-snmp-topology 2008-121 running f4fa7aa0d980 Up 9 minutes

config api contrail-controller-config-api 2008-121 running 17093d75ec93 Up 9 minutes

config device-manager contrail-controller-config-devicemgr 2008-121 running f2c11a305851 Up 6 minutes

config nodemgr contrail-nodemgr 2008-121 running 8322869eaf34 Up 9 minutes

config provisioner contrail-provisioner 2008-121 running 3d2618f9a20b Up 7 minutes

config schema contrail-controller-config-schema 2008-121 running e3b7cbff4ef7 Up 6 minutes

config svc-monitor contrail-controller-config-svcmonitor 2008-121 running 49c3a0f44466 Up 6 minutes

config-database cassandra contrail-external-cassandra 2008-121 running 0eb7d5c56612 Up 9 minutes

config-database nodemgr contrail-nodemgr 2008-121 running 8f1bb252f002 Up 9 minutes

config-database provisioner contrail-provisioner 2008-121 running 4b23ff9ad2bc Up 7 minutes

config-database rabbitmq contrail-external-rabbitmq 2008-121 running 22ab5777e1fa Up 9 minutes

config-database zookeeper contrail-external-zookeeper 2008-121 running 5d1e33e545ae Up 9 minutes

control control contrail-controller-control-control 2008-121 running 05e3ac0e4de3 Up 9 minutes

control dns contrail-controller-control-dns 2008-121 running ea24d045f221 Up 9 minutes

control named contrail-controller-control-named 2008-121 running 977ddeb4a636 Up 9 minutes

control nodemgr contrail-nodemgr 2008-121 running 248ae2888c15 Up 9 minutes

control provisioner contrail-provisioner 2008-121 running c666bd178d29 Up 9 minutes

database cassandra contrail-external-cassandra 2008-121 running 9e840c1a5034 Up 9 minutes

database nodemgr contrail-nodemgr 2008-121 running 355984d1689c Up 9 minutes

database provisioner contrail-provisioner 2008-121 running 60d472efb042 Up 7 minutes

database query-engine contrail-analytics-query-engine 2008-121 running fa56e2c7c765 Up 9 minutes

kubernetes kube-manager contrail-kubernetes-kube-manager 2008-121 running 584013153ef8 Up 9 minutes

vrouter agent contrail-vrouter-agent 2008-121 running 7bc5b164ed44 Up 8 minutes

vrouter nodemgr contrail-nodemgr 2008-121 running 5c9201f4308e Up 8 minutes

vrouter provisioner contrail-provisioner 2008-121 running ce9d14aaba89 Up 8 minutes

webui job contrail-controller-webui-job 2008-121 running d92079688dda Up 9 minutes

webui web contrail-controller-webui-web 2008-121 running 8efed46b98d6 Up 9 minutes

vrouter kernel module is PRESENT

== Contrail control ==

control: active

nodemgr: active

named: active

dns: active

== Contrail analytics-alarm ==

nodemgr: active

kafka: active

alarm-gen: active

== Contrail kubernetes ==

kube-manager: active

== Contrail database ==

nodemgr: active

query-engine: active

cassandra: active

== Contrail analytics ==

nodemgr: active

api: active

collector: active

== Contrail config-database ==

nodemgr: active

zookeeper: active

rabbitmq: active

cassandra: active

== Contrail webui ==

web: active

job: active

== Contrail vrouter ==

nodemgr: active

agent: timeout

== Contrail analytics-snmp ==

snmp-collector: active

nodemgr: active

topology: active

== Contrail config ==

svc-monitor: backup

nodemgr: active

device-manager: backup

api: active

schema: backup
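The summary at the end of the status output is long, and a single unhealthy entry such as `agent: timeout` under `== Contrail vrouter ==` is easy to miss. The sketch below filters that summary down to services that are neither `active` nor `backup`. `contrail_unhealthy` is a hypothetical helper, and treating `backup` as healthy is an assumption based on the config services above running in active/backup mode.

```shell
# contrail_unhealthy
# Hypothetical helper: reads contrail-status output on stdin and prints
# "role/service state" for every summary line whose state is neither
# "active" nor "backup".
contrail_unhealthy() {
  awk '
    /^== Contrail .* ==$/ { role = $3; next }   # e.g. "== Contrail vrouter =="
    /^[a-z][a-z-]*: / {                         # e.g. "agent: timeout"
      name = $1
      sub(/:$/, "", name)
      if ($2 != "active" && $2 != "backup") print role "/" name " " $2
    }
  '
}
```

Assuming the output was saved to a file named status.txt, `contrail_unhealthy < status.txt` lists only the entries that need attention.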

- Confirm that the pods are running

$ kubectl get pods -A -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE

kube-system cni-patches-dgjnc 1/1 Running 0 44s 100.72.0.210 ip-100-72-0-210.eu-west-1.compute.internal

kube-system cni-patches-krss8 1/1 Running 0 44s 100.72.1.53 ip-100-72-1-53.eu-west-1.compute.internal

kube-system cni-patches-r9vgj 1/1 Running 0 44s 100.72.1.124 ip-100-72-1-124.eu-west-1.compute.internal

kube-system cni-patches-wcc9p 1/1 Running 0 44s 100.72.0.44 ip-100-72-0-44.eu-west-1.compute.internal

kube-system cni-patches-xqrw8 1/1 Running 0 44s 100.72.0.19 ip-100-72-0-19.eu-west-1.compute.internal

kube-system config-zookeeper-2mspv 1/1 Running 0 16m 100.72.0.19 ip-100-72-0-19.eu-west-1.compute.internal

kube-system config-zookeeper-k65hk 1/1 Running 0 16m 100.72.0.210 ip-100-72-0-210.eu-west-1.compute.internal

kube-system config-zookeeper-nj2qb 1/1 Running 0 16m 100.72.0.44 ip-100-72-0-44.eu-west-1.compute.internal

kube-system contrail-agent-2cqbz 3/3 Running 0 16m 100.72.0.44 ip-100-72-0-44.eu-west-1.compute.internal

kube-system contrail-agent-kbd7v 3/3 Running 0 16m 100.72.1.53 ip-100-72-1-53.eu-west-1.compute.internal

kube-system contrail-agent-kc4gk 3/3 Running 0 16m 100.72.0.210 ip-100-72-0-210.eu-west-1.compute.internal

kube-system contrail-agent-n7shj 3/3 Running 0 16m 100.72.0.19 ip-100-72-0-19.eu-west-1.compute.internal

kube-system contrail-agent-vckdh 3/3 Running 0 16m 100.72.1.124 ip-100-72-1-124.eu-west-1.compute.internal

kube-system contrail-analytics-9llmv 4/4 Running 1 16m 100.72.0.19 ip-100-72-0-19.eu-west-1.compute.internal

kube-system contrail-analytics-alarm-27x47 4/4 Running 1 16m 100.72.0.210 ip-100-72-0-210.eu-west-1.compute.internal

kube-system contrail-analytics-alarm-rzxgv 4/4 Running 1 16m 100.72.0.44 ip-100-72-0-44.eu-west-1.compute.internal

kube-system contrail-analytics-alarm-z6w9k 4/4 Running 1 16m 100.72.0.19 ip-100-72-0-19.eu-west-1.compute.internal

kube-system contrail-analytics-jmjzk 4/4 Running 1 16m 100.72.0.44 ip-100-72-0-44.eu-west-1.compute.internal

kube-system contrail-analytics-snmp-4prpn 4/4 Running 1 16m 100.72.0.44 ip-100-72-0-44.eu-west-1.compute.internal

kube-system contrail-analytics-snmp-s4r4g 4/4 Running 1 16m 100.72.0.19 ip-100-72-0-19.eu-west-1.compute.internal

kube-system contrail-analytics-snmp-z8gxh 4/4 Running 1 16m 100.72.0.210 ip-100-72-0-210.eu-west-1.compute.internal

kube-system contrail-analytics-xbbfz 4/4 Running 1 16m 100.72.0.210 ip-100-72-0-210.eu-west-1.compute.internal

kube-system contrail-analyticsdb-gkcnw 4/4 Running 1 16m 100.72.0.210 ip-100-72-0-210.eu-west-1.compute.internal

kube-system contrail-analyticsdb-k89fl 4/4 Running 1 16m 100.72.0.19 ip-100-72-0-19.eu-west-1.compute.internal

kube-system contrail-analyticsdb-txkb4 4/4 Running 1 16m 100.72.0.44 ip-100-72-0-44.eu-west-1.compute.internal

kube-system contrail-configdb-6hp6v 3/3 Running 1 16m 100.72.0.44 ip-100-72-0-44.eu-west-1.compute.internal

kube-system contrail-configdb-w7sf8 3/3 Running 1 16m 100.72.0.19 ip-100-72-0-19.eu-west-1.compute.internal

kube-system contrail-configdb-wkcpp 3/3 Running 1 16m 100.72.0.210 ip-100-72-0-210.eu-west-1.compute.internal

kube-system contrail-controller-config-h4g7l 6/6 Running 4 16m 100.72.0.19 ip-100-72-0-19.eu-west-1.compute.internal

kube-system contrail-controller-config-pmlcb 6/6 Running 3 16m 100.72.0.210 ip-100-72-0-210.eu-west-1.compute.internal

kube-system contrail-controller-config-vvklq 6/6 Running 3 16m 100.72.0.44 ip-100-72-0-44.eu-west-1.compute.internal

kube-system contrail-controller-control-56d46 5/5 Running 0 16m 100.72.0.210 ip-100-72-0-210.eu-west-1.compute.internal

kube-system contrail-controller-control-t4mrf 5/5 Running 0 16m 100.72.0.19 ip-100-72-0-19.eu-west-1.compute.internal

kube-system contrail-controller-control-wlhzq 5/5 Running 0 16m 100.72.0.44 ip-100-72-0-44.eu-west-1.compute.internal

kube-system contrail-controller-webui-t4bzd 2/2 Running 0 16m 100.72.0.19 ip-100-72-0-19.eu-west-1.compute.internal

kube-system contrail-controller-webui-wkqzz 2/2 Running 0 16m 100.72.0.44 ip-100-72-0-44.eu-west-1.compute.internal

kube-system contrail-controller-webui-wnf4z 2/2 Running 0 16m 100.72.0.210 ip-100-72-0-210.eu-west-1.compute.internal

kube-system contrail-kube-manager-fd6mr 1/1 Running 0 3m23s 100.72.0.44 ip-100-72-0-44.eu-west-1.compute.internal

kube-system contrail-kube-manager-jhl2l 1/1 Running 0 3m33s 100.72.0.210 ip-100-72-0-210.eu-west-1.compute.internal

kube-system contrail-kube-manager-wnmxt 1/1 Running 0 3m23s 100.72.0.19 ip-100-72-0-19.eu-west-1.compute.internal

kube-system coredns-6987776bbd-8vzv9 1/1 Running 0 12m 10.20.0.250 ip-100-72-0-19.eu-west-1.compute.internal

kube-system coredns-6987776bbd-w8h8d 1/1 Running 0 12m 10.20.0.249 ip-100-72-1-124.eu-west-1.compute.internal

kube-system kube-proxy-k6hdc 1/1 Running 1 50m 100.72.0.210 ip-100-72-0-210.eu-west-1.compute.internal

kube-system kube-proxy-m59sb 1/1 Running 1 50m 100.72.0.44 ip-100-72-0-44.eu-west-1.compute.internal

kube-system kube-proxy-qrrqn 1/1 Running 1 50m 100.72.0.19 ip-100-72-0-19.eu-west-1.compute.internal

kube-system kube-proxy-r2vqw 1/1 Running 1 50m 100.72.1.53 ip-100-72-1-53.eu-west-1.compute.internal

kube-system kube-proxy-vzkcd 1/1 Running 1 50m 100.72.1.124 ip-100-72-1-124.eu-west-1.compute.internal

kube-system rabbitmq-754b8 1/1 Running 0 16m 100.72.0.44 ip-100-72-0-44.eu-west-1.compute.internal

kube-system rabbitmq-bclkx 1/1 Running 0 16m 100.72.0.210 ip-100-72-0-210.eu-west-1.compute.internal

kube-system rabbitmq-mk76f 1/1 Running 0 16m 100.72.0.19 ip-100-72-0-19.eu-west-1.compute.internal

kube-system redis-8wr29 1/1 Running 0 16m 100.72.0.19 ip-100-72-0-19.eu-west-1.compute.internal

kube-system redis-kbtmd 1/1 Running 0 16m 100.72.0.44 ip-100-72-0-44.eu-west-1.compute.internal

kube-system redis-rmr8h 1/1 Running 0 16m 100.72.0.210 ip-100-72-0-210.eu-west-1.compute.internal
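With this many pods, scanning the listing by eye is error-prone. The sketch below prints only pods that are not healthy, based on the STATUS and READY columns. `pods_not_ready` is a hypothetical helper and expects the column layout of `kubectl get pods -A --no-headers` (namespace, name, ready, status, ...).

```shell
# pods_not_ready
# Hypothetical helper: reads `kubectl get pods -A --no-headers` output on
# stdin and prints "namespace/name ready status" for pods that are not
# Running or Completed, or that are Running with containers not yet ready.
pods_not_ready() {
  awk '
    { split($3, r, "/") }
    ($4 != "Running" && $4 != "Completed") || ($4 == "Running" && r[1] != r[2]) {
      print $1 "/" $2 " " $3 " " $4
    }
  '
}
```

Run it as `kubectl get pods -A --no-headers | pods_not_ready`; empty output suggests every pod is up.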

- Setup Contrail user interface access.

. ./setup-contrail-ui.sh

- Open the web UI at https://bastion-public-ip-address:8143

NOTE: You may get some BGP alarm messages upon login. These messages occur because sample BGP peering relationships are established with gateway devices and federated clusters. Delete the BGP peers in your environment if you want to clear the alarms.

- Modify the auto scaling groups so that you can stop instances that are not in use.

export SCALINGGROUPS=( $(aws autoscaling describe-auto-scaling-groups --query "AutoScalingGroups[].AutoScalingGroupName" --output text) )

aws autoscaling suspend-processes --auto-scaling-group-name ${SCALINGGROUPS[0]}

aws autoscaling suspend-processes --auto-scaling-group-name ${SCALINGGROUPS[1]}

NOTE: If you plan on deleting stacks at a later time, you will have to reset this configuration and use the resume-processes option before deleting the primary stack:

export SCALINGGROUPS=( $(aws autoscaling describe-auto-scaling-groups --query "AutoScalingGroups[].AutoScalingGroupName" --output text) )

aws autoscaling resume-processes --auto-scaling-group-name ${SCALINGGROUPS[0]}

aws autoscaling resume-processes --auto-scaling-group-name ${SCALINGGROUPS[1]}
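The commands above address the first two groups by array index, which assumes the account has exactly the groups created by this stack. A small loop avoids hard-coding the indexes. This is a sketch only: `asg_processes` and the `AWS` override variable are hypothetical, and you would pass it only the group names that belong to this deployment.

```shell
# AWS is the command used to call the AWS CLI; override it (for example
# with "echo") to preview the calls without making them.
AWS=${AWS:-aws}

# asg_processes ACTION GROUP...
# Hypothetical helper: runs "aws autoscaling ACTION" (suspend-processes or
# resume-processes) for every Auto Scaling group name given.
asg_processes() {
  action=$1
  shift
  for group in "$@"; do
    $AWS autoscaling "$action" --auto-scaling-group-name "$group" || return 1
  done
}
```

For example, `AWS=echo asg_processes suspend-processes group-a group-b` prints the two commands it would run, and dropping `AWS=echo` executes them.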

- If you have a public network that you’d like to use for ingress via a gateway, perform the following configuration steps:

- Enter https://bastion-public-ip-address:8143 to connect to the web user interface.

- Navigate to Configure > Networks > k8s-default > networks (left side of page) > Add network (+)

- In the Add network box, enter the following parameters:

- Name: k8s-public

- Subnet: Select ipv4, then enter the IP address of your public service network.

- Leave all other fields in subnet as default.

- Advanced: tick External

- Advanced: tick Shared

- route target: Click +. Enter a route target for your public network. For example, 64512:1000.

- Click Save.

- Deploy a test application on each node:

cd TestDaemonSet

./Create.sh

kubectl get pods -A -o wide

- Deploy a multitier test application:

cd ../TestApp

./Create.sh

kubectl get deployments -n justlikenetflix

kubectl get pods -o wide -n justlikenetflix

kubectl get services -n justlikenetflix

kubectl get ingress -n justlikenetflix

Source: is-cloud.blog.csdn.net. Author: 范桂飓. Copyright belongs to the original author; contact the author before reproducing.

Original link: is-cloud.blog.csdn.net/article/details/126355271
