七十八、Hive数据仓库实际操作(操作测试)

Hive数据仓库的操作:

- 数据库的创建与删除

- 表的创建,修改,删除

- 表中数据的导入与导出

- 表分区与桶的创建、修改、删除

目录

Hive环境搭建

Hive数据仓库的操作

一、创建数据库

hive> show databases;

OK

default

Time taken: 0.067 seconds, Fetched: 1 row(s)

hive> create database if not exists DB;

OK

Time taken: 0.064 seconds

hive> show databases;

OK

db

default

Time taken: 0.018 seconds, Fetched: 2 row(s)

二、查看数据仓库DB的信息及路径

hive> describe database DB;

OK

db hdfs://master:9000/user/hive/warehouse/db.db root USER

Time taken: 0.065 seconds, Fetched: 1 row(s)

Hive数据表的操作

Hive的数据表分为两种:内部表和外部表。

Hive创建内部表时,会将数据移动到数据仓库指向的路径;若创建外部表,仅记录数据所在的路径,不对数据的位置做任何改变。在删除表的时候,内部表的元数据和数据会被一起删除,而外部表只删除元数据,不删除数据。这样外部表相对来说更加安全些,数据组织也更加灵活,方便共享源数据,生产中常使用外部表。

下面详细介绍对表操作的命令及使用方法:

即将创建的表,表名不能和已有表名重复,否则会报错,现在我们show tables 一下,查看已存在的表。

一、创建一个名为cat的内部表,有两个字段为cat_id和cat_name,字符类型为string

hive> create table cat(cat_id string,cat_name string);

OK

Time taken: 0.72 seconds

hive> show tables;

OK

cat

Time taken: 0.057 seconds, Fetched: 1 row(s)

二、创建一个外部表,表名为cat2,有两个字段为cat_id和cat_name,字符类型为string

hive> create external table if not exists goods(group_id string,group_name string) row format delimited fields terminated by '\t' location '/user/root/goods';

OK

Time taken: 0.155 seconds

hive> show tables;

OK

cat

goods

Time taken: 0.026 seconds, Fetched: 2 row(s)

三、修改cat表的表结构。对cat表添加两个字段group_id和cat_code

hive> alter table cat add columns(group_id string,cat_code string);

OK

Time taken: 0.372 seconds

hive> desc cat;

OK

cat_id string

cat_name string

group_id string

cat_code string

Time taken: 0.087 seconds, Fetched: 4 row(s)

四、修改表名cat为cat2

hive> alter table cat rename to cat2;

OK

Time taken: 0.275 seconds

这个命令可以让用户为表更名,数据所在的位置和分区名并不改变。

五、创建与已知表相同结构的表,创建一个与cat表结构相同的表,名为cat3,这里要

用到 like 关键字

hive> create table cat3 like cat2;

OK

Time taken: 1.391 seconds

hive> show tables;

OK

cat2

cat3

goods

Time taken: 0.047 seconds, Fetched: 3 row(s)

hive> desc cat3;

OK

cat_id string

cat_name string

group_id string

cat_code string

Time taken: 0.118 seconds, Fetched: 4 row(s)

Hive中数据的导入与导出

一、从本地文件系统中导入数据到Hive表

首先,在Hive 中创建一个cat_group表,包含group_id和group_name两个字段,字符类型为string,以“\t”为分隔符,并查看结果。

hive> create table cat_group(group_id string,group_name string) row format delimited fields terminated by '\t' stored as textfile;

OK

Time taken: 0.218 seconds

hive> show tables;

OK

cat2

cat3

cat_group

goods

Time taken: 0.048 seconds, Fetched: 4 row(s)

[row format delimited]关键字,是用来设置创建的表在加载数据的时候,支持的列分隔符。

[stored as textfile]关键字,是用来设置加载数据的数据类型,默认是 TEXTFILE,如果文件数据是纯文本,就是使用[stored as textfile],然后从本地直接拷贝到HDFS上,Hive直接可以识别数据。

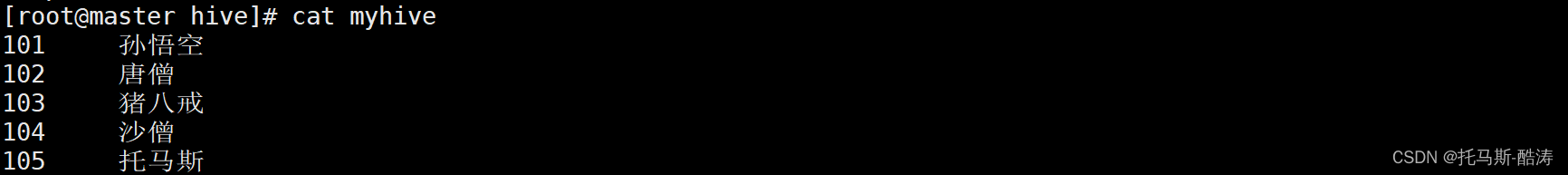

二、将Linux本地 /input/hive/目录下的myhive 文件导入到 Hive 中的cat_group表中

hive> load data local inpath '/input/hive/myhive' into table cat_group;

Loading data to table db.cat_group

OK

Time taken: 1.081 seconds

通过select语句查看cat_group表中是否成功导入数据,使用limit关键字限制输出5条记录。

hive> select * from cat_group limit 5;

OK

101 孙悟空

102 唐僧

103 猪八戒

104 沙僧

105 托马斯

Time taken: 2.088 seconds, Fetched: 5 row(s)

三、将HDFS中的数据导入到Hive中

1、首先,另外开启一个操作窗口,在HDFS上创建/output/hive目录

[root@master hive]# hadoop fs -mkdir /output/hive2、将本地/iutput/hive/下的myhive文件上传到HDFS的/output/hive上,并查看是否创建成功

[root@master hive]# hadoop fs -put /input/hive/myhive /output/hive/

[root@master hive]# hadoop fs -ls /output/hive

Found 1 items

-rw-r--r-- 2 root supergroup 64 2022-03-05 22:19 /output/hive/myhive

3、在Hive 中创建名为cat_group1的表,创表语句如下

hive> create table cat_group1(group_id string,group_name string)

> row format delimited fields terminated by '\t' stored as textfile;

OK

Time taken: 0.243 seconds

4、将HDFS 下/output/hive 中的表cat_group导入到Hive 中的cat_group1表中,并查看结果

hive> load data inpath '/output/hive/myhive' into table cat_group1;

Loading data to table db.cat_group1

OK

Time taken: 0.539 seconds

hive> select * from cat_group1 limit 5;

OK

101 孙悟空

102 唐僧

103 猪八戒

104 沙僧

105 托马斯

Time taken: 0.262 seconds, Fetched: 5 row(s)

注:数据导入成功。

HDFS中数据导入到 Hive中与本地数据导入到 hive中的区别是load data后少了local。

四、从别的表中查询出相应的数据并导入到Hive中

1、首先在Hive 中创建一个名为cat_group2的表。

hive> create table cat_group2(group_id string,group_name string)

> row format delimited fields terminated by '\t' stored as textfile;

OK

Time taken: 0.111 seconds

2、用下面两种方式将cat_group1表中的数据导入到cat_group2表中。

hive> insert into table cat_group2 select * from cat_group1;

Query ID = root_20220306040659_42572420-db7d-4412-bbc3-495abd9ce479

Total jobs = 3

Launching Job 1 out of 3

Number of reduce tasks determined at compile time: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

Starting Job = job_1646528951444_0003, Tracking URL = http://master:8088/proxy/application_1646528951444_0003/

Kill Command = /home/hadoop//bin/mapred job -kill job_1646528951444_0003

Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 1

2022-03-06 04:07:31,799 Stage-1 map = 0%, reduce = 0%

2022-03-06 04:07:51,642 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 1.89 sec

2022-03-06 04:08:00,165 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 3.47 sec

MapReduce Total cumulative CPU time: 3 seconds 470 msec

Ended Job = job_1646528951444_0003

Stage-4 is selected by condition resolver.

Stage-3 is filtered out by condition resolver.

Stage-5 is filtered out by condition resolver.

Moving data to directory hdfs://master:9000/user/hive/warehouse/db.db/cat_group2/.hive-staging_hive_2022-03-06_04-06-59_043_3456913091663343579-1/-ext-10000

Loading data to table db.cat_group2

MapReduce Jobs Launched:

Stage-Stage-1: Map: 1 Reduce: 1 Cumulative CPU: 3.47 sec HDFS Read: 13409 HDFS Write: 348 SUCCESS

Total MapReduce CPU Time Spent: 3 seconds 470 msec

OK

Time taken: 63.711 seconds

hive> insert overwrite table cat_group2 select * from cat_group1;

Query ID = root_20220306041024_bf920fd1-b42d-4ed7-ad7b-66955905fa19

Total jobs = 3

Launching Job 1 out of 3

Number of reduce tasks determined at compile time: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

Starting Job = job_1646528951444_0004, Tracking URL = http://master:8088/proxy/application_1646528951444_0004/

Kill Command = /home/hadoop//bin/mapred job -kill job_1646528951444_0004

Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 1

2022-03-06 04:10:47,981 Stage-1 map = 0%, reduce = 0%

2022-03-06 04:11:12,568 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.33 sec

2022-03-06 04:11:22,231 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 4.1 sec

MapReduce Total cumulative CPU time: 4 seconds 100 msec

Ended Job = job_1646528951444_0004

Stage-4 is selected by condition resolver.

Stage-3 is filtered out by condition resolver.

Stage-5 is filtered out by condition resolver.

Moving data to directory hdfs://master:9000/user/hive/warehouse/db.db/cat_group2/.hive-staging_hive_2022-03-06_04-10-24_167_6531779411761470258-1/-ext-10000

Loading data to table db.cat_group2

MapReduce Jobs Launched:

Stage-Stage-1: Map: 1 Reduce: 1 Cumulative CPU: 4.1 sec HDFS Read: 13494 HDFS Write: 348 SUCCESS

Total MapReduce CPU Time Spent: 4 seconds 100 msec

OK

Time taken: 60.895 seconds

注:insert overwrite会覆盖数据

3、查询表 cat_group2

hive> select * from cat_group2 limit 5;

OK

101 孙悟空

102 唐僧

103 猪八戒

104 沙僧

105 托马斯

Time taken: 0.33 seconds, Fetched: 5 row(s)

4、在创建表的时候从别的表中查询出相应数据并插入到所创建的表中

Hive中创建表cat_group3并直接从cat_group2中获得数据。

hive> create table cat_group3 as select * from cat_group2;

Query ID = root_20220306041630_3200b863-b9b3-4c2e-ac0d-c7caff9b6611

Total jobs = 3

Launching Job 1 out of 3

Number of reduce tasks is set to 0 since there's no reduce operator

Starting Job = job_1646528951444_0005, Tracking URL = http://master:8088/proxy/application_1646528951444_0005/

Kill Command = /home/hadoop//bin/mapred job -kill job_1646528951444_0005

Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 0

2022-03-06 04:16:54,438 Stage-1 map = 0%, reduce = 0%

2022-03-06 04:17:02,430 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 1.58 sec

MapReduce Total cumulative CPU time: 1 seconds 580 msec

Ended Job = job_1646528951444_0005

Stage-4 is selected by condition resolver.

Stage-3 is filtered out by condition resolver.

Stage-5 is filtered out by condition resolver.

Moving data to directory hdfs://master:9000/user/hive/warehouse/db.db/.hive-staging_hive_2022-03-06_04-16-30_327_7813330832683742274-1/-ext-10002

Moving data to directory hdfs://master:9000/user/hive/warehouse/db.db/cat_group3

MapReduce Jobs Launched:

Stage-Stage-1: Map: 1 Cumulative CPU: 1.58 sec HDFS Read: 4969 HDFS Write: 133 SUCCESS

Total MapReduce CPU Time Spent: 1 seconds 580 msec

OK

Time taken: 34.65 seconds

5、查询表 cat_group3

hive> select * from cat_group3 limit 5;

OK

101 孙悟空

102 唐僧

103 猪八戒

104 沙僧

105 托马斯

Time taken: 0.229 seconds, Fetched: 5 row(s)

五、常见的三种数据导出方式

1、导出到本地文件系统

在本地创建目录 /output/hive 并将Hive中的cat_group表导出到本地文件系统/output/hive/中。

[root@master hive]# mkdir -p /output/hive/

hive> insert overwrite local directory '/output/hive/'

> row format delimited fields terminated by '\t' select * from cat_group;

Query ID = root_20220306062829_b059a3f5-e4ad-4dd7-a000-e294c4ccbee2

Total jobs = 1

Launching Job 1 out of 1

Number of reduce tasks is set to 0 since there's no reduce operator

Starting Job = job_1646528951444_0006, Tracking URL = http://master:8088/proxy/application_1646528951444_0006/

Kill Command = /home/hadoop//bin/mapred job -kill job_1646528951444_0006

Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 0

2022-03-06 06:28:51,743 Stage-1 map = 0%, reduce = 0%

2022-03-06 06:29:00,515 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 1.59 sec

MapReduce Total cumulative CPU time: 1 seconds 590 msec

Ended Job = job_1646528951444_0006

Moving data to local directory /output/hive

MapReduce Jobs Launched:

Stage-Stage-1: Map: 1 Cumulative CPU: 1.59 sec HDFS Read: 4738 HDFS Write: 64 SUCCESS

Total MapReduce CPU Time Spent: 1 seconds 590 msec

OK

Time taken: 32.116 seconds

[root@master out]# cd /output/hive/

[root@master hive]# ll

total 4

-rw-r--r--. 1 root root 64 Mar 6 06:29 000000_0

[root@master hive]# cat 000000_0

101 孙悟空

102 唐僧

103 猪八戒

104 沙僧

105 托马斯

注意:方法和导入数据到 Hive不一样,不能用insert into来将数据导出。

2、Hive中数据导出到HDFS中

将Hive中的表cat_group中的数据导入到HDFS的/output/hive目录里。

hive> insert overwrite directory '/output/hive'

> row format delimited fields terminated by '\t' select group_id,

> group_name from cat_group;

Query ID = root_20220306063621_b359d338-77ee-4571-a425-5415f9c6fb03

Total jobs = 3

Launching Job 1 out of 3

Number of reduce tasks is set to 0 since there's no reduce operator

Starting Job = job_1646528951444_0007, Tracking URL = http://master:8088/proxy/application_1646528951444_0007/

Kill Command = /home/hadoop//bin/mapred job -kill job_1646528951444_0007

Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 0

2022-03-06 06:36:41,866 Stage-1 map = 0%, reduce = 0%

2022-03-06 06:36:55,679 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 1.75 sec

MapReduce Total cumulative CPU time: 1 seconds 750 msec

Ended Job = job_1646528951444_0007

Stage-3 is selected by condition resolver.

Stage-2 is filtered out by condition resolver.

Stage-4 is filtered out by condition resolver.

Moving data to directory hdfs://master:9000/output/hive/.hive-staging_hive_2022-03-06_06-36-21_452_7432529204143275493-1/-ext-10000

Moving data to directory /output/hive

MapReduce Jobs Launched:

Stage-Stage-1: Map: 1 Cumulative CPU: 1.75 sec HDFS Read: 4772 HDFS Write: 64 SUCCESS

Total MapReduce CPU Time Spent: 1 seconds 750 msec

OK

Time taken: 36.494 seconds

在HDFS上查看结果

[root@master hive]# hadoop fs -ls /output/hive

Found 1 items

-rw-r--r-- 2 root supergroup 64 2022-03-06 06:36 /output/hive/000000_0

3、导出到Hive的另一张表中

将Hive中表cat_group中的数据导入到cat_group4中(两表字段及字符类型相同)。

首先在Hive 中创建一个表cat_group4,有group_id和group_name 两个字段,字符类型为string,以\t’为分隔符。

hive> create table cat_group4(group_id string,group_name string)

> row format delimited fields terminated by '\t' stored as textfile;

OK

Time taken: 0.195 seconds

然后将cat_group中的数据导入到cat_group4中。

hive> insert into table cat_group4 select * from cat_group;

Query ID = root_20220306064421_722364dd-7475-4ae5-ba44-553f3df856e2

Total jobs = 3

Launching Job 1 out of 3

Number of reduce tasks determined at compile time: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

Starting Job = job_1646528951444_0008, Tracking URL = http://master:8088/proxy/application_1646528951444_0008/

Kill Command = /home/hadoop//bin/mapred job -kill job_1646528951444_0008

Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 1

2022-03-06 06:44:47,514 Stage-1 map = 0%, reduce = 0%

2022-03-06 06:44:58,359 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 1.74 sec

2022-03-06 06:45:11,880 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 3.4 sec

MapReduce Total cumulative CPU time: 3 seconds 400 msec

Ended Job = job_1646528951444_0008

Stage-4 is selected by condition resolver.

Stage-3 is filtered out by condition resolver.

Stage-5 is filtered out by condition resolver.

Moving data to directory hdfs://master:9000/user/hive/warehouse/db.db/cat_group4/.hive-staging_hive_2022-03-06_06-44-21_318_6696628966307745769-1/-ext-10000

Loading data to table db.cat_group4

MapReduce Jobs Launched:

Stage-Stage-1: Map: 1 Reduce: 1 Cumulative CPU: 3.4 sec HDFS Read: 13474 HDFS Write: 348 SUCCESS

Total MapReduce CPU Time Spent: 3 seconds 400 msec

OK

Time taken: 52.617 seconds

导入完成后,查看cat_group4表中数据。

hive> select * from cat_group4 limit 10;

OK

101 孙悟空

102 唐僧

103 猪八戒

104 沙僧

105 托马斯

Time taken: 0.249 seconds, Fetched: 5 row(s)

六、Hive分区表的操作

创建表分区,在 Hive中创建一个分区表goods,包含 goods_id和goods_status两个字段,字符类型为string,分区为cat_id,字符类型为string,以“\t“为分隔符。

hive> create table goods(goods_id string,goods_status string) partitioned by (cat_id string)

> row format delimited fields terminated by '\t';

OK

Time taken: 0.107 seconds

查看表 goods 结构

hive> desc goods;

OK

goods_id string

goods_status string

cat_id string

# Partition Information

# col_name data_type comment

cat_id string

Time taken: 0.108 seconds, Fetched: 7 row(s)

向分区表插入数据,将本地/output/hive下的表goods 中数据,插入到分区表goods中。

[root@master hive]# cat goods

1020405 6 52052

1020405 6 52052

1020405 6 52052

1020405 6 52052

1020405 6 52052

1020405 6 52052

1020405 6 52052

1020405 6 52052

1020405 6 52052

1020405 6 52052在Hive中创建一个非分区表goods_1表,用于存储本地/input/hive/下的表goods 中数据。

hive> create table goods_1(goods_id string,goods_status string,cat_id string)

> row format delimited fields terminated by '\t';

OK

Time taken: 0.179 seconds

将本地/input/hive/下的表goods 中数据导入到Hive中的goods_1表中。

hive> load data local inpath '/input/hive/goods' into table goods_1;

Loading data to table db.goods_1

OK

Time taken: 0.511 seconds

再将表goods_1中的数据导入到分区表goods中

hive> insert into table db.goods partition(cat_id = '52052') select goods_id, goods_status from db.goods_1 where cat_id = '52052';

Query ID = root_20220307041832_30f47fc3-629d-4eda-821a-5f0c3a9edb0d

Total jobs = 3

Launching Job 1 out of 3

Number of reduce tasks not specified. Estimated from input data size: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

Starting Job = job_1646636256603_0002, Tracking URL = http://master:8088/proxy/application_1646636256603_0002/

Kill Command = /home/hadoop//bin/mapred job -kill job_1646636256603_0002

Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 1

2022-03-07 04:19:05,274 Stage-1 map = 0%, reduce = 0%

2022-03-07 04:19:18,487 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.77 sec

2022-03-07 04:19:27,292 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 4.59 sec

MapReduce Total cumulative CPU time: 4 seconds 590 msec

Ended Job = job_1646636256603_0002

Stage-4 is selected by condition resolver.

Stage-3 is filtered out by condition resolver.

Stage-5 is filtered out by condition resolver.

Moving data to directory hdfs://master:9000/user/hive/warehouse/db.db/goods/cat_id=52052/.hive-staging_hive_2022-03-07_04-18-32_060_6446641423854979060-1/-ext-10000

Loading data to table db.goods partition (cat_id=52052)

MapReduce Jobs Launched:

Stage-Stage-1: Map: 1 Reduce: 1 Cumulative CPU: 4.59 sec HDFS Read: 14777 HDFS Write: 320 SUCCESS

Total MapReduce CPU Time Spent: 4 seconds 590 msec

OK

Time taken: 59.931 seconds

查看表 goods中数据

hive> select goods_id, goods_status from goods;

OK

1624123 6

1020405 6

1020405 6

1020405 6

1020405 6

1020405 6

1020405 6

1020405 6

Time taken: 0.252 seconds, Fetched: 8 row(s)

修改表分区,将分区表goods中的分区列 cat_id = 52050 改为cat_id = 52051,并查看修改后的分区名。

hive> alter table goods partition(cat_id=52052) rename to partition(cat_id=52051);

OK

Time taken: 0.678 seconds

hive> show partitions goods;

OK

cat_id=52051

Time taken: 0.139 seconds, Fetched: 1 row(s)

删除表分区

在删除goods分区表之前,先将goods表备份出一个goods_2表

hive> create table goods_2(goods_id string,goods_status string) partitioned by (cat_id string) row format delimited fields terminated by '\t';

OK

Time taken: 0.178 seconds

hive> insert into table goods_2 partition(cat_id='52052') select goods_id,goods_status from goods_1 where cat_id = '52052';

Query ID = root_20220307054238_db58a379-17f6-4ecb-86e0-402e0d7bbf54

Total jobs = 3

Launching Job 1 out of 3

Number of reduce tasks not specified. Estimated from input data size: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

Starting Job = job_1646636256603_0003, Tracking URL = http://master:8088/proxy/application_1646636256603_0003/

Kill Command = /home/hadoop//bin/mapred job -kill job_1646636256603_0003

Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 1

2022-03-07 05:43:04,534 Stage-1 map = 0%, reduce = 0%

2022-03-07 05:43:17,542 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.76 sec

2022-03-07 05:43:26,197 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 4.55 sec

MapReduce Total cumulative CPU time: 4 seconds 550 msec

Ended Job = job_1646636256603_0003

Stage-4 is selected by condition resolver.

Stage-3 is filtered out by condition resolver.

Stage-5 is filtered out by condition resolver.

Moving data to directory hdfs://master:9000/user/hive/warehouse/db.db/goods_2/cat_id=52052/.hive-staging_hive_2022-03-07_05-42-38_498_2225361888387483704-1/-ext-10000

Loading data to table db.goods_2 partition (cat_id=52052)

MapReduce Jobs Launched:

Stage-Stage-1: Map: 1 Reduce: 1 Cumulative CPU: 4.55 sec HDFS Read: 14813 HDFS Write: 322 SUCCESS

Total MapReduce CPU Time Spent: 4 seconds 550 msec

OK

Time taken: 49.84 seconds

删除goods表中的cat_id 分区

hive> alter table goods drop if exists partition(cat_id = '52051');

Dropped the partition cat_id=52051

OK

Time taken: 0.405 seconds

hive> show partitions goods;

OK

Time taken: 0.137 seconds

七、Hive桶的操作

在建立桶之前,需要设置hive.enforce.bucketing属性为true,使用Hive能识别桶。

1、创建桶

创建一个名为goods_t表,包含两个字段goods_id 和goods_status ,字段类型都为string ,按cat_id string 做分区,按goods_status 列聚类和goods_id列排列,划分成两个桶。

hive> create table goods_t(goods_id string, goods_status string) partitioned by (cat_id string) clustered by(goods_status) sorted by(goods_id) into 2 buckets;

OK

Time taken: 0.148 seconds

2、设置环境变量set hive.enforce.bucketing=true;

hive> set hive.enforce.bucketing=true;3、向goods_t表中插入goods_2表中的数据

hive> insert overwrite table goods_t partition(cat_id='52063') select goods_id,goods_status from goods_2;

Query ID = root_20220307060336_c76fa90c-ea59-4fa4-9dd5-654c843421fd

Total jobs = 2

Launching Job 1 out of 2

Number of reduce tasks determined at compile time: 2

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

Starting Job = job_1646636256603_0004, Tracking URL = http://master:8088/proxy/application_1646636256603_0004/

Kill Command = /home/hadoop//bin/mapred job -kill job_1646636256603_0004

Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 2

2022-03-07 06:04:01,531 Stage-1 map = 0%, reduce = 0%

2022-03-07 06:04:12,389 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 1.73 sec

2022-03-07 06:04:29,170 Stage-1 map = 100%, reduce = 50%, Cumulative CPU 4.23 sec

2022-03-07 06:04:30,371 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 6.99 sec

MapReduce Total cumulative CPU time: 7 seconds 410 msec

Ended Job = job_1646636256603_0004

Loading data to table db.goods_t partition (cat_id=52063)

Launching Job 2 out of 2

Number of reduce tasks not specified. Estimated from input data size: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

Starting Job = job_1646636256603_0005, Tracking URL = http://master:8088/proxy/application_1646636256603_0005/

Kill Command = /home/hadoop//bin/mapred job -kill job_1646636256603_0005

Hadoop job information for Stage-3: number of mappers: 1; number of reducers: 1

2022-03-07 06:04:54,726 Stage-3 map = 0%, reduce = 0%

2022-03-07 06:05:07,008 Stage-3 map = 100%, reduce = 0%, Cumulative CPU 1.75 sec

2022-03-07 06:05:16,566 Stage-3 map = 100%, reduce = 100%, Cumulative CPU 3.93 sec

MapReduce Total cumulative CPU time: 3 seconds 930 msec

Ended Job = job_1646636256603_0005

MapReduce Jobs Launched:

Stage-Stage-1: Map: 1 Reduce: 2 Cumulative CPU: 7.41 sec HDFS Read: 19414 HDFS Write: 469 SUCCESS

Stage-Stage-3: Map: 1 Reduce: 1 Cumulative CPU: 3.93 sec HDFS Read: 11591 HDFS Write: 173 SUCCESS

Total MapReduce CPU Time Spent: 11 seconds 340 msec

OK

Time taken: 102.151 seconds

4、抽样桶表

hive> select * from goods_t tablesample(bucket 1 out of 2 on goods_status);

OK

Time taken: 0.281 seconds

文章来源: tuomasi.blog.csdn.net,作者:托马斯-酷涛,版权归原作者所有,如需转载,请联系作者。

原文链接:tuomasi.blog.csdn.net/article/details/123310024

- 点赞

- 收藏

- 关注作者

评论(0)