flink问题集锦

报错一:

Could not get job jar and dependencies from JAR file: JAR file does not exist: -yn

原因:flink1.8版本之后已弃用该参数,ResourceManager将自动启动所需的尽可能多的容器,以满足作业请求的并行性。解决方法:去掉即可

报错二:

java.lang.IllegalStateException: No Executor found. Please make sure to export the HADOOP_CLASSPATH environment variable or have hadoop in your classpath.

方法1:

配置环境变量

export HADOOP_CLASSPATH=`hadoop classpath`

- 1

方法2:

下载对应版本 flink-shaded-hadoop-2-uber,放到flink的lib目录下

报错三:

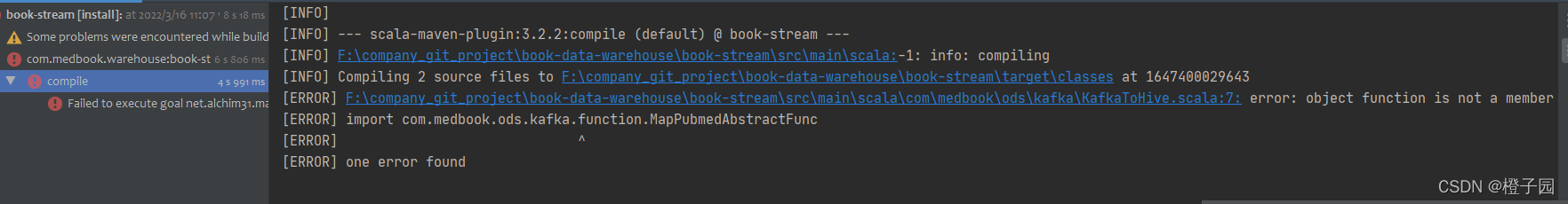

Failed to execute goal net.alchim31.maven:scala-maven-plugin:3.2.2:compile (default) on project book-stream: wrap: org.apache.commons.exec.ExecuteException: Process exited with an error: 1 (Exit value: 1)

产生这个问题的原因有很多,重要的是查看error报错的信息,我这边主要是scala中调用了java的方法,但build时只指定了打包scala的资源,所以会找不到类报错,下面是build出错的行,把它注释掉、删掉,不指定sourceDirectory,所有的sourceDirectory都会打包进去就可解决。

<sourceDirectory>src/main/scala</sourceDirectory>

- 1

报错四:

org.apache.flink.client.program.ProgramInvocationException: The main method caused an error: Could not find a suitable table factory for ‘org.apache.flink.table.planner.delegation.ParserFactory’ in

the classpath.

这个错误也是因为打包时候没有将依赖打包进去、或者需要将依赖放到flink的lib目录下

maven换成了如下的build 的pulgin

<build>

<plugins>

<plugin>

<groupId>org.scala-tools</groupId>

<artifactId>maven-scala-plugin</artifactId>

<version>2.15.2</version>

<executions>

<execution>

<goals>

<goal>compile</goal>

<goal>testCompile</goal>

</goals>

</execution>

</executions>

</plugin>

<plugin>

<artifactId>maven-compiler-plugin</artifactId>

<version>3.6.0</version>

<configuration>

<source>1.8</source>

<target>1.8</target>

</configuration>

</plugin>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-surefire-plugin</artifactId>

<version>2.19</version>

<configuration>

<skip>true</skip>

</configuration>

</plugin>

</plugins>

</build>

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

警告五:

Multiple versions of scala libraries detected!

Expected all dependencies to require Scala version: 2.11.12

org.apache.flink:flink-runtime_2.11:1.13.2 requires scala version: 2.11.12

org.apache.flink:flink-scala_2.11:1.13.2 requires scala version: 2.11.12

org.apache.flink:flink-scala_2.11:1.13.2 requires scala version: 2.11.12

org.scala-lang:scala-reflect:2.11.12 requires scala version: 2.11.12

org.apache.flink:flink-streaming-scala_2.11:1.13.2 requires scala version: 2.11.12

org.apache.flink:flink-streaming-scala_2.11:1.13.2 requires scala version: 2.11.12

org.scala-lang:scala-compiler:2.11.12 requires scala version: 2.11.12

org.scala-lang.modules:scala-xml_2.11:1.0.5 requires scala version: 2.11.7

这是由于scala-maven-plugin打包插件版本低的问题

Starting from Scala 2.10 all changes in bugfix/patch version should be backward compatible, so these warnings don’t really have the point in this case. But they are still very important in case when, let’s say, you somehow end up with scala 2.9 and 2.11 libraries. It happens that since version 3.1.6 you can fix this using scalaCompatVersion configuration

方法1:指定scalaCompatVersion一样的版本

<configuration>

<scalaCompatVersion>${scala.binary.version}</scalaCompatVersion> <scalaVersion>${scala.version}</scalaVersion>

</configuration>

- 1

- 2

- 3

下面是完整的

<plugin>

<groupId>net.alchim31.maven</groupId>

<artifactId>scala-maven-plugin</artifactId>

<version>3.1.6</version>

<configuration>

<scalaCompatVersion>${scala.binary.version}</scalaCompatVersion> <scalaVersion>${scala.binary.version}</scalaVersion>

</configuration>

<executions>

<execution>

<goals>

<goal>compile</goal>

</goals>

</execution>

</executions>

</plugin>

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

方法2:打包插件换成4.x的版本

<plugin>

<groupId>net.alchim31.maven</groupId>

<artifactId>scala-maven-plugin</artifactId>

<version>4.2.0</version>

<executions>

<execution>

<goals>

<goal>compile</goal>

</goals>

</execution>

</executions>

</plugin>

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

文章来源: blog.csdn.net,作者:橙子园,版权归原作者所有,如需转载,请联系作者。

原文链接:blog.csdn.net/Chenftli/article/details/123581749

- 点赞

- 收藏

- 关注作者

评论(0)