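金鱼哥's RHCA Memoirs: RH236, Creating Different Types of Volumes

Having finished Chapter 3 on basic GlusterFS configuration, we move on to Chapter 4: creating different types of volumes. Each volume type serves a different purpose and behaves differently, so take the time to understand what each one is for.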

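🎹 About me: Hi everyone, I'm 金鱼哥, a CSDN rising-star creator in the operations field, a Huawei Cloud Yunxiang Expert (云享专家), and an expert blogger in the Alibaba Cloud community.

📚 Qualifications: CCNA, HCNP, CSNA (network analyst), 软考 junior and intermediate network engineer, RHCSA, RHCE, RHCA, RHCI, ITIL 😜

💬 Motto: Hard work does not guarantee success, but success is impossible without hard work 🔥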

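The volume configured in the exercises and labs so far is the default distributed volume. GlusterFS supports several volume types: 4 basic types plus a number of composite types, shown below: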

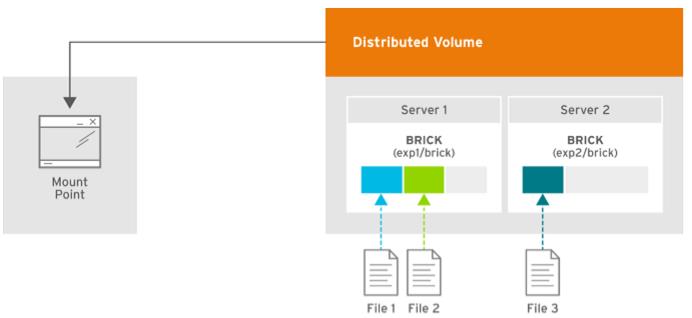

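Distributed volume

This is the default: if no type is specified, a distributed volume is created. A distributed volume keeps only one copy of the data, and each file is placed on a single brick chosen by a hash of its file name. There is therefore no redundancy: if a brick goes down, the files stored on it become unavailable. The architecture is shown below: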

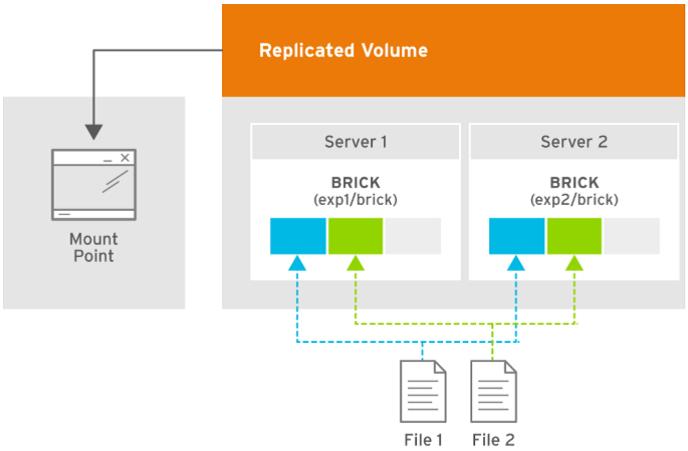
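GlusterFS's distribute layer picks the brick by hashing the file name, so the choice looks random but is repeatable. The toy sketch below illustrates only that idea. It is not GlusterFS's actual algorithm (the real distribute translator uses its own hash and per-directory hash ranges); `cksum` and the helper name `pick_brick` are stand-ins chosen for this illustration.

```shell
#!/bin/bash
# Toy illustration only: map a file name to one of N bricks by hashing the name.
# cksum stands in for the real hash; pick_brick is a hypothetical helper.
pick_brick() {
    local name=$1 brick_count=$2
    local sum
    # cksum prints "<checksum> <byte count>"; keep only the checksum.
    sum=$(printf '%s' "$name" | cksum | cut -d' ' -f1)
    echo $(( sum % brick_count ))
}

# Each file lands on exactly one of the 2 bricks, and always the same one.
for f in a.txt b.txt c.txt; do
    echo "$f -> brick $(pick_brick "$f" 2)"
done
```

Because the result depends only on the name, any client can locate a file without asking a central metadata server.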

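Replicate volume

Data is copied to multiple bricks; the number of copies is specified when the volume is created.

Clients do not need to care where the data is actually stored.

The usable capacity of this type is only the size of a single brick.

gluster volume create vol2 replica 2 \
node1:/brick/brick2/brick \
node2:/brick/brick2/brick

# Creates a replicate volume with 2 copies

gluster volume status vol2

# The output includes an entry called Self-heal Daemon. This process performs self-healing, i.e. it brings the replicas back in sync.

Architecture diagram: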

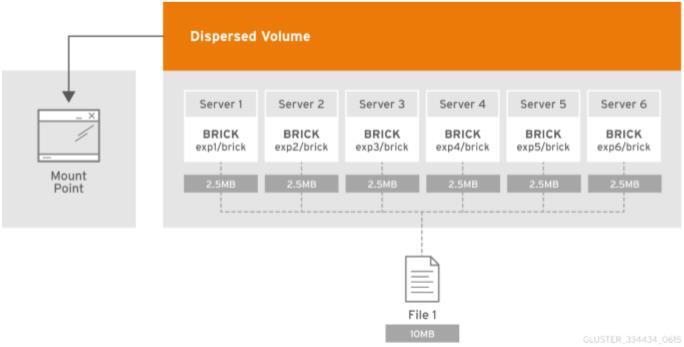

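Dispersed volume

Similar to RAID 5: data is split into fragments that are spread across the bricks, with the equivalent of one brick used for parity, so one brick can go down. This saves more space than the replicate type. The number of bricks needed depends on the redundancy level:

- 6 bricks: redundancy can be set to 2 (4+2)

- 11 bricks: redundancy can be set to 3 (8+3)

- 12 or more bricks: redundancy can be set to 4 (8+4)

For example, with 6 bricks, 2 of them used for parity, each piece of data is split into 4 fragments for storage, and up to 2 bricks may go down:

gluster volume create vol4 disperse-data 4 redundancy 2 \
node1:/brick/brick4/brick \
node2:/brick/brick4/brick \
node3:/brick/brick4/brick \
node4:/brick/brick4/brick \
node5:/brick/brick4/brick \
node6:/brick/brick4/brick

Note 1: To put multiple bricks from the same server into the same volume, add the force option.

Note 2: Two parameters of create control the fragment count: disperse and disperse-data. The former sets the total number of fragments, including the parity fragments; the latter sets only the number of fragments the original data is split into, excluding the redundancy fragments. So for the configuration above (6 bricks, 2 for parity, data split into 4 fragments), the equivalent command using disperse is: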

gluster volume create vol4 disperse 6 redundancy 2 \
node1:/brick/brick4/brick \
node2:/brick/brick4/brick \
node3:/brick/brick4/brick \
node4:/brick/brick4/brick \
node5:/brick/brick4/brick \
node6:/brick/brick4/brick
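The relationship between the two options is plain arithmetic: disperse = disperse-data + redundancy. The sketch below derives the data-fragment count from the total. The helper name `disperse_data_count` is made up for this post, and the validity check reflects the rule, as I understand it from the GlusterFS documentation, that the total brick count must be greater than twice the redundancy.

```shell
#!/bin/bash
# Hypothetical helper: given the total bricks in one disperse set (the value
# passed to "disperse") and the redundancy, print the matching "disperse-data".
disperse_data_count() {
    local total=$1 redundancy=$2
    # Assumed rule: redundancy >= 1 and total > 2 * redundancy.
    if (( redundancy < 1 || total <= 2 * redundancy )); then
        echo "invalid: need redundancy >= 1 and total > 2 * redundancy" >&2
        return 1
    fi
    echo $(( total - redundancy ))
}

disperse_data_count 6 2    # 4+2 layout, prints 4
disperse_data_count 11 3   # 8+3 layout, prints 8
disperse_data_count 12 4   # 8+4 layout, prints 8
```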

Architecture diagram:

Purpose: redundancy plus improved read I/O

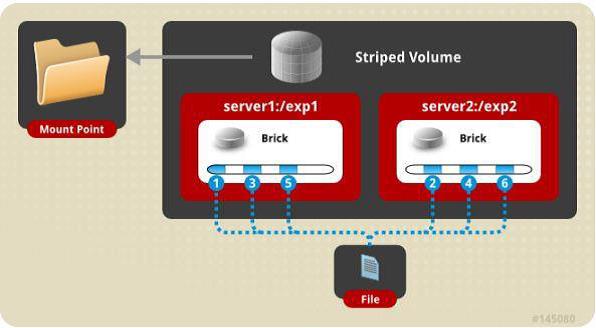

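Striped volume

Similar to RAID 0: a piece of data is divided evenly into several parts that are stored on different bricks. It is created as follows:

gluster volume create vol3 stripe 2 \
node1:/brick/brick3/brick \
node2:/brick/brick3/brick

Purpose: improved I/O. Drawback: if one brick fails, the whole volume fails and the data is lost.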

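Distributed replicate volume

Both distributed and replicated. Take 2×3 as an example: 2 is the distribution count and 3 is the replica count, so 6 bricks are needed in total. The bricks form two groups of 3, and every file is stored as 3 copies within one group: one file goes to the first group and is kept as 3 copies there, another goes to the second group, also as 3 copies.

The usable capacity of this type is the distribution count × the capacity of a single brick.

gluster volume create vol3 replica 2 \
node1:/brick/brick3/brick \
node2:/brick/brick3/brick \
node3:/brick/brick3/brick \
node4:/brick/brick3/brick

# Creates a distributed replicate volume with 2 copies and 2 distribution groups

# Only the replica count is specified; the distribution count is derived as total bricks / replica count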
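One detail worth knowing here is that the order of the bricks on the command line matters: consecutive bricks are grouped into the same replica set. The sketch below only prints that grouping for a given brick list; it does not call gluster, and `show_replica_sets` is a hypothetical helper name.

```shell
#!/bin/bash
# Hypothetical helper: show how a brick list is cut into replica sets.
# Usage: show_replica_sets REPLICA_COUNT BRICK...
show_replica_sets() {
    local replica=$1; shift
    local total=$#
    if (( total % replica != 0 )); then
        echo "brick count $total is not a multiple of replica $replica" >&2
        return 1
    fi
    echo "layout: $(( total / replica )) x $replica = $total"
    local idx=1
    while (( $# > 0 )); do
        # The next REPLICA_COUNT bricks in the list form one replica set.
        echo "replica set $idx: ${*:1:replica}"
        shift "$replica"
        idx=$(( idx + 1 ))
    done
}

show_replica_sets 2 \
    node1:/brick/brick3/brick node2:/brick/brick3/brick \
    node3:/brick/brick3/brick node4:/brick/brick3/brick
```

With the brick order used above, node1 and node2 mirror each other, as do node3 and node4, so each replica set spans two different servers.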

Architecture diagram:

Purpose: capacity expansion plus redundancy

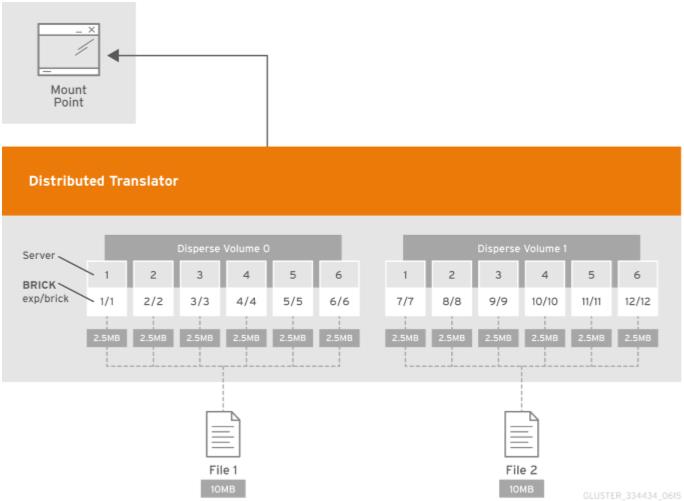

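Distributed dispersed volume

This adds a distribution layer on top of the dispersed volume to increase capacity, so the brick count is a multiple of what a single dispersed volume needs. For example, 2×(4+1) means data is split into 4 fragments with 1 redundancy fragment, across 2 distribution groups, which requires 2×(4+1) = 10 bricks.

gluster volume create vol5 disperse-data 4 redundancy 1 \
node1:/brick/brick5/brick node1:/brick/brick6/brick \
node2:/brick/brick5/brick node2:/brick/brick6/brick \
node3:/brick/brick5/brick node3:/brick/brick6/brick \
node4:/brick/brick5/brick node4:/brick/brick6/brick \
node5:/brick/brick5/brick node5:/brick/brick6/brick \
force

# The distribution count is total bricks / bricks required by one disperse group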
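As a quick sanity check before running create, the distribution count can be computed from the brick total. This is a minimal sketch with a made-up helper name (`disperse_set_count`); its output imitates the `Number of Bricks` line that `gluster volume info` prints.

```shell
#!/bin/bash
# Hypothetical helper: number of disperse sets (the distribution count) for a
# given brick total, data-fragment count and redundancy.
disperse_set_count() {
    local total=$1 data=$2 redundancy=$3
    local per_set=$(( data + redundancy ))
    if (( total % per_set != 0 )); then
        echo "brick count $total is not a multiple of $per_set" >&2
        return 1
    fi
    echo "$(( total / per_set )) x ($data + $redundancy) = $total"
}

disperse_set_count 10 4 1   # the vol5 example: prints 2 x (4 + 1) = 10
disperse_set_count 12 4 2   # prints 2 x (4 + 2) = 12
```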

Purpose: redundancy plus capacity expansion

Deleting a volume

A volume must be stopped before it can be deleted; otherwise the delete fails:

# gluster volume info vol1|grep Status

Status: Started

# gluster volume delete vol1

Deleting volume will erase all information about the volume. Do you want to continue? (y/n) y

volume delete: vol1: failed: Volume vol1 has been started.Volume needs to be stopped before deletion.

So the correct procedure is to stop the volume first, then delete it:

# gluster volume stop vol1

Stopping volume will make its data inaccessible. Do you want to continue? (y/n) y

volume stop: vol1: success

# gluster volume delete vol1

Deleting volume will erase all information about the volume. Do you want to continue? (y/n) y

volume delete: vol1: success
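The two steps can be wrapped into one small function. The sketch below is a dry run by default: it only prints the commands it would execute. It assumes the gluster CLI's `--mode=script` option, which to my knowledge suppresses the interactive y/n prompts; `run` and `remove_volume` are hypothetical helper names.

```shell
#!/bin/bash
# Sketch: stop a volume, then delete it. With DRY_RUN=1 (the default here) the
# commands are only printed, so the snippet is safe to try anywhere.
DRY_RUN=${DRY_RUN:-1}

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

remove_volume() {
    local vol=$1
    # Deleting only makes sense once the stop has succeeded.
    run gluster --mode=script volume stop "$vol" || return 1
    run gluster --mode=script volume delete "$vol"
}

remove_volume vol1
```

Setting DRY_RUN=0 on a storage node would execute the commands for real, so check the volume name first.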

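After deletion, if you want to reuse the old brick directories for a new volume, you must remove the hidden data directories inside them as well as the directories' extended attributes:

1. Remove the hidden data directories

GlusterFS generates these directories when it initializes the volume at start time: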

# tree -a /brick/brick1/brick1

/brick/brick1/brick1

├── .glusterfs

│ ├── 00

│ │ └── 00

│ │ ├── 00000000-0000-0000-0000-000000000001 -> ../../..

│ │ ├── 00000000-0000-0000-0000-000000000005 -> ../../00/00/00000000-0000-0000-0000-000000000001/.trashcan

│ │ └── 00000000-0000-0000-0000-000000000006 -> ../../00/00/00000000-0000-0000-0000-000000000005/internal_op

│ ├── brick1.db

│ ├── brick1.db-shm

│ ├── brick1.db-wal

│ ├── changelogs

│ │ ├── csnap

│ │ └── htime

│ ├── health_check

│ ├── indices

│ │ ├── dirty

│ │ └── xattrop

│ ├── landfill

│ ├── quanrantine

│ │ └── stub-00000000-0000-0000-0000-000000000008

│ └── unlink

└── .trashcan

└── internal_op

17 directories, 5 files

Remove them with:

rm -rf /brick/brick1/brick1/{.glusterfs,.trashcan}

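2. Remove the directory's extended attributes

Extended attributes are added when the volume is created, and more are added when the volume is started, so they must all be cleared before the directory is reused.

View the extended attributes:

# getfattr -d -m ".*" /brick/brick1/brick1/

getfattr: Removing leading '/' from absolute path names

# file: brick/brick1/brick1/

security.selinux="system_u:object_r:unlabeled_t:s0"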

trusted.gfid=0sAAAAAAAAAAAAAAAAAAAAAQ==

trusted.glusterfs.dht=0sAAAAAQAAAAAAAAAAf////g==

trusted.glusterfs.volume-id=0sf1W8jgybTceZGk4fsq4eew==

The trusted.gfid and trusted.glusterfs.* entries are the GlusterFS attributes. Remove them:

# setfattr -x trusted.gfid /brick/brick1/brick1

# setfattr -x trusted.glusterfs.dht /brick/brick1/brick1

# setfattr -x trusted.glusterfs.volume-id /brick/brick1/brick1

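Of course, if the directory no longer holds any important files, you can simply delete the whole directory before reusing it; there is then no need to remove the hidden directories and extended attributes one by one: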

# rm -rf /brick/brick1/brick1
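For bricks that should keep their directory, the two cleanup steps can be combined into one function. This is a minimal sketch under stated assumptions: `reset_brick` is a hypothetical helper name, the attribute list is the one shown in the getfattr output above, and removing trusted.* attributes requires root.

```shell
#!/bin/bash
# Sketch: make a previously used brick directory reusable without deleting
# the directory itself.
reset_brick() {
    local brick=$1
    [ -d "$brick" ] || { echo "no such directory: $brick" >&2; return 1; }
    # 1. Remove the hidden directories GlusterFS created.
    rm -rf "$brick/.glusterfs" "$brick/.trashcan"
    # 2. Remove the GlusterFS extended attributes. An attribute that is
    #    already absent is not treated as an error.
    local attr
    for attr in trusted.gfid trusted.glusterfs.dht trusted.glusterfs.volume-id; do
        setfattr -x "$attr" "$brick" 2>/dev/null || true
    done
}

# Example (run as root on the storage node):
#   reset_brick /brick/brick1/brick1
```

Running `getfattr -d -m ".*"` on the directory afterwards is a simple way to confirm that no trusted.* entries remain.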

Textbook exercise

[student@workstation ~]$ lab createvolumes setup

Setting up servera for lab exercise work:

• Testing if servera is reachable............................. PASS

• Testing if serverb is reachable............................. PASS

• Testing if serverc is reachable............................. PASS

• Testing if serverd is reachable............................. PASS

• Adding glusterfs to runtime firewall on servera............. SUCCESS

• Adding glusterfs to permanent firewall on servera........... SUCCESS

• Adding glusterfs to runtime firewall on serverb............. SUCCESS

• Adding glusterfs to permanent firewall on serverb........... SUCCESS

• Adding glusterfs to runtime firewall on serverc............. SUCCESS

• Adding glusterfs to permanent firewall on serverc........... SUCCESS

• Adding glusterfs to runtime firewall on serverd............. SUCCESS

• Adding glusterfs to permanent firewall on serverd........... SUCCESS

• Adding serverb.lab.example.com as peer...................... SUCCESS

• Adding serverc.lab.example.com as peer...................... SUCCESS

• Adding serverd.lab.example.com as peer...................... SUCCESS

• Ensuring thin LVM pool vg_bricks/thinpool exists on servera. SUCCESS

• Ensuring 2G LV in vg_bricks/thinpool on servera exists...... SUCCESS

• Ensuring xfs on /dev/vg_bricks/brick-a1 on servera.......... SUCCESS

• Verifying/adding /bricks/brick-a1 directory on servera...... SUCCESS

• Verifying/adding mount for /bricks/brick-a1 on servera...... SUCCESS

• Verifying/adding /bricks/brick-a1/brick directory on servera SUCCESS

• Ensuring fixed SELinux context for /bricks/brick-a1/brick on servera SUCCESS

• Restoring SElinux contexts on servera....................... SUCCESS

• Ensuring 2G LV in vg_bricks/thinpool on servera exists...... SUCCESS

• Ensuring xfs on /dev/vg_bricks/brick-a2 on servera.......... SUCCESS

• Verifying/adding /bricks/brick-a2 directory on servera...... SUCCESS

• Verifying/adding mount for /bricks/brick-a2 on servera...... SUCCESS

• Verifying/adding /bricks/brick-a2/brick directory on servera SUCCESS

• Ensuring fixed SELinux context for /bricks/brick-a2/brick on servera SUCCESS

• Restoring SElinux contexts on servera....................... SUCCESS

• Ensuring thin LVM pool vg_bricks/thinpool exists on serverb. SUCCESS

• Ensuring 2G LV in vg_bricks/thinpool on serverb exists...... SUCCESS

• Ensuring xfs on /dev/vg_bricks/brick-b1 on serverb.......... SUCCESS

• Verifying/adding /bricks/brick-b1 directory on serverb...... SUCCESS

• Verifying/adding mount for /bricks/brick-b1 on serverb...... SUCCESS

• Verifying/adding /bricks/brick-b1/brick directory on serverb SUCCESS

…………

1. Create a replicate volume

[root@servera ~]# gluster volume create replvol replica 2 servera:/bricks/brick-a1/brick/ serverb:/bricks/brick-b1/brick/

volume create: replvol: success: please start the volume to access data

[root@servera ~]# gluster volume start replvol

volume start: replvol: success

[root@servera ~]# gluster volume info replvol

Volume Name: replvol

Type: Replicate

Volume ID: d98665a7-e311-440c-a542-f96ed04ef277

Status: Started

Number of Bricks: 1 x 2 = 2

Transport-type: tcp

Bricks:

Brick1: servera:/bricks/brick-a1/brick

Brick2: serverb:/bricks/brick-b1/brick

Options Reconfigured:

performance.readdir-ahead: on

[root@servera ~]# showmount -e servera

Export list for servera:

/replvol *

[root@workstation ~]# mkdir /mnt/replvol

[root@workstation ~]# yum -y install glusterfs-fuse

[root@workstation ~]# mount -t glusterfs servera:/replvol /mnt/replvol/

[root@workstation ~]# df -Th

Filesystem Type Size Used Avail Use% Mounted on

/dev/vda1 xfs 10G 3.0G 7.0G 31% /

devtmpfs devtmpfs 902M 0 902M 0% /dev

tmpfs tmpfs 920M 84K 920M 1% /dev/shm

tmpfs tmpfs 920M 17M 904M 2% /run

tmpfs tmpfs 920M 0 920M 0% /sys/fs/cgroup

tmpfs tmpfs 184M 16K 184M 1% /run/user/42

tmpfs tmpfs 184M 0 184M 0% /run/user/0

servera:/replvol fuse.glusterfs 2.0G 33M 2.0G 2% /mnt/replvol

2. Create a dispersed volume

[root@servera ~]# gluster volume create dispersevol disperse-data 4 redundancy 2 servera:/bricks/brick-a2/brick serverb:/bricks/brick-b2/brick serverc:/bricks/brick-c1/brick serverd:/bricks/brick-d1/brick serverc:/bricks/brick-c2/brick serverd:/bricks/brick-d2/brick force

volume create: dispersevol: success: please start the volume to access data

[root@servera ~]# gluster volume start dispersevol

volume start: dispersevol: success

[root@servera ~]# gluster volume info dispersevol

Volume Name: dispersevol

Type: Disperse

Volume ID: ba9ce31f-7cac-48ef-a4f1-079965f632ca

Status: Started

Number of Bricks: 1 x (4 + 2) = 6

Transport-type: tcp

Bricks:

Brick1: servera:/bricks/brick-a2/brick

Brick2: serverb:/bricks/brick-b2/brick

Brick3: serverc:/bricks/brick-c1/brick

Brick4: serverd:/bricks/brick-d1/brick

Brick5: serverc:/bricks/brick-c2/brick

Brick6: serverd:/bricks/brick-d2/brick

Options Reconfigured:

performance.readdir-ahead: on

[root@workstation ~]# mkdir /mnt/dispersevol

[root@workstation ~]# mount -t glusterfs servera:/dispersevol /mnt/dispersevol

[root@workstation ~]# df -Th

Filesystem Type Size Used Avail Use% Mounted on

/dev/vda1 xfs 10G 3.0G 7.0G 31% /

devtmpfs devtmpfs 902M 0 902M 0% /dev

tmpfs tmpfs 920M 84K 920M 1% /dev/shm

tmpfs tmpfs 920M 17M 904M 2% /run

tmpfs tmpfs 920M 0 920M 0% /sys/fs/cgroup

tmpfs tmpfs 184M 16K 184M 1% /run/user/42

tmpfs tmpfs 184M 0 184M 0% /run/user/0

servera:/replvol fuse.glusterfs 2.0G 33M 2.0G 2% /mnt/replvol

servera:/dispersevol fuse.glusterfs 8.0G 131M 7.9G 2% /mnt/dispersevol
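The sizes in the df output line up with the brick layout: each brick is a 2G LV, the replicate volume exposes one brick's worth of space, and the 4+2 dispersed volume exposes four. A small sketch of that arithmetic follows; the helper names are made up, and sizes are whole GiB with filesystem overhead ignored.

```shell
#!/bin/bash
# Usable capacity in GiB for a (distributed) replicate volume.
# Arguments: brick size, total bricks, replica count.
replica_capacity() {
    local brick_gib=$1 total=$2 replica=$3
    echo $(( brick_gib * total / replica ))
}

# Usable capacity in GiB for a (distributed) dispersed volume.
# Arguments: brick size, total bricks, data fragments, redundancy.
disperse_capacity() {
    local brick_gib=$1 total=$2 data=$3 redundancy=$4
    echo $(( brick_gib * total / (data + redundancy) * data ))
}

replica_capacity 2 2 2       # replvol, 1 x 2 bricks of 2G: prints 2
disperse_capacity 2 6 4 2    # dispersevol, 1 x (4 + 2) bricks of 2G: prints 8
```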

3. Copy files into both volumes, then inspect the contents of the bricks that make up each volume.

[root@workstation ~]# cp -R /boot /mnt/replvol/

[root@workstation ~]# cp -R /boot /mnt/dispersevol/

[root@servera ~]# ll -h /bricks/brick-a1/brick/boot/

total 108M

-rw-r--r--. 2 root root 124K Nov 26 14:46 config-3.10.0-327.el7.x86_64

drwxr-xr-x. 2 root root 37 Nov 26 14:46 grub

drwx------. 6 root root 104 Nov 26 14:46 grub2

-rw-r--r--. 2 root root 36M Nov 26 14:46 initramfs-0-rescue-4093bf66a4a4444886ac88feb9f56896.img

-rw-r--r--. 2 root root 35M Nov 26 14:46 initramfs-3.10.0-327.el7.x86_64.img

-rw-------. 2 root root 16M Nov 26 14:46 initramfs-3.10.0-327.el7.x86_64kdump.img

-rw-r--r--. 2 root root 9.8M Nov 26 14:46 initrd-plymouth.img

-rw-r--r--. 2 root root 247K Nov 26 14:46 symvers-3.10.0-327.el7.x86_64.gz

-rw-------. 2 root root 2.9M Nov 26 14:46 System.map-3.10.0-327.el7.x86_64

-rwxr-xr-x. 2 root root 5.0M Nov 26 14:46 vmlinuz-0-rescue-4093bf66a4a4444886ac88feb9f56896

-rwxr-xr-x. 2 root root 5.0M Nov 26 14:46 vmlinuz-3.10.0-327.el7.x86_64

[root@servera ~]# ll -h /bricks/brick-a2/brick/boot/

total 27M

-rw-r--r--. 2 root root 31K Nov 26 14:47 config-3.10.0-327.el7.x86_64

drwxr-xr-x. 2 root root 37 Nov 26 14:47 grub

drwx------. 6 root root 104 Nov 26 14:47 grub2

-rw-r--r--. 2 root root 8.8M Nov 26 14:47 initramfs-0-rescue-4093bf66a4a4444886ac88feb9f56896.img

-rw-r--r--. 2 root root 8.7M Nov 26 14:47 initramfs-3.10.0-327.el7.x86_64.img

-rw-------. 2 root root 3.8M Nov 26 14:47 initramfs-3.10.0-327.el7.x86_64kdump.img

-rw-r--r--. 2 root root 2.5M Nov 26 14:47 initrd-plymouth.img

-rw-r--r--. 2 root root 62K Nov 26 14:47 symvers-3.10.0-327.el7.x86_64.gz

-rw-------. 2 root root 724K Nov 26 14:47 System.map-3.10.0-327.el7.x86_64

-rwxr-xr-x. 2 root root 1.3M Nov 26 14:47 vmlinuz-0-rescue-4093bf66a4a4444886ac88feb9f56896

-rwxr-xr-x. 2 root root 1.3M Nov 26 14:47 vmlinuz-3.10.0-327.el7.x86_64
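Comparing the two listings: on the replicate brick every file has its full size (108M in total), while on the dispersed brick every file is roughly a quarter of that (27M in total), because a 4+2 volume cuts each file into 4 data fragments and stores one fragment per brick. A quick check of that ratio, in the same units as the listing (the helper name `fragment_size` is made up):

```shell
#!/bin/bash
# Approximate per-brick fragment size for a dispersed volume:
# file size divided by the number of data fragments, rounded up.
fragment_size() {
    local file_size=$1 data=$2
    echo $(( (file_size + data - 1) / data ))
}

fragment_size 124 4   # config-3.10.0-327.el7.x86_64: 124K in total, prints 31
fragment_size 108 4   # whole directory: 108M in total, prints 27
```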

4. Grading script

[student@workstation ~]$ lab createvolumes grade

Chapter lab

[student@workstation ~]$ lab creatingvolumes-lab setup

Setting up servera for lab exercise work:

• Testing if servera is reachable............................. PASS

• Testing if serverb is reachable............................. PASS

• Testing if serverc is reachable............................. PASS

• Testing if serverd is reachable............................. PASS

• Testing if all nodes are present and accounted for.......... PASS

• Testing if vg_bricks/thinpool is available on servera....... PASS

• Testing if vg_bricks/thinpool is available on serverb....... PASS

• Testing if vg_bricks/thinpool is available on serverc....... PASS

• Testing if vg_bricks/thinpool is available on serverd....... PASS

Overall Pre-requisites check................................... PASS

Creating bricks for exercise

• Ensuring thin LVM pool vg_bricks/thinpool exists on servera. SUCCESS

• Ensuring 2G LV in vg_bricks/thinpool on servera exists...... SUCCESS

• Ensuring xfs on /dev/vg_bricks/brick-a1 on servera.......... SUCCESS

• Verifying/adding /bricks/brick-a1 directory on servera...... SUCCESS

• Verifying/adding mount for /bricks/brick-a1 on servera...... SUCCESS

• Verifying/adding /bricks/brick-a1/brick directory on servera SUCCESS

…………

1. Create a distributed replicate volume

[root@servera ~]# gluster volume create distreplvol replica 2 servera:/bricks/brick-a3/brick serverb:/bricks/brick-b3/brick serverc:/bricks/brick-c3/brick serverd:/bricks/brick-d3/brick

volume create: distreplvol: success: please start the volume to access data

[root@servera ~]# gluster volume start distreplvol

volume start: distreplvol: success

[root@servera ~]# gluster volume info distreplvol

Volume Name: distreplvol

Type: Distributed-Replicate

Volume ID: 2911369a-9287-4c91-ab3f-ac56ef504052

Status: Started

Number of Bricks: 2 x 2 = 4

Transport-type: tcp

Bricks:

Brick1: servera:/bricks/brick-a3/brick

Brick2: serverb:/bricks/brick-b3/brick

Brick3: serverc:/bricks/brick-c3/brick

Brick4: serverd:/bricks/brick-d3/brick

Options Reconfigured:

performance.readdir-ahead: on

2. Create a distributed dispersed volume

[root@servera ~]# cat server.sh

#!/bin/bash
# Print the 12 brick paths, one per line: bricks 4 to 6 on servers a to d.
for BRICKNUM in {4..6}; do
  for NODE in {a..d}; do
    echo server${NODE}:/bricks/brick-${NODE}${BRICKNUM}/brick
  done
done

[root@servera ~]# sh server.sh > brick.txt

[root@servera ~]# echo $(</root/brick.txt)

servera:/bricks/brick-a4/brick serverb:/bricks/brick-b4/brick serverc:/bricks/brick-c4/brick serverd:/bricks/brick-d4/brick servera:/bricks/brick-a5/brick serverb:/bricks/brick-b5/brick serverc:/bricks/brick-c5/brick serverd:/bricks/brick-d5/brick servera:/bricks/brick-a6/brick serverb:/bricks/brick-b6/brick serverc:/bricks/brick-c6/brick serverd:/bricks/brick-d6/brick

[root@servera ~]# gluster volume create distdispvol disperse-data 4 redundancy 2 $(</root/brick.txt) force

volume create: distdispvol: success: please start the volume to access data

[root@servera ~]# gluster volume start distdispvol

volume start: distdispvol: success

[root@servera ~]# gluster volume info distdispvol

Volume Name: distdispvol

Type: Distributed-Disperse

Volume ID: 7ed166a5-cb55-47c7-8f5a-b0b8ddcc52fc

Status: Started

Number of Bricks: 2 x (4 + 2) = 12

Transport-type: tcp

Bricks:

Brick1: servera:/bricks/brick-a4/brick

Brick2: serverb:/bricks/brick-b4/brick

Brick3: serverc:/bricks/brick-c4/brick

Brick4: serverd:/bricks/brick-d4/brick

Brick5: servera:/bricks/brick-a5/brick

Brick6: serverb:/bricks/brick-b5/brick

Brick7: serverc:/bricks/brick-c5/brick

Brick8: serverd:/bricks/brick-d5/brick

Brick9: servera:/bricks/brick-a6/brick

Brick10: serverb:/bricks/brick-b6/brick

Brick11: serverc:/bricks/brick-c6/brick

Brick12: serverd:/bricks/brick-d6/brick

Options Reconfigured:

performance.readdir-ahead: on

3. Grading script

[student@workstation ~]$ lab creatingvolumes-lab grade

4. Reset the environment

Reset workstation, servera, serverb, serverc, and serverd.

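Summary

- Covered the purposes of the various volume types and how to create them.

- Reusing the brick directories of a deleted volume requires removing the hidden data directories and the directories' extended attributes.

RHCA certification means studying for and passing 5 exams, which takes a good deal of study and preparation time. Keep at it, you can do it 🤪.

That concludes 金鱼哥's overview and walkthrough of Chapter 4, Creating Different Types of Volumes. I hope it helps anyone who reads it.

💾 Red Hat certification column series:

RHCSA column: 戏说 RHCSA 认证

RHCE column: 戏说 RHCE 认证

This article is part of the RHCA column: RHCA 回忆录

If this article helped you, please give 金鱼哥 a like 👍. Writing takes effort, and compared with the official wording I prefer to explain each point in plain, easy-to-follow language.

If you are interested in operations technology, you are also welcome to follow ❤️❤️❤️ 金鱼哥 ❤️❤️❤️; I will bring you plenty of rewards and surprises 💕💕!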
