金鱼哥 RHCA Memoir: RH236 Creating a Volume

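The previous two chapters covered adding servers to the trusted storage pool and creating bricks. This chapter moves on to creating a volume. Those earlier steps must be completed before a volume can be configured.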

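🎹 About me: Hi everyone, I'm 金鱼哥, a CSDN Rising Star Creator in the operations field, a Huawei Cloud 云享 Expert, and an Alibaba Cloud Community Expert Blogger.

📚 Credentials: CCNA, HCNP, CSNA (Network Analyst), Ruankao (China's national software qualification exam) junior-level and intermediate Network Engineer, RHCSA, RHCE, RHCA, RHCI, ITIL😜

💬 Motto: Hard work doesn't guarantee success, but success is impossible without it🔥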

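Syntax for creating a volume:

gluster volume create NAME [VOLUME-TYPE] BRICK... [OPTIONS]

If you are unsure of the options, run gluster volume help. When no volume type is given, Gluster creates a distributed volume (Type: Distribute), as the exercise below shows.

A volume only needs to be created on one node (server) in the trusted pool; any node will do.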
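To make the syntax concrete, here is a minimal sketch of a hypothetical helper, build_create_cmd (not part of Gluster), that assembles a `gluster volume create` command line from a volume name and a list of HOST:/PATH bricks and checks the brick format before you run anything. It only prints the command; it assumes no volume type is wanted, so the result would be a plain distributed volume.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of Gluster): build a "gluster volume create"
# command line so it can be reviewed before it is run on a pool node.
set -euo pipefail

build_create_cmd() {
  local name="$1"; shift
  if [[ $# -eq 0 ]]; then
    echo "usage: build_create_cmd NAME HOST:/PATH..." >&2
    return 1
  fi
  local brick
  for brick in "$@"; do
    # Each brick must look like host:/absolute/path
    if [[ ! "$brick" =~ ^[^:/]+:/.+ ]]; then
      echo "invalid brick: $brick" >&2
      return 1
    fi
  done
  # No volume type is passed, so Gluster would create a distributed volume
  echo "gluster volume create $name $*"
}

# Print the command for the vol1 example used in this chapter
build_create_cmd vol1 node1:/brick/brick1/brick node2:/brick/brick1/brick
```

Printing instead of executing keeps the helper safe to try anywhere; on a pool node you could review the line and then run it.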

gluster volume create vol1 \
  node1:/brick/brick1/brick \
  node2:/brick/brick1/brick

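# Create a volume named vol1 from one brick on node1 and one brick on node2

gluster volume start vol1

# Start the volume

gluster volume stop vol1

# Stop the volume

gluster volume info

gluster volume status

# View volume information: info shows the configuration, status shows the runtime state of the brick processes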

showmount -e node1

showmount -e node2

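# Note: list the NFS exports

What is exported is the volume name, not an individual brick, and the volume can be seen from every node in the trusted pool.

cat /etc/exports

# There is no export entry in this file

In other words, the volume is not exported by the system's own NFS service. The glusterd service provides NFS access itself, so when a client mounts the volume it can connect to glusterd on any node in the pool.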
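Because the export path is the volume name rather than a brick path, a quick check is to look for /VOLNAME in the `showmount -e` output. The sketch below uses a hypothetical function, volume_exported, and an assumed sample of showmount's output format ("Export list for HOST:" header followed by one export per line); on a real node you would pipe `showmount -e node1` into it instead.

```bash
#!/usr/bin/env bash
# Sketch: report whether a Gluster volume appears in "showmount -e" output.
# The volume is expected as /VOLNAME, not as a brick directory.
# The sample text below is an assumed illustration of showmount's format.
set -euo pipefail

volume_exported() {
  local vol="$1"
  # Skip the "Export list for ..." header and compare the first column exactly
  awk -v path="/$vol" 'NR > 1 && $1 == path { found = 1 } END { exit !found }'
}

sample='Export list for node1:
/vol1 *'

if printf '%s\n' "$sample" | volume_exported vol1; then
  echo "vol1 is exported"
fi
```

Exact matching on the first column avoids false positives such as /vol10 when checking for /vol1.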

Textbook exercise

[student@workstation ~]$ lab setup-volume setup

Testing if server and serverb are ready for lab exercise work:

• Testing if servera is reachable............................. PASS

• Testing if serverb is reachable............................. PASS

• Testing runtime firewall on servera for glusterfs........... PASS

• Testing permanent firewall on servera for glusterfs......... PASS

• Testing runtime firewall on serverb for glusterfs........... PASS

• Testing permanent firewall on serverb for glusterfs......... PASS

• Testing if glusterd is active on servera.................... PASS

• Testing if glusterd is active on serverb.................... PASS

• Testing if glusterd is active on servera.................... PASS

• Testing if glusterd is active on serverb.................... PASS

• Testing if both servera and serverb are in pool............. PASS

Infrastructure setup status:................................... PASS

Testing if all required bricks are present:

• Testing for thinly provisioned pool on servera.............. PASS

• Testing for thinly provisioned pool size on servera......... PASS

• Testing for mounted file system and type on servera......... PASS

• Testing for brick SELinux context on servera................ PASS

• Testing for thinly provisioned pool on serverb.............. PASS

• Testing for thinly provisioned pool size on serverb......... PASS

• Testing for mounted file system and type on serverb......... PASS

• Testing for brick SELinux context on serverb................ PASS

Brick setup status............................................. PASS

1. Create the volume, then start it and inspect it.

[root@servera ~]# gluster volume create firstvol servera:/bricks/brick-a1/brick serverb:/bricks/brick-b1/brick

volume create: firstvol: success: please start the volume to access data

[root@servera ~]# gluster volume info firstvol

Volume Name: firstvol

Type: Distribute

Volume ID: ba0aa98b-55d5-4bc1-a28c-c2096756e98a

Status: Created

Number of Bricks: 2

Transport-type: tcp

Bricks:

Brick1: servera:/bricks/brick-a1/brick

Brick2: serverb:/bricks/brick-b1/brick

Options Reconfigured:

performance.readdir-ahead: on

[root@servera ~]# gluster volume start firstvol

volume start: firstvol: success

[root@servera ~]# gluster volume info firstvol

Volume Name: firstvol

Type: Distribute

Volume ID: ba0aa98b-55d5-4bc1-a28c-c2096756e98a

Status: Started

Number of Bricks: 2

Transport-type: tcp

Bricks:

Brick1: servera:/bricks/brick-a1/brick

Brick2: serverb:/bricks/brick-b1/brick

Options Reconfigured:

performance.readdir-ahead: on
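The only field that changes between the two `gluster volume info` outputs above is Status (Created, then Started). A minimal sketch of pulling that field out for scripting is shown below; volume_state is a hypothetical name, and the sample text is an excerpt of the output above. On a node you would run `gluster volume info firstvol | volume_state`.

```bash
#!/usr/bin/env bash
# Sketch: extract the "Status:" value from "gluster volume info VOLNAME" output.
set -euo pipefail

volume_state() {
  # Print the value after "Status: " for the first matching line only
  awk -F': ' '$1 == "Status" && !seen { print $2; seen = 1 }'
}

# Excerpt of the output shown above, after "gluster volume start firstvol"
info='Volume Name: firstvol
Type: Distribute
Status: Started
Number of Bricks: 2'

printf '%s\n' "$info" | volume_state
```

Run against the first info output (before the start command) the same function would print Created.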

2. Mount the volume and test it.

[root@workstation ~]# yum -y install glusterfs-fuse

[root@workstation ~]# mount -t glusterfs servera:firstvol /mnt

[root@workstation ~]# df -Th

Filesystem Type Size Used Avail Use% Mounted on

/dev/vda1 xfs 10G 3.1G 7.0G 31% /

devtmpfs devtmpfs 902M 0 902M 0% /dev

tmpfs tmpfs 920M 84K 920M 1% /dev/shm

tmpfs tmpfs 920M 41M 880M 5% /run

tmpfs tmpfs 920M 0 920M 0% /sys/fs/cgroup

tmpfs tmpfs 184M 16K 184M 1% /run/user/42

tmpfs tmpfs 184M 0 184M 0% /run/user/0

servera:firstvol fuse.glusterfs 4.0G 66M 4.0G 2% /mnt

[root@workstation ~]# touch /mnt/file{0..0100}

[root@workstation ~]# umount /mnt/
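The `touch /mnt/file{0..0100}` command relies on bash brace expansion. Because one endpoint has a leading zero, bash (4.0 and later) zero-pads every term to the width of the longest endpoint, so the command creates 101 files named file0000 through file0100. The short sketch below just expands the same pattern into an array to show this.

```bash
#!/usr/bin/env bash
# Sketch: show what "touch /mnt/file{0..0100}" expands to.
# A leading zero makes bash pad every number to 4 digits.
set -euo pipefail

names=(file{0..0100})
echo "count=${#names[@]} first=${names[0]} last=${names[${#names[@]}-1]}"
# → count=101 first=file0000 last=file0100
```

This is why the two brick listings below add up to 101 files.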

3. Check the contents of the bricks on servera and serverb.

[root@servera ~]# ls /bricks/brick-a1/brick/

file0001 file0009 file0017 file0028 file0038 file0046 file0055 file0067 file0074 file0087

file0003 file0010 file0019 file0029 file0039 file0047 file0057 file0068 file0077 file0088

file0004 file0012 file0023 file0030 file0040 file0048 file0059 file0069 file0081 file0091

file0005 file0015 file0024 file0031 file0041 file0051 file0060 file0071 file0082 file0092

file0008 file0016 file0025 file0032 file0042 file0054 file0064 file0073 file0086 file0097

[root@serverb ~]# ls /bricks/brick-b1/brick/

file0000 file0014 file0027 file0043 file0053 file0065 file0078 file0089 file0098

file0002 file0018 file0033 file0044 file0056 file0066 file0079 file0090 file0099

file0006 file0020 file0034 file0045 file0058 file0070 file0080 file0093 file0100

file0007 file0021 file0035 file0049 file0061 file0072 file0083 file0094

file0011 file0022 file0036 file0050 file0062 file0075 file0084 file0095

file0013 file0026 file0037 file0052 file0063 file0076 file0085 file0096
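In a distributed volume each file is stored whole on exactly one brick: here servera holds 50 files and serverb holds 51, which adds up to the 101 files created. The sketch below checks that property for two saved name lists; check_distribution is a hypothetical helper, and collecting the lists with something like `ssh servera ls /bricks/brick-a1/brick > a.txt` is an assumption about how you gather them.

```bash
#!/usr/bin/env bash
# Sketch: verify that a 2-brick distributed volume placed each file on exactly
# one brick. Inputs are plain lists of file names, one per line.
set -euo pipefail

check_distribution() {
  local list_a="$1" list_b="$2" expected="$3"
  local count_a count_b dup
  count_a=$(wc -l < "$list_a")
  count_b=$(wc -l < "$list_b")
  # Names present on both bricks (comm needs sorted input)
  dup=$(comm -12 <(sort "$list_a") <(sort "$list_b") | wc -l)
  echo "brick A: $count_a, brick B: $count_b, on both: $dup"
  # Succeed only if nothing is duplicated and the totals match
  [[ $dup -eq 0 && $((count_a + count_b)) -eq $expected ]]
}

# Self-contained demo with small temporary lists
tmp=$(mktemp -d)
printf '%s\n' file0001 file0003 > "$tmp/a.txt"
printf '%s\n' file0000 file0002 > "$tmp/b.txt"
check_distribution "$tmp/a.txt" "$tmp/b.txt" 4 && echo "OK: every file is on exactly one brick"
rm -rf "$tmp"
```

For the listings above you would pass 101 as the expected total.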

4. Grading script

[root@workstation ~]# lab setup-volume grade

Chapter lab

[root@workstation ~]# lab basicconfig setup

Testing if serverc and serverd are ready for lab exercise work:

• Testing if serverc is reachable............................. PASS

• Testing if glusterd is active on serverc.................... PASS

• Testing if glusterd is active on serverc.................... PASS

• Testing if serverd is reachable............................. PASS

• Testing if glusterd is active on serverd.................... PASS

• Testing if glusterd is active on serverd.................... PASS

1. Join serverc and serverd into one trusted storage pool.

[root@serverc ~]# firewall-cmd --permanent --add-service=glusterfs

success

[root@serverc ~]# firewall-cmd --reload

success

[root@serverd ~]# firewall-cmd --permanent --add-service=glusterfs

success

[root@serverd ~]# firewall-cmd --reload

success

[root@serverc ~]# gluster peer probe serverd.lab.example.com

peer probe: success.

[root@serverc ~]# gluster pool list

UUID Hostname State

49e5e2e3-7372-46c9-82f6-d8a990bc44b9 serverd.lab.example.com Connected

f69b7199-c19f-4717-9935-c9d3a6f69d3c localhost

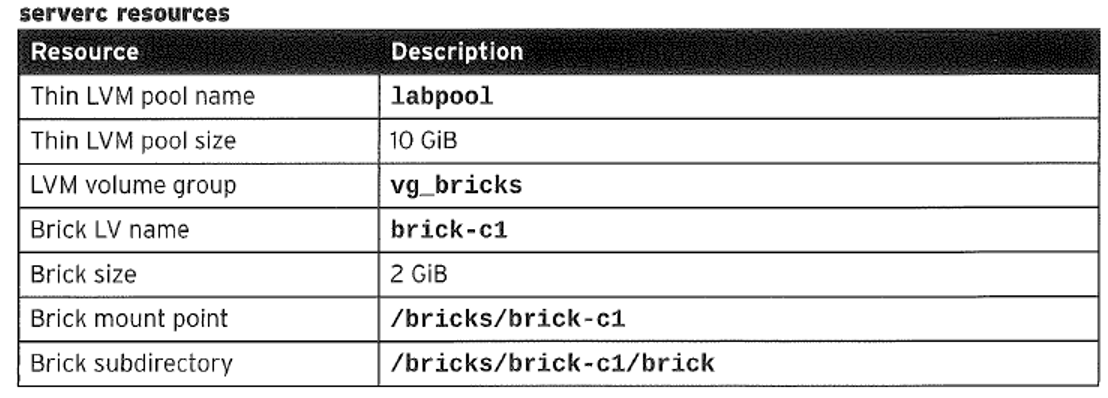

2. Create the two bricks the volume needs.

[root@serverc ~]# lvcreate -L 10G -T vg_bricks/labpool

Logical volume "labpool" created.

[root@serverc ~]# lvcreate -V 2G -T vg_bricks/labpool -n brick-c1

Logical volume "brick-c1" created.

[root@serverc ~]# mkfs.xfs -i size=512 /dev/vg_bricks/brick-c1

meta-data=/dev/vg_bricks/brick-c1 isize=512 agcount=8, agsize=65520 blks

= sectsz=512 attr=2, projid32bit=1

= crc=0 finobt=0

data = bsize=4096 blocks=524160, imaxpct=25

= sunit=16 swidth=16 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=0

log =internal log bsize=4096 blocks=2560, version=2

= sectsz=512 sunit=16 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

[root@serverc ~]# mkdir -p /bricks/brick-c1

[root@serverc ~]# blkid

/dev/vda1: UUID="2460ab6e-e869-4011-acae-31b2e8c05a3b" TYPE="xfs"

/dev/vdb: UUID="KlhHmY-vcmo-8qHh-nkio-soPV-8yIS-zoQPpg" TYPE="LVM2_member"

/dev/mapper/vg_bricks-brick--c1: UUID="51b267fe-7a06-4327-b32d-36e8eaaaea7b" TYPE="xfs"

[root@serverc ~]# echo "UUID=51b267fe-7a06-4327-b32d-36e8eaaaea7b /bricks/brick-c1 xfs defaults 1 2" >> /etc/fstab

[root@serverc ~]# mount -a

[root@serverc ~]# mkdir -p /bricks/brick-c1/brick

[root@serverc ~]# semanage fcontext -a -t glusterd_brick_t /bricks/brick-c1/brick

[root@serverc ~]# restorecon -Rv /bricks

restorecon reset /bricks/brick-c1 context system_u:object_r:unlabeled_t:s0->system_u:object_r:default_t:s0

restorecon reset /bricks/brick-c1/brick context unconfined_u:object_r:unlabeled_t:s0->unconfined_u:object_r:glusterd_brick_t:s0

[root@serverd ~]# lvcreate -L 10G -T vg_bricks/labpool

Logical volume "labpool" created.

[root@serverd ~]# lvcreate -V 2G -T vg_bricks/labpool -n brick-d1

Logical volume "brick-d1" created.

[root@serverd ~]# mkfs.xfs -i size=512 /dev/vg_bricks/brick-d1

meta-data=/dev/vg_bricks/brick-d1 isize=512 agcount=8, agsize=65520 blks

= sectsz=512 attr=2, projid32bit=1

= crc=0 finobt=0

data = bsize=4096 blocks=524160, imaxpct=25

= sunit=16 swidth=16 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=0

log =internal log bsize=4096 blocks=2560, version=2

= sectsz=512 sunit=16 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

[root@serverd ~]# mkdir -p /bricks/brick-d1

[root@serverd ~]# blkid

/dev/vda1: UUID="2460ab6e-e869-4011-acae-31b2e8c05a3b" TYPE="xfs"

/dev/vdb: UUID="KlhHmY-vcmo-8qHh-nkio-soPV-8yIS-zoQPpg" TYPE="LVM2_member"

/dev/mapper/vg_bricks-brick--d1: UUID="a9551e17-7e59-4422-aebd-106c08bf914a" TYPE="xfs"

[root@serverd ~]# echo "UUID=a9551e17-7e59-4422-aebd-106c08bf914a /bricks/brick-d1 xfs defaults 1 2" >> /etc/fstab

[root@serverd ~]# mount -a

[root@serverd ~]# mkdir /bricks/brick-d1/brick

[root@serverd ~]# semanage fcontext -a -t glusterd_brick_t /bricks/brick-d1/brick

[root@serverd ~]# restorecon -Rv /bricks

restorecon reset /bricks/brick-d1 context system_u:object_r:unlabeled_t:s0->system_u:object_r:default_t:s0

restorecon reset /bricks/brick-d1/brick context unconfined_u:object_r:unlabeled_t:s0->unconfined_u:object_r:glusterd_brick_t:s0
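Copying the UUID from `blkid` into /etc/fstab by hand is error-prone. A minimal sketch of generating the line instead is below; fstab_entry is a hypothetical helper that parses a saved blkid line so the demo runs anywhere. On a real node, `blkid -s UUID -o value /dev/vg_bricks/brick-d1` prints only the UUID and could replace the parsing step.

```bash
#!/usr/bin/env bash
# Sketch: build the /etc/fstab line for a brick from a blkid output line.
set -euo pipefail

fstab_entry() {
  local blkid_line="$1" mountpoint="$2"
  local uuid
  # Extract the value of UUID="..." from the blkid line
  uuid=$(sed -n 's/.* UUID="\([^"]*\)".*/\1/p' <<< "$blkid_line")
  [[ -n "$uuid" ]] || return 1
  # Same file system type and options as used in this lab
  echo "UUID=$uuid $mountpoint xfs defaults 1 2"
}

line='/dev/mapper/vg_bricks-brick--d1: UUID="a9551e17-7e59-4422-aebd-106c08bf914a" TYPE="xfs"'
fstab_entry "$line" /bricks/brick-d1
```

The printed line matches the one appended to /etc/fstab on serverd above; you would still append it with `>>` and verify with `mount -a`.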

3. Create and start the volume.

[root@serverc ~]# gluster volume create labvol serverc:/bricks/brick-c1/brick serverd:/bricks/brick-d1/brick

volume create: labvol: success: please start the volume to access data

[root@serverc ~]# gluster volume start labvol

volume start: labvol: success

4. Grading script

[root@workstation ~]# lab basicconfig grade

5. Reset the environment

Reset workstation, servera, serverb, serverc, and serverd.

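Summary

- A volume can be created from any one node in the trusted pool.

- Clients are not served by the system's own NFS service; the glusterd service provides NFS access itself.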

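The RHCA certification requires studying for and passing 5 courses and exams, which takes a good amount of time to learn and prepare. Keep at it, you can do it🤪.

That wraps up 【金鱼哥】's overview and explanation of Chapter 3, Configuring Red Hat Gluster Storage: creating volumes and the chapter lab. I hope it helps everyone who reads this article.

💾 Red Hat certification column series:

RHCSA column: A Playful Take on RHCSA Certification

RHCE column: A Playful Take on RHCE Certification

This article is part of the RHCA column: RHCA Memoir

If this 【article】 helped you, please give 【金鱼哥】 a like👍. Creating content takes effort, and rather than official-sounding statements, I prefer to explain every topic in a 【plain, easy-to-understand】 way.

If you are interested in 【operations technology】, you are welcome to follow ❤️❤️❤️ 【金鱼哥】❤️❤️❤️, and I will bring you plenty of 【gains and surprises】💕💕!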
