Transformer-based YOLOv7 and TensorRT deployment

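I was stunned to come across a YOLOv7 repository open-sourced by a developer on GitHub recently. YOLOv6 hasn't even come out yet, so how are we already at YOLOv7?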

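After a quick look, it turns out the author built detection and segmentation models on top of the transformer, an architecture that has been very popular over the past two years. The showcased results look very good, noticeably better than YOLOv5. In my view, this suggests that transformer-based detection models are the future. What they learn is quite reasonable, and the approach is somewhat better than the paradigm of scoring a huge pile of candidate bounding boxes and keeping the most probable ones. Without further ado, here is the code: https://github.com/jinfagang/yolov7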
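To make the contrast concrete: DETR-style detectors skip the "many candidate boxes + NMS" step. Instead, they learn a fixed set of predictions and match them one-to-one with the ground-truth objects during training. The sketch below is a toy illustration of that matching idea, not code from the repo.

- It uses only an L1 box cost, whereas DETR also adds class-probability and GIoU terms.
- It brute-forces every assignment with `itertools.permutations`, whereas DETR solves the same problem with the Hungarian algorithm.
- `match_predictions` and `l1_cost` are hypothetical helper names.

```python
from itertools import permutations


def l1_cost(pred, gt):
    """L1 distance between two boxes given as (cx, cy, w, h)."""
    return sum(abs(p - g) for p, g in zip(pred, gt))


def match_predictions(preds, gts):
    """Find the one-to-one assignment {pred_idx: gt_idx} with minimal total cost.

    Brute force over all assignments, so only usable for toy sizes;
    DETR uses the Hungarian algorithm for the same objective.
    """
    assert len(preds) >= len(gts), "need at least as many predictions as objects"
    best_cost, best = float("inf"), None
    # chosen[g] is the prediction index assigned to ground-truth box g
    for chosen in permutations(range(len(preds)), len(gts)):
        cost = sum(l1_cost(preds[p], gts[g]) for g, p in enumerate(chosen))
        if cost < best_cost:
            best_cost, best = cost, {p: g for g, p in enumerate(chosen)}
    return best, best_cost


if __name__ == "__main__":
    preds = [(0.5, 0.5, 0.2, 0.2), (0.1, 0.1, 0.1, 0.1), (0.8, 0.8, 0.3, 0.3)]
    gts = [(0.8, 0.8, 0.3, 0.3), (0.5, 0.5, 0.2, 0.2)]
    assignment, cost = match_predictions(preds, gts)
    print(assignment, cost)  # → {2: 0, 0: 1} 0.0
```

Prediction 1 is left unmatched here. In DETR such predictions are trained to output the "no object" class, which is why no NMS step is needed at inference.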

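This open-source YOLOv7 is quite capable, supporting YOLO, DETR, AnchorDETR and more. The author says that many open-source detection frameworks, such as YOLOv5 and EfficientDet, have their own weaknesses. For example, YOLOv5 is in practice over-engineered, with too much messy code. More surprisingly, PyTorch has at least 20 different reimplementations of YOLOv3/YOLOv4. By the author's estimate, 99.99% of them are completely wrong: you can neither train them on your own dataset nor reproduce the results of the original papers. Hence this repository. It supports ONNX export for models such as DETR and can run inference with TensorRT.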

The repo provides the following:

- YOLOv4 with a CSP-Darknet53 backbone;
- YOLOv7 arch with ResNet backbones;
- GridMask augmentation from PP-YOLO included;
- Mosaic transform supported with a custom DatasetMapper;
- YOLOv7 arch with Swin-Transformer support (higher accuracy but lower speed);
- RandomColorDistortion, RandomExpand, RandomCrop, RandomFlip;
- CIoU loss (DIoU, GIoU) and label smoothing (from YOLOv5 & YOLOv4);
- YOLOv7 Res2Net + FPN supported;
- Pyramid Vision Transformer v2 (PVTv2) supported;
- YOLOX s, m, l backbones and PAFPN added, giving a new combination of YOLOX backbone and PAFPN;
- YOLOv7 with Res2Net-v1d backbone; we found Res2Net-v1d has better accuracy than Darknet53;
- Added PP-YOLOv2 PAN neck with SPP and DropBlock;
- YOLOX arch added; now you can train YOLOX models (anchor-free YOLO) as well;
- DETR: transformer-based detection model, with ONNX export and TensorRT acceleration supported;
- AnchorDETR: a faster-converging version of DETR, now supported!
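For reference, the CIoU loss listed above adds two penalties to plain IoU:

- a DIoU term: the squared distance between box centers, normalized by the squared diagonal of the smallest enclosing box;
- an aspect-ratio consistency term.

Below is a minimal plain-Python sketch of the published formula for a single pair of boxes in `(x1, y1, x2, y2)` format. It is not the repo's implementation, which operates on batched tensors, and `ciou_loss` is a hypothetical name.

```python
import math


def ciou_loss(box_p, box_g, eps=1e-9):
    """CIoU loss (1 - CIoU) for two boxes in (x1, y1, x2, y2) format."""
    px1, py1, px2, py2 = box_p
    gx1, gy1, gx2, gy2 = box_g
    pw, ph = px2 - px1, py2 - py1
    gw, gh = gx2 - gx1, gy2 - gy1

    # Plain IoU
    iw = max(0.0, min(px2, gx2) - max(px1, gx1))
    ih = max(0.0, min(py2, gy2) - max(py1, gy1))
    inter = iw * ih
    union = pw * ph + gw * gh - inter
    iou = inter / (union + eps)

    # DIoU penalty: squared center distance / squared enclosing-box diagonal
    rho2 = ((px1 + px2 - gx1 - gx2) ** 2 + (py1 + py2 - gy1 - gy2) ** 2) / 4.0
    cw = max(px2, gx2) - min(px1, gx1)
    ch = max(py2, gy2) - min(py1, gy1)
    c2 = cw ** 2 + ch ** 2 + eps

    # Aspect-ratio consistency penalty and its trade-off weight
    v = (4 / math.pi ** 2) * (math.atan(gw / (gh + eps)) - math.atan(pw / (ph + eps))) ** 2
    alpha = v / ((1 - iou) + v + eps)

    ciou = iou - rho2 / c2 - alpha * v
    return 1.0 - ciou


if __name__ == "__main__":
    # Two disjoint unit squares side by side: IoU = 0, center penalty = 4 / 10
    print(round(ciou_loss((0, 0, 1, 1), (2, 0, 3, 1)), 6))  # → 1.4
```

Dropping the `alpha * v` term gives the DIoU loss. Unlike GIoU, both variants still provide a useful gradient when the boxes do not overlap, because the center-distance penalty keeps changing.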

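The repository provides a Quick Start for detection, plus code and step-by-step instructions for training on your own dataset. Many pretrained models are also available for download, so pick the detection model that fits your needs.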

Running the demo

python3 demo.py --config-file configs/wearmask/darknet53.yaml --input ./datasets/wearmask/images/val2017 --opts MODEL.WEIGHTS output/model_0009999.pth
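The trailing `--opts` arguments are KEY VALUE pairs that override entries in the YAML config. In detectron2-based demos they are typically passed to `cfg.merge_from_list(args.opts)`. Here is a simplified sketch of that behavior on a plain nested dict, assuming the same pairwise convention. The real yacs/fvcore `CfgNode` also checks that the new value's type matches the old one, which this sketch skips.

```python
import ast


def merge_from_list(cfg, opts):
    """Apply alternating KEY VALUE overrides to a nested dict config.

    Simplified stand-in for CfgNode.merge_from_list (no type checking).
    """
    if len(opts) % 2 != 0:
        raise ValueError("--opts expects KEY VALUE pairs")
    for key, raw in zip(opts[0::2], opts[1::2]):
        *parents, leaf = key.split(".")
        node = cfg
        for name in parents:
            node = node[name]  # unknown section -> KeyError
        if leaf not in node:
            raise KeyError(f"non-existent config key: {key}")
        try:
            value = ast.literal_eval(raw)  # "512" -> 512, "0.4" -> 0.4
        except (ValueError, SyntaxError):
            value = raw  # non-literals such as file paths stay strings
        node[leaf] = value
    return cfg


if __name__ == "__main__":
    cfg = {"MODEL": {"WEIGHTS": ""}, "INPUT": {"MIN_SIZE_TEST": 800}}
    merge_from_list(cfg, ["MODEL.WEIGHTS", "output/model_0009999.pth",
                          "INPUT.MIN_SIZE_TEST", "512"])
    print(cfg)
```

An unknown key raises an error instead of silently creating a new entry. That strictness is what catches typos in `--opts`.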

Instance segmentation

python demo.py --config-file configs/coco/sparseinst/sparse_inst_r50vd_giam_aug.yaml --video-input ~/Movies/Videos/86277963_nb2-1-80.flv -c 0.4 --opts MODEL.WEIGHTS weights/sparse_inst_r50vd_giam_aug_8bc5b3.pth

Running a model with detectron2's new LazyConfig system

python3 demo_lazyconfig.py --config-file configs/new_baselines/panoptic_fpn_regnetx_0.4g.py --opts train.init_checkpoint=output/model_0004999.pth

Training on a dataset

python train_net.py --config-file configs/coco/darknet53.yaml --num-gpus 1

To train YOLOX, use the config file configs/coco/yolox_s.yaml.
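YOLOX is anchor-free: each grid cell predicts an offset from its own position plus a log-scale width and height. These are turned into pixel boxes using the feature map's stride. The sketch below mirrors the decode step in the official YOLOX code (`(xy + grid) * stride`, `exp(wh) * stride`) in plain Python. Whether this repo's YOLOX head decodes in exactly the same way is an assumption, and `decode_yolox` is a hypothetical name.

```python
import math


def decode_yolox(raw, stride, grid_w):
    """Decode per-cell predictions (tx, ty, tw, th) into (cx, cy, w, h) in pixels.

    `raw` is laid out row-major over a feature map with `grid_w` columns.
    """
    boxes = []
    for idx, (tx, ty, tw, th) in enumerate(raw):
        gy, gx = divmod(idx, grid_w)  # grid cell row / column
        cx = (tx + gx) * stride       # center = (offset + cell index) * stride
        cy = (ty + gy) * stride
        w = math.exp(tw) * stride     # size = exp(log-scale) * stride
        h = math.exp(th) * stride
        boxes.append((cx, cy, w, h))
    return boxes


if __name__ == "__main__":
    # 2x2 grid at stride 8, every cell predicting a centered 8x8 box
    print(decode_yolox([(0.5, 0.5, 0.0, 0.0)] * 4, stride=8, grid_w=2))
```

Because there are no anchor priors, the only hyperparameters in decoding are the strides of the feature levels (8, 16 and 32 in YOLOX).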

Exporting to ONNX, TensorRT and TVM

DETR

python export_onnx.py --config-file detr/config/file

SparseInst

python export_onnx.py --config-file configs/coco/sparseinst/sparse_inst_r50_giam_aug.yaml --video-input ~/Videos/a.flv --opts MODEL.WEIGHTS weights/sparse_inst_r50_giam_aug_2b7d68.pth INPUT.MIN_SIZE_TEST 512
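`INPUT.MIN_SIZE_TEST 512` sets the length of the shorter image side at test time, which fixes the input resolution seen by the exported model. The sketch below assumes the repo uses detectron2's standard `ResizeShortestEdge` test-time resize. It reproduces that transform's output-shape rule: scale the short side to the target, then shrink again if the long side exceeds `INPUT.MAX_SIZE_TEST` (1333 by default in detectron2, though a config may override it). `resize_shortest_edge_shape` is a hypothetical name.

```python
def resize_shortest_edge_shape(h, w, short_edge, max_size):
    """Return (new_h, new_w) after scaling the short side to `short_edge`,
    capping the long side at `max_size` (same rule as ResizeShortestEdge)."""
    scale = short_edge / min(h, w)
    if h < w:
        newh, neww = short_edge, scale * w
    else:
        newh, neww = scale * h, short_edge
    if max(newh, neww) > max_size:
        # long side too large: shrink both sides by the same factor
        scale = max_size / max(newh, neww)
        newh, neww = newh * scale, neww * scale
    return int(newh + 0.5), int(neww + 0.5)


if __name__ == "__main__":
    print(resize_shortest_edge_shape(1080, 1920, 512, 1333))  # → (512, 910)
```

For a 1080p video frame, lowering the short side from detectron2's default 800 to 512 cuts the pixel count to roughly 40%. That is usually why a smaller `MIN_SIZE_TEST` is chosen for faster TensorRT inference.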

See the original repository for the full workflow; every step is explained in detail there!

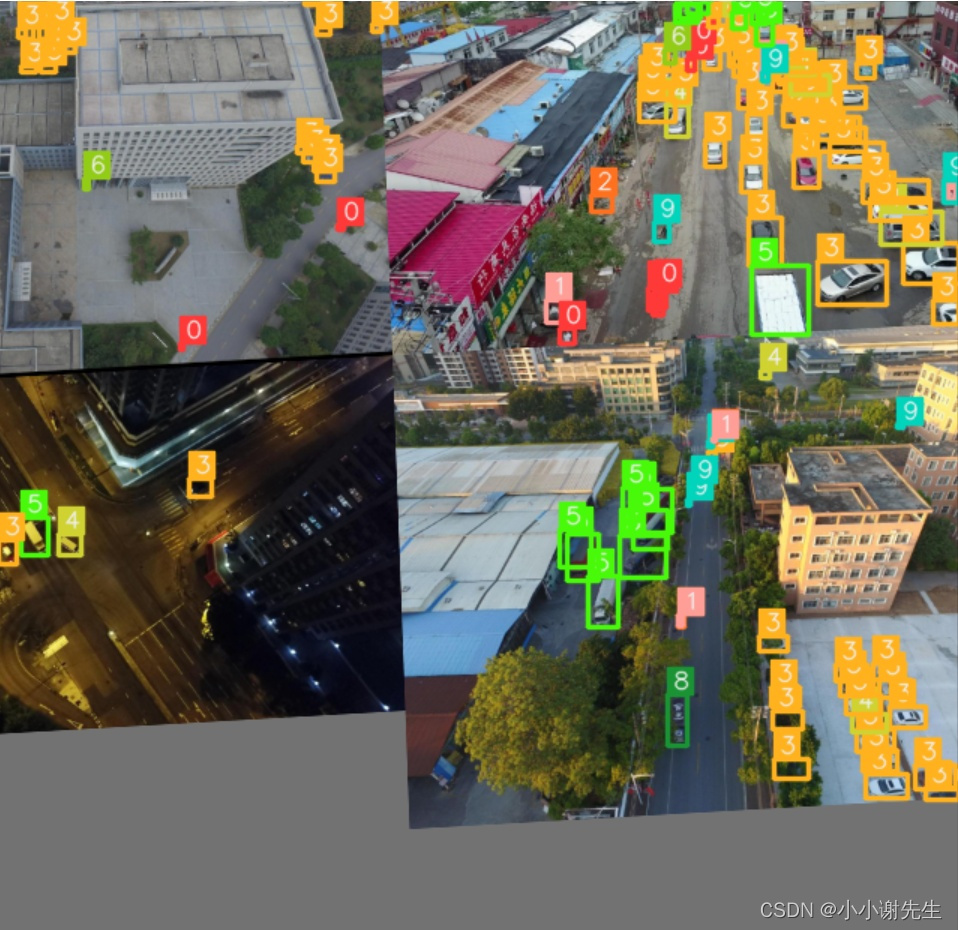

Detection results

References

[1] https://manaai.cn/aisolution_detail.html?id=7
[2] https://github.com/jinfagang/yolov7

Source: blog.csdn.net. Author: 小小谢先生. Copyright belongs to the original author; please contact the author before reposting.

Original article: blog.csdn.net/xiewenrui1996/article/details/124605570
