Exploring the Cornerstone of Cloud-Native Technology: Docker Containers for Beginners (4) [My Story with Cloud Native]

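❤️About the author: Top 5 in the Cloud Native & Cloud Computing track of the 2022 Rising Star Program (Season 3)🏅, Huawei Cloud Sharing Expert🏅, Promising Rising Star in the cloud-native field🏅

💛Blog home: my CSDN profile page🌞

💗Purpose: if you spot a mistake, please point it out. I'll keep improving these notes to help more Java enthusiasts get started, so we can all progress together!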


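This series has 7 posts in total:

- Exploring the Cornerstone of Cloud-Native Technology: Docker Containers for Beginners (1)

- Exploring the Cornerstone of Cloud-Native Technology: Docker Containers for Beginners (2)

- Exploring the Cornerstone of Cloud-Native Technology: Docker Containers for Beginners (3)

- Exploring the Cornerstone of Cloud-Native Technology: Docker Containers for Beginners (4) => split off into its own post because the previous one got too long

Once you have a reasonable grasp of Docker, you can move on to the advanced series, though I don't particularly recommend it.

- Exploring the Cornerstone of Cloud-Native Technology: Advanced Docker Containers (1)

- Exploring the Cornerstone of Cloud-Native Technology: Advanced Docker Containers (2)

- Exploring the Cornerstone of Cloud-Native Technology: Advanced Docker Containers (3) (depending on circumstances)

Spoiler: a future series will cover the cloud-native technology Kubernetes (k8s), which lets you manage Docker containers centrally, scale them up and down dynamically, and more.

After reading the series you'll have a solid understanding of Docker. You'll be able to use it confidently for containerized development, deploying microservices, Docker networking, and more. Let's dive in!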

What Is Cloud Native

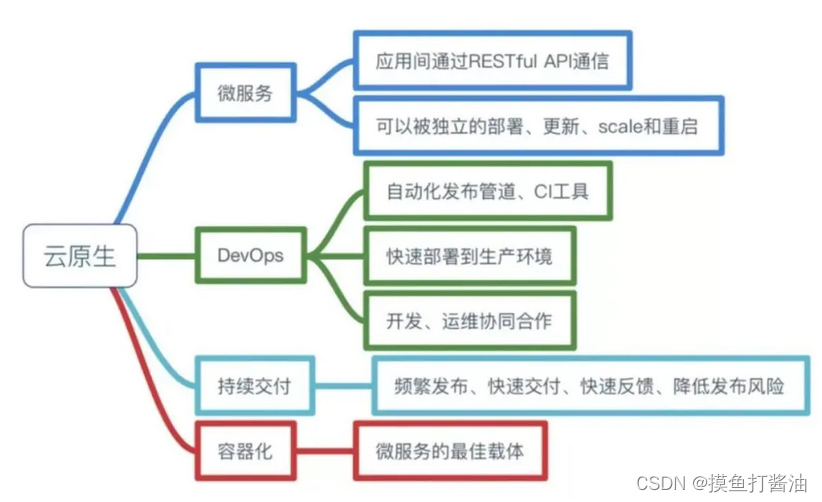

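Matt Stine of Pivotal first proposed the concept of cloud native (Cloud-Native) in 2013. In 2015, when cloud native was first being promoted, he listed several characteristics of cloud-native architecture in his book "Migrating to Cloud-Native Application Architectures": the Twelve-Factor App, microservices, self-service agile infrastructure, API-based collaboration, and antifragility. By 2017, in an interview with InfoQ, he had changed his framing. He then summarized cloud-native architecture as six traits: modular, observable, deployable, testable, replaceable, and disposable. Pivotal's latest official website boils cloud native down to four points: DevOps + continuous delivery + microservices + containers.

In short, an application that fits cloud-native architecture should:

- be containerized with an open-source stack (K8S + Docker);
- be built on a microservice architecture for flexibility and maintainability;
- rely on agile methods and DevOps for continuous iteration and automated operations;
- use cloud platform facilities for elastic scaling, dynamic scheduling, and better resource utilization.

(Excerpted from the official Huawei Cloud account on Zhihu.)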

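What Is Docker

- Docker is an open-source application container engine written in Go.

- Docker lets developers package an application together with its dependencies into a lightweight, portable container. That container can then be shipped to any popular Linux machine, and Docker can also be used for virtualization.

- Containers are fully sandboxed (container instances are isolated from one another), and their performance overhead is very low.

In short:

Docker is a high-performance container engine;

it can package local source code, configuration files, dependencies, and the environment into a container that runs anywhere;

installing software with Docker is very convenient, and the installed software is lean and easy to extend.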

Installing Common Software with Docker

Installing Ubuntu

- Pull the Ubuntu image

$ docker pull ubuntu

- Run Ubuntu

$ docker run -it --name MyUbuntu ubuntu

[root@aubin ~]# docker run -it --name MyUbuntu ubuntu

root@9cb5882e5c7d:/# ls

bin dev home lib32 libx32 mnt proc run srv tmp var

boot etc lib lib64 media opt root sbin sys usr
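After you exit the shell, MyUbuntu stops. Running the same docker run --name MyUbuntu command again then fails because the container name is already taken.

Below is a minimal sketch of a helper that re-attaches to an existing container and only creates it when it doesn't exist yet. The function name ubuntu_shell is hypothetical and not part of Docker.

```shell
# Hypothetical helper: open a shell in the named Ubuntu container,
# re-using the container when it already exists.
ubuntu_shell() {
  local name="${1:-MyUbuntu}"
  if docker container inspect "$name" >/dev/null 2>&1; then
    # The container exists (it stops when you exit its shell):
    # start it again and attach to its terminal
    docker start -ai "$name"
  else
    # First run: create the container with an interactive terminal
    docker run -it --name "$name" ubuntu
  fi
}
```

Usage: run ubuntu_shell, or ubuntu_shell OtherName for a different container. docker start -ai restarts the stopped container and attaches to it, so files you created inside it are still there.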

Installing FastDFS

$ docker search fastdfs

$ docker pull delron/fastdfs

$ docker images

$ docker run -dti --network=host --name tracker -v /var/fdfs/tracker:/var/fdfs -v /etc/localtime:/etc/localtime delron/fastdfs tracker

$ docker run -dti --network=host --name storage -e TRACKER_SERVER=192.168.184.132:22122 -v /var/fdfs/storage:/var/fdfs -v /etc/localtime:/etc/localtime delron/fastdfs storage #replace 192.168.184.132 with the IP of the host running the tracker

$ docker ps #look up the container ID

$ docker exec -it <container-id> /bin/bash

#The next two commands are optional; skip them to keep the default configuration

$ vi /etc/fdfs/storage.conf

$ vi /usr/local/nginx/conf/nginx.conf

$ firewall-cmd --zone=public --permanent --add-port=8888/tcp

$ firewall-cmd --zone=public --permanent --add-port=22122/tcp

$ firewall-cmd --zone=public --permanent --add-port=23000/tcp

#Restart the firewall so the permanent rules take effect

$ systemctl restart firewalld

#Restart the containers automatically (including when the Docker daemon starts)

$ docker update --restart=always tracker

$ docker update --restart=always storage
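The three firewall-cmd calls and the two docker update calls above differ only in their arguments, so they can be wrapped in loops. Below is a sketch that assumes the port list and the container names tracker and storage from this walkthrough; the function names are hypothetical.

It applies the permanent rules with firewall-cmd --reload instead of restarting firewalld. Either one makes the permanent rules take effect.

```shell
# Ports opened above: 8888 (HTTP via nginx), 22122 (tracker), 23000 (storage)
FDFS_PORTS="8888 22122 23000"

fdfs_open_ports() {
  for port in $FDFS_PORTS; do
    firewall-cmd --zone=public --permanent --add-port="${port}/tcp"
  done
  # Permanent rules only take effect after a reload (or a firewalld restart)
  firewall-cmd --reload
}

fdfs_enable_autostart() {
  # Restart both containers automatically, including after the Docker daemon restarts
  for name in tracker storage; do
    docker update --restart=always "$name"
  done
}
```

Run both as root: fdfs_open_ports && fdfs_enable_autostart.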

Installing RabbitMQ

$ docker pull rabbitmq #pull the latest RabbitMQ image from Docker Hub

$ docker run -d --hostname my-rabbit --name yblog-rabbit -p 15672:15672 -p 5672:5672 rabbitmq #run the RabbitMQ image

$ docker exec -it yblog-rabbit /bin/bash #enter the RabbitMQ container

$ rabbitmq-plugins enable rabbitmq_management #enable the RabbitMQ management plugin (web UI on port 15672)
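Right after docker run -d the broker may still be booting, so rabbitmq-plugins can fail if it runs too early. Below is a sketch that polls the node with rabbitmq-diagnostics ping, a CLI tool shipped in the official image. Once the node answers, it enables the management plugin without opening an interactive shell.

The function name rabbit_enable_ui and its retry defaults (30 attempts, 2 seconds apart) are assumptions.

```shell
# Hypothetical helper: wait until the broker answers, then enable the management UI
rabbit_enable_ui() {
  local name="${1:-yblog-rabbit}" tries="${2:-30}" i=0
  # rabbitmq-diagnostics ping succeeds once the node is up and reachable
  until docker exec "$name" rabbitmq-diagnostics -q ping >/dev/null 2>&1; do
    i=$((i + 1))
    if [ "$i" -ge "$tries" ]; then
      echo "RabbitMQ in $name did not respond after $tries attempts" >&2
      return 1
    fi
    sleep 2
  done
  docker exec "$name" rabbitmq-plugins enable rabbitmq_management
}
```

After it succeeds, the management UI should be reachable at http://<VM IP>:15672.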

Installing MySQL 5.7 (Production Environment)

Pull the MySQL image

$ docker pull mysql:5.7

Start MySQL with the log, data, and config directories mounted from the host

$ docker run -d -p 3306:3306 --privileged=true -v /bf/mysql/log:/var/log/mysql -v /bf/mysql/data:/var/lib/mysql -v /bf/mysql/conf:/etc/mysql/conf.d -e MYSQL_ROOT_PASSWORD=123456 --name mysql mysql:5.7
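On the first start the container initializes the data directory (mounted from /bf/mysql/data), so MySQL may need a while before it accepts connections. Below is a sketch that waits for it, assuming the container name mysql from the command above; the function name is hypothetical.

It relies on mysqladmin ping, which exits with 0 whenever the server is running, even if access is denied. It pings over TCP (-h 127.0.0.1) because, as far as I know, the image's entrypoint runs a temporary server with networking disabled during initialization. A socket ping could therefore succeed too early.

```shell
# Hypothetical helper: block until mysqld in the container accepts TCP connections
wait_for_mysql() {
  local name="${1:-mysql}" tries="${2:-60}" i=0
  # mysqladmin ping exits 0 as long as the server is up, even on "Access denied"
  until docker exec "$name" mysqladmin -h 127.0.0.1 ping --silent >/dev/null 2>&1; do
    i=$((i + 1))
    if [ "$i" -ge "$tries" ]; then
      echo "MySQL in $name is not ready after $tries attempts" >&2
      return 1
    fi
    sleep 2
  done
  echo "MySQL in $name is ready"
}
```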

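Create a my.cnf file in the conf directory on the host

- Without this config file you'll run into encoding problems (as shown below)

Check MySQL's character set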

mysql> show variables like 'character%';

+--------------------------+----------------------------+

| Variable_name | Value |

+--------------------------+----------------------------+

| character_set_client | latin1 |

| character_set_connection | latin1 |

| character_set_database | latin1 |

| character_set_filesystem | binary |

| character_set_results | latin1 |

| character_set_server | latin1 |

| character_set_system | utf8 |

| character_sets_dir | /usr/share/mysql/charsets/ |

+--------------------------+----------------------------+

8 rows in set (0.01 sec)

- As you can see, MySQL's default character set is latin1, which clearly won't work for us

- We need to change it to utf8 or utf8mb4

my.cnf file:

[client]

default_character_set=utf8

[mysqld]

collation_server=utf8_general_ci

character_set_server=utf8

Use the my.cnf file to fix the encoding problem

[root@aubin ~]# cd /bf/mysql/conf

[root@aubin conf]# vim my.cnf

- Then paste the my.cnf content above into it.
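Instead of pasting into vim, the same my.cnf can be written non-interactively with a heredoc. Below is a sketch, assuming the host path /bf/mysql/conf that the docker run command mounts at /etc/mysql/conf.d; the function name write_mysql_cnf is hypothetical.

It keeps utf8 to match the output shown in this post. Swap in utf8mb4 and utf8mb4_general_ci if you need full Unicode (for example emoji).

```shell
# Hypothetical helper: write my.cnf into the host directory mounted at /etc/mysql/conf.d
write_mysql_cnf() {
  local conf_dir="${1:-/bf/mysql/conf}"
  mkdir -p "$conf_dir"
  # Quoted 'EOF' keeps the content literal (no variable expansion)
  cat > "$conf_dir/my.cnf" <<'EOF'
[client]
default_character_set=utf8

[mysqld]
collation_server=utf8_general_ci
character_set_server=utf8
EOF
}
```

Usage: write_mysql_cnf && docker restart mysql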

Restart the container

$ docker restart mysql

Log in to MySQL (from inside the container, as described at the end of this section)

mysql -u root -p

Check the character set again

mysql> show variables like 'character%';

+--------------------------+----------------------------+

| Variable_name | Value |

+--------------------------+----------------------------+

| character_set_client | utf8 |

| character_set_connection | utf8 |

| character_set_database | utf8 |

| character_set_filesystem | binary |

| character_set_results | utf8 |

| character_set_server | utf8 |

| character_set_system | utf8 |

| character_sets_dir | /usr/share/mysql/charsets/ |

+--------------------------+----------------------------+

8 rows in set (0.01 sec)

- Done!

Entering the MySQL client and checking the character set

show variables like 'character%';

Open a shell in the MySQL container

$ docker exec -it mysql /bin/bash

Log in

mysql -u <your MySQL username> -p

- Press Enter.

- Then type your password.
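The login and the character-set check can also be done in one step from the host, without an interactive shell. Below is a sketch that assumes the container name mysql, with the root password in the environment variable MYSQL_ROOT_PASSWORD.

The password is passed to the client through MYSQL_PWD, which the 5.7 client reads, so it stays out of the mysql command's arguments. The function name is hypothetical.

```shell
# Hypothetical helper: print MySQL's character-set variables from the host
mysql_charset() {
  local name="${1:-mysql}"
  if [ -z "${MYSQL_ROOT_PASSWORD:-}" ]; then
    echo "set MYSQL_ROOT_PASSWORD first" >&2
    return 1
  fi
  # The MySQL 5.7 client takes the password from MYSQL_PWD,
  # so it does not appear in the mysql command's arguments
  docker exec -e MYSQL_PWD="$MYSQL_ROOT_PASSWORD" "$name" \
    mysql -uroot -e "show variables like 'character%';"
}
```

Usage: MYSQL_ROOT_PASSWORD=123456 mysql_charset, using the password from the docker run command above. The output is the same table shown earlier.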

Installing Redis 6.2.6 (Production Environment)

- Pull the Redis image

$ docker pull redis:6.2.6

- Create the Redis config directory on the host

$ mkdir /home/redis

$ mkdir /home/redis/data

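$ vim /home/redis/redis.conf

- Create redis.conf in this directory (/home/redis) with the following content. Note that requirepass is left without a value here: put your own password after it, or comment the line out if you don't want password authentication.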

bind 0.0.0.0

protected-mode no

# Accept connections on the specified port, default is 6379 (IANA #815344).

# If port 0 is specified Redis will not listen on a TCP socket.

port 6379

tcp-backlog 511

# Close the connection after a client is idle for N seconds (0 to disable)

timeout 0

tcp-keepalive 300

daemonize no

supervised no

pidfile /var/run/redis_6379.pid

loglevel notice

logfile "/data/redis.log"

databases 16

always-show-logo yes

save 900 1

save 300 10

save 60 10000

stop-writes-on-bgsave-error yes

rdbcompression yes

rdbchecksum yes

# The filename where to dump the DB

dbfilename dump.rdb

dir /data

replica-serve-stale-data yes

replica-read-only yes

repl-diskless-sync no

repl-diskless-sync-delay 5

repl-disable-tcp-nodelay no

replica-priority 100

# A Redis master is able to list the address and port of the attached

# replicas in different ways. For example the "INFO replication" section

# offers this information, which is used, among other tools, by

# Redis Sentinel in order to discover replica instances.

# Another place where this info is available is in the output of the

# "ROLE" command of a master.

#

# The listed IP and address normally reported by a replica is obtained

# in the following way:

#

# IP: The address is auto detected by checking the peer address

# of the socket used by the replica to connect with the master.

#

# Port: The port is communicated by the replica during the replication

# handshake, and is normally the port that the replica is using to

# listen for connections.

#

# However when port forwarding or Network Address Translation (NAT) is

# used, the replica may be actually reachable via different IP and port

# pairs. The following two options can be used by a replica in order to

# report to its master a specific set of IP and port, so that both INFO

# and ROLE will report those values.

#

# There is no need to use both the options if you need to override just

# the port or the IP address.

#

# replica-announce-ip 5.5.5.5

# replica-announce-port 1234

################################## SECURITY ###################################

# Require clients to issue AUTH <PASSWORD> before processing any other

# commands. This might be useful in environments in which you do not trust

# others with access to the host running redis-server.

#

# This should stay commented out for backward compatibility and because most

# people do not need auth (e.g. they run their own servers).

#

# Warning: since Redis is pretty fast an outside user can try up to

# 150k passwords per second against a good box. This means that you should

# use a very strong password otherwise it will be very easy to break.

#

requirepass

# Command renaming.

#

# It is possible to change the name of dangerous commands in a shared

# environment. For instance the CONFIG command may be renamed into something

# hard to guess so that it will still be available for internal-use tools

# but not available for general clients.

#

# Example:

#

# rename-command CONFIG b840fc02d524045429941cc15f59e41cb7be6c52

#

# It is also possible to completely kill a command by renaming it into

# an empty string:

#

# rename-command CONFIG ""

#

# Please note that changing the name of commands that are logged into the

# AOF file or transmitted to replicas may cause problems.

################################### CLIENTS ####################################

# Set the max number of connected clients at the same time. By default

# this limit is set to 10000 clients, however if the Redis server is not

# able to configure the process file limit to allow for the specified limit

# the max number of allowed clients is set to the current file limit

# minus 32 (as Redis reserves a few file descriptors for internal uses).

#

# Once the limit is reached Redis will close all the new connections sending

# an error 'max number of clients reached'.

#

# maxclients 10000

############################## MEMORY MANAGEMENT ################################

# Set a memory usage limit to the specified amount of bytes.

# When the memory limit is reached Redis will try to remove keys

# according to the eviction policy selected (see maxmemory-policy).

#

# If Redis can't remove keys according to the policy, or if the policy is

# set to 'noeviction', Redis will start to reply with errors to commands

# that would use more memory, like SET, LPUSH, and so on, and will continue

# to reply to read-only commands like GET.

#

# This option is usually useful when using Redis as an LRU or LFU cache, or to

# set a hard memory limit for an instance (using the 'noeviction' policy).

#

# WARNING: If you have replicas attached to an instance with maxmemory on,

# the size of the output buffers needed to feed the replicas are subtracted

# from the used memory count, so that network problems / resyncs will

# not trigger a loop where keys are evicted, and in turn the output

# buffer of replicas is full with DELs of keys evicted triggering the deletion

# of more keys, and so forth until the database is completely emptied.

#

# In short... if you have replicas attached it is suggested that you set a lower

# limit for maxmemory so that there is some free RAM on the system for replica

# output buffers (but this is not needed if the policy is 'noeviction').

#

# maxmemory <bytes>

# MAXMEMORY POLICY: how Redis will select what to remove when maxmemory

# is reached. You can select among five behaviors:

#

# volatile-lru -> Evict using approximated LRU among the keys with an expire set.

# allkeys-lru -> Evict any key using approximated LRU.

# volatile-lfu -> Evict using approximated LFU among the keys with an expire set.

# allkeys-lfu -> Evict any key using approximated LFU.

# volatile-random -> Remove a random key among the ones with an expire set.

# allkeys-random -> Remove a random key, any key.

# volatile-ttl -> Remove the key with the nearest expire time (minor TTL)

# noeviction -> Don't evict anything, just return an error on write operations.

#

# LRU means Least Recently Used

# LFU means Least Frequently Used

#

# Both LRU, LFU and volatile-ttl are implemented using approximated

# randomized algorithms.

#

# Note: with any of the above policies, Redis will return an error on write

# operations, when there are no suitable keys for eviction.

#

# At the date of writing these commands are: set setnx setex append

# incr decr rpush lpush rpushx lpushx linsert lset rpoplpush sadd

# sinter sinterstore sunion sunionstore sdiff sdiffstore zadd zincrby

# zunionstore zinterstore hset hsetnx hmset hincrby incrby decrby

# getset mset msetnx exec sort

#

# The default is:

#

# maxmemory-policy noeviction

lazyfree-lazy-eviction no

lazyfree-lazy-expire no

lazyfree-lazy-server-del no

replica-lazy-flush no

appendonly yes

# The name of the append only file (default: "appendonly.aof")

appendfilename "appendonly.aof"

appendfsync everysec

no-appendfsync-on-rewrite no

auto-aof-rewrite-percentage 100

auto-aof-rewrite-min-size 64mb

aof-load-truncated yes

aof-use-rdb-preamble yes

lua-time-limit 5000

slowlog-log-slower-than 10000

# There is no limit to this length. Just be aware that it will consume memory.

# You can reclaim memory used by the slow log with SLOWLOG RESET.

slowlog-max-len 128

latency-monitor-threshold 0

notify-keyspace-events ""

hash-max-ziplist-entries 512

hash-max-ziplist-value 64

list-max-ziplist-size -2

list-compress-depth 0

set-max-intset-entries 512

zset-max-ziplist-entries 128

zset-max-ziplist-value 64

hll-sparse-max-bytes 3000

stream-node-max-bytes 4096

stream-node-max-entries 100

activerehashing yes

client-output-buffer-limit normal 0 0 0

client-output-buffer-limit replica 256mb 64mb 60

client-output-buffer-limit pubsub 32mb 8mb 60

hz 10

dynamic-hz yes

# When a child rewrites the AOF file, if the following option is enabled

# the file will be fsync-ed every 32 MB of data generated. This is useful

# in order to commit the file to the disk more incrementally and avoid

# big latency spikes.

aof-rewrite-incremental-fsync yes

# When redis saves RDB file, if the following option is enabled

# the file will be fsync-ed every 32 MB of data generated. This is useful

# in order to commit the file to the disk more incrementally and avoid

# big latency spikes.

rdb-save-incremental-fsync yes

- Run Redis

$ docker run --restart always -d -v /home/redis/redis.conf:/usr/local/etc/redis/redis.conf -v /home/redis/data:/data --name redis -p 6379:6379 redis:6.2.6 redis-server /usr/local/etc/redis/redis.conf

- Enter the Redis container

$ docker exec -it redis /bin/bash

- Open redis-cli (if you set a password with requirepass, run AUTH <your password> first)

root@5d9912d20e4a:/data# redis-cli

127.0.0.1:6379> set k1 v1

OK

Check the Redis version

root@5d9912d20e4a:/data# redis-server -v

Redis server v=6.2.6 sha=00000000:0 malloc=jemalloc-5.1.0 bits=64 build=b61f37314a089f19
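To check from the host that the server is up and that the password (if you set requirepass) is accepted, redis-cli ping should reply PONG. Below is a sketch that assumes the container name redis from above and a hypothetical REDIS_PASSWORD variable.

The password goes through REDISCLI_AUTH, an environment variable that redis-cli reads, instead of the -a flag.

```shell
# Hypothetical helper: succeed only if the Redis container answers PONG
redis_ping() {
  local name="${1:-redis}" reply
  if [ -n "${REDIS_PASSWORD:-}" ]; then
    # redis-cli reads the password from REDISCLI_AUTH, keeping it off the command line
    reply=$(docker exec -e REDISCLI_AUTH="$REDIS_PASSWORD" "$name" redis-cli ping)
  else
    reply=$(docker exec "$name" redis-cli ping)
  fi
  [ "$reply" = "PONG" ]
}
```

If it fails, check docker logs redis for startup errors such as a bad config line. Check /home/redis/data/redis.log too, since the config above sends the server log to /data/redis.log.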

Installing the Latest Nginx

- Pull the image

$ docker pull nginx

- Run the container

$ docker run --name mynginx -p 80:80 -d nginx

- Open nginx in a browser at http://<VM IP>:80
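The default page is served from /usr/share/nginx/html, the web root of the official image. Below is a sketch that serves your own page from a host directory instead, assuming a hypothetical host path /home/nginx/html (bind mounts need an absolute path). The function name is also hypothetical.

Because the name mynginx is already used by the container above, remove that container first (docker rm -f mynginx) or change the name.

```shell
# Hypothetical helper: run nginx serving static files from a host directory
run_nginx_static() {
  local html_dir="${1:-/home/nginx/html}"
  mkdir -p "$html_dir"
  # Create a placeholder page only if the directory has no index.html yet
  if [ ! -f "$html_dir/index.html" ]; then
    echo '<h1>Hello from nginx in Docker</h1>' > "$html_dir/index.html"
  fi
  # :ro mounts the directory read-only inside the container
  docker run --name mynginx -p 80:80 -v "$html_dir":/usr/share/nginx/html:ro -d nginx
}
```

Editing files under the host directory changes the site immediately, with no need to rebuild or restart the container.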

Installing the Tomcat Server

- Pull the latest Tomcat image

$ docker pull tomcat

- Run the container

[root@aubin ~]# docker run --name mytomcat -p 8080:8080 -v $PWD/test:/usr/local/tomcat/webapps/test -d tomcat

a956f63b4aa08014e8584e2f8f9b04cf2603c0e7144f917bb146b947492f2419

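Latest Tomcat installed with Docker can't be accessed

At this point you'll find that the Tomcat page can't be reached (you get a 404). That's because the webapps directory contains none of Tomcat's default apps, so we need to do the following.

- Copy the contents of webapps.dist into webapps (webapps.dist contains Tomcat's home page)

Enter the container with docker exec -it mytomcat /bin/bash, then use pwd to check the current directory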

root@a956f63b4aa0:/usr/local/tomcat# pwd

/usr/local/tomcat

Copy the files (the key step)

cp -r webapps.dist/* ./webapps

- Finally, delete webapps.dist; it's no longer needed.

rm -rf webapps.dist

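- OK, after running the commands above you're done! Refresh http://<VM IP>:8080 in the browser to see the Tomcat home page.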
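The copy step can also be done from the host in one command, without opening a shell in the container. Run it instead of the interactive steps, that is, before webapps.dist is deleted.

The sketch below assumes the container name mytomcat from the docker run command above. /usr/local/tomcat is the image's Tomcat home, as the pwd output shows. The function name is hypothetical.

```shell
# Hypothetical helper: restore Tomcat's default web apps inside the container
tomcat_restore_webapps() {
  local name="${1:-mytomcat}"
  # sh -c runs the copy inside the container; "dir/." copies the directory's contents
  docker exec "$name" sh -c 'cp -r /usr/local/tomcat/webapps.dist/. /usr/local/tomcat/webapps/'
}
```

Copying webapps.dist/. rather than webapps.dist/* also picks up hidden files. Keep in mind that these changes live in the container's writable layer and are lost if the container is removed and recreated.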

Installing MongoDB

- Pull the image

$ docker pull mongo:latest

- Run the MongoDB container

$ docker run -itd --name mongo -p 27017:27017 mongo --auth

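- --auth: clients must authenticate with a username and password to use the database service.

$ docker exec -it mongo mongo admin #on images 6.0 and later the legacy mongo shell was removed; use mongosh admin instead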

# Create a user named admin with password 123456.

> db.createUser({ user:'admin',pwd:'123456',roles:[ { role:'userAdminAnyDatabase', db: 'admin'},"readWriteAnyDatabase"]});

# Try authenticating with the user created above.

> db.auth('admin', '123456')
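As an alternative, the official mongo image can create the admin user on first start through the MONGO_INITDB_ROOT_USERNAME and MONGO_INITDB_ROOT_PASSWORD environment variables. Setting them also turns authentication on, so the manual createUser step isn't needed. The variables only apply when the data directory is empty, which is the case for a new container without volumes.

Below is a sketch that assumes the password comes from a hypothetical MONGO_ADMIN_PASSWORD variable; the function names are also hypothetical. Some caveats:

- The user created this way gets the root role rather than the two roles granted above.
- Remove the earlier mongo container first, since the name is reused.
- The check uses mongosh, the shell shipped in images from MongoDB 6.0 on.

```shell
# Hypothetical helper: start MongoDB with a root user created on first initialization
run_mongo_with_root() {
  if [ -z "${MONGO_ADMIN_PASSWORD:-}" ]; then
    echo "set MONGO_ADMIN_PASSWORD first" >&2
    return 1
  fi
  docker run -d --name mongo -p 27017:27017 \
    -e MONGO_INITDB_ROOT_USERNAME=admin \
    -e MONGO_INITDB_ROOT_PASSWORD="$MONGO_ADMIN_PASSWORD" \
    mongo:latest
}

# Hypothetical helper: authenticate as admin and run the server's ping command
mongo_ping() {
  docker exec mongo mongosh --quiet -u admin -p "$MONGO_ADMIN_PASSWORD" \
    --authenticationDatabase admin --eval 'db.runCommand({ ping: 1 }).ok'
}
```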

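❤️💛🧡That's it for this chapter. See you in the next one (the advanced series)❤️💛🧡

[My Story with Cloud Native] prize essay contest now under way: https://bbs.huaweicloud.com/blogs/345260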
