Hadoop Quick Start, Chapter 2: Distributed Cluster (Section 4: Setting Up the Development Environment)

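[Abstract] Hadoop Quick Start, Chapter 2: Distributed Cluster. Add the dependency: `<dependency> <groupId>org.apache.hadoop</groupId> <artifactId>hadoop-client</artifactId> <version>2.7.3</version></dependency>`. You can first install [Big Data Tools]; a restart is required after installation. My personal advice is to first...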

Add the dependency (`org.apache.hadoop:hadoop-client:2.7.3`):

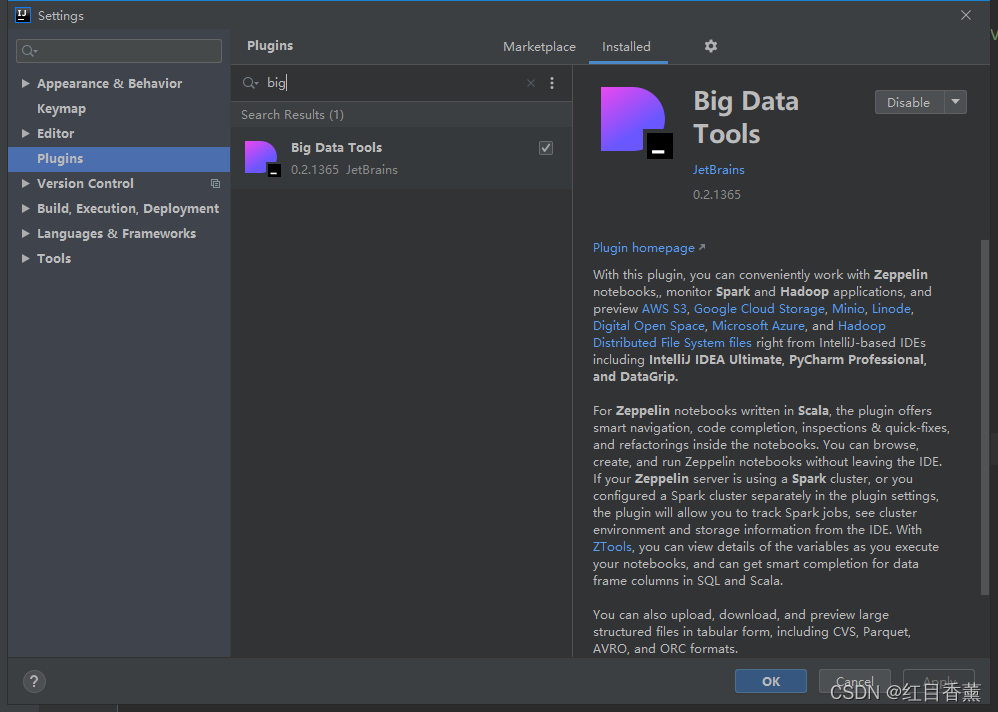

You can first install the Big Data Tools plugin; the IDE must be restarted after installation.

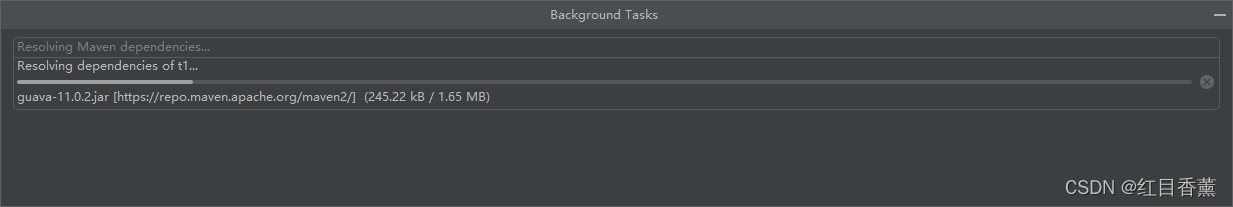

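Personal tip: switch the mirror to a domestic (China-based) one first. I didn't; I just ran the update directly, played several rounds of Lianliankan (a tile-matching game), and it still hadn't finished downloading.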

Create a test class:

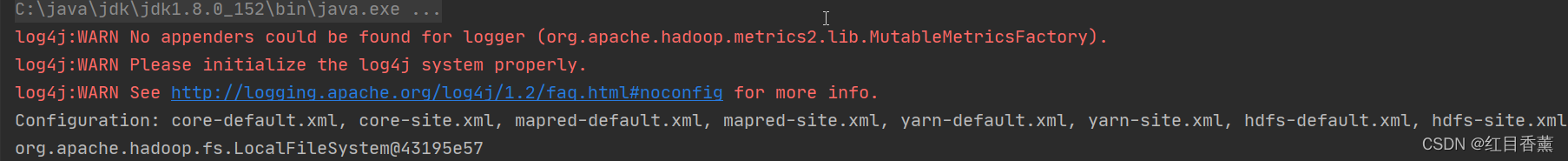

Output:

Configuration: core-default.xml, core-site.xml, mapred-default.xml, mapred-site.xml, yarn-default.xml, yarn-site.xml, hdfs-default.xml, hdfs-site.xml

org.apache.hadoop.fs.LocalFileSystem@43195e57
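The test class is only shown as a screenshot. A minimal sketch that prints the same two kinds of lines could look like the following; the class name `HdfsClientTest` and the helper `openFileSystem` are hypothetical. It assumes no `core-site.xml` is on the classpath, so `fs.defaultFS` keeps its default value `file:///` and `FileSystem.get` hands back a `LocalFileSystem`, which is consistent with the output above.

```java
import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;

// Hypothetical class name; the original test class only appears in a screenshot.
class HdfsClientTest {

    // Resolves the FileSystem named by fs.defaultFS. With no core-site.xml on the
    // classpath the default is file:///, so this yields a LocalFileSystem.
    static FileSystem openFileSystem(Configuration conf) throws IOException {
        return FileSystem.get(conf);
    }

    public static void main(String[] args) throws IOException {
        Configuration conf = new Configuration();
        FileSystem fs = openFileSystem(conf);
        System.out.println(conf); // the configuration resources that were registered
        System.out.println(fs);   // the concrete FileSystem implementation
    }
}
```

To work against a real cluster, `fs.defaultFS` would instead point at the NameNode's `hdfs://` address, either in `core-site.xml` or via `conf.set("fs.defaultFS", ...)`; the address depends on your cluster.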

File operations:

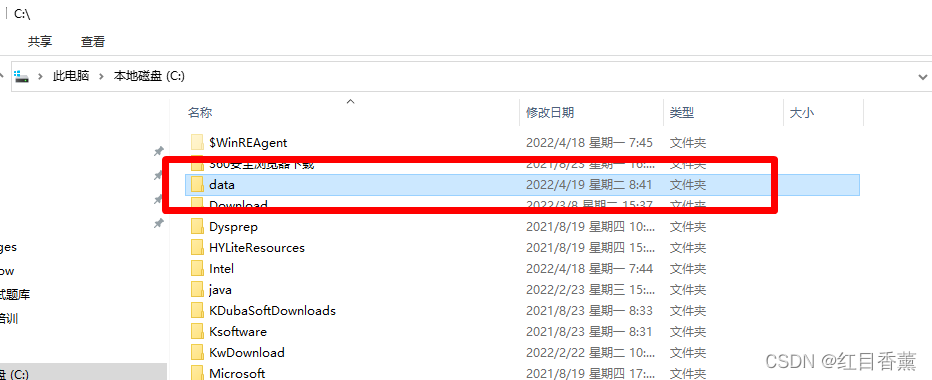

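mkdirs: create a directory

Because the client is using the local file system (note the `LocalFileSystem` in the output above), an absolute path is resolved against the root of the current drive, so the directory ends up at the root of the C: drive.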

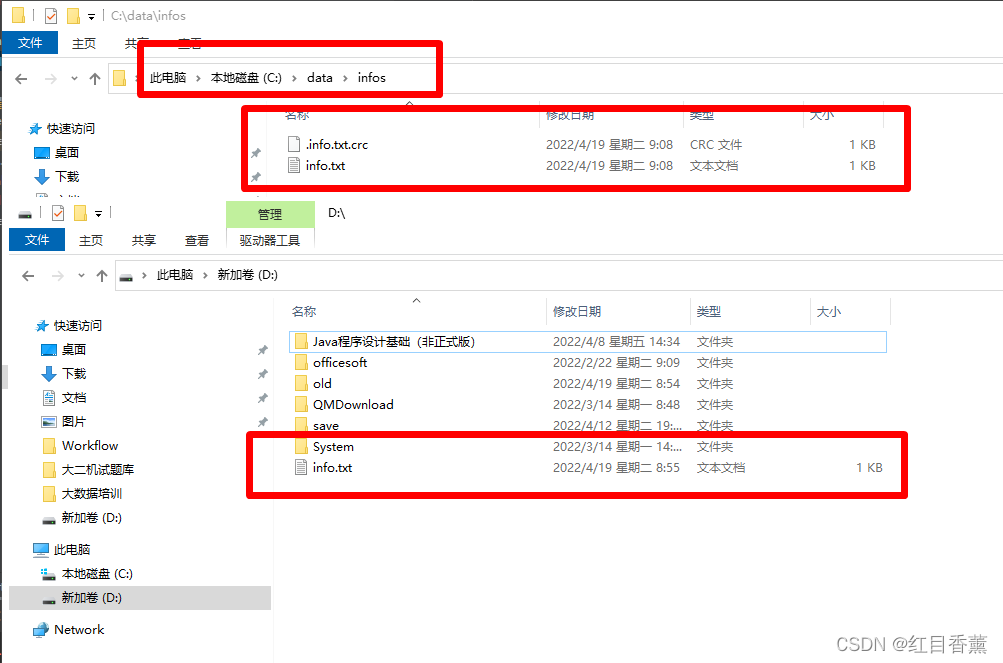
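A minimal sketch of the `mkdirs` call, assuming a `FileSystem` obtained as in the test class; the class `MkdirsExample` and the path `/data/input` are hypothetical.

```java
import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

// Hypothetical class name, for illustration only.
class MkdirsExample {

    // Creates the directory plus any missing parents (like `mkdir -p`).
    // Returns true on success, including when the directory already exists.
    static boolean createDir(FileSystem fs, String dir) throws IOException {
        return fs.mkdirs(new Path(dir));
    }

    public static void main(String[] args) throws IOException {
        FileSystem fs = FileSystem.get(new Configuration());
        // "/data/input" is a hypothetical path. With the local file system on Windows,
        // an absolute path like this is created under the current drive's root.
        System.out.println(createDir(fs, "/data/input"));
    }
}
```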

copyFromLocalFile: copy a file to the server (simulated locally here)

Local result:

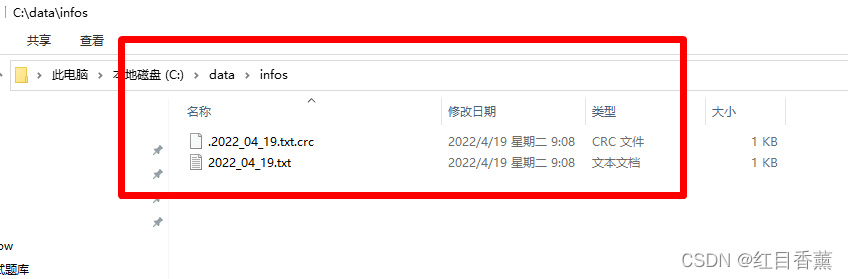
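A minimal sketch of the upload step; the class `UploadExample` is hypothetical, and the source and target paths come from the command line rather than being hard-coded. The four-argument overload of `copyFromLocalFile` makes the "keep the source" and "overwrite the target" choices explicit.

```java
import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

// Hypothetical class name, for illustration only.
class UploadExample {

    // Copies a file from the client's local disk into the target FileSystem.
    // delSrc = false keeps the local original; overwrite = true replaces an existing target.
    static void upload(FileSystem fs, String localSrc, String dst) throws IOException {
        fs.copyFromLocalFile(false, true, new Path(localSrc), new Path(dst));
    }

    public static void main(String[] args) throws IOException {
        // Usage: java UploadExample <local-file> <target-path>
        FileSystem fs = FileSystem.get(new Configuration());
        upload(fs, args[0], args[1]);
    }
}
```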

rename: rename a file

Local result:

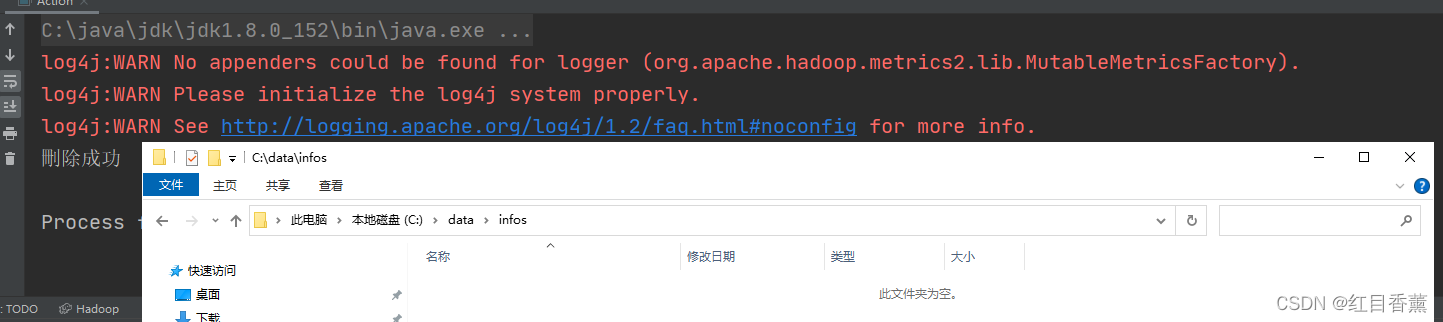
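A minimal sketch of `rename`; the class `RenameExample` is hypothetical. The returned boolean is worth checking, because a failed rename may be reported as `false` instead of an exception.

```java
import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

// Hypothetical class name, for illustration only.
class RenameExample {

    // Renames (or moves) a file or directory; returns true on success.
    static boolean renamePath(FileSystem fs, String from, String to) throws IOException {
        return fs.rename(new Path(from), new Path(to));
    }

    public static void main(String[] args) throws IOException {
        // Usage: java RenameExample <old-path> <new-path>
        FileSystem fs = FileSystem.get(new Configuration());
        System.out.println(renamePath(fs, args[0], args[1]));
    }
}
```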

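deleteOnExit: delete a file (the path is only marked; it is actually removed when the FileSystem is closed or the JVM exits)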

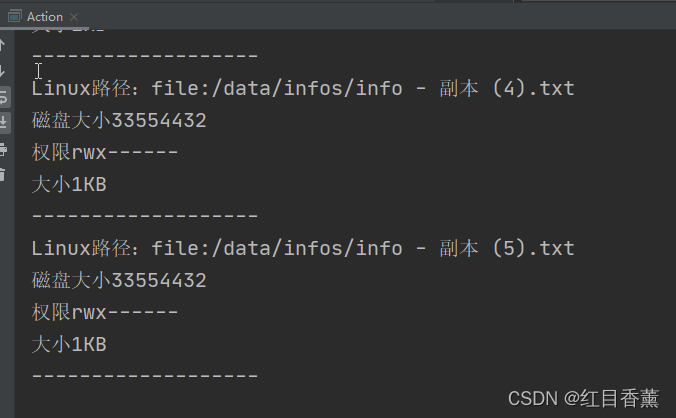
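Because `deleteOnExit` only records the path, the deletion happens when that `FileSystem` is closed. A minimal sketch contrasting it with the immediate `delete(path, true)`; the class `DeleteExample` and its helper names are hypothetical.

```java
import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

// Hypothetical class name, for illustration only.
class DeleteExample {

    // Only marks the path: it is deleted when this FileSystem is closed
    // (open FileSystems are also closed at JVM shutdown). Returns false if the path is missing.
    static boolean markForDeletion(FileSystem fs, String path) throws IOException {
        return fs.deleteOnExit(new Path(path));
    }

    // Deletes immediately; recursive = true is required for non-empty directories.
    static boolean deleteNow(FileSystem fs, String path) throws IOException {
        return fs.delete(new Path(path), true);
    }

    public static void main(String[] args) throws IOException {
        // newInstance avoids closing the shared, cached instance returned by FileSystem.get.
        FileSystem fs = FileSystem.newInstance(new Configuration());
        try {
            System.out.println(markForDeletion(fs, args[0]));
        } finally {
            fs.close(); // the marked path is deleted here
        }
    }
}
```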

View directory information:

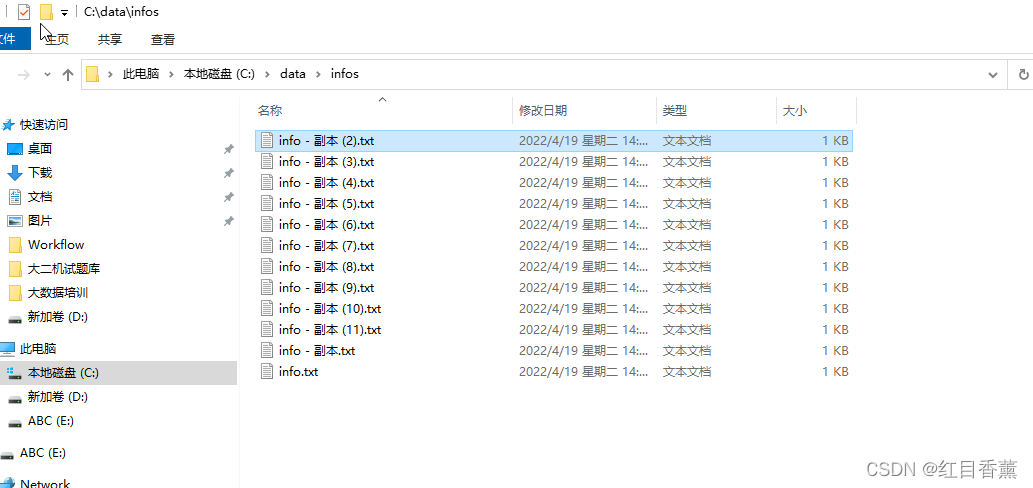

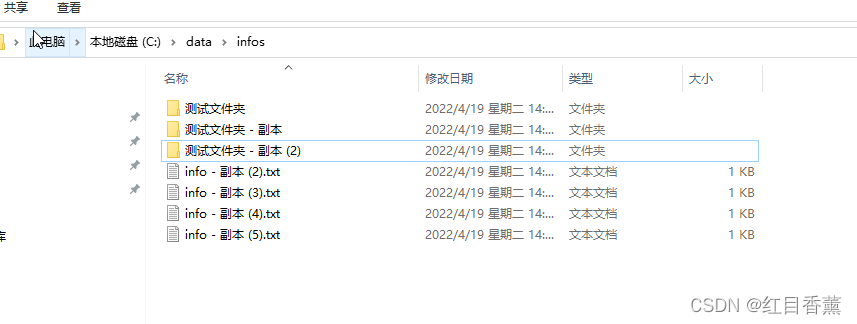
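Directory and file information is available through `getFileStatus`, which returns a `FileStatus`. A minimal sketch of reading its main fields; the class `StatusExample` and the output format are hypothetical, and the fields shown in the screenshots may differ.

```java
import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileStatus;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

// Hypothetical class name, for illustration only.
class StatusExample {

    // Summarizes the metadata kept for a file or directory.
    // getFileStatus throws FileNotFoundException if the path does not exist.
    static String describe(FileSystem fs, String path) throws IOException {
        FileStatus st = fs.getFileStatus(new Path(path));
        return String.format(
            "path=%s dir=%b len=%d replication=%d blockSize=%d owner=%s permission=%s modified=%d",
            st.getPath(), st.isDirectory(), st.getLen(), st.getReplication(),
            st.getBlockSize(), st.getOwner(), st.getPermission(), st.getModificationTime());
    }

    public static void main(String[] args) throws IOException {
        FileSystem fs = FileSystem.get(new Configuration());
        System.out.println(describe(fs, args.length > 0 ? args[0] : "/data/"));
    }
}
```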

Create some test files:

Iterate over all files under `/data/`:

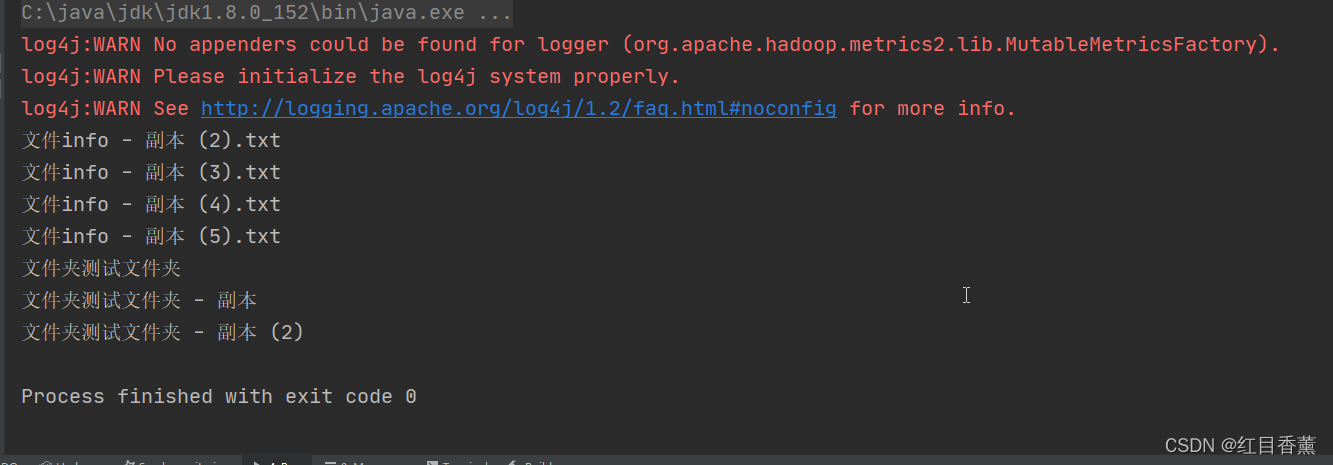
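A minimal sketch of iterating over every file under `/data/` with `listFiles(path, true)`; the class `ListFilesExample` is hypothetical. `listFiles` returns only files, descending into subdirectories when `recursive` is `true`.

```java
import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.LocatedFileStatus;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.fs.RemoteIterator;

// Hypothetical class name, for illustration only.
class ListFilesExample {

    // Collects the names of all files in the subtree; directories are walked, not returned.
    static List<String> listAllFiles(FileSystem fs, String dir) throws IOException {
        List<String> names = new ArrayList<>();
        RemoteIterator<LocatedFileStatus> it = fs.listFiles(new Path(dir), true);
        while (it.hasNext()) {
            names.add(it.next().getPath().getName());
        }
        return names;
    }

    public static void main(String[] args) throws IOException {
        FileSystem fs = FileSystem.get(new Configuration());
        RemoteIterator<LocatedFileStatus> it = fs.listFiles(new Path("/data/"), true);
        while (it.hasNext()) {
            LocatedFileStatus st = it.next();
            // Each LocatedFileStatus also carries the file's block locations.
            System.out.println(st.getPath() + " blocks=" + st.getBlockLocations().length);
        }
    }
}
```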

listStatus: iterate over files and directories:

Code:
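A minimal sketch of traversing both files and directories with `listStatus`. Since `listStatus` returns only the direct children of a directory, the walk recurses explicitly; the class `ListStatusExample` and the `[dir]`/`[file]` output format are hypothetical.

```java
import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileStatus;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

// Hypothetical class name, for illustration only.
class ListStatusExample {

    // Appends one line per entry, indented by depth, and recurses into each directory.
    static void walk(FileSystem fs, Path dir, String indent, List<String> out) throws IOException {
        for (FileStatus st : fs.listStatus(dir)) {
            out.add(indent + (st.isDirectory() ? "[dir] " : "[file] ") + st.getPath().getName());
            if (st.isDirectory()) {
                walk(fs, st.getPath(), indent + "  ", out);
            }
        }
    }

    public static void main(String[] args) throws IOException {
        FileSystem fs = FileSystem.get(new Configuration());
        List<String> lines = new ArrayList<>();
        walk(fs, new Path("/data/"), "", lines);
        lines.forEach(System.out::println);
    }
}
```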

Result:

Get information about all nodes (this cannot be seen on Windows)
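Node information comes from the NameNode, so it is only available when the client is actually connected to HDFS through a `DistributedFileSystem`. That would plausibly explain why nothing appears in a local Windows setup, where `FileSystem.get` returns a `LocalFileSystem`. A minimal sketch using `DistributedFileSystem.getDataNodeStats()`; the class `DataNodeExample` is hypothetical, and a real run assumes a `core-site.xml` on the classpath pointing `fs.defaultFS` at the cluster.

```java
import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.hdfs.DistributedFileSystem;
import org.apache.hadoop.hdfs.protocol.DatanodeInfo;

// Hypothetical class name, for illustration only.
class DataNodeExample {

    // Returns the DataNode host names, or an empty list when fs is not HDFS.
    static List<String> dataNodeHosts(FileSystem fs) throws IOException {
        List<String> hosts = new ArrayList<>();
        if (!(fs instanceof DistributedFileSystem)) {
            return hosts; // e.g. LocalFileSystem: there are no DataNodes to report
        }
        for (DatanodeInfo dn : ((DistributedFileSystem) fs).getDataNodeStats()) {
            hosts.add(dn.getHostName());
        }
        return hosts;
    }

    public static void main(String[] args) throws IOException {
        // Assumes core-site.xml on the classpath points fs.defaultFS at the cluster.
        FileSystem fs = FileSystem.get(new Configuration());
        List<String> hosts = dataNodeHosts(fs);
        if (hosts.isEmpty()) {
            System.out.println("No DataNode info (file system: " + fs.getUri() + ")");
        }
        hosts.forEach(System.out::println);
    }
}
```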

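HDFS design characteristics

- Can store very large files
- Streaming data access
- Runs on commodity hardware
- Not suited to low-latency data access
- Not suited to storing large numbers of small files
- Cannot efficiently support multiple writers or arbitrary modification of files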
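A rough worked estimate shows why large files suit HDFS while many small files do not: the NameNode keeps an in-memory object for every file and every block. It assumes the rule of thumb of roughly 150 bytes of NameNode memory per object (quoted in *Hadoop: The Definitive Guide*) and the Hadoop 2.x default block size (`dfs.blocksize`) of 128 MB; the results are orders of magnitude, not measurements.

$$
\begin{aligned}
\text{1 GB as one file:}\quad & \frac{1024\ \text{MB}}{128\ \text{MB}} = 8\ \text{blocks}, \qquad (1 + 8) \times 150\ \text{B} = 1350\ \text{B} \\
\text{1 GB as 1024 files of 1 MB:}\quad & 1024 \times (1 + 1) \times 150\ \text{B} = 307200\ \text{B} \approx 300\ \text{KB} \\
\text{$10^6$ small files, one block each:}\quad & 10^6 \times (1 + 1) \times 150\ \text{B} = 3 \times 10^8\ \text{B} \approx 300\ \text{MB}
\end{aligned}
$$

Under these assumptions, the same 1 GB of data costs over 200 times more NameNode memory when stored as small files.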

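[Statement] This content comes from a blogger on the Huawei Cloud Developer Community and does not represent the views or positions of Huawei Cloud or the Huawei Cloud Developer Community. When reposting, you must credit the source (Huawei Cloud Community), the article link, the author, and other basic information; otherwise the author and the community reserve the right to pursue liability. If you find suspected plagiarism in this community, please report it by email with supporting evidence; once verified, the community will promptly remove the infringing content. Report email: cloudbbs@huaweicloud.com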
