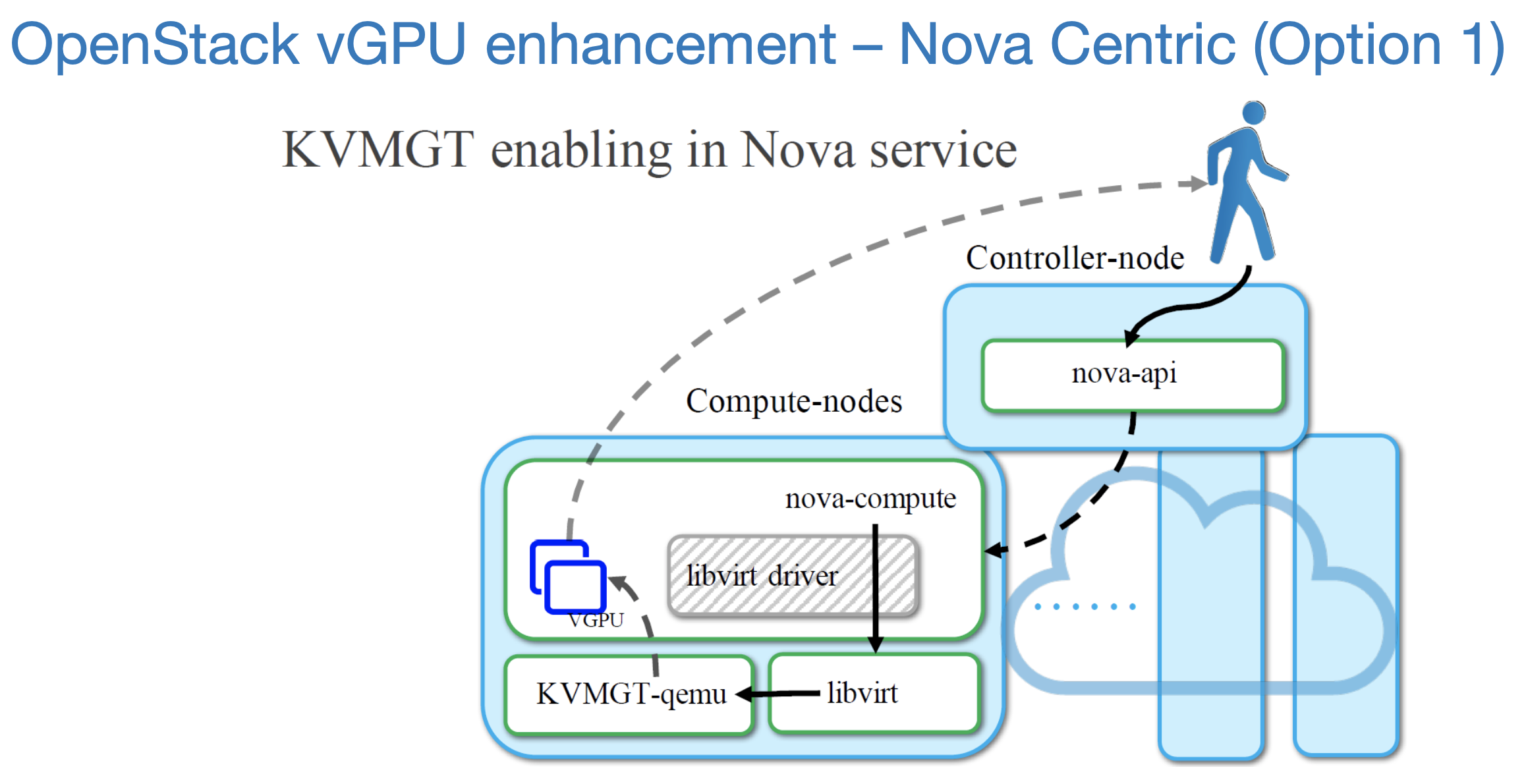

Nova: Booting a GPU Passthrough VM (Nova-Centric Mode)


Environment

- CentOS 7.9

- OpenStack Train

- NVIDIA Tesla K40

HostOS Configuration

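- In the BIOS, enable the Intel VT-x and VT-d (Intel Virtualization Technology for Directed I/O) hardware-assisted virtualization features. Also enable the onboard VGA controller so the host keeps its own display output once the GPU is handed to a VM. Then confirm that the CPU exposes hardware virtualization: a non-zero count means VT-x (vmx) or AMD-V (svm) is available.

```shell
$ egrep -c '(vmx|svm)' /proc/cpuinfo
```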

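The same check can be scripted, for example when auditing several compute nodes. Below is a minimal Python sketch that mirrors the egrep count above. The helper names count_virt_lines and read_cpuinfo are hypothetical and not part of any tool.

```python
import re

# Same pattern as the egrep command above.
VIRT_FLAG_RE = re.compile(r"(vmx|svm)")


def count_virt_lines(cpuinfo_text: str) -> int:
    """Count lines matching vmx|svm, like `egrep -c '(vmx|svm)'`.

    /proc/cpuinfo has one "flags" line per logical CPU, so a non-zero
    result means VT-x (vmx) or AMD-V (svm) is exposed to the host.
    """
    return sum(1 for line in cpuinfo_text.splitlines() if VIRT_FLAG_RE.search(line))


def read_cpuinfo(path: str = "/proc/cpuinfo") -> str:
    """Read the raw cpuinfo text (only meaningful on a Linux host)."""
    with open(path) as f:
        return f.read()
```

On the host, count_virt_lines(read_cpuinfo()) should return the number of logical CPUs when virtualization is enabled in the BIOS.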

- Enable the IOMMU in the Linux kernel to activate Intel VT-d.

```shell
$ vi /etc/default/grub
...
GRUB_CMDLINE_LINUX="... intel_iommu=on"

# Legacy BIOS (MBR) boot
$ grub2-mkconfig -o /boot/grub2/grub.cfg

# UEFI boot
$ grub2-mkconfig -o /boot/efi/EFI/centos/grub.cfg

$ reboot

$ dmesg | grep -i iommu
...
[ 0.000000] Command line: BOOT_IMAGE=/boot/vmlinuz-3.10.0-862.6.3.el7.x86_64 root=UUID=4e83b2b5-5ff1-4b1b-af0f-3f6a7f8275ea ro intel_iommu=on crashkernel=auto rhgb quiet
[ 0.000000] Kernel command line: BOOT_IMAGE=/boot/vmlinuz-3.10.0-862.6.3.el7.x86_64 root=UUID=4e83b2b5-5ff1-4b1b-af0f-3f6a7f8275ea ro intel_iommu=on crashkernel=auto rhgb quiet
[ 0.000000] DMAR: IOMMU enabled
[ 0.257808] DMAR-IR: IOAPIC id 3 under DRHD base 0xfbffc000 IOMMU 0
[ 0.257810] DMAR-IR: IOAPIC id 1 under DRHD base 0xd7ffc000 IOMMU 1
[ 0.257812] DMAR-IR: IOAPIC id 2 under DRHD base 0xd7ffc000 IOMMU 1

$ cat /proc/cmdline | grep iommu
```

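To verify both conditions at once, here is a small sketch that inspects the text of /proc/cmdline and dmesg. It assumes the Intel DMAR messages shown above. iommu_status is a hypothetical helper, and AMD hosts log different (AMD-Vi) lines, which it does not handle.

```python
import re


def iommu_status(cmdline: str, dmesg: str) -> dict:
    """Summarize Intel IOMMU state from /proc/cmdline and `dmesg` text."""
    params = cmdline.split()
    return {
        # intel_iommu=on must be present on the kernel command line
        "cmdline_has_intel_iommu": "intel_iommu=on" in params,
        # the Intel DMAR driver logs this line once VT-d is active
        "dmar_enabled": "DMAR: IOMMU enabled" in dmesg,
        # distinct IOMMU units, taken from lines such as "... DRHD base 0x... IOMMU 1"
        "iommu_units": sorted(set(re.findall(r"\bIOMMU (\d+)\b", dmesg))),
    }
```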

- Inspect the GPU's PCIe configuration. This card is not a single device: it appears as 4 PCI functions (VGA, Audio, USB, and Serial bus controller).

```shell
$ yum install pciutils -y
$ lspci -nn | grep NVIDIA
...
06:00.0 VGA compatible controller [0300]: NVIDIA Corporation Device [10de:1e04] (rev a1)
06:00.1 Audio device [0403]: NVIDIA Corporation Device [10de:10f7] (rev a1)
06:00.2 USB controller [0c03]: NVIDIA Corporation Device [10de:1ad6] (rev a1)
06:00.3 Serial bus controller [0c80]: NVIDIA Corporation Device [10de:1ad7] (rev a1)
```

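Every function of the card must be handled together, so it can help to group the lspci -nn output by bus:device. Below is a sketch that assumes the lspci -nn line format shown above. functions_by_device is a hypothetical name.

```python
import re
from collections import defaultdict

# Matches lines such as:
# 06:00.0 VGA compatible controller [0300]: NVIDIA Corporation Device [10de:1e04] (rev a1)
LSPCI_NN_RE = re.compile(
    r"^(?P<slot>[0-9a-f:.]+)\s+(?P<desc>.+?)\s+\[(?P<cls>[0-9a-f]{4})\]:"
    r".*\[(?P<vendor>[0-9a-f]{4}):(?P<device>[0-9a-f]{4})\]"
)


def functions_by_device(lspci_nn: str) -> dict:
    """Group PCI functions by bus:device, e.g. '06:00' -> [(slot, desc, 'vvvv:dddd'), ...]."""
    groups = defaultdict(list)
    for line in lspci_nn.splitlines():
        m = LSPCI_NN_RE.match(line.strip())
        if not m:
            continue  # skip '...' and any other non-device lines
        bus_dev = m["slot"].rsplit(".", 1)[0]  # drop the function number
        groups[bus_dev].append((m["slot"], m["desc"], f"{m['vendor']}:{m['device']}"))
    return dict(groups)
```

The vendor:product list collected for 06:00 is the same set of IDs that later goes into the vfio-pci ids= option and the Nova aliases.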

- Bind the GPU to the VFIO (Virtual Function I/O) driver so that every function of the PCIe GPU can be passed through to the VM. The functions on one PCIe card are placed in the same IOMMU group, and PCIe GPU passthrough requires assigning every device in that IOMMU group to the same VM. Otherwise nova-compute fails with the error: Please ensure all devices within the iommu_group are bound to their vfio bus driver.

```shell
# Blacklist the GPU's default drivers so that the host does not use the device.
# Note: a blacklist entry applies to the whole module, i.e. to every host device that uses it.
$ vi /etc/modprobe.d/blacklist.conf
...
blacklist nvidia # for VGA
blacklist snd_hda_intel # for Audio
blacklist xhci_hcd # for USB
blacklist nouveau
blacklist nvidiafb
blacklist vga16fb

# Rebuild the initramfs image.
$ mv /boot/initramfs-$(uname -r).img /boot/initramfs-$(uname -r).img.bak
$ dracut /boot/initramfs-$(uname -r).img $(uname -r)

# Load the VFIO drivers at boot.
$ vi /etc/modules-load.d/openstack-gpu.conf
...
vfio_pci
vfio
vfio_iommu_type1
pci_stub
kvm
kvm_intel

# Bind all 4 functions of the PCIe GPU to the VFIO driver.
$ vi /etc/modprobe.d/vfio.conf
options vfio-pci ids=10de:1e04,10de:10f7,10de:1ad6,10de:1ad7

# Add vfio-pci to the modules loaded at boot.
$ echo 'vfio-pci' > /etc/modules-load.d/vfio-pci.conf

$ reboot

$ dmesg | grep -i vfio
[ 6.755346] VFIO - User Level meta-driver version: 0.3
[ 6.803197] vfio_pci: add [10de:1b06[ffff:ffff]] class 0x000000/00000000
[ 6.803306] vfio_pci: add [10de:10ef[ffff:ffff]] class 0x000000/00000000

$ lspci -vv -s 06:00.0 | grep driver
Kernel driver in use: vfio-pci
$ lspci -vv -s 06:00.1 | grep driver
Kernel driver in use: vfio-pci
$ lspci -vv -s 06:00.2 | grep driver
Kernel driver in use: vfio-pci
$ lspci -vv -s 06:00.3 | grep driver
Kernel driver in use: vfio-pci
```

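Before moving on to Nova, confirm that no member of the GPU's IOMMU group is still held by a host driver. That is exactly the condition behind the iommu_group error above. Below is a sketch that walks the standard sysfs layout (/sys/bus/pci/devices/<addr>/iommu_group/devices and each device's driver symlink). The helper names are hypothetical, and addresses must include the PCI domain (for example 0000:06:00.0), which lspci omits by default.

```python
import os


def iommu_group_members(pci_addr: str, sysfs: str = "/sys") -> list:
    """List every PCI function that shares pci_addr's IOMMU group."""
    group_dir = os.path.join(sysfs, "bus/pci/devices", pci_addr, "iommu_group", "devices")
    return sorted(os.listdir(group_dir))


def current_driver(pci_addr: str, sysfs: str = "/sys"):
    """Return the name of the bound driver, or None if no driver is bound."""
    link = os.path.join(sysfs, "bus/pci/devices", pci_addr, "driver")
    if not os.path.islink(link):
        return None
    # e.g. ../../../bus/pci/drivers/vfio-pci -> 'vfio-pci'
    return os.path.basename(os.readlink(link))


def not_on_vfio(pci_addr: str, sysfs: str = "/sys") -> list:
    """Group members NOT bound to vfio-pci; any entry here blocks passthrough."""
    return [m for m in iommu_group_members(pci_addr, sysfs)
            if current_driver(m, sysfs) != "vfio-pci"]
```

On the host, not_on_vfio("0000:06:00.0") should return an empty list once all four functions are bound to vfio-pci. The sysfs parameter exists only so the functions can be pointed at a test directory.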

OpenStack Configuration

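- Look up the [Vendor ID:Product ID] pair of each PCIe function (the -nn option makes lspci print the numeric IDs).

```shell
$ lspci -nn -v -s 06:00.0
$ lspci -nn -v -s 06:00.1
$ lspci -nn -v -s 06:00.2
$ lspci -nn -v -s 06:00.3
```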


- Configure the nova-scheduler service (in /etc/nova/nova.conf) to enable the PciPassthroughFilter scheduler filter.

```ini
[filter_scheduler]
...
enabled_filters = ...,PciPassthroughFilter
available_filters = nova.scheduler.filters.all_filters
```

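A quick way to sanity-check this setting is to parse nova.conf. Below is a sketch using Python's configparser. It assumes the real file contains no literal "..." placeholder lines. strict=False is needed because nova.conf may repeat keys such as alias. pci_filter_enabled is a hypothetical helper.

```python
import configparser


def pci_filter_enabled(nova_conf_text: str) -> bool:
    """True if PciPassthroughFilter is listed in [filter_scheduler] enabled_filters."""
    # strict=False tolerates repeated keys (later values simply overwrite earlier ones);
    # interpolation=None keeps '%' characters in other options from being interpreted.
    cp = configparser.ConfigParser(strict=False, interpolation=None)
    cp.read_string(nova_conf_text)
    raw = cp.get("filter_scheduler", "enabled_filters", fallback="")
    filters = [f.strip() for f in raw.split(",") if f.strip()]
    return "PciPassthroughFilter" in filters
```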

- Configure the nova-api service by registering a PCI alias for each GPU function. Set device_type according to the actual GPU model.

```ini
[pci]
# 2080
alias = {"name":"nv2080vga","product_id":"1e04","vendor_id":"10de","device_type":"type-PCI"}
alias = {"name":"nv2080aud","product_id":"10f7","vendor_id":"10de","device_type":"type-PCI"}
alias = {"name":"nv2080usb","product_id":"1ad6","vendor_id":"10de","device_type":"type-PCI"}
alias = {"name":"nv2080bus","product_id":"1ad7","vendor_id":"10de","device_type":"type-PCI"}

## The T4 supports SR-IOV-based vGPU by default, so its device_type defaults to type-PF.
#alias = {"name":"nvT43D","product_id":"1eb8","vendor_id":"10de","device_type":"type-PF"}

## 1080
#alias = {"name":"nv1080vga","product_id":"1b06","vendor_id":"10de","device_type":"type-PCI"}
#alias = {"name":"nv1080aud","product_id":"10ef","vendor_id":"10de","device_type":"type-PCI"}
```

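Each function needs its own alias with otherwise identical fields, so the lines can be generated rather than typed. Below is a minimal sketch. alias_line and alias_lines are hypothetical helpers. Compact JSON separators reproduce the one-line format used above.

```python
import json


def alias_line(name, vendor_id, product_id, device_type="type-PCI"):
    """Render one nova.conf [pci] alias line."""
    spec = {"name": name, "product_id": product_id,
            "vendor_id": vendor_id, "device_type": device_type}
    # separators=(",", ":") drops the spaces json.dumps adds by default
    return "alias = " + json.dumps(spec, separators=(",", ":"))


def alias_lines(prefix, vendor_id, functions):
    """functions maps a name suffix to a product_id, e.g. {'vga': '1e04'}."""
    return [alias_line(prefix + suffix, vendor_id, pid) for suffix, pid in functions.items()]
```

For example, alias_lines("nv2080", "10de", {"vga": "1e04", "aud": "10f7", "usb": "1ad6", "bus": "1ad7"}) yields the four 2080 lines above.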

- Configure the nova-compute service. The [pci] alias entries are the same as on nova-api. passthrough_whitelist lists the devices this compute node is allowed to pass through; its continuation lines must be indented.

```ini
[libvirt]
...
cpu_mode = host-passthrough

[pci]
...
alias = {"name":"nv2080vga","product_id":"1e04","vendor_id":"10de","device_type":"type-PCI"}
alias = {"name":"nv2080aud","product_id":"10f7","vendor_id":"10de","device_type":"type-PCI"}
alias = {"name":"nv2080usb","product_id":"1ad6","vendor_id":"10de","device_type":"type-PCI"}
alias = {"name":"nv2080bus","product_id":"1ad7","vendor_id":"10de","device_type":"type-PCI"}
passthrough_whitelist = [{ "vendor_id": "10de", "product_id": "1e04" },
    { "vendor_id": "10de", "product_id": "10f7" },
    { "vendor_id": "10de", "product_id": "1ad6" },
    { "vendor_id": "10de", "product_id": "1ad7" }]
```

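Every alias requested by a flavor must also match a passthrough_whitelist entry on the compute node, or no device can be allocated. Below is a sketch of a consistency check. It assumes whitelist entries of the plain vendor_id/product_id form used above; entries keyed by address or devname, or without a product_id, are deliberately not handled. uncovered_aliases is a hypothetical name.

```python
import json


def uncovered_aliases(alias_values, whitelist_value):
    """Return alias names whose (vendor_id, product_id) is not whitelisted.

    alias_values: the JSON strings after each 'alias =' line.
    whitelist_value: the JSON after 'passthrough_whitelist =' (a dict or a list of dicts).
    """
    wl = json.loads(whitelist_value)
    if isinstance(wl, dict):
        wl = [wl]
    allowed = {(w.get("vendor_id"), w.get("product_id")) for w in wl}
    missing = []
    for raw in alias_values:
        a = json.loads(raw)
        if (a["vendor_id"], a["product_id"]) not in allowed:
            missing.append(a["name"])
    return missing
```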

- Create a GPU flavor.

```shell
$ openstack flavor create --public --ram 2048 --disk 20 --vcpus 2 m1.large

# Syntax: openstack flavor set FLAVOR-NAME --property pci_passthrough:alias=ALIAS:COUNT[,ALIAS:COUNT...]
$ openstack flavor set m1.large --property pci_passthrough:alias='nv2080vga:1,nv2080aud:1,nv2080usb:1,nv2080bus:1'
```

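The property value is just a comma-separated list of ALIAS:COUNT pairs. Below is a sketch that builds it, plus the argument list for openstack flavor set, without executing anything. Both helper names are hypothetical.

```python
def pci_alias_property(counts):
    """Build the value for --property pci_passthrough:alias=ALIAS:COUNT[,...]."""
    for alias, n in counts.items():
        if n < 1:
            raise ValueError(f"count for {alias} must be >= 1")
    return ",".join(f"{alias}:{n}" for alias, n in counts.items())


def flavor_set_argv(flavor, counts):
    """argv for `openstack flavor set`; suitable for subprocess.run(argv, check=True)."""
    return ["openstack", "flavor", "set", flavor,
            "--property", "pci_passthrough:alias=" + pci_alias_property(counts)]
```

Passing the list to subprocess.run avoids the shell quoting that the command line above needs.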

- Hide the VM's hypervisor ID. The NVIDIA GPU driver checks whether it is running inside a VM and fails if it is, so the hypervisor ID must be hidden from the driver.

```shell
$ openstack image set [IMG-UUID] --property img_hide_hypervisor_id=true

# Run inside the VM:
$ cpuid | grep hypervisor_id
hypervisor_id = " @ @ "
hypervisor_id = " @ @ "
```

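On the compute node, the effect can also be checked in the libvirt domain XML (virsh dumpxml <instance>). With this image property, Nova should render a <kvm><hidden state='on'/></kvm> element under <features>. Below is a sketch that looks for that element, assuming the XML text has already been captured. kvm_hidden is a hypothetical helper.

```python
import xml.etree.ElementTree as ET


def kvm_hidden(domain_xml: str) -> bool:
    """True if <features><kvm><hidden state='on'/></kvm></features> is present."""
    root = ET.fromstring(domain_xml)  # root element is <domain>
    hidden = root.find("./features/kvm/hidden")
    return hidden is not None and hidden.get("state") == "on"
```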

VM Configuration

- Check that the GPU is visible inside the VM.

```shell
$ lspci -k
```

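To see which driver, if any, has claimed the passed-through GPU, parse the "Kernel driver in use" lines of lspci -k. Below is a sketch. drivers_in_use is a hypothetical helper.

```python
def drivers_in_use(lspci_k: str) -> dict:
    """Map PCI slot -> 'Kernel driver in use' from `lspci -k` output (None if unbound)."""
    result, slot = {}, None
    for line in lspci_k.splitlines():
        if line and not line[0].isspace():
            slot = line.split()[0]   # a new device block starts, e.g. '00:06.0'
            result[slot] = None
        elif slot and "Kernel driver in use:" in line:
            result[slot] = line.split(":", 1)[1].strip()
    return result
```

Before the guest configuration below, the GPU will typically show nouveau. After the NVIDIA driver is installed, it should show nvidia.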

- Prepare the guest OS: install the toolchain needed to build the driver's kernel module, and disable nouveau.

```shell
$ rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org
$ rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-2.el7.elrepo.noarch.rpm
$ yum install dkms gcc kernel-devel kernel-headers

# Disable the nouveau driver that the system installs by default.
$ echo -e "blacklist nouveau\noptions nouveau modeset=0" > /etc/modprobe.d/blacklist.conf

# Rebuild the initramfs image.
$ mv /boot/initramfs-$(uname -r).img /boot/initramfs-$(uname -r).img.bak
$ dracut /boot/initramfs-$(uname -r).img $(uname -r)
$ reboot
```

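After the reboot, nouveau should no longer be loaded. lsmod reads /proc/modules, so the check reduces to looking at the first field of each line there. Below is a sketch with hypothetical helper names. NOUVEAU_BLACKLIST is the same content that the echo command above writes.

```python
NOUVEAU_BLACKLIST = "blacklist nouveau\noptions nouveau modeset=0\n"


def loaded_modules(proc_modules: str) -> set:
    """Module names from /proc/modules text (the first field of each line)."""
    return {line.split()[0] for line in proc_modules.splitlines() if line.strip()}


def nouveau_disabled(proc_modules: str) -> bool:
    return "nouveau" not in loaded_modules(proc_modules)
```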

- Install the GPU driver.

```shell
$ sh NVIDIA-Linux-x86_64-450.80.02.run --kernel-source-path=/usr/src/kernels/$(uname -r) -k $(uname -r) --dkms -s --no-x-check --no-nouveau-check --no-opengl-files

$ nvidia-smi
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 450.80.02    Driver Version: 450.80.02    CUDA Version: 11.0     |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|                               |                      |               MIG M. |
|===============================+======================+======================|
|   0  Tesla T4            Off  | 00000000:00:06.0 Off |                    0 |
| N/A   73C    P0    29W /  70W |      0MiB / 15109MiB |      0%      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| Processes:                                                                  |
|  GPU   GI   CI        PID   Type   Process name                  GPU Memory |
|        ID   ID                                                   Usage      |
|=============================================================================|
|  No running processes found                                                 |
+-----------------------------------------------------------------------------+
```

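For scripts, nvidia-smi's query mode is easier to consume than the table above. Below is a sketch that parses the output of nvidia-smi --query-gpu=index,name,driver_version,memory.total --format=csv,noheader, assuming memory is reported as "<n> MiB". parse_gpu_query is a hypothetical helper.

```python
import csv
import io

# Command whose output parse_gpu_query expects (not executed here).
QUERY_ARGS = ["nvidia-smi", "--query-gpu=index,name,driver_version,memory.total",
              "--format=csv,noheader"]


def parse_gpu_query(output: str) -> list:
    """Parse `nvidia-smi --query-gpu=... --format=csv,noheader` output into dicts."""
    gpus = []
    for row in csv.reader(io.StringIO(output), skipinitialspace=True):
        if not row:
            continue  # tolerate blank lines
        idx, name, driver, mem = row
        gpus.append({"index": int(idx), "name": name, "driver": driver,
                     "memory_mib": int(mem.split()[0])})  # '15109 MiB' -> 15109
    return gpus
```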

- Install CUDA and the CUDA driver packages (from NVIDIA's CUDA yum repository).

```shell
$ yum install cuda cuda-drivers nvidia-driver-latest-dkms
```


- Configure Docker to use the GPU.

```shell
$ yum install docker-ce nvidia-docker2
$ vim /etc/docker/daemon.json
...
{
    "runtimes": {
        "nvidia": {
            "path": "nvidia-container-runtime",
            "runtimeArgs": []
        }
    }
}

$ systemctl daemon-reload && systemctl restart docker
```

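Editing daemon.json by hand risks dropping settings that are already there. Below is a sketch that merges the nvidia runtime into whatever JSON exists. add_nvidia_runtime is a hypothetical helper, and it does not set a default runtime.

```python
import json


def add_nvidia_runtime(daemon_json: str) -> str:
    """Merge the nvidia runtime into daemon.json text, keeping all other keys."""
    cfg = json.loads(daemon_json) if daemon_json.strip() else {}
    runtimes = cfg.setdefault("runtimes", {})
    runtimes["nvidia"] = {"path": "nvidia-container-runtime", "runtimeArgs": []}
    return json.dumps(cfg, indent=4) + "\n"
```

Write the result back to /etc/docker/daemon.json, then restart Docker as shown above.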

- Pull a CUDA container image and run nvidia-smi inside the container.

```shell
$ docker pull nvidia/cuda:11.0-base
$ nvidia-docker run -it {image_id} /bin/bash
> nvidia-smi
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 455.45.01    Driver Version: 455.45.01    CUDA Version: 11.1     |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|                               |                      |               MIG M. |
|===============================+======================+======================|
|   0  Tesla T4            Off  | 00000000:00:06.0 Off |                    0 |
| N/A   56C    P0    19W /  70W |      0MiB / 15109MiB |      0%      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| Processes:                                                                  |
|  GPU   GI   CI        PID   Type   Process name                  GPU Memory |
|        ID   ID                                                   Usage      |
|=============================================================================|
|  No running processes found                                                 |
+-----------------------------------------------------------------------------+
```

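The "CUDA Version" in the nvidia-smi header is the newest CUDA version the installed driver supports. As a rule of thumb, a CUDA X.Y container image therefore needs a driver that reports at least X.Y; here 11.1 covers the 11.0 image. Below is a sketch of that comparison. It assumes image tags start with the CUDA version, as in nvidia/cuda:11.0-base, and the helper names are hypothetical.

```python
import re


def driver_cuda_version(smi_header: str):
    """Extract (major, minor) from 'CUDA Version: 11.1' in nvidia-smi output."""
    m = re.search(r"CUDA Version:\s*(\d+)\.(\d+)", smi_header)
    return (int(m.group(1)), int(m.group(2))) if m else None


def image_cuda_version(image: str):
    """'nvidia/cuda:11.0-base' -> (11, 0); assumes the tag begins with X.Y."""
    m = re.match(r"(\d+)\.(\d+)", image.rsplit(":", 1)[-1])
    return (int(m.group(1)), int(m.group(2))) if m else None


def image_supported(smi_header: str, image: str) -> bool:
    """True if the driver's CUDA version is at least the image's CUDA version."""
    drv, img = driver_cuda_version(smi_header), image_cuda_version(image)
    return drv is not None and img is not None and drv >= img
```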

Source: is-cloud.blog.csdn.net, author: 范桂飓. Copyright belongs to the original author; please contact the author before reposting.

Original link: is-cloud.blog.csdn.net/article/details/124086486

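[Copyright notice] This article was reposted by a Huawei Cloud community user. If you find content in this community that may be plagiarized, please report it by email with supporting evidence; once verified, the community will promptly remove the infringing content. Report email:

cloudbbs@huaweicloud.com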
