Naive Bayes text mining for Chinese

[Abstract] Naive Bayes text mining for Chinese

1 Data preparation

##read and clean

sms1<-readLines("sms_labelled.txt",encoding="UTF-8")

2 Data preview

num<-nchar(sms1)

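type<-substr(sms1,1,1)#the first character is the message type (0 or 1)

text<-substr(sms1,3,max(num))#the second character is a space, so the text starts at position 3

smsd<-data.frame(type,text,num,stringsAsFactors = F) #one row per message: label, text, and character count

Each line starts with the message type, followed by a space, so we extract the label y and the text x from those positions; num holds the number of characters in each line.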
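The layout described above can be tried on a few made-up lines. This is only a sketch: the sample messages and the `toy_` names are assumptions for illustration and do not come from `sms_labelled.txt`; English text is used so the example does not depend on the console encoding.

```r
# Toy lines in the assumed "<label> <text>" layout; not real data
toy_lines <- c("0 see you at noon", "1 win a free prize now", "0 ok")

toy_num  <- nchar(toy_lines)               # characters per line
toy_type <- substr(toy_lines, 1, 1)        # label: the first character
toy_text <- substr(toy_lines, 3, toy_num)  # text: position 3 to the end of each line

toy_df <- data.frame(type = toy_type, text = toy_text, num = toy_num,
                     stringsAsFactors = FALSE)
print(toy_df)
```

Passing the per-line length as the stop position is equivalent to the article's `max(num)`, because `substr` silently stops at the end of shorter strings.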

index0<-order(num,decreasing = T)#indices that sort num in decreasing order

order() returns the indices of the elements in sorted order, not the sorted values themselves.
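A tiny example makes the difference between indices and values concrete. The vector `toy_num` is a made-up stand-in for `num`.

```r
toy_num <- c(12, 40, 7, 25)

idx <- order(toy_num, decreasing = TRUE)  # positions, longest first
print(idx)           # 2 4 1 3
print(toy_num[idx])  # 40 25 12 7
```

Indexing the original vector with `idx` recovers the sorted values, which is why the article can later reuse `index0` to subset both `text` and `type`.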

3 Data cleaning

#tapply groups its first argument by the second and applies the function to each group

index0<-tapply(index0,type[index0],function(x) x[1:1000])#keep the first 1000 indices in each group

index0<-c(index0$`0`,index0$`1`)#concatenate the indices of groups 0 and 1 into one vector

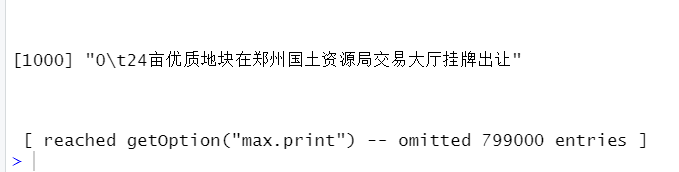

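text0<-text[index0] #text of the 2000 selected messages

type0<-type[index0] #labels of the 2000 selected messages

class<-factor(type0,labels=c("ham","spam")) #map labels 0 and 1 to "ham" and "spam"

Because the raw file has too many lines, only 1000 spam and 1000 non-spam messages are kept. Since index0 is ordered by decreasing length, these are the 1000 longest messages in each class.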
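The per-class selection can be sketched on a handful of values. Everything here is made up for illustration: `k` stands in for the 1000 used in the article, and `head()` is a substitution for `x[1:1000]` that avoids `NA` padding when a class has fewer than `k` messages.

```r
toy_num  <- c(12, 40, 7, 25, 31, 5)          # made-up message lengths
toy_type <- c("0", "1", "0", "1", "0", "1")  # made-up labels
k <- 2                                       # stands in for 1000

ord <- order(toy_num, decreasing = TRUE)     # 2 5 4 1 3 6

# Group the sorted indices by label and keep the first k of each group
top <- tapply(ord, toy_type[ord], function(x) head(x, k))

# Flatten to one index vector, group "0" first, then group "1"
keep <- unlist(top, use.names = FALSE)
print(keep)  # 5 1 2 4
```

The resulting vector lists the `k` longest messages of class 0 followed by the `k` longest of class 1, which is the same shape as the article's `index0`.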

##clean and segment words

text1<-gsub("[^\u4E00-\u9FA5]"," ",text0) #replace non-Chinese characters with spaces

Every character outside the Chinese range is replaced with a space.
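The effect of the character class can be checked on one made-up string. The sample text is an assumption for illustration; it is written with `\u` escapes (the four characters 免费领取) so the snippet does not depend on the file encoding. The final tidy-up step is an optional extra that is not in the article.

```r
# Made-up message mixing Latin text, digits, punctuation and Chinese
toy <- "Call 400-123 now! \u514d\u8d39\u9886\u53d6 prize"

# Same pattern as the article: anything outside U+4E00..U+9FA5 becomes a space
cleaned <- gsub("[^\u4E00-\u9FA5]", " ", toy)

# Optional extra step (not in the article): collapse runs of spaces and trim
tidy <- trimws(gsub(" +", " ", cleaned))
print(tidy)
```

Because each removed character is replaced by exactly one space, `cleaned` has the same length as the input, and word boundaries are preserved for the segmenter.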

library(jiebaR)

library(tm)

##custom stopword list

work=worker(bylines = T,stop_word = "stop.txt") #segmenter that drops the stop words listed in stop.txt

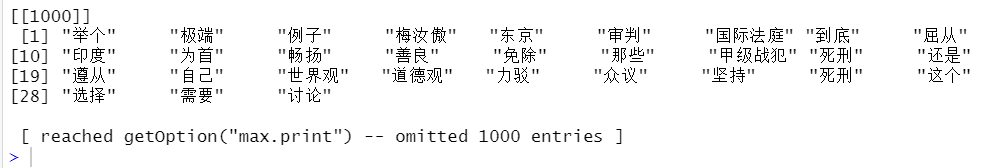

text2<-segment(text1,work) #segment each message into words

text2<-lapply(text2,function(x) x[nchar(x)>1]) #keep only words longer than one character

Load the packages and remove stop words using the stop-word list.
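In the article the stop words are removed inside the jiebaR worker. The same filtering idea can be sketched in base R on words that are already split. The tokens and the stop list below are made up for illustration, and English is used to keep the example encoding-independent.

```r
# Toy tokenised messages and stop-word list; both are made up for illustration
toy_tokens <- list(c("we", "a", "free", "prize"), c("the", "meeting", "x"))
toy_stop   <- c("we", "the")

# Drop stop words, then drop one-character tokens (same rule as nchar(x) > 1)
filtered <- lapply(toy_tokens, function(x) {
  x <- x[!(x %in% toy_stop)]
  x[nchar(x) > 1]
})
print(filtered)
```

Each list element stays aligned with its original message, which matters later because the class labels are matched to messages by position.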

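#textC is never created in the original; assumption: it is a tm corpus built from the segmented words

textC<-Corpus(VectorSource(sapply(text2,paste,collapse=" ")))

textC<-tm_map(textC,removeWords,"我们")#remove "我们" ("we")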

inspect(textC)

4 Creating the training and test sets

##split

set.seed(12)

index1<-sample(1:length(class),length(class)*0.9) #sample 90% of the indices for training; the rest form the test set

text_tr<-textC[index1]

text_te<-textC[-index1]

inspect(text_tr)
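The split logic can be tried without a corpus. In this sketch the label vector `toy_class` is made up, and only the indexing pattern mirrors the article: positive indices select the training part and negative indices select the remainder.

```r
set.seed(12)

n <- 20
toy_class <- factor(rep(c("ham", "spam"), each = n / 2))  # made-up labels

# Draw 90% of the positions without replacement for training
train_idx <- sample(seq_len(n), size = floor(n * 0.9))

train_y <- toy_class[train_idx]   # labels of the training messages
test_y  <- toy_class[-train_idx]  # labels of the held-out messages

print(length(train_y))
print(length(test_y))
```

Because the sample is purely random, the class proportions in the small test set can drift from those in the full data; a stratified split would be an alternative, but that is not what the article does.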

5 Word cloud preview

##wordcloud

library(wordcloud)

index2<-class[index1]=="spam" #select spam

pal<-brewer.pal(6,"Dark2") # wordcloud color

par(family='STKaiti')

wordcloud(text_tr,max.words=40,random.order=F,scale=c(4,0.5),colors=pal) #top 40 words, all training messages

wordcloud(text_tr,max.words =150, scale=c(4,0.5),
          random.order=F, colors = pal,family="STKaiti") #all training messages

wordcloud(text_tr[index2],max.words =150, scale=c(4,0.5),
          random.order=F, colors = pal,family="STKaiti") #spam only

wordcloud(text_tr[!index2],max.words =150, scale=c(4,0.5),
          random.order=F, colors = pal,family="STKaiti") #ham only

6 Building the model data

##freq words

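#tm discards terms shorter than 3 characters by default; wordLengths=c(2,Inf) keeps two-character Chinese words (adjustment, not in the original)

text_trdtm<-DocumentTermMatrix(text_tr,list(wordLengths=c(2,Inf)))#document-term matrix of the training corpus

inspect(text_trdtm)

ncol(text_trdtm)#number of terms (columns) in the document-term matrix

dict<-findFreqTerms(text_trdtm,5)#terms that occur at least 5 times are treated as high-frequency words

text_tedtm<-DocumentTermMatrix(text_te,list(dictionary=dict,wordLengths=c(2,Inf))) #test matrix restricted to the same terms

train<-apply(text_trdtm[,dict],2,function(x) ifelse(x,"yes","no"))#convert counts to "yes"/"no" presence flags

test<-apply(text_tedtm,2,function(x) ifelse(x,"yes","no"))

head(test)#test is a plain character matrix, so use head() rather than tm's inspect()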

Terms that occur at least 5 times are treated as high-frequency words; terms that occur fewer than 5 times are filtered out.
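The filtering and the yes/no conversion can be sketched on a small count matrix in base R. The matrix, the term names, and the threshold `min_freq` (standing in for 5) are made up for illustration; `colSums` plays the role that `findFreqTerms` plays on a real document-term matrix.

```r
# Made-up document-term counts: rows are documents, columns are terms
counts <- matrix(c(3, 0, 1,
                   2, 1, 0,
                   0, 0, 2),
                 nrow = 3, byrow = TRUE,
                 dimnames = list(paste0("doc", 1:3),
                                 c("free", "meeting", "prize")))

min_freq <- 2  # stands in for the 5 used in the article

# Keep terms whose total count across all documents reaches the threshold
keep <- colnames(counts)[colSums(counts) >= min_freq]

# Turn counts into presence flags, as the article does before modelling
flags <- apply(counts[, keep, drop = FALSE], 2,
               function(x) ifelse(x > 0, "yes", "no"))
print(keep)
print(flags)
```

Here "meeting" occurs only once in total and is dropped, while the remaining columns record only whether a term appears in a document, not how often.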

7 Modeling and prediction

##model

library(e1071)

library(gmodels)

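sms<-naiveBayes(train,class[index1],laplace = 1) #train with Laplace smoothing

testpre<-predict(sms,test,type="class") #predicted class for each test message

CrossTable(testpre,class[-index1],prop.chisq = F,prop.t=F,dnn=c("predicted","actual")) #rows: predicted, columns: actual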
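To show what `laplace = 1` changes, the sketch below works through naive Bayes by hand for a single yes/no feature. The eight training rows are made up, and the code is a base R illustration of the smoothing idea, not e1071's implementation.

```r
# Made-up training data: does the message contain "free", and its class
free  <- factor(c("yes", "yes", "yes", "no", "no", "no", "no", "no"),
                levels = c("no", "yes"))
label <- factor(c("spam", "spam", "spam", "spam", "ham", "ham", "ham", "ham"))

laplace <- 1
tab <- table(label, free)  # rows: class, columns: feature value

# Smoothed P(feature value | class): add 1 to every cell, 2 to every row total
cond  <- (tab + laplace) / (rowSums(tab) + laplace * nlevels(free))
prior <- prop.table(table(label))

# Posterior for a new message that contains "free"
score     <- as.numeric(prior) * as.numeric(cond[, "yes"])
posterior <- score / sum(score)
names(posterior) <- levels(label)
print(posterior)
```

Without smoothing, "free" never appears in a ham message, so P(yes | ham) would be 0 and any message containing the word could never be classified as ham. With `laplace = 1` the estimate becomes 1/6, and the posterior for spam is 0.8 instead of 1.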

