[Yugong Series] March 2022: WeChat Mini Program - System Camera


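Preface

A Mini Program can use the camera in two ways: through the `camera` component, which is controlled from code via the `wx.createCameraContext()` API, or by opening the photo picker and choosing to take a photo, which launches the system default camera.

- The first is a simple camera embedded in the page.

- The second goes through the photo picker and hands off to the system default camera.

The camera supports many features, such as taking and saving photos and recording video. Combined with AI capabilities, it can also recognize license plates and ID cards, so a wide range of features can be built on top of it. The `camera` component accepts the following attributes: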

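| Attribute | Type | Default | Required | Description | Minimum version |
|---|---|---|---|---|---|
| mode | string | normal | No | Application mode; only takes effect at initialization and cannot be changed dynamically | 2.1.0 |
| resolution | string | medium | No | Resolution; cannot be changed dynamically | 2.10.0 |
| device-position | string | back | No | Which camera to use (front or back) | 1.0.0 |
| flash | string | auto | No | Flash mode: auto, on, off, or torch | 1.0.0 |
| frame-size | string | medium | No | Desired size of the camera frame data | 2.7.0 |
| bindstop | eventhandle | | No | Fired when the camera stops abnormally, for example when the app goes to the background | 1.0.0 |
| binderror | eventhandle | | No | Fired when the user denies access to the camera | 1.0.0 |
| bindinitdone | eventhandle | | No | Fired when camera initialization completes; `e.detail = {maxZoom}` | 2.7.0 |
| bindscancode | eventhandle | | No | Fired when a code is recognized successfully; only takes effect when `mode="scanCode"` | 2.1.0 |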

Valid values of mode

| Value | Description |
|---|---|
| normal | Camera mode |
| scanCode | Code-scanning mode |
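As a minimal sketch of `scanCode` mode, the snippet below shows how a `bindscancode` event might be consumed. The handler name `onScanCode`, the helper `formatScanResult`, and the assumption that `e.detail` carries `type` and `result` fields are illustrative and should be checked against the current `camera` component documentation. The `Page` call is guarded so the helper can also be exercised outside the Mini Program runtime.

```javascript
// WXML (assumed): <camera mode="scanCode" bindscancode="onScanCode"></camera>

// Hypothetical helper: turn the event detail into a display string.
// Assumes detail has `type` (code format) and `result` (decoded text).
function formatScanResult(detail) {
  if (!detail || typeof detail.result !== 'string' || detail.result === '') {
    return 'No code recognized'
  }
  const type = detail.type || 'unknown'
  return `[${type}] ${detail.result}`
}

// Register the page only inside the Mini Program runtime.
if (typeof Page === 'function') {
  Page({
    data: { scanText: '' },
    // Called each time the camera recognizes a code in scanCode mode
    onScanCode(e) {
      this.setData({ scanText: formatScanResult(e.detail) })
    }
  })
}
```

Keeping the formatting in a plain function means it can be checked without a device, while the page handler stays a one-liner.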

Valid values of resolution

| Value | Description |
|---|---|
| low | Low |
| medium | Medium |
| high | High |

Valid values of device-position

| Value | Description |
|---|---|
| front | Front camera |
| back | Back camera |

Valid values of flash

| Value | Description |
|---|---|
| auto | Automatic |
| on | On |
| off | Off |
| torch | Always on |

Valid values of frame-size

| Value | Description |
|---|---|
| small | Small frame data |
| medium | Medium frame data |
| large | Large frame data |
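The `bindinitdone` event in the attribute table reports `maxZoom`, which is useful as an upper bound when zooming through `CameraContext.setZoom`. The following is a rough sketch under stated assumptions: the handler names `onInitDone` and `onZoomChange`, the helper `clampZoom`, and the idea of driving zoom from a slider are hypothetical and not part of the original demo.

```javascript
// WXML (assumed): <camera bindinitdone="onInitDone"></camera>

// Hypothetical helper: clamp a requested zoom level into [1, maxZoom].
// Falls back to 1 when either value is missing or not a finite number.
function clampZoom(requested, maxZoom) {
  const upper = Number.isFinite(maxZoom) && maxZoom >= 1 ? maxZoom : 1
  if (!Number.isFinite(requested)) return 1
  return Math.min(Math.max(requested, 1), upper)
}

// Register the page only inside the Mini Program runtime.
if (typeof Page === 'function') {
  Page({
    onReady() {
      this.ctx = wx.createCameraContext()
    },
    onInitDone(e) {
      // bindinitdone delivers e.detail = {maxZoom}
      this.maxZoom = e.detail.maxZoom
    },
    // Hypothetical handler, e.g. bound to a slider's bindchange event
    onZoomChange(e) {
      const zoom = clampZoom(Number(e.detail.value), this.maxZoom)
      this.ctx.setZoom({ zoom })
    }
  })
}
```

Clamping before the call avoids passing a value the device cannot honor; until `bindinitdone` has fired, the sketch simply stays at zoom level 1.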

I. System camera

<import src="../../../common/head.wxml" />

<import src="../../../common/foot.wxml" />

<view class="container page" data-weui-theme="{{theme}}">

<template is="head" data="{{title: 'camera'}}"/>

<view class="page-body">

<view class="page-body-wrapper">

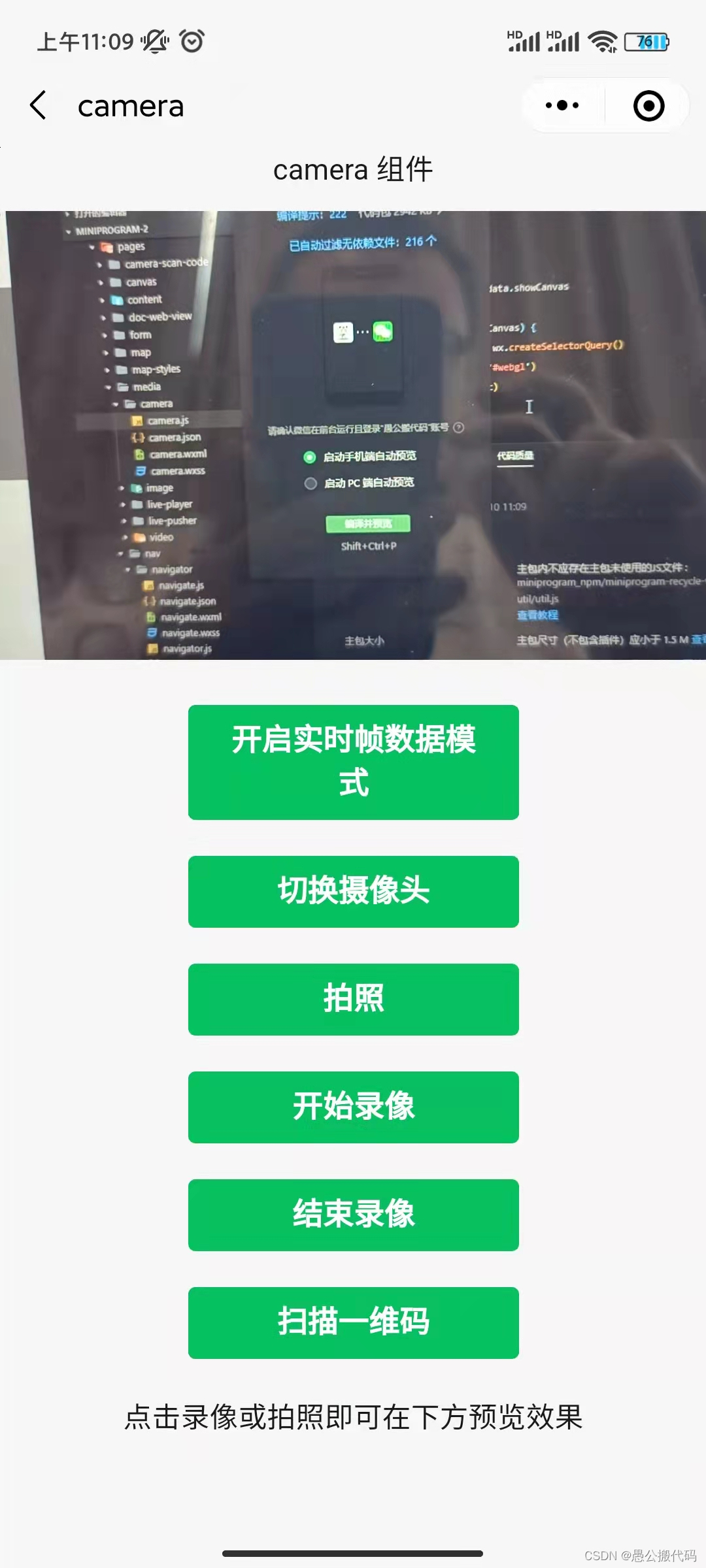

<view style="margin-bottom: 10px"> camera 组件 </view>

<camera

flash="off"

device-position="{{position}}"

binderror="error"

>

</camera>

<view wx:if="{{showCanvas}}" class="info-container">

<view style="margin: 10px 0">使用实时数据帧在 canvas 组件的展示</view>

<view>

帧高度:{{ frameHeight }} 帧宽度:{{ frameWidth }}

</view>

<canvas

id="webgl"

type="webgl"

canvas-id="canvas"

style="width: {{width}}px; height: {{height}}px;"

>

</canvas>

</view>

<view class="btn-area first-btn">

<button bindtap="handleShowCanvas" type="primary">{{showCanvas ? "关闭实时帧数据模式": "开启实时帧数据模式"}}</button>

</view>

<view class="btn-area">

<button type="primary" bindtap="togglePosition">切换摄像头</button>

</view>

<view class="btn-area">

<button type="primary" bindtap="takePhoto">拍照</button>

</view>

<view class="btn-area">

<button type="primary" bindtap="startRecord">开始录像</button>

</view>

<view class="btn-area">

<button type="primary" bindtap="stopRecord">结束录像</button>

</view>

<view class="btn-area">

<navigator url="/packageComponent/pages/media/camera-scan-code/camera-scan-code" hover-class="none">

<button type="primary">扫描一维码</button>

</navigator>

</view>

<view class="preview-tips">点击录像或拍照即可在下方预览效果</view>

<image wx:if="{{src}}" mode="widthFix" class="photo" src="{{src}}"></image>

<video wx:if="{{videoSrc}}" class="video" src="{{videoSrc}}"></video>

</view>

</view>

<template is="foot" />

</view>

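    // Vertex shader: passes the quad position through and forwards the texture coordinate
    const vs = `
      attribute vec3 aPos;
      attribute vec2 aVertexTextureCoord;
      varying highp vec2 vTextureCoord;

      void main(void){
        gl_Position = vec4(aPos, 1);
        vTextureCoord = aVertexTextureCoord;
      }
    `

    // Fragment shader: samples the camera frame texture
    const fs = `
      varying highp vec2 vTextureCoord;
      uniform sampler2D uSampler;

      void main(void) {
        gl_FragColor = texture2D(uSampler, vTextureCoord);
      }
    `

    // Four corners of a full-screen quad in clip space (x, y, z)
    const vertex = [
      -1, -1, 0.0,
      1, -1, 0.0,
      1, 1, 0.0,
      -1, 1, 0.0
    ]

    // Two triangles that make up the quad
    const vertexIndice = [
      0, 1, 2,
      0, 2, 3
    ]

    // Texture coordinates matching the four quad corners
    const texCoords = [
      0.0, 0.0,
      1.0, 0.0,
      1.0, 1.0,
      0.0, 1.0
    ]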

    // Compile a shader of the given type and log any compile error
    function createShader(gl, src, type) {
      const shader = gl.createShader(type)
      gl.shaderSource(shader, src)
      gl.compileShader(shader)

      if (!gl.getShaderParameter(shader, gl.COMPILE_STATUS)) {
        console.error(`Error compiling shader: ${gl.getShaderInfoLog(shader)}`)
      }
      return shader
    }

    const buffers = {}

    // Set up WebGL on the canvas and return a function that draws one RGBA frame.
    // Returns null if the WebGL context or the shader program cannot be created.
    function createRenderer(canvas, width, height) {
      const gl = canvas.getContext('webgl')
      if (!gl) {
        console.error('Unable to get webgl context.')
        return null
      }

      // Scale the drawing buffer by the device pixel ratio
      const info = wx.getSystemInfoSync()
      gl.canvas.width = info.pixelRatio * width
      gl.canvas.height = info.pixelRatio * height
      gl.viewport(0, 0, gl.drawingBufferWidth, gl.drawingBufferHeight)

      const vertexShader = createShader(gl, vs, gl.VERTEX_SHADER)
      const fragmentShader = createShader(gl, fs, gl.FRAGMENT_SHADER)

      const program = gl.createProgram()
      gl.attachShader(program, vertexShader)
      gl.attachShader(program, fragmentShader)
      gl.linkProgram(program)

      if (!gl.getProgramParameter(program, gl.LINK_STATUS)) {
        console.error('Unable to initialize the shader program.')
        return null
      }

      gl.useProgram(program)

      // Texture that receives each camera frame
      const texture = gl.createTexture()
      gl.activeTexture(gl.TEXTURE0)
      gl.bindTexture(gl.TEXTURE_2D, texture)
      gl.pixelStorei(gl.UNPACK_FLIP_Y_WEBGL, true)
      gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MAG_FILTER, gl.NEAREST)
      gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MIN_FILTER, gl.NEAREST)
      gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_WRAP_S, gl.CLAMP_TO_EDGE)
      gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_WRAP_T, gl.CLAMP_TO_EDGE)
      gl.bindTexture(gl.TEXTURE_2D, null)

      // Quad vertex positions
      buffers.vertexBuffer = gl.createBuffer()
      gl.bindBuffer(gl.ARRAY_BUFFER, buffers.vertexBuffer)
      gl.bufferData(gl.ARRAY_BUFFER, new Float32Array(vertex), gl.STATIC_DRAW)

      // Quad triangle indices
      buffers.vertexIndiceBuffer = gl.createBuffer()
      gl.bindBuffer(gl.ELEMENT_ARRAY_BUFFER, buffers.vertexIndiceBuffer)
      gl.bufferData(gl.ELEMENT_ARRAY_BUFFER, new Uint16Array(vertexIndice), gl.STATIC_DRAW)

      const aVertexPosition = gl.getAttribLocation(program, 'aPos')
      gl.vertexAttribPointer(aVertexPosition, 3, gl.FLOAT, false, 0, 0)
      gl.enableVertexAttribArray(aVertexPosition)

      // Texture coordinates
      buffers.trianglesTexCoordBuffer = gl.createBuffer()
      gl.bindBuffer(gl.ARRAY_BUFFER, buffers.trianglesTexCoordBuffer)
      gl.bufferData(gl.ARRAY_BUFFER, new Float32Array(texCoords), gl.STATIC_DRAW)

      const vertexTexCoordAttribute = gl.getAttribLocation(program, 'aVertexTextureCoord')
      gl.enableVertexAttribArray(vertexTexCoordAttribute)
      gl.vertexAttribPointer(vertexTexCoordAttribute, 2, gl.FLOAT, false, 0, 0)

      // Bind the sampler to texture unit 0
      const samplerUniform = gl.getUniformLocation(program, 'uSampler')
      gl.uniform1i(samplerUniform, 0)

      // Upload one RGBA frame into the texture and draw the quad
      return (arrayBuffer, width, height) => {
        gl.bindTexture(gl.TEXTURE_2D, texture)
        gl.texImage2D(
          gl.TEXTURE_2D, 0, gl.RGBA, width, height, 0, gl.RGBA, gl.UNSIGNED_BYTE, arrayBuffer
        )
        gl.drawElements(gl.TRIANGLES, 6, gl.UNSIGNED_SHORT, 0)
      }
    }
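The function returned by `createRenderer` uploads each frame as `gl.RGBA` with `gl.UNSIGNED_BYTE`, which implies 4 bytes per pixel. A guard along the following lines could run before that upload. This is only a sketch: `isRgbaFrame` is a hypothetical helper, not part of the WeChat API, and it assumes `frame.data` is an `ArrayBuffer` with `frame.width` and `frame.height` given in pixels.

```javascript
// Hypothetical guard: check that a camera frame holds exactly
// width * height RGBA pixels (4 bytes each) before uploading it.
function isRgbaFrame(frame) {
  if (!frame || !(frame.data instanceof ArrayBuffer)) return false
  const { width, height } = frame
  if (!Number.isInteger(width) || !Number.isInteger(height)) return false
  if (width <= 0 || height <= 0) return false
  return frame.data.byteLength === width * height * 4
}

// Usage sketch inside an onCameraFrame callback:
// this.ctx.onCameraFrame((frame) => {
//   if (!isRgbaFrame(frame)) return
//   render(new Uint8Array(frame.data), frame.width, frame.height)
// })
```

Skipping a malformed frame is cheaper than letting `texImage2D` fail on a buffer whose size does not match the stated dimensions.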

    Page({
      onShareAppMessage() {
        return {
          title: 'camera',
          path: 'packageComponent/pages/media/camera/camera'
        }
      },

      data: {
        theme: 'light',
        src: '',
        videoSrc: '',
        position: 'back',
        mode: 'scanCode',
        result: {},
        frameWidth: 0,
        frameHeight: 0,
        width: 288,
        height: 358,
        showCanvas: false,
      },

      onReady() {
        // Camera context for the <camera> component on this page.
        // The canvas is initialized later, in handleShowCanvas, once it is rendered.
        this.ctx = wx.createCameraContext()
      },

      // Start rendering camera frames into the WebGL canvas node
      init(res) {
        // Stop any previous frame listener before creating a new one
        if (this.listener) {
          this.listener.stop()
        }
        const canvas = res.node
        const render = createRenderer(canvas, this.data.width, this.data.height)
        // createRenderer returns null when WebGL setup fails
        if (typeof render !== 'function') return

        this.listener = this.ctx.onCameraFrame((frame) => {
          render(new Uint8Array(frame.data), frame.width, frame.height)
          const {
            frameWidth,
            frameHeight,
          } = this.data
          // Only call setData when the frame size actually changes
          if (frameWidth === frame.width && frameHeight === frame.height) return
          this.setData({
            frameWidth: frame.width,
            frameHeight: frame.height,
          })
        })
        this.listener.start()
      },

      takePhoto() {
        this.ctx.takePhoto({
          quality: 'high',
          success: (res) => {
            // Show the captured photo in the preview image
            this.setData({
              src: res.tempImagePath
            })
          }
        })
      },

      startRecord() {
        this.ctx.startRecord({
          success: () => {
            console.log('startRecord')
          }
        })
      },

      stopRecord() {
        this.ctx.stopRecord({
          success: (res) => {
            // Show the video thumbnail and the recorded video
            this.setData({
              src: res.tempThumbPath,
              videoSrc: res.tempVideoPath
            })
          }
        })
      },

      togglePosition() {
        this.setData({
          position: this.data.position === 'front'
            ? 'back' : 'front'
        })
      },

      error(e) {
        console.log(e.detail)
      },

      handleShowCanvas() {
        this.setData({
          showCanvas: !this.data.showCanvas
        }, () => {
          if (this.data.showCanvas) {
            // The canvas only exists after wx:if renders it, so query it here.
            // The arrow function keeps `this` bound to the page inside init.
            const selector = wx.createSelectorQuery()
            selector.select('#webgl')
              .node((res) => this.init(res))
              .exec()
          } else if (this.listener) {
            // Stop delivering frames once the canvas is hidden
            this.listener.stop()
            this.listener = null
          }
        })
      },

      onLoad() {
        this.setData({
          theme: wx.getSystemInfoSync().theme || 'light'
        })

        // Follow system theme changes where the API is available
        if (wx.onThemeChange) {
          wx.onThemeChange(({theme}) => {
            this.setData({theme})
          })
        }
      }
    })
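The `error` handler in the page only logs the event, while `binderror` fires when the user denies camera access. One possible follow-up, sketched here under assumptions, is to check the `scope.camera` entry in the settings returned by `wx.getSetting` and offer to open the settings page. The names `cameraAuthState` and `handleCameraError` are hypothetical, and whether `wx.openSetting` may be called from a modal callback should be verified against the current platform rules.

```javascript
// Hypothetical helper: classify the camera permission from the
// authSetting object returned by wx.getSetting.
function cameraAuthState(authSetting) {
  const value = authSetting ? authSetting['scope.camera'] : undefined
  if (value === true) return 'granted'
  if (value === false) return 'denied'
  return 'not-asked'
}

// Possible body for a binderror handler: if the user has denied
// camera access, ask whether to open the settings page.
function handleCameraError() {
  wx.getSetting({
    success(res) {
      if (cameraAuthState(res.authSetting) !== 'denied') return
      wx.showModal({
        title: 'Camera access needed',
        content: 'Please enable camera access in settings.',
        success(modal) {
          if (modal.confirm) wx.openSetting()
        }
      })
    }
  })
}
```

In the page above, `error(e)` could call `handleCameraError()` after logging, so a denied permission leads somewhere actionable instead of a silent blank camera view.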

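[Disclaimer] This content comes from a blogger in the Huawei Cloud Developer Community and does not represent the views or positions of Huawei Cloud or the Huawei Cloud Developer Community. Reposts must state the source (Huawei Cloud Community), the article link, and the author; otherwise the author and the community reserve the right to pursue liability. If you find content in this community that appears to be plagiarized, you are welcome to report it by email with supporting evidence; once verified, the community will promptly remove the infringing content. Report email: cloudbbs@huaweicloud.com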
