[Survey] A Comprehensive Survey on Graph Neural Networks (2)


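Preface

Hello, friends!

Thank you very much for reading Haihong's article. If there are any mistakes in it, please point them out~

About me ଘ(੭ˊᵕˋ)੭

Nickname: 海轰 (Haihong)

Tags: programmer | C++ player | student

Bio: I got into programming through the C language and later switched to a computer science major. I have received the National Scholarship and was lucky enough to win some national and provincial awards in competitions… I have been admitted to graduate school through recommendation (exam-exempt).

Learning tips: solid fundamentals + plenty of notes + plenty of coding + plenty of thinking + learn English well!

Only hard work pays off 💪

This article is just my own study notes, used to build a knowledge system and for review.

Know not only what, but also why!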

Terminology

- directed acyclic graph (DAG): a directed graph that contains no directed cycles

Notes

Recurrent Graph Neural Networks (RecGNNs)

Recurrent graph neural networks (RecGNNs) are mostly pioneer works of GNNs. They apply the same set of parameters recurrently over nodes in a graph to extract high-level node representations. Constrained by computational power, earlier research mainly focused on directed acyclic graphs [13], [80].

Graph Neural Network (GNN*) proposed by Scarselli et al. extends prior recurrent models to handle general types of graphs, e.g., acyclic, cyclic, directed, and undirected graphs [15]. (The survey writes GNN* to distinguish this particular model from GNNs in general.) Based on an information diffusion mechanism, GNN* updates nodes' states by exchanging neighborhood information recurrently until a stable equilibrium is reached.

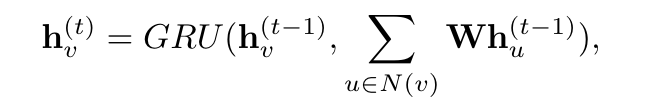
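The information-diffusion idea can be sketched in a few lines of Python. This is a toy, not Scarselli et al.'s implementation. It uses an update of the form h_v ← Σ_{u∈N(v)} f(x_v, x_u, h_u), with several simplifications: node states are scalars, edge features are dropped, and the transition function is a hypothetical linear map `a*h_u + b*x_v + c*x_u` rather than a learned network. The survey notes that GNN* needs f to be a contraction mapping to guarantee convergence. Here that holds when `abs(a) * max_degree < 1`, so repeated updates settle at a unique fixed point.

```python
def gnn_star_fixed_point(adj, x, a=0.2, b=0.5, c=0.5, tol=1e-9, max_iter=1000):
    """Iterate h_v <- sum over u in N(v) of f(x_v, x_u, h_u) until the states settle.

    adj: dict mapping each node to its list of neighbours.
    x:   dict mapping each node to a scalar feature.
    f is a toy linear map a*h_u + b*x_v + c*x_u (edge features omitted).
    The update is a contraction when abs(a) * max_degree < 1, which
    guarantees a unique fixed point (the "stable equilibrium").
    """
    max_deg = max(len(nbrs) for nbrs in adj.values())
    if abs(a) * max_deg >= 1:
        raise ValueError("update is not a contraction; choose a smaller a")
    h = {v: 0.0 for v in adj}  # arbitrary initial states
    for step in range(1, max_iter + 1):
        new_h = {v: sum(a * h[u] + b * x[v] + c * x[u] for u in adj[v]) for v in adj}
        change = max(abs(new_h[v] - h[v]) for v in adj)
        h = new_h
        if change < tol:  # states no longer move: equilibrium reached
            return h, step
    return h, max_iter


# A 4-node cycle: a cyclic graph, which GNN* is designed to handle.
adj = {0: [1, 3], 1: [0, 2], 2: [1, 3], 3: [2, 0]}
x = {0: 1.0, 1: 0.0, 2: 1.0, 3: 0.0}
h, steps = gnn_star_fixed_point(adj, x)
print(steps, h)
```

Because this graph and its features are symmetric, every node converges to the same state, 1 / 0.6 ≈ 1.667.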

Gated Graph Neural Network (GGNN) [17] employs a gated recurrent unit (GRU) [81] as a recurrent function, reducing the recurrence to a fixed number of steps. The advantage is that it no longer needs to constrain parameters to ensure convergence.

A node hidden state is updated by its previous hidden states and its neighboring hidden states, defined as

$$h_v^{(t)} = \mathrm{GRU}\left(h_v^{(t-1)}, \sum_{u \in N(v)} \mathbf{W} h_u^{(t-1)}\right),$$

where $h_v^{(0)} = x_v$.

Different from GNN* and GraphESN, GGNN uses the back-propagation through time (BPTT) algorithm to learn the model parameters. This can be problematic for large graphs, as GGNN needs to run the recurrent function multiple times over all nodes, requiring the intermediate states of all nodes to be stored in memory.

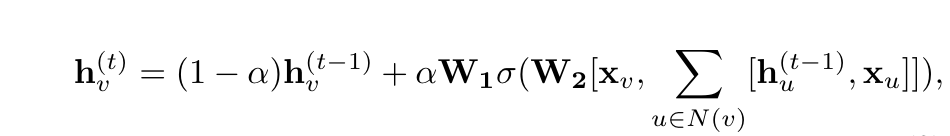
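The GGNN update and the memory cost of BPTT can be illustrated together. The sketch below rests on several assumptions:

- states are scalars;
- the message weight `w` stands in for the matrix W;
- the GRU weights in `params` are made-up placeholders;
- it follows one common GRU gating convention (conventions differ on which gate multiplies the old state).

It runs a fixed number of steps with no convergence check. It also keeps every intermediate state in `history`, which is exactly what BPTT must hold in memory: (num_steps + 1) × n values in this toy.

```python
import math


def sigmoid(s):
    return 1.0 / (1.0 + math.exp(-s))


def gru_cell(h_prev, m, p):
    """Scalar GRU: h_prev is the node's previous state, m its aggregated message."""
    z = sigmoid(p["wz"] * m + p["uz"] * h_prev)              # update gate
    r = sigmoid(p["wr"] * m + p["ur"] * h_prev)              # reset gate
    h_tilde = math.tanh(p["wh"] * m + p["uh"] * r * h_prev)  # candidate state
    return (1 - z) * h_prev + z * h_tilde


def ggnn(adj, x, params, w=0.5, num_steps=3):
    """Run a FIXED number of steps of h_v <- GRU(h_v, sum_{u in N(v)} w * h_u).

    Returns the states after every step, since BPTT needs all of them.
    """
    h = dict(x)  # h_v^(0) = x_v
    history = [h]
    for _ in range(num_steps):
        msgs = {v: sum(w * h[u] for u in adj[v]) for v in adj}
        h = {v: gru_cell(h[v], msgs[v], params) for v in adj}
        history.append(h)
    return history


params = {"wz": 1.0, "uz": 1.0, "wr": 1.0, "ur": 1.0, "wh": 1.0, "uh": 1.0}  # hypothetical
adj = {0: [1], 1: [0, 2], 2: [1]}
history = ggnn(adj, {0: 1.0, 1: 0.0, 2: -1.0}, params, num_steps=3)
print(len(history), history[-1])
```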

Stochastic Steady-state Embedding (SSE) proposes a learning algorithm that is more scalable to large graphs [18]. SSE updates node hidden states recurrently in a stochastic and asynchronous fashion. It alternately samples a batch of nodes for state update and a batch of nodes for gradient computation. To maintain stability, the recurrent function of SSE is defined as a weighted average of the historical states and new states, which takes the form

$$h_v^{(t)} = (1-\alpha)\, h_v^{(t-1)} + \alpha\, \mathbf{W}_1\, \sigma\left(\mathbf{W}_2 \left[x_v, \sum_{u \in N(v)} \left[h_u^{(t-1)}, x_u\right]\right]\right),$$

where $\alpha$ is a hyper-parameter and $h_v^{(0)}$ is initialized randomly.
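A scalar sketch of one SSE state-update phase follows. It makes three simplifying assumptions:

- a hypothetical transition `w1 * tanh(w2 * (x_v + Σ_u (h_u + x_u)))` replaces `W1 σ(W2 [...])`, with sums instead of concatenations;
- initial states are zeros instead of random values;
- the alternating gradient-computation batch is omitted entirely.

What it does show is the stochastic, asynchronous, weighted-average update. Only a sampled batch changes, and each change keeps a `1 - alpha` share of the old state.

```python
import math
import random


def sse_update(adj, x, h, alpha, batch_size, rng, w1=0.5, w2=0.5):
    """One stochastic, asynchronous SSE-style state update (gradient step omitted).

    Only a random batch of nodes is touched, and nodes are updated in place one
    after another, so later nodes in the batch already see earlier updates.
    Each new state is a weighted average of the old state and a fresh estimate,
    a scalar stand-in for W1 * sigma(W2 [x_v, sum_u [h_u, x_u]]).
    """
    batch = rng.sample(sorted(adj), batch_size)
    for v in batch:
        agg = sum(h[u] + x[u] for u in adj[v])      # neighbour states and features
        fresh = w1 * math.tanh(w2 * (x[v] + agg))   # hypothetical transition
        h[v] = (1 - alpha) * h[v] + alpha * fresh   # keep most of the history
    return batch


rng = random.Random(0)
adj = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}
x = {0: 1.0, 1: 0.0, 2: 0.5, 3: -0.5}
h = {v: 0.0 for v in adj}
before = dict(h)
batch = sse_update(adj, x, h, alpha=0.1, batch_size=2, rng=rng)
print(batch, h)
```

A small `alpha` makes each update move a state only slightly, which is the stabilizing effect the weighted average is meant to provide.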

Convolutional Graph Neural Networks (ConvGNNs)

Instead of iterating node states with contractive constraints, ConvGNNs address the cyclic mutual dependencies architecturally, using a fixed number of layers with different weights in each layer.

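RecGNNs vs. ConvGNNs

- RecGNNs use the same graph recurrent layer (Grec) at every step when updating node representations.

- ConvGNNs use a different graph convolutional layer (Gconv) at each layer when updating node representations.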
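The difference between the two families comes down to parameter sharing. In this hypothetical scalar sketch, `propagate` plays the role of one graph layer (neighbour sum, scalar weight, tanh). `recgnn` reuses a single weight at every step, while `convgnn` stacks a fixed number of layers, each with its own weight. The tanh matters: without a nonlinearity, scalar-weighted layers would collapse into a single product of weights.

```python
import math


def propagate(adj, h, weight):
    """One graph layer: sum own and neighbour states, scale by weight, apply tanh."""
    return {v: math.tanh(weight * (h[v] + sum(h[u] for u in adj[v]))) for v in adj}


def recgnn(adj, x, weight, steps):
    """RecGNN style: the same parameter (one shared Grec) is reused at every step."""
    h = dict(x)
    for _ in range(steps):
        h = propagate(adj, h, weight)
    return h


def convgnn(adj, x, layer_weights):
    """ConvGNN style: a fixed stack of layers, each with its own parameter (Gconv_k)."""
    h = dict(x)
    for weight in layer_weights:
        h = propagate(adj, h, weight)
    return h


adj = {0: [1], 1: [0, 2], 2: [1]}
x = {0: 1.0, 1: 0.0, 2: 0.0}
shared = recgnn(adj, x, weight=0.5, steps=3)
stacked = convgnn(adj, x, layer_weights=[1.0, 0.5, 0.25])
print(shared, stacked)
```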

ConvGNNs fall into two categories: spectral-based and spatial-based.

Spectral-based ConvGNNs

Spectral CNN faces three limitations:

- First, any perturbation to a graph results in a change of eigenbasis.

- Second, the learned filters are domain dependent, meaning they cannot be applied to a graph with a different structure.

- Third, eigen-decomposition requires $O(n^3)$ computational complexity, where n is the number of nodes.

In follow-up works, ChebNet [21] and GCN [22] reduce the computational complexity to $O(m)$, where m is the number of edges, by making several approximations and simplifications.
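To see why GCN avoids the $O(n^3)$ cost, here is a minimal sketch of one GCN-style propagation step. It uses scalar features and a scalar weight `w`, whereas real GCN uses feature matrices and a weight matrix. It applies the renormalized adjacency $\tilde{D}^{-1/2}(A+I)\tilde{D}^{-1/2}$ and then ReLU. It only loops over nodes and edges, so its cost grows linearly with n + m and no eigen-decomposition is required. The function name and the edge-list input format are assumptions for illustration.

```python
import math


def gcn_layer(num_nodes, edges, h, w):
    """One GCN-style layer on scalar node features (illustrative sketch).

    Computes h'_v = relu(w * sum over u in N(v) plus v itself of h_u / sqrt(d_u * d_v)),
    where d is the node degree after adding a self-loop, i.e. the renormalised
    adjacency D^-1/2 (A + I) D^-1/2. `edges` lists each undirected edge once.
    Every node and edge is touched a constant number of times: cost is O(n + m).
    """
    deg = [1] * num_nodes  # the self-loop adds 1 to every degree
    for u, v in edges:
        deg[u] += 1
        deg[v] += 1
    out = [h[v] / deg[v] for v in range(num_nodes)]  # self-loop term h_v / sqrt(d_v * d_v)
    for u, v in edges:  # an undirected edge carries a message in both directions
        norm = 1.0 / math.sqrt(deg[u] * deg[v])
        out[v] += norm * h[u]
        out[u] += norm * h[v]
    return [max(0.0, w * s) for s in out]  # scalar weight, then ReLU


# Path graph 0 - 1 - 2 with all-ones features.
print(gcn_layer(3, [(0, 1), (1, 2)], [1.0, 1.0, 1.0], w=1.0))
```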

Spatial-based ConvGNNs

Analogous to the convolutional operation of a conventional CNN on an image, spatial-based methods define graph convolutions based on a node's spatial relations.

The spatial graph convolutional operation essentially propagates node information along edges.
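Propagating information along edges can be sketched as message passing over an edge list. The mean aggregator and the scalar weights `self_weight` and `nbr_weight` are illustrative choices, not a specific published model.

```python
from collections import defaultdict


def spatial_conv(edges, h, self_weight=1.0, nbr_weight=1.0):
    """One spatial graph convolution on scalar features.

    Every directed edge (u, v) carries h[u] to v; each node then combines its
    own state with the mean of the messages it received (0 if none).
    """
    inbox = defaultdict(list)
    for u, v in edges:
        inbox[v].append(h[u])  # propagate node information along the edge
    return {
        v: self_weight * h[v] + nbr_weight * (sum(inbox[v]) / len(inbox[v]) if inbox[v] else 0.0)
        for v in h
    }


print(spatial_conv([(0, 1), (2, 1)], {0: 1.0, 1: 0.0, 2: 3.0}))
```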

Comparison between spectral and spatial models

Spatial models are preferred over spectral models due to efficiency, generality, and flexibility issues.

First, spectral models are less efficient than spatial models:

- Spectral models either need to perform eigenvector computation or handle the whole graph at the same time.

- Spatial models are more scalable to large graphs as they directly perform convolutions in the graph domain via information propagation.

Second, spectral models which rely on a graph Fourier basis generalize poorly to new graphs. They assume a fixed graph, and any perturbation to a graph would result in a change of eigenbasis. Spatial-based models, on the other hand, perform graph convolutions locally on each node, where weights can be easily shared across different locations and structures.

Third, spectral-based models are limited to operating on undirected graphs. Spatial-based models are more flexible in handling multi-source graph inputs such as edge inputs [15], [27], [86], [95], [96], directed graphs [25], [72], signed graphs [97], and heterogeneous graphs [98], [99], because these graph inputs can be incorporated into the aggregation function easily.
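As a hypothetical illustration of the flexibility argument, the aggregation below handles a directed, signed graph simply by passing the edge input into the message. No eigenbasis is involved. The function name and the `(u, v, e_uv)` edge format are assumptions.

```python
def signed_directed_conv(h, edges, self_weight=1.0):
    """Aggregate scalar features over a directed, signed graph.

    edges: list of (u, v, e_uv), meaning an edge u -> v with edge input e_uv
    (here a sign, +1 or -1). The edge input is just another argument of the
    message, and only v receives it, so edge direction is respected.
    """
    out = {v: self_weight * h[v] for v in h}
    for u, v, e_uv in edges:
        out[v] += e_uv * h[u]
    return out


h = {0: 1.0, 1: 0.0, 2: 3.0}
out = signed_directed_conv(h, [(0, 1, +1), (2, 1, -1)])
reversed_out = signed_directed_conv(h, [(1, 0, +1), (1, 2, -1)])
print(out, reversed_out)
```

Reversing the edges changes the result, which a method restricted to undirected graphs could not express.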

Graph Pooling Modules

After a GNN generates node features, we can use them for the final task. But using all these features directly can be computationally challenging, so a down-sampling strategy is needed.

Depending on the objective and the role it plays in the network, this strategy is given different names:

- (1) the pooling operation aims to reduce the size of parameters by down-sampling the nodes to generate smaller representations and thus avoid overfitting, permutation invariance, and computational complexity issues;

- (2) the readout operation is mainly used to generate graph-level representations based on node representations.

Their mechanisms are very similar.
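A minimal sketch of both operations on small node-feature vectors follows. `readout` (sum, mean, or max over nodes) produces a graph-level vector. `topk_pool` down-samples by keeping the k highest-scoring nodes. The scores are supplied by hand here, whereas learned pooling methods compute them inside the network. Treat `topk_pool` as a simplified stand-in rather than any particular published pooling layer.

```python
def readout(h, mode="mean"):
    """Collapse node representations {node: vector} into one graph-level vector."""
    vecs = list(h.values())
    dim = len(vecs[0])
    if mode == "sum":
        return [sum(v[i] for v in vecs) for i in range(dim)]
    if mode == "mean":
        return [sum(v[i] for v in vecs) / len(vecs) for i in range(dim)]
    if mode == "max":
        return [max(v[i] for v in vecs) for i in range(dim)]
    raise ValueError(f"unknown mode: {mode}")


def topk_pool(h, scores, k):
    """Pooling by down-sampling: keep only the k nodes with the highest scores."""
    keep = sorted(h, key=lambda v: scores[v], reverse=True)[:k]
    return {v: h[v] for v in keep}


h = {0: [1.0, 2.0], 1: [3.0, 0.0], 2: [2.0, 4.0]}
pooled = topk_pool(h, {0: 0.1, 1: 0.9, 2: 0.5}, k=2)  # hypothetical scores
print(readout(h, "sum"), readout(pooled, "mean"))
```

Sum, mean, and max do not depend on the order in which nodes are visited, which is why they are common readout choices.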

VC dimension

The VC dimension is a measure of model complexity defined as the largest number of points that can be shattered by a model, i.e., the size of the largest point set for which the model can realize every possible binary labeling.
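To make "shattered" concrete, the brute-force check below uses a hypothetical model family: positive rays on the real line ($h_t(x) = 1$ if $x \ge t$, else 0). It can shatter any single point but no pair of points, because no threshold labels the smaller point 1 and the larger point 0. Its VC dimension is therefore 1.

```python
from itertools import product


def ray_labels(points, t):
    """Hypothesis h_t(x) = 1 if x >= t else 0 (a 'positive ray')."""
    return tuple(1 if p >= t else 0 for p in points)


def shattered_by_rays(points):
    """True if every 0/1 labelling of `points` is produced by some threshold t."""
    # Thresholds at each point, plus one above all points, cover every distinct behaviour.
    candidates = list(points) + [max(points) + 1]
    achievable = {ray_labels(points, t) for t in candidates}
    return all(lab in achievable for lab in product((0, 1), repeat=len(points)))


print(shattered_by_rays([0.0]), shattered_by_rays([0.0, 1.0]))
```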

Graph isomorphism

Two graphs are isomorphic if they are topologically identical, i.e., there is a one-to-one mapping between their nodes that preserves adjacency.
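For tiny graphs, isomorphism can be checked by brute force: try every relabelling of the nodes and see whether it maps one edge set exactly onto the other. This sketch only pins down the definition. It takes O(n!) time and assumes undirected graphs without self-loops.

```python
from itertools import permutations


def are_isomorphic(n, edges_a, edges_b):
    """Brute-force isomorphism test for undirected graphs on nodes 0..n-1."""
    set_a = {frozenset(e) for e in edges_a}
    set_b = {frozenset(e) for e in edges_b}
    if len(set_a) != len(set_b):
        return False
    for perm in permutations(range(n)):
        # perm[i] is the new label of node i; check that it maps A's edges onto B's
        if {frozenset((perm[u], perm[v])) for u, v in edges_a} == set_b:
            return True
    return False


star = [(0, 1), (0, 2), (0, 3)]
path = [(0, 1), (1, 2), (2, 3)]
print(are_isomorphic(4, star, path))
```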

Equivariance and invariance

A GNN must be

- an equivariant function when performing node-level tasks (permuting the input nodes permutes the node outputs in the same way), and

- an invariant function when performing graph-level tasks (permuting the input nodes leaves the output unchanged).

In order to achieve equivariance or invariance, components of a GNN must be invariant to node orderings. Maron et al.
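The sketch below checks both properties on a toy graph. The sum-based `layer` is equivariant: relabelling the nodes relabels its output in the same way. The sum `graph_readout` is invariant: its output does not change. By contrast, `order_dependent_readout`, which lists node values by label, is neither. All function names here are illustrative.

```python
def layer(adj, h):
    """Node-level map: each node sums its own and its neighbours' features."""
    return {v: h[v] + sum(h[u] for u in adj[v]) for v in adj}


def graph_readout(h):
    """Graph-level map: sum over all nodes, independent of node order."""
    return sum(h.values())


def order_dependent_readout(h):
    """Counter-example: depends on which label each node happens to carry."""
    return [h[v] for v in sorted(h)]


def relabel(adj, h, perm):
    """Apply a node permutation perm (old label -> new label) to graph and features."""
    new_adj = {perm[v]: [perm[u] for u in nbrs] for v, nbrs in adj.items()}
    new_h = {perm[v]: val for v, val in h.items()}
    return new_adj, new_h


adj = {0: [1], 1: [0, 2], 2: [1]}
h = {0: 1, 1: 2, 2: 3}
perm = {0: 2, 1: 0, 2: 1}

out = layer(adj, h)
perm_adj, perm_h = relabel(adj, h, perm)
perm_out = layer(perm_adj, perm_h)
print(out, perm_out)
```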

Universal approximation

It is well known that multilayer perceptron (feedforward) networks with one hidden layer can approximate any Borel measurable function [105], given enough hidden units.
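The theorem is about existence, but a one-dimensional construction shows why more hidden units help. The sketch hand-picks the weights of a single hidden layer of steep sigmoid units (nothing is trained), so that the output follows a staircase approximation of $f(x) = x^2$ on [0, 1]. It illustrates the idea for one smooth function and is not a proof of the Borel-measurable result.

```python
import math


def sigmoid(s):
    """Numerically stable logistic function (avoids overflow for large |s|)."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def staircase_net(f, n_hidden, steepness):
    """Hand-built one-hidden-layer sigmoid network approximating f on [0, 1].

    Hidden unit i switches on around c_i = (i - 0.5) / n_hidden; its output weight
    is the jump f(x_i) - f(x_{i-1}) between neighbouring grid points.
    """
    grid = [i / n_hidden for i in range(n_hidden + 1)]
    centers = [(grid[i - 1] + grid[i]) / 2 for i in range(1, n_hidden + 1)]
    jumps = [f(grid[i]) - f(grid[i - 1]) for i in range(1, n_hidden + 1)]
    bias = f(grid[0])

    def net(x):
        return bias + sum(a * sigmoid(steepness * (x - c)) for a, c in zip(jumps, centers))

    return net


def max_error(f, net, samples=1001):
    xs = [j / (samples - 1) for j in range(samples)]
    return max(abs(f(x) - net(x)) for x in xs)


def square(x):
    return x * x


coarse = staircase_net(square, n_hidden=10, steepness=500.0)
fine = staircase_net(square, n_hidden=100, steepness=5000.0)
print(max_error(square, coarse), max_error(square, fine))
```

With ten times as many hidden units, the staircase steps shrink and the worst-case error on the sample grid drops accordingly.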

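Closing remarks

Reference: "A Comprehensive Survey on Graph Neural Networks"

Summary: There are still some technical terms I am not familiar with, and my progress is slow. I only have a rough grasp of some of the concepts and have not studied the detailed principles in depth.

This article is only a study note, recording a process of going from 0 to 1.

I hope it helps you a little. If there are mistakes, corrections are welcome!

Source: haihong.blog.csdn.net, author: 海轰Pro. Copyright belongs to the original author; please contact the author before reposting.

Original link: haihong.blog.csdn.net/article/details/123203984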
