PG高可用之Citus分布式集群搭建及使用

Citus集群简介

Citus是Postgres的开源扩展,将Postgres转换成一个分布式数据库,在集群的多个节点上分发数据和查询,具有像分片、分布式SQL引擎、复制表和分布式表等特性。

因为Citus是Postgres的扩展(而不是一个独立的代码分支),所以当你使用Citus时,你也在使用Postgres,可以利用最新的Postgres特性、工具和生态系统。

Citus是一款基于PostgreSQL的开源分布式数据库,自动继承了PostgreSQL强大的SQL支持能力和应用生态(不仅是客户端协议的兼容还包括服务端扩展和管理工具的完全兼容)。Citus是PostgreSQL的扩展(not a fork),采用shared nothing架构,节点之间无共享数据,由协调器节点和Work节点构成一个数据库集群。专注于高性能HTAP分布式数据库。

相比单机PostgreSQL,Citus可以使用更多的CPU核心,更多的内存数量,保存更多的数据。通过向集群添加节点,可以轻松的扩展数据库。与其他类似的基于PostgreSQL的分布式方案,比如Greenplum,PostgreSQL-XL相比,citus最大的不同在于它是一个PostgreSQL扩展而不是一个独立的代码分支。 Citus可以用很小的代价和更快的速度紧跟PostgreSQL的版本演进;同时又能最大程度的保证数据库的稳定性和兼容性。

Citus支持新版本PostgreSQL的特性,并保持与现有工具的兼容。 Citus使用分片和复制在多台机器上横向扩展PostgreSQL。它的查询引擎将在这些服务器上执行SQL进行并行化查询,以便在大型数据集上实现实时(不到一秒)的响应。

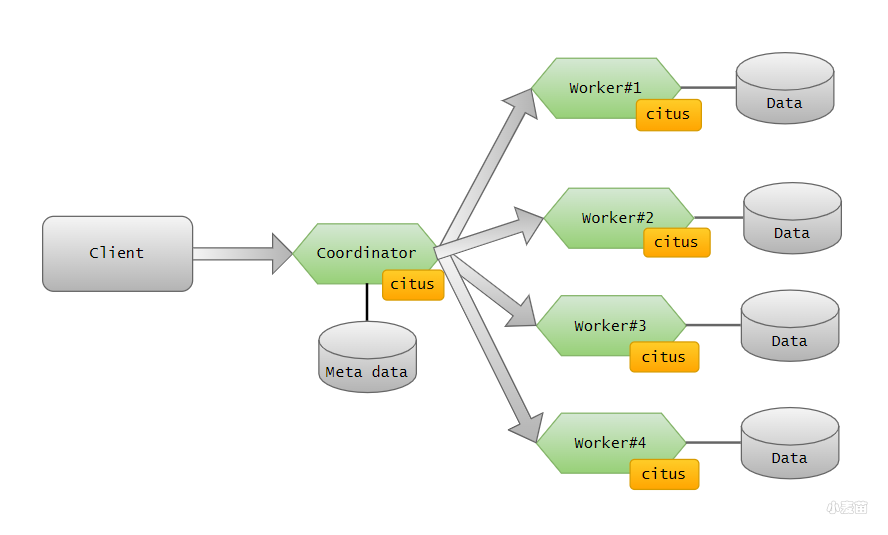

Citus集群由一个中心的协调节点(CN)和若干个工作节点(Worker)构成。

coordinate:协调节点,一般称为cn,存储所有元数据,不存实际数据,该节点直接对用户开放,等于一个客户端。

worker:工作节点,不存储元数据,存储实际数据。执行协调节点发来的查询请求。一般不直接对用户开放。

环境

| 主机名 | IP | 角色 | 端口 | 备注 |

|---|---|---|---|---|

| coordinate | 172.72.6.80 | coordinate | 5432 | 安装PG 13.3 + Citus 10.2.4 |

| worker1 | 172.72.6.81 | worker | 5432 | 安装PG 13.3 + Citus 10.2.4 |

| worker2 | 172.72.6.82 | worker | 5432 | 安装PG 13.3 + Citus 10.2.4 |

| worker3 | 172.72.6.83 | worker | 5432 | 安装PG 13.3 + Citus 10.2.4 |

| worker4 | 172.72.6.84 | worker | 5432 | 安装PG 13.3 + Citus 10.2.4 |

-- 网卡

docker network create --subnet=172.72.6.0/24 pg-network

-- pg cn

docker rm -f lhrpgcituscn80

docker run -d --name lhrpgcituscn80 -h lhrpgcituscn80 \

--net=pg-network --ip 172.72.6.80 \

-p 64380:5432 \

-v /sys/fs/cgroup:/sys/fs/cgroup \

--privileged=true lhrbest/lhrpgall:2.0 \

/usr/sbin/init

docker rm -f lhrpgcitusdn81

docker run -d --name lhrpgcitusdn81 -h lhrpgcitusdn81 \

--net=pg-network --ip 172.72.6.81 \

-p 64381:5432 \

-v /sys/fs/cgroup:/sys/fs/cgroup \

--privileged=true lhrbest/lhrpgall:2.0 \

/usr/sbin/init

docker rm -f lhrpgcitusdn82

docker run -d --name lhrpgcitusdn82 -h lhrpgcitusdn82 \

--net=pg-network --ip 172.72.6.82 \

-p 64382:5432 \

-v /sys/fs/cgroup:/sys/fs/cgroup \

--privileged=true lhrbest/lhrpgall:2.0 \

/usr/sbin/init

docker rm -f lhrpgcitusdn83

docker run -d --name lhrpgcitusdn83 -h lhrpgcitusdn83 \

--net=pg-network --ip 172.72.6.83 \

-p 64383:5432 \

-v /sys/fs/cgroup:/sys/fs/cgroup \

--privileged=true lhrbest/lhrpgall:2.0 \

/usr/sbin/init

docker rm -f lhrpgcitusdn84

docker run -d --name lhrpgcitusdn84 -h lhrpgcitusdn84 \

--net=pg-network --ip 172.72.6.84 \

-p 64384:5432 \

-v /sys/fs/cgroup:/sys/fs/cgroup \

--privileged=true lhrbest/lhrpgall:2.0 \

/usr/sbin/init

[root@docker35 ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

0183e7a9704a lhrbest/lhrpgall:2.0 "/usr/sbin/init" 6 seconds ago Up 3 seconds 0.0.0.0:64384->5432/tcp, :::64384->5432/tcp lhrpgcitusdn84

877d897a5a76 lhrbest/lhrpgall:2.0 "/usr/sbin/init" 8 seconds ago Up 6 seconds 0.0.0.0:64383->5432/tcp, :::64383->5432/tcp lhrpgcitusdn83

98dafcefc505 lhrbest/lhrpgall:2.0 "/usr/sbin/init" 10 seconds ago Up 7 seconds 0.0.0.0:64382->5432/tcp, :::64382->5432/tcp lhrpgcitusdn82

04510e0bfa96 lhrbest/lhrpgall:2.0 "/usr/sbin/init" 11 seconds ago Up 10 seconds 0.0.0.0:64381->5432/tcp, :::64381->5432/tcp lhrpgcitusdn81

8cf991b0633f lhrbest/lhrpgall:2.0 "/usr/sbin/init" 13 seconds ago Up 11 seconds 0.0.0.0:64380->5432/tcp, :::64380->5432/tcp lhrpgcituscn80

防火墙修改

其中,coordinate节点的pg_hba.conf配置:

cat >> /var/lib/pgsql/13/data/pg_hba.conf <<"EOF"

host all all 0.0.0.0/0 md5

EOF

worker节点的pg_hba.conf配置:

cat >> /var/lib/pgsql/13/data/pg_hba.conf <<"EOF"

host all all 172.72.6.0/24 trust

EOF

安装citus

在每个节点上都安装citus,包括cn和dn。

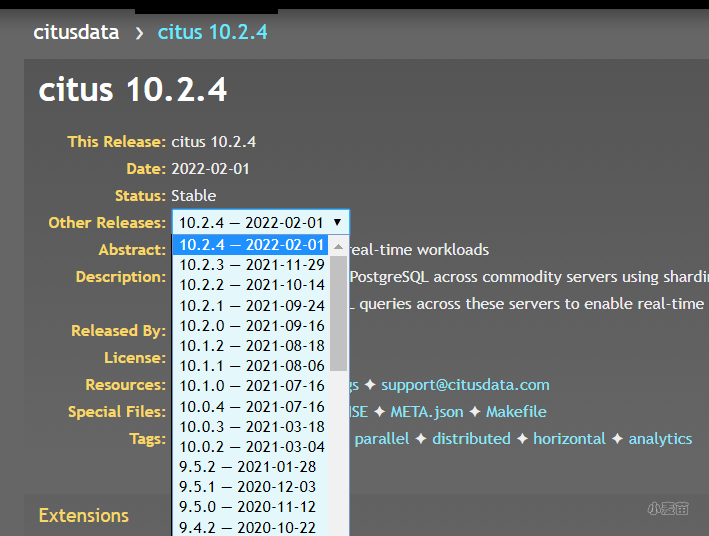

可以在以下位置下载citus的源码:

https://github.com/citusdata/citus/releases

https://pgxn.org/dist/citus/10.2.4/

最新版本10.2.4,如下:

-- yum直接安装

yum list | grep citus

yum install -y citus_13

su - postgresql

psql

create database lhrdb;

\c lhrdb

alter system set shared_preload_libraries='citus';

select * from pg_available_extensions where name='citus';

pg_ctl restart

psql -d lhrdb

create extension citus;

\dx

\dx+ citus

集群配置

协调节点新增工作节点

管理操作仅仅在协调节点(cn)上操作:

[postgres@lhrpgcituscn80 ~]$ psql -d lhrdb

psql (13.3)

Type "help" for help.

lhrdb=#

-- 节点可以是ip或者dns name

SELECT * from master_add_node('172.72.6.81', 5432);

SELECT * from master_add_node('172.72.6.82', 5432);

SELECT * from master_add_node('172.72.6.83', 5432);

SELECT * from master_add_node('172.72.6.84', 5432);

-- 查看工作节点:

lhrdb=# SELECT * FROM master_get_active_worker_nodes();

node_name | node_port

-------------+-----------

172.72.6.81 | 5432

172.72.6.83 | 5432

172.72.6.84 | 5432

172.72.6.82 | 5432

(4 rows)

lhrdb=# select * from pg_dist_node;

nodeid | groupid | nodename | nodeport | noderack | hasmetadata | isactive | noderole | nodecluster | metadatasynced | shouldhaveshards

--------+---------+-------------+----------+----------+-------------+----------+----------+-------------+----------------+------------------

1 | 1 | 172.72.6.81 | 5432 | default | f | t | primary | default | f | t

2 | 2 | 172.72.6.82 | 5432 | default | f | t | primary | default | f | t

3 | 3 | 172.72.6.83 | 5432 | default | f | t | primary | default | f | t

4 | 4 | 172.72.6.84 | 5432 | default | f | t | primary | default | f | t

(4 rows)

创建分片表

lhrdb=# create table test(id int primary key ,name varchar);

#配置分片策略

#设置分片数,4个主机,设置分片4,每个主机一个分片

lhrdb=# set citus.shard_count=4;

# 配置副本数

lhrdb=# set citus.shard_replication_factor=2;

lhrdb=# SELECT create_distributed_table('test', 'id', 'hash');

lhrdb=# insert into test select id,md5(random()::text) from generate_series(1,500) as id;

# 查看分片分布

lhrdb=# select * from citus_tables;

table_name | citus_table_type | distribution_column | colocation_id | table_size | shard_count | table_owner | access_method

------------+------------------+---------------------+---------------+------------+-------------+-------------+---------------

test | distributed | id | 1 | 384 kB | 4 | postgres | heap

(1 row)

lhrdb=# select * from master_get_table_metadata('test');

logical_relid | part_storage_type | part_method | part_key | part_replica_count | part_max_size | part_placement_policy

---------------+-------------------+-------------+----------+--------------------+---------------+-----------------------

16995 | t | h | id | 2 | 1073741824 | 2

(1 row)

lhrdb=# select * from pg_dist_placement where shardid in (select shardid from pg_dist_shard where logicalrelid='test'::regclass);

placementid | shardid | shardstate | shardlength | groupid

-------------+---------+------------+-------------+---------

1 | 102008 | 1 | 0 | 1

2 | 102008 | 1 | 0 | 2

3 | 102009 | 1 | 0 | 2

4 | 102009 | 1 | 0 | 3

5 | 102010 | 1 | 0 | 3

6 | 102010 | 1 | 0 | 4

7 | 102011 | 1 | 0 | 4

8 | 102011 | 1 | 0 | 1

(8 rows)

lhrdb=# SELECT * from pg_dist_shard_placement order by shardid, placementid;

shardid | shardstate | shardlength | nodename | nodeport | placementid

---------+------------+-------------+-------------+----------+-------------

102008 | 1 | 0 | 172.72.6.81 | 5432 | 1

102008 | 1 | 0 | 172.72.6.82 | 5432 | 2

102009 | 1 | 0 | 172.72.6.82 | 5432 | 3

102009 | 1 | 0 | 172.72.6.83 | 5432 | 4

102010 | 1 | 0 | 172.72.6.83 | 5432 | 5

102010 | 1 | 0 | 172.72.6.84 | 5432 | 6

102011 | 1 | 0 | 172.72.6.84 | 5432 | 7

102011 | 1 | 0 | 172.72.6.81 | 5432 | 8

(8 rows)

lhrdb=# select count(*) from test;

count

-------

500

(1 row)

-- 查看分片表

[postgres@lhrpgcitusdn84 ~]$ psql -U postgres -h 172.72.6.80 -d lhrdb -c "\dt";

List of relations

Schema | Name | Type | Owner

--------+------+-------+----------

public | test | table | postgres

(1 row)

[postgres@lhrpgcitusdn84 ~]$ psql -U postgres -h 172.72.6.81 -d lhrdb -c "\dt";

List of relations

Schema | Name | Type | Owner

--------+-------------+-------+----------

public | test_102008 | table | postgres

public | test_102011 | table | postgres

(2 rows)

[postgres@lhrpgcitusdn84 ~]$ psql -U postgres -h 172.72.6.82 -d lhrdb -c "\dt";

List of relations

Schema | Name | Type | Owner

--------+-------------+-------+----------

public | test_102008 | table | postgres

public | test_102009 | table | postgres

(2 rows)

[postgres@lhrpgcitusdn84 ~]$ psql -U postgres -h 172.72.6.83 -d lhrdb -c "\dt";

List of relations

Schema | Name | Type | Owner

--------+-------------+-------+----------

public | test_102009 | table | postgres

public | test_102010 | table | postgres

(2 rows)

[postgres@lhrpgcitusdn84 ~]$ psql -U postgres -h 172.72.6.84 -d lhrdb -c "\dt";

List of relations

Schema | Name | Type | Owner

--------+-------------+-------+----------

public | test_102010 | table | postgres

public | test_102011 | table | postgres

(2 rows)

有4个worker,所以数据分片为4,每个分片,做两个副本。

通过分片分布,如102008分布在172.72.6.81,172.72.6.82上,同理102009分布在172.72.6.82,172.72.6.83上。

假设6.81机器宕机了,集群访问102008原先是方位6.81的,现在会自动访问6.82上的102008分片。也就是说,单个数据节点故障,集群还能正常用,通过多设置副本,多个节点故障也能更强壮。

CN节点的进程:

[root@lhrpgcituscn80 /]# ps -ef|grep post

postgres 1589 0 0 10:27 ? 00:00:00 /usr/pgsql-13/bin/postgres -D /var/lib/pgsql/13/data/

postgres 1590 1589 0 10:27 ? 00:00:00 postgres: logger

postgres 1592 1589 0 10:27 ? 00:00:00 postgres: checkpointer

postgres 1593 1589 0 10:27 ? 00:00:00 postgres: background writer

postgres 1594 1589 0 10:27 ? 00:00:00 postgres: walwriter

postgres 1595 1589 0 10:27 ? 00:00:00 postgres: autovacuum launcher

postgres 1596 1589 0 10:27 ? 00:00:00 postgres: stats collector

postgres 1597 1589 0 10:27 ? 00:00:00 postgres: logical replication launcher

postgres 1641 1589 0 10:28 ? 00:00:03 postgres: Citus Maintenance Daemon: 16430/10

DN节点的进程:

[root@lhrpgcitusdn81 /]# ps -ef|grep post

postgres 8661 0 0 11:09 ? 00:00:00 /usr/pgsql-13/bin/postgres -D /var/lib/pgsql/13/data/

postgres 8662 8661 0 11:09 ? 00:00:00 postgres: logger

postgres 8665 8661 0 11:09 ? 00:00:00 postgres: checkpointer

postgres 8666 8661 0 11:09 ? 00:00:00 postgres: background writer

postgres 8667 8661 0 11:09 ? 00:00:00 postgres: walwriter

postgres 8668 8661 0 11:09 ? 00:00:00 postgres: autovacuum launcher

postgres 8669 8661 0 11:09 ? 00:00:00 postgres: stats collector

postgres 8670 8661 0 11:09 ? 00:00:00 postgres: logical replication launcher

postgres 8710 8661 0 11:10 ? 00:00:00 postgres: Citus Maintenance Daemon: 13255/10

postgres 8720 8661 0 11:10 ? 00:00:00 postgres: Citus Maintenance Daemon: 16430/10

postgres 9591 8661 0 11:25 ? 00:00:00 postgres: postgres lhrdb 172.72.6.80(58852) idle

postgres 13145 8661 0 12:27 ? 00:00:00 postgres: postgres lhrdb 172.72.6.80(58998) idle

所有变量查询,可以使用tab键自动返回相关变量:

lhrdb=# set citus.

citus.all_modifications_commutative citus.count_distinct_error_rate

citus.enable_binary_protocol citus.enable_local_execution

citus.enable_repartition_joins citus.explain_analyze_sort_method

citus.local_hostname citus.log_remote_commands

citus.max_cached_connection_lifetime citus.max_intermediate_result_size

citus.multi_shard_modify_mode citus.node_connection_timeout

citus.propagate_set_commands citus.shard_count

citus.shard_placement_policy citus.task_assignment_policy

citus.values_materialization_threshold citus.writable_standby_coordinator

citus.coordinator_aggregation_strategy citus.defer_drop_after_shard_move

citus.enable_deadlock_prevention citus.enable_local_reference_table_foreign_keys

citus.explain_all_tasks citus.limit_clause_row_fetch_count

citus.local_table_join_policy citus.max_adaptive_executor_pool_size

citus.max_cached_conns_per_worker citus.multi_shard_commit_protocol

citus.multi_task_query_log_level citus.partition_buffer_size

citus.remote_task_check_interval citus.shard_max_size

citus.shard_replication_factor citus.task_executor_type

citus.worker_min_messages

lhrdb=# set citus.shard_

citus.shard_count citus.shard_max_size

citus.shard_placement_policy citus.shard_replication_factor

lhrdb=# show citus.shard_count ;

citus.shard_count

-------------------

32

(1 row)

查看所有执行计划

默认情况下,Citus中查看执行计划会省略大部分不同节点的相同计划,如果想查看完整的查询计划,会话设置如下:

lhrdb=# explain select count(*) from test;

QUERY PLAN

------------------------------------------------------------------------------------------

Aggregate (cost=250.00..250.02 rows=1 width=8)

-> Custom Scan (Citus Adaptive) (cost=0.00..0.00 rows=100000 width=8)

Task Count: 4

Tasks Shown: One of 4

-> Task

Node: host=172.72.6.81 port=5432 dbname=lhrdb

-> Aggregate (cost=2.49..2.50 rows=1 width=8)

-> Seq Scan on test_102008 test (cost=0.00..2.19 rows=119 width=0)

(8 rows)

lhrdb=# SET citus.explain_all_tasks = 'TRUE';

SET

lhrdb=# explain select count(*) from test;

QUERY PLAN

------------------------------------------------------------------------------------------

Aggregate (cost=250.00..250.02 rows=1 width=8)

-> Custom Scan (Citus Adaptive) (cost=0.00..0.00 rows=100000 width=8)

Task Count: 4

Tasks Shown: All

-> Task

Node: host=172.72.6.81 port=5432 dbname=lhrdb

-> Aggregate (cost=2.49..2.50 rows=1 width=8)

-> Seq Scan on test_102008 test (cost=0.00..2.19 rows=119 width=0)

-> Task

Node: host=172.72.6.82 port=5432 dbname=lhrdb

-> Aggregate (cost=3.73..3.73 rows=1 width=8)

-> Seq Scan on test_102009 test (cost=0.00..3.38 rows=138 width=0)

-> Task

Node: host=172.72.6.83 port=5432 dbname=lhrdb

-> Aggregate (cost=2.47..2.48 rows=1 width=8)

-> Seq Scan on test_102010 test (cost=0.00..2.18 rows=118 width=0)

-> Task

Node: host=172.72.6.84 port=5432 dbname=lhrdb

-> Aggregate (cost=3.56..3.57 rows=1 width=8)

-> Seq Scan on test_102011 test (cost=0.00..3.25 rows=125 width=0)

(20 rows)

- 点赞

- 收藏

- 关注作者

评论(0)