[Paper Code] GraphSAGE (work in progress)

1. Official Code

Besides the TensorFlow version, the authors also open-sourced a simple, easily extensible PyTorch version. It uses smaller datasets, not the ones from the paper.

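The Cora dataset consists of machine learning papers. Each paper is classified into exactly one of the following seven categories:

- Case-based
- Genetic algorithms
- Neural networks
- Probabilistic methods
- Reinforcement learning
- Rule learning
- Theory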

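The papers were selected so that, in the final corpus, every paper cites or is cited by at least one other paper. The corpus contains 2708 papers in total.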

After stemming and stop-word removal, all words with a document frequency below 10 were dropped, leaving a vocabulary of 1433 unique words.
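Because every paper cites or is cited by at least one other paper, every node in the citation graph has degree at least 1, so the graph contains no isolated nodes (consistent with the `Contains isolated nodes: False` line in the PyG output later in this post). A minimal NumPy sketch of that check, run on a hypothetical toy edge list rather than the real Cora data; the function name `has_isolated_nodes` is an assumption for illustration:

```python
import numpy as np

def has_isolated_nodes(edge_index: np.ndarray, num_nodes: int) -> bool:
    """Return True if some node id in [0, num_nodes) appears in no edge."""
    # Count how often each node id occurs anywhere in the 2 x E edge array;
    # a count of zero means the node neither cites nor is cited.
    degree = np.bincount(edge_index.ravel(), minlength=num_nodes)
    return bool((degree == 0).any())

# Hypothetical toy citation graph; each edge is stored in both directions.
edge_index = np.array([[0, 1, 1, 2],
                       [1, 0, 2, 1]])
print(has_isolated_nodes(edge_index, num_nodes=3))  # False
print(has_isolated_nodes(edge_index, num_nodes=4))  # True: node 3 has no edges
```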

1.1 Loading the Data
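The raw Cora release ships two text files: `cora.content`, with one line per paper (paper ID, 1433 binary word indicators, class label), and `cora.cites`, with one citation per line (cited paper ID, citing paper ID). The sketch below parses lines in that format into a feature matrix, integer labels and undirected adjacency sets. It is only an approximation of a loader like the one in graphsage-simple, not its code: the helper name `load_cora_lines`, the three-feature toy lines and reading from in-memory lists instead of files are all assumptions.

```python
from collections import defaultdict

import numpy as np

def load_cora_lines(content_lines, cites_lines):
    """Parse Cora-style content/cites lines (hypothetical helper).

    content line: <paper_id> <word_0> ... <word_k> <class_label>
    cites line:   <cited_paper_id> <citing_paper_id>
    """
    node_map, label_map = {}, {}   # paper ID -> row index, class name -> int
    feats, labels = [], []
    for i, line in enumerate(content_lines):
        info = line.strip().split()
        node_map[info[0]] = i
        feats.append([float(v) for v in info[1:-1]])  # binary word indicators
        if info[-1] not in label_map:
            label_map[info[-1]] = len(label_map)
        labels.append(label_map[info[-1]])

    adj_lists = defaultdict(set)
    for line in cites_lines:
        cited, citing = line.strip().split()
        a, b = node_map[cited], node_map[citing]
        # Store each citation as an undirected edge.
        adj_lists[a].add(b)
        adj_lists[b].add(a)
    return np.array(feats), np.array(labels, dtype=np.int64), adj_lists

# Toy input: 3 papers with 3 word features each (real Cora has 1433).
content = ["p1 0 1 0 Neural_Networks",
           "p2 1 0 1 Rule_Learning",
           "p3 0 0 1 Neural_Networks"]
cites = ["p1 p2", "p3 p1"]
x, y, adj = load_cora_lines(content, cites)
print(x.shape, y.tolist(), dict(adj))
```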

1.2 Unsupervised Loss
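The GraphSAGE paper trains unsupervised embeddings with a graph-based loss that pulls together nodes that co-occur on short random walks and pushes apart randomly sampled negative nodes:

$$J_{\mathcal{G}}(\mathbf{z}_u) = -\log\left(\sigma(\mathbf{z}_u^{\top}\mathbf{z}_v)\right) - Q \cdot \mathbb{E}_{v_n \sim P_n(v)} \log\left(\sigma(-\mathbf{z}_u^{\top}\mathbf{z}_{v_n})\right)$$

where $v$ co-occurs near $u$ on a fixed-length random walk, $\sigma$ is the sigmoid, $P_n$ is a negative-sampling distribution and $Q$ is the number of negative samples. A minimal NumPy sketch for a single $(u, v)$ pair, assuming the $Q$ negatives have already been sampled (random walks and negative sampling are not shown) and approximating $Q \cdot \mathbb{E}[\cdot]$ by a sum over those $Q$ samples; the function names are hypothetical:

```python
import numpy as np

def log_sigmoid(t):
    # Numerically stable log(sigmoid(t)) = -log(1 + exp(-t)).
    return -np.logaddexp(0.0, -t)

def unsup_loss(z_u, z_v, z_neg):
    """Unsupervised GraphSAGE-style loss for one positive pair (u, v).

    z_u, z_v: (d,) embeddings of a node and a node co-occurring with it
    z_neg:    (Q, d) embeddings of Q pre-sampled negative nodes
    """
    positive = -log_sigmoid(z_u @ z_v)             # pull u and v together
    negative = -log_sigmoid(-(z_neg @ z_u)).sum()  # push u away from negatives
    return positive + negative

z_u = np.array([1.0, 0.0])
z_v = np.array([1.0, 0.0])
z_neg = np.array([[-1.0, 0.0], [0.0, 1.0]])
print(unsup_loss(z_u, z_v, z_neg))
```

In a real training loop this per-pair loss would be averaged over a batch of pairs and minimized with respect to the encoder parameters that produce the embeddings.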

1.3 Models
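At its core, each GraphSAGE layer samples a fixed-size set of neighbours, aggregates their representations, combines the result with the node's own representation, and applies a linear map and a nonlinearity. The sketch below shows one such layer with the mean aggregator in the concatenation form $\mathrm{ReLU}(\mathbf{W}[\mathbf{h}_v \,\Vert\, \mathrm{mean}_{u \in S(v)} \mathbf{h}_u])$, where $S(v)$ is the sampled neighbour set. It is a NumPy illustration, not code from the repository; the function name `sage_mean_layer`, the default sample size of 10 and the toy data are assumptions. A $K$-layer model stacks $K$ such layers, sampling neighbours hop by hop.

```python
import numpy as np

def sage_mean_layer(h, adj_lists, nodes, W, num_sample=10, rng=None):
    """One GraphSAGE-style layer with a mean aggregator (concat variant).

    h:         (N, d_in) input representations of all nodes
    adj_lists: dict mapping node id -> set of neighbour ids
    nodes:     ids of the nodes to compute outputs for
    W:         (d_out, 2 * d_in) weights applied to [h_v || neighbour mean]
    """
    rng = np.random.default_rng() if rng is None else rng
    out = []
    for v in nodes:
        neigh = sorted(adj_lists.get(v, ()))
        # Uniformly sample at most num_sample neighbours without replacement.
        if len(neigh) > num_sample:
            neigh = rng.choice(neigh, size=num_sample, replace=False)
        if len(neigh) > 0:
            neigh_mean = h[neigh].mean(axis=0)
        else:
            neigh_mean = np.zeros(h.shape[1])  # no neighbours: use zeros
        combined = np.concatenate([h[v], neigh_mean])
        out.append(np.maximum(W @ combined, 0.0))  # linear map + ReLU
    return np.stack(out)

h = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
adj_lists = {0: {1, 2}, 1: {0}, 2: {0}}
W = np.hstack([np.eye(2), np.eye(2)])  # toy weights: output = self + neighbour mean
print(sage_mean_layer(h, adj_lists, [0, 1], W))
```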

1.4 Evaluation and Using the Model
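The GraphSAGE paper reports micro-averaged F1 scores for node classification. For a single-label multi-class task such as Cora, where every node receives exactly one predicted class, pooling true positives, false positives and false negatives over all classes makes micro-F1 equal to plain accuracy. A small NumPy sketch; the function name `micro_f1` is hypothetical, and scikit-learn's `f1_score(y_true, y_pred, average="micro")` computes the same quantity:

```python
import numpy as np

def micro_f1(y_true, y_pred, num_classes):
    """Micro-averaged F1: pool TP/FP/FN over all classes, then compute F1."""
    tp = fp = fn = 0
    for c in range(num_classes):
        tp += np.sum((y_pred == c) & (y_true == c))
        fp += np.sum((y_pred == c) & (y_true != c))
        fn += np.sum((y_pred != c) & (y_true == c))
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return float(2 * precision * recall / (precision + recall))

y_true = np.array([0, 1, 2, 2, 1])
y_pred = np.array([0, 2, 2, 2, 1])
print(micro_f1(y_true, y_pred, num_classes=3))
print(np.mean(y_true == y_pred))  # accuracy, for comparison
```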

1.5 Main
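A from-scratch main script typically draws its own random train/validation/test split of the nodes and then trains on mini-batches of training nodes, unlike the fixed public Planetoid split (140 training, 500 validation, 1000 test nodes) used by the PyG example later in this post. A sketch under those assumptions; `random_node_split`, the 1000/500 test/validation sizes and the batch size of 256 are illustrative choices, so check the repository's run script for the values it actually uses:

```python
import numpy as np

def random_node_split(num_nodes, num_test=1000, num_val=500, seed=1):
    """Randomly partition node ids into train / validation / test index arrays."""
    rng = np.random.default_rng(seed)
    perm = rng.permutation(num_nodes)
    test_idx = perm[:num_test]
    val_idx = perm[num_test:num_test + num_val]
    train_idx = perm[num_test + num_val:]
    return train_idx, val_idx, test_idx

train_idx, val_idx, test_idx = random_node_split(2708)  # Cora has 2708 nodes
print(len(train_idx), len(val_idx), len(test_idx))      # 1208 500 1000

# One training step would then draw a mini-batch of training nodes:
rng = np.random.default_rng(0)
batch_nodes = rng.choice(train_idx, size=256, replace=False)
```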

2. PyG Version

$$\mathbf{x}^{\prime}_i = \mathbf{W}_1 \mathbf{x}_i + \mathbf{W}_2 \cdot \mathrm{mean}_{j \in \mathcal{N}(i)} \mathbf{x}_j$$
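In PyG, neighbours are read from `edge_index`, a $2 \times E$ array in which, under the default `flow="source_to_target"`, row 0 holds the source node $j$ and row 1 the target node $i$ that receives the message. A minimal NumPy sketch of the formula above under that convention, with no bias and no normalization; `sage_conv_mean` is a hypothetical name and this is not PyG's implementation:

```python
import numpy as np

def sage_conv_mean(x, edge_index, W1, W2):
    """x'_i = W1 x_i + W2 * mean_{j in N(i)} x_j  (no bias, no normalization).

    x:          (N, d_in) node features
    edge_index: (2, E) integer array; row 0 = source j, row 1 = target i
    W1, W2:     (d_out, d_in) weight matrices
    """
    src, dst = edge_index
    agg = np.zeros(x.shape, dtype=float)
    np.add.at(agg, dst, x[src])                   # sum neighbour features per target
    deg = np.bincount(dst, minlength=x.shape[0])  # in-degree of each target
    agg /= np.maximum(deg, 1)[:, None]            # sum -> mean; isolated nodes stay 0
    return x @ W1.T + agg @ W2.T

# Undirected toy graph with edges 0-1 and 0-2, each stored in both directions.
x = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
edge_index = np.array([[0, 1, 0, 2],
                       [1, 0, 2, 0]])
print(sage_conv_mean(x, edge_index, np.eye(2), np.eye(2)))
```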

PyG implements this layer as `class SAGEConv(MessagePassing)`. Its main parameters are:

(1) `in_channels` (int or tuple): Size of each input sample, or -1 to derive the size from the first input(s) to the forward method. A tuple corresponds to the sizes of the source and target dimensionalities.

(2) `out_channels` (int): Size of each output sample.

(3) `normalize` (bool, optional): If set to `True`, output features will be $\ell_2$-normalized, i.e., $\frac{\mathbf{x}^{\prime}_i}{\| \mathbf{x}^{\prime}_i \|_2}$. (default: `False`)

(4) `root_weight` (bool, optional): If set to `False`, the layer will not add transformed root node features to the output. (default: `True`)

(5) `bias` (bool, optional): If set to `False`, the layer will not learn an additive bias. (default: `True`)

(6) `**kwargs` (optional): Additional arguments passed to the `MessagePassing` base class.
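The tuple form of `in_channels` matters on bipartite graphs, such as the source/target blocks produced by neighbour sampling, where source and target nodes can have different feature sizes: $\mathbf{W}_2$ then acts on source features and $\mathbf{W}_1$ on target features. The NumPy sketch below shows how `root_weight`, `bias` and `normalize` change the output in that setting. It is a hypothetical re-implementation for illustration only; the function and argument names are assumptions, not PyG internals.

```python
import numpy as np

def sage_conv_bipartite(x_src, x_dst, edge_index, W_neigh, W_root, b,
                        root_weight=True, normalize=False, bias=True):
    """Hypothetical SAGEConv-style layer on a bipartite (source -> target) graph.

    x_src: (N_src, d_src) source features;  x_dst: (N_dst, d_dst) target features
    W_neigh: (d_out, d_src) for the neighbour mean;  W_root: (d_out, d_dst)
    """
    src, dst = edge_index
    agg = np.zeros((x_dst.shape[0], x_src.shape[1]))
    np.add.at(agg, dst, x_src[src])                   # sum source features per target
    agg /= np.maximum(np.bincount(dst, minlength=x_dst.shape[0]), 1)[:, None]
    out = agg @ W_neigh.T
    if bias:
        out = out + b                                 # additive bias
    if root_weight:
        out = out + x_dst @ W_root.T                  # transformed root (target) features
    if normalize:
        norm = np.linalg.norm(out, axis=1, keepdims=True)
        out = out / np.maximum(norm, 1e-12)           # row-wise l2 normalization
    return out

# 3 source nodes with 3 features, 2 target nodes with 2 features, d_out = 2.
x_src = np.eye(3)
x_dst = np.array([[1.0, 0.0], [0.0, 2.0]])
edge_index = np.array([[0, 1, 2],    # sources
                       [0, 0, 1]])   # targets
W_neigh = np.array([[1.0, 1.0, 0.0], [0.0, 0.0, 1.0]])
W_root = np.eye(2)
b = np.zeros(2)
print(sage_conv_bipartite(x_src, x_dst, edge_index, W_neigh, W_root, b))
print(sage_conv_bipartite(x_src, x_dst, edge_index, W_neigh, W_root, b, normalize=True))
```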

Official code: https://github.com/williamleif/graphsage-simple/

With PyTorch Geometric (PyG), GraphSAGE is also easy to use:

# -*- coding: utf-8 -*-

"""

Created on Fri Oct 8 23:16:13 2021

@author: 86493

"""

import torch

from torch_geometric.datasets import Planetoid

from torch_geometric.transforms import NormalizeFeatures

dataset = Planetoid(root='C:/dataset/Cora/processed', name='Cora', transform=NormalizeFeatures())

print()

print(f'Dataset: {dataset}:')

print('======================')

print(f'Number of graphs: {len(dataset)}')

print(f'Number of features: {dataset.num_features}')

print(f'Number of classes: {dataset.num_classes}')

data = dataset[0] # Get the first graph object.

print()

print(data)

print('======================')

# Gather some statistics about the graph.

print(f'Number of nodes: {data.num_nodes}')

print(f'Number of edges: {data.num_edges}')

print(f'Average node degree: {data.num_edges / data.num_nodes:.2f}')

print(f'Number of training nodes: {data.train_mask.sum()}')

print(f'Training node label rate: {int(data.train_mask.sum()) / data.num_nodes:.2f}')

print(f'Contains isolated nodes: {data.has_isolated_nodes()}')

print(f'Contains self-loops: {data.has_self_loops()}')

print(f'Is undirected: {data.is_undirected()}')

# 2.可视化节点表征分布的方法

import matplotlib.pyplot as plt

from sklearn.manifold import TSNE

def visualize(h, color):

z = TSNE(n_components=2).fit_transform(h.detach().cpu().numpy())

plt.figure(figsize=(10,10))

plt.xticks([])

plt.yticks([])

plt.scatter(z[:, 0], z[:, 1], s=70, c=color, cmap="Set2")

plt.show()

# 网络的构造

import torch

from torch.nn import Linear

import torch.nn.functional as F

"""

from torch_geometric.nn import GCNConv

class GCN(torch.nn.Module):

def __init__(self, hidden_channels):

super(GCN, self).__init__()

torch.manual_seed(12345)

self.conv1 = GCNConv(dataset.num_features, hidden_channels)

self.conv2 = GCNConv(hidden_channels, dataset.num_classes)

def forward(self, x, edge_index):

x = self.conv1(x, edge_index)

x = x.relu()

x = F.dropout(x, p=0.5, training=self.training)

x = self.conv2(x, edge_index)

return x

"""

from torch_geometric.nn import SAGEConv

class SAGE(torch.nn.Module):

def __init__(self, hidden_channels):

super(SAGE, self).__init__()

torch.manual_seed(12345)

self.conv1 = SAGEConv(dataset.num_features, hidden_channels)

self.conv2 = SAGEConv(hidden_channels, dataset.num_classes)

def forward(self, x, edge_index):

x = self.conv1(x, edge_index)

x = x.relu()

x = F.dropout(x, p=0.5, training=self.training)

x = self.conv2(x, edge_index)

return x

model = SAGE(hidden_channels=16)

print(model)

# 可视化由未经训练的图神经网络生成的节点表征

model = SAGE(hidden_channels=16)

model.eval()

out = model(data.x, data.edge_index)

visualize(out, color=data.y)

# 图神经网络的训练

model = SAGE(hidden_channels=16)

optimizer = torch.optim.Adam(model.parameters(), lr=0.01, weight_decay=5e-4)

criterion = torch.nn.CrossEntropyLoss()

def train():

model.train()

optimizer.zero_grad() # Clear gradients.

out = model(data.x, data.edge_index) # Perform a single forward pass.

loss = criterion(out[data.train_mask], data.y[data.train_mask]) # Compute the loss solely based on the training nodes.

loss.backward() # Derive gradients.

optimizer.step() # Update parameters based on gradients.

return loss

for epoch in range(1, 201):

loss = train()

print(f'Epoch: {epoch:03d}, Loss: {loss:.4f}')

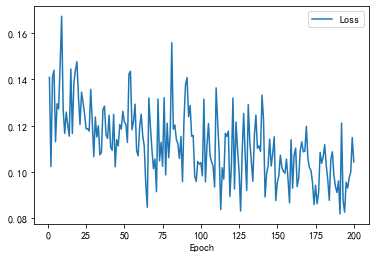

# 增加loss折线图

import pandas as pd

df = pd.DataFrame(columns = ["Loss"]) # columns列名

df.index.name = "Epoch"

for epoch in range(1, 201):

loss = train()

#df.loc[epoch] = loss.item()

df.loc[epoch] = loss.item()

df.plot()

# 图神经网络的测试

def test():

model.eval()

out = model(data.x, data.edge_index)

pred = out.argmax(dim=1) # Use the class with highest probability.

test_correct = pred[data.test_mask] == data.y[data.test_mask] # Check against ground-truth labels.

test_acc = int(test_correct.sum()) / int(data.test_mask.sum()) # Derive ratio of correct predictions.

return test_acc

test_acc = test()

print(f'Test Accuracy: {test_acc:.4f}')

# 可视化由训练后的图神经网络生成的节点表征

model.eval()

out = model(data.x, data.edge_index)

visualize(out, color=data.y)


The printed output is:

```
Dataset: Cora():
======================
Number of graphs: 1
Number of features: 1433
Number of classes: 7
Data(
  x=[2708, 1433], edge_index=[2, 10556],
  y=[2708], train_mask=[2708],
  val_mask=[2708], test_mask=[2708]
)
======================
Number of nodes: 2708
Number of edges: 10556
Average node degree: 3.90
Number of training nodes: 140
Training node label rate: 0.05
Contains isolated nodes: False
Contains self-loops: False
Is undirected: True
SAGE(
  (conv1): SAGEConv(1433, 16)
  (conv2): SAGEConv(16, 7)
)
```
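The derived statistics follow directly from the raw counts. Because the graph is undirected and `edge_index` stores each citation in both directions, the 10556 entries correspond to 5278 undirected edges, and dividing the 10556 directed entries by the 2708 nodes gives the average degree. A quick check in plain Python, using only the numbers printed above:

```python
num_nodes, num_directed_edges, num_train = 2708, 10556, 140

undirected_edges = num_directed_edges // 2   # each citation is stored twice
avg_degree = num_directed_edges / num_nodes  # = 2 * undirected_edges / num_nodes
label_rate = num_train / num_nodes

print(f"Undirected edges: {undirected_edges}")        # 5278
print(f"Average node degree: {avg_degree:.2f}")       # 3.90
print(f"Training node label rate: {label_rate:.2f}")  # 0.05
```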


The visualization is shown above; the loss over the 200 training epochs can also be plotted as a line chart.


Source: andyguo.blog.csdn.net. Author: 山顶夕景. Copyright belongs to the original author; please contact the author before reposting.

Original link: andyguo.blog.csdn.net/article/details/120677580
