Configuring HDFS backup for TBase

1. Configure system environment variables

export JAVA_HOME=/opt/jdk1.8.0_40

export HADOOP_HOME=/opt/hadoop-3.3.0

export PATH=$PATH:$JAVA_HOME/bin

export PATH=$PATH:/opt/hadoop-3.3.0/bin/:/opt/hadoop-3.3.0/sbin

2. Configure Hadoop (as the hadoop user)

// This test uses a single-node, pseudo-distributed HDFS deployment as the backup target for TBase.

2.1 Edit the configuration files

core-site.xml

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://192.168.5.200:9000</value>

</property>

</configuration>

hdfs-site.xml

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

</configuration>

2.2 Start HDFS and create the backup directory

# Before starting, set up passwordless SSH (key-based login) for the hadoop user.

ssh db01 date

Thu Dec 16 16:54:22 CST 2021
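The check above only returns without a password prompt once key-based login is in place. A minimal sketch of one common way to set that up with OpenSSH, assuming the `hadoop` user and the host `db01` from the example. The function names `ensure_ssh_key` and `can_login_without_password` are hypothetical helpers, not part of Hadoop or TBase.

```shell
#!/bin/sh
# Sketch: passwordless SSH for the hadoop user (run as that user).
# Assumes the OpenSSH client tools are installed; host names are examples.

# Generate an RSA key pair with an empty passphrase if none exists yet.
ensure_ssh_key() {
    key="${1:-$HOME/.ssh/id_rsa}"
    mkdir -p "$(dirname "$key")"
    [ -f "$key" ] || ssh-keygen -q -t rsa -N '' -f "$key"
}

# Return 0 if host $1 accepts key-based login without prompting.
# BatchMode makes ssh fail instead of asking for a password.
can_login_without_password() {
    ssh -o BatchMode=yes -o ConnectTimeout=5 "$1" true
}

# Typical usage (left commented out so that sourcing this file changes nothing):
#   ensure_ssh_key
#   ssh-copy-id hadoop@db01     # asks for the password one last time
#   can_login_without_password db01 && echo "db01: passwordless SSH OK"
```

In a pseudo-distributed setup the key is copied to the same host, because `start-dfs.sh` logs in over SSH even when every daemon runs locally.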

# Format the NameNode (first-time setup only; formatting erases existing HDFS metadata) and start HDFS

hdfs namenode -format

start-dfs.sh

# Create the backup directory

hdfs dfs -mkdir /backup

hdfs dfs -chown tbase:tbase /backup

hdfs dfs -ls /

2.3 Verify the directory

[hadoop@db01 hadoop]$ hdfs dfs -ls /

Found 1 items

drwxr-xr-x - tbase tbase 0 2021-12-16 16:20 /backup
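To make this check scriptable, the owner and group can be read straight from the listing. A minimal sketch, assuming the column layout shown above (permissions, replication, owner, group, size, date, time, path) and paths without spaces. `ls_owner` is a hypothetical helper name, and the sample line is the one from the output above.

```shell
#!/bin/sh
# Print "owner:group path" for each entry line of `hdfs dfs -ls` output.
# Header lines such as "Found 1 items" have fewer than 8 fields and are skipped.
ls_owner() {
    awk 'NF >= 8 { print $3 ":" $4, $NF }'
}

# Demo on the listing shown above. On a live cluster the input would
# come from:  hdfs dfs -ls / | ls_owner
sample='drwxr-xr-x - tbase tbase 0 2021-12-16 16:20 /backup'
result=$(printf '%s\n' "Found 1 items" "$sample" | ls_owner)
echo "$result"
```

If the printed owner is not `tbase`, the `hdfs dfs -chown` step from 2.2 probably did not take effect, and the TBase backup job would likely be unable to write under /backup.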

3. Configure the Hadoop client on every TBase node

3.1 Environment variables

export JAVA_HOME=/opt/jdk1.8.0_40

export CLASSPATH=:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

export HADOOP_HOME=/opt/hadoop-3.3.0

export PATH=$PATH:$JAVA_HOME/bin

export PATH=$PATH:$HADOOP_HOME/bin/:$HADOOP_HOME/sbin

3.2 Point the Hadoop client at the JDK

hadoop-env.sh

export JAVA_HOME=/opt/jdk1.8.0_40

3.3 core-site.xml configuration

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://192.168.5.200:9000</value>

</property>

</configuration>

4. Restart the OSS service

su - tbase

cd install/tbase_oss/tools/op/

sh stop.sh

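sh start.sh

5. Configure backup in TBase

Operations Management -- Backup Management // Be patient; this can take a while.

6. View the configuration

7. View the backup tasks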

[root@db02 opt]# ps -ef|grep back

root 31 2 0 13:58 ? 00:00:00 [writeback]

tbase 13764 121141 0 16:48 pts/0 00:00:00 bash -c cd /data/tbase/user_1/tdata_00/test_1/data/dn001 && tar cf - * --exclude pg_wal --exclude pg_log --exclude pg_2pc --exclude postmaster.opts --exclude postmaster.pid --exclude backup_label | hadoop fs -put - hdfs://192.168.5.200:9000/backup/test_1/base_bkp_2021_12_16/dn001_192.168.5.201_11000/2021_12_16_16_45_24/base.tar

tbase 13767 13764 0 16:48 pts/0 00:00:00 tar cf - backup_label.old base current_logfiles global gtm_proxy.conf pg_2pc pgbouncer.ini pg_commit_ts pg_datapump pg_dynshmem pg_hba.conf pg_hba.conf.backup pg_ident.conf pg_log pg_logical pg_multixact pg_notify pg_replslot pg_serial pg_snapshots pg_stat pg_stat_tmp pg_subtrans pg_tblspc pg_twophase PG_VERSION pg_wal pg_xact pg_xlog_archive.conf pg_xlog_archive.sh pgxz_license postgresql.auto.conf postgresql.conf postgresql.conf.back postgresql.conf.backup postgresql.conf.user postgresql.conf.user.backup postmaster.opts postmaster.pid recovery.conf userlist.txt --exclude pg_wal --exclude pg_log --exclude pg_2pc --exclude postmaster.opts --exclude postmaster.pid --exclude backup_label

tbase 13768 13764 37 16:48 pts/0 00:00:27 /opt/jdk1.8.0_40/bin/java -Dproc_fs -Djava.net.preferIPv4Stack=true -Dyarn.log.dir=/opt/hadoop-3.3.0/logs -Dyarn.log.file=hadoop.log -Dyarn.home.dir=/opt/hadoop-3.3.0 -Dyarn.root.logger=INFO,console -Djava.library.path=/opt/hadoop-3.3.0/lib/native -Dhadoop.log.dir=/opt/hadoop-3.3.0/logs -Dhadoop.log.file=hadoop.log -Dhadoop.home.dir=/opt/hadoop-3.3.0 -Dhadoop.id.str=tbase -Dhadoop.root.logger=INFO,console -Dhadoop.policy.file=hadoop-policy.xml -Dhadoop.security.logger=INFO,NullAppender org.apache.hadoop.fs.FsShell -put - hdfs://192.168.5.200:9000/backup/test_1/base_bkp_2021_12_16/dn001_192.168.5.201_11000/2021_12_16_16_45_24/base.tar

[hadoop@db01 hadoop]$ ps -ef|grep back

root 31 2 0 13:58 ? 00:00:00 [writeback]

tbase 11718 4504 81 16:48 ? 00:00:20 /opt/jdk1.8.0_40/bin/java -Dproc_fs -Djava.net.preferIPv4Stack=true -Dyarn.log.dir=/opt/hadoop-3.3.0/logs -Dyarn.log.file=hadoop.log -Dyarn.home.dir=/opt/hadoop-3.3.0 -Dyarn.root.logger=INFO,console -Djava.library.path=/opt/hadoop-3.3.0/lib/native -Dhadoop.log.dir=/opt/hadoop-3.3.0/logs -Dhadoop.log.file=hadoop.log -Dhadoop.home.dir=/opt/hadoop-3.3.0 -Dhadoop.id.str=tbase -Dhadoop.root.logger=INFO,console -Dhadoop.policy.file=hadoop-policy.xml -Dhadoop.security.logger=INFO,NullAppender org.apache.hadoop.fs.FsShell -ls hdfs://192.168.5.200:9000/backup/test_1/xlog_bkp/gtm002/20211216/192.168.5.200_11002/

tbase 12494 4504 88 16:48 ? 00:00:16 /opt/jdk1.8.0_40/bin/java -Dproc_fs -Djava.net.preferIPv4Stack=true -Dyarn.log.dir=/opt/hadoop-3.3.0/logs -Dyarn.log.file=hadoop.log -Dyarn.home.dir=/opt/hadoop-3.3.0 -Dyarn.root.logger=INFO,console -Djava.library.path=/opt/hadoop-3.3.0/lib/native -Dhadoop.log.dir=/opt/hadoop-3.3.0/logs -Dhadoop.log.file=hadoop.log -Dhadoop.home.dir=/opt/hadoop-3.3.0 -Dhadoop.id.str=tbase -Dhadoop.root.logger=INFO,console -Dhadoop.policy.file=hadoop-policy.xml -Dhadoop.security.logger=INFO,NullAppender org.apache.hadoop.fs.FsShell -ls hdfs://192.168.5.200:9000/backup/test_1

tbase 12672 4504 87 16:48 pts/0 00:00:14 /opt/jdk1.8.0_40/bin/java -Dproc_fs -Djava.net.preferIPv4Stack=true -Dyarn.log.dir=/opt/hadoop-3.3.0/logs -Dyarn.log.file=hadoop.log -Dyarn.home.dir=/opt/hadoop-3.3.0 -Dyarn.root.logger=INFO,console -Djava.library.path=/opt/hadoop-3.3.0/lib/native -Dhadoop.log.dir=/opt/hadoop-3.3.0/logs -Dhadoop.log.file=hadoop.log -Dhadoop.home.dir=/opt/hadoop-3.3.0 -Dhadoop.id.str=tbase -Dhadoop.root.logger=INFO,console -Dhadoop.policy.file=hadoop-policy.xml -Dhadoop.security.logger=INFO,NullAppender org.apache.hadoop.fs.FsShell -rm -r hdfs://192.168.5.200:9000/backup/test_1/base_bkp_2021_12_16/gtm002_192.168.5.200_11002/2021_12_16_16_45_24

8. View the backup records

# Incremental backup records

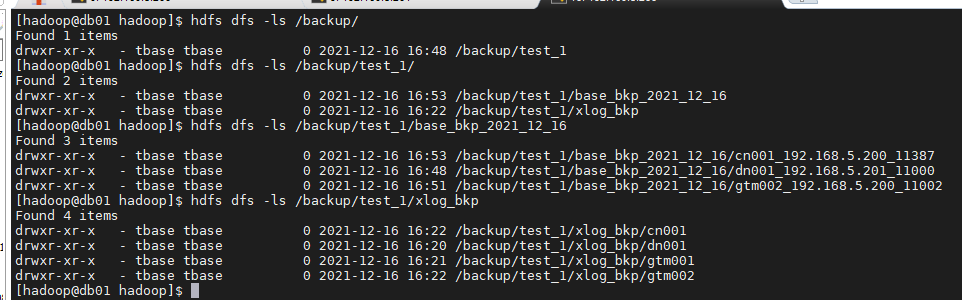

9. Check the backup files in HDFS

[hadoop@db01 hadoop]$ hdfs dfs -ls /backup/

Found 1 items

drwxr-xr-x - tbase tbase 0 2021-12-16 16:48 /backup/test_1

[hadoop@db01 hadoop]$ hdfs dfs -ls /backup/test_1/

Found 2 items

drwxr-xr-x - tbase tbase 0 2021-12-16 16:53 /backup/test_1/base_bkp_2021_12_16

drwxr-xr-x - tbase tbase 0 2021-12-16 16:22 /backup/test_1/xlog_bkp

[hadoop@db01 hadoop]$ hdfs dfs -ls /backup/test_1/base_bkp_2021_12_16

Found 3 items

drwxr-xr-x - tbase tbase 0 2021-12-16 16:53 /backup/test_1/base_bkp_2021_12_16/cn001_192.168.5.200_11387

drwxr-xr-x - tbase tbase 0 2021-12-16 16:48 /backup/test_1/base_bkp_2021_12_16/dn001_192.168.5.201_11000

drwxr-xr-x - tbase tbase 0 2021-12-16 16:51 /backup/test_1/base_bkp_2021_12_16/gtm002_192.168.5.200_11002

[hadoop@db01 hadoop]$ hdfs dfs -ls /backup/test_1/xlog_bkp

Found 4 items

drwxr-xr-x - tbase tbase 0 2021-12-16 16:22 /backup/test_1/xlog_bkp/cn001

drwxr-xr-x - tbase tbase 0 2021-12-16 16:20 /backup/test_1/xlog_bkp/dn001

drwxr-xr-x - tbase tbase 0 2021-12-16 16:21 /backup/test_1/xlog_bkp/gtm001

drwxr-xr-x - tbase tbase 0 2021-12-16 16:22 /backup/test_1/xlog_bkp/gtm002

[hadoop@db01 hadoop]$
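The base backups under these directories are plain tar streams written directly into HDFS: the process listing in step 7 shows `tar cf - ... | hadoop fs -put - .../base.tar`. The same streaming pattern can be run in reverse to inspect an archive without first copying it to local disk. The sketch below is an illustration under stated assumptions, not TBase's own tooling. `list_archive` is a hypothetical helper, and the demonstration uses a small local directory in place of a real data directory so that it runs without a cluster.

```shell
#!/bin/sh
# Sketch: list the members of a tar archive that arrives on stdin.
# On a live cluster the stream would come from HDFS, for example:
#   hadoop fs -cat hdfs://192.168.5.200:9000/backup/test_1/base_bkp_2021_12_16/dn001_192.168.5.201_11000/2021_12_16_16_45_24/base.tar | list_archive
list_archive() {
    tar tf - | sort
}

# Local demonstration of the same pipe shape, with no HDFS involved:
# build a tiny stand-in for a data directory and stream it through tar.
demo_dir=$(mktemp -d)
mkdir -p "$demo_dir/base" "$demo_dir/pg_wal"
echo 10   > "$demo_dir/PG_VERSION"
echo data > "$demo_dir/base/1"
echo wal  > "$demo_dir/pg_wal/000000010000000000000001"

# --exclude is given before the file list here; newer GNU tar releases
# treat it as positional, so it may not apply to names that precede it.
listing=$(cd "$demo_dir" && tar cf - --exclude pg_wal * | list_archive)
echo "$listing"
rm -rf "$demo_dir"
```

The listing should contain `PG_VERSION` and `base/1` but nothing under `pg_wal`, mirroring how the backup command leaves the WAL directory out of the base archive.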
