How Image Rotation Changes the Recognition Result

[Abstract]

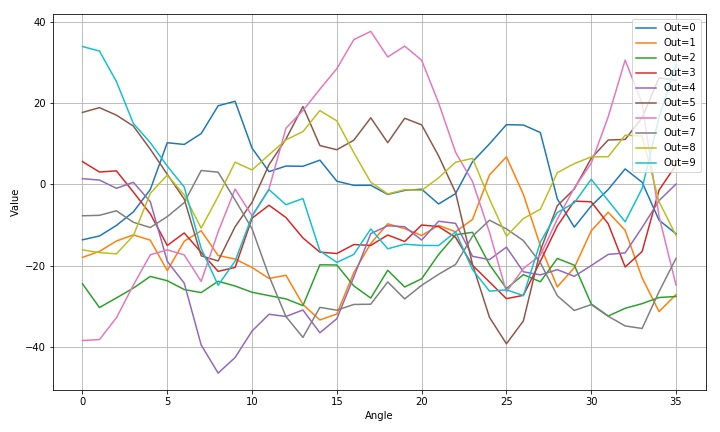

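Abstract: This post tests how the rotation angle of seven-segment digit images affects recognition. The digits 6 and 9 turn into each other as they rotate. 2 and 5 look the same as themselves after a 180° rotation, so each is recognized as the same digit at two angles. 0 and 8 differ considerably from the other digits. 1 and 7 show strong cross-confusion.

Keywords: seven-segment digits, LCD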

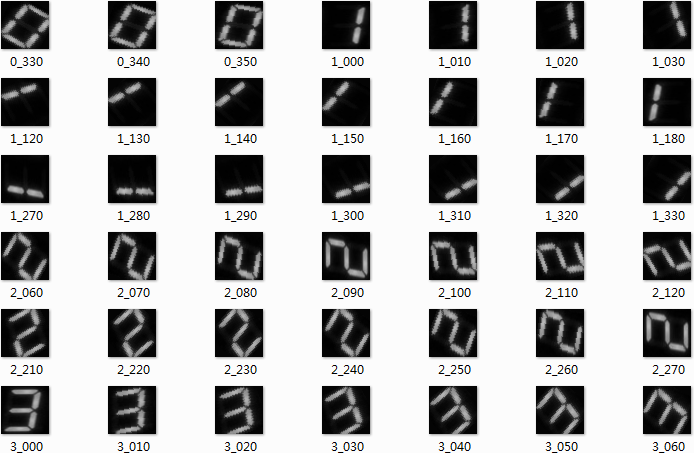

▲ Figure 1 Rotation patterns

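§01 Rotating the Images

An earlier post, "Scanning images with the recognition model", ran a scanning test on the seven-segment digit model: the model was swept across seven-segment display images, and its output fluctuated with position.

This post tests how the output responds when the digits are rotated.

1.1 Rotating the images

The digits in the LED image below are used for the rotation test.

▲ Figure 1.1.1 Image used for the rotation test

1.1.1 Cropping the digits and generating rotated images

(1) Cropping code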

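import os
import cv2
from numpy import mean, ones   # explicit imports standing in for the author's `from headm import *`
from paddle.vision.transforms import rotate

# Background photo, used only to estimate the average background gray level.
backgroundfile = '/home/aistudio/work/7seg/rotate/220112235650.BMP'
img = cv2.imread(backgroundfile)
print(img.shape)

# Average over rows, columns and channels to get a single scalar.
backcolor = mean(mean(mean(img, axis=0), axis=0))
print(backcolor)

imgfile1 = '/home/aistudio/work/7seg/rotate/052-01234.JPG'
imgfile2 = '/home/aistudio/work/7seg/rotate/053-56789.JPG'
cutdir = '/home/aistudio/work/7seg/rotate/cutdir'
outsize = 48
rotateangle = range(0, 360, 10)

def cutimg(imgfile, firstdigit):
    # firstdigit: label of the leftmost strip (0 for imgfile1, 5 for imgfile2).
    img = cv2.imread(imgfile)
    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    imgwidth = gray.shape[1]
    imgheight = gray.shape[0]

    for i in range(5):
        # Each photo holds five digits in equal-width strips.
        left = int(imgwidth * i // 5)
        right = int(imgwidth * (i + 1) // 5)
        data = gray[:, left:right]
        data = cv2.resize(data, (outsize, outsize))

        for r in rotateangle:
            # Paste the digit at the centre of a background-coloured canvas,
            # rotate the canvas, then crop the central 48x48 window again.
            bgdim = ones((512, 512)) * backcolor
            n1 = 256 - 24
            n2 = 256 + 24
            bgdim[n1:n2, n1:n2] = data
            rotdata = rotate(bgdim, r)
            data1 = rotdata[n1:n2, n1:n2]

            fn = os.path.join(cutdir, '%d_%03d.BMP'%(i + firstdigit, r))
            cv2.imwrite(fn, data1)

os.makedirs(cutdir, exist_ok=True)
cutimg(imgfile1, 0)
cutimg(imgfile2, 5)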
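Pasting the digit into a larger background-coloured canvas before rotating means the corners that swing into the 48x48 window come from the background rather than from a black fill. The sketch below illustrates the same paste, rotate, crop idea with plain NumPy and nearest-neighbour sampling. It is a stand-in for illustration, not the author's code: `rotate_nearest` and `rotate_digit` are hypothetical helpers, and the rotation direction (counter-clockwise, as in `np.rot90`) is a choice made here.

```python
import numpy as np

def rotate_nearest(img, angle_deg, fill):
    """Rotate a 2-D array counter-clockwise about its centre (nearest neighbour)."""
    n_rows, n_cols = img.shape
    cy, cx = (n_rows - 1) / 2.0, (n_cols - 1) / 2.0
    theta = np.deg2rad(angle_deg)
    cos_t, sin_t = np.cos(theta), np.sin(theta)

    # For every output pixel, find the source pixel it comes from.
    ys, xs = np.mgrid[0:n_rows, 0:n_cols]
    dx, dy = xs - cx, ys - cy
    src_x = np.rint(cos_t * dx - sin_t * dy + cx).astype(int)
    src_y = np.rint(sin_t * dx + cos_t * dy + cy).astype(int)

    # Pixels whose source falls outside the image keep the fill value.
    inside = (src_x >= 0) & (src_x < n_cols) & (src_y >= 0) & (src_y < n_rows)
    out = np.full(img.shape, fill, dtype=float)
    out[inside] = img[src_y[inside], src_x[inside]]
    return out

def rotate_digit(digit, angle_deg, background, canvas=512):
    """Paste a square digit at the canvas centre, rotate, and crop it back out."""
    half = digit.shape[0] // 2
    lo, hi = canvas // 2 - half, canvas // 2 + half
    big = np.full((canvas, canvas), background, dtype=float)
    big[lo:hi, lo:hi] = digit
    return rotate_nearest(big, angle_deg, background)[lo:hi, lo:hi]

if __name__ == "__main__":
    digit = np.arange(64, dtype=float).reshape(8, 8)
    turned = rotate_digit(digit, 90, background=0.0, canvas=64)
    print(np.array_equal(turned, np.rot90(digit)))
```

Because the digit sits symmetrically around the canvas centre, rotating the canvas is the same as rotating the digit about its own centre; the canvas only supplies background pixels for the corners.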


(2) Cropping and rotation results

▲ Figure 1.1.2 Results after rotation

import os
import cv2
import matplotlib.pyplot as plt   # explicit imports standing in for the author's `from headm import *`

cutdir = '/home/aistudio/work/7seg/rotate/cutdir'
rotateangle = range(0, 360, 10)
gifpath = '/home/aistudio/GIF'

# Create the frame directory, or empty it if it already exists.
if not os.path.isdir(gifpath):
    os.makedirs(gifpath)

for f in os.listdir(gifpath):
    fn = os.path.join(gifpath, f)
    if os.path.isfile(fn):
        os.remove(fn)

# One frame per angle: a 2x5 grid showing digits 0-9 at that angle.
count = 0
for r in rotateangle:
    plt.figure(figsize=(10, 4))

    for i in range(2):
        for j in range(5):
            n = i*5 + j
            fn = os.path.join(cutdir, '%d_%03d.BMP'%(n, r))
            img = cv2.imread(fn)
            plt.subplot(2, 5, n + 1)
            plt.imshow(img)

    savefile = os.path.join(gifpath, '%03d.jpg'%count)
    count += 1
    plt.savefig(savefile)
    plt.close()   # release the figure so 36 figures do not stay open


▲ Figure: Digit rotation

1.2 Model recognition results

1.2.1 Network inference code

import sys, os, math, time
sys.path.append("/home/aistudio/external-libraries")
import matplotlib.pyplot as plt
from numpy import *
import paddle
import cv2

imgwidth = 48
imgheight = 48
inputchannel = 1
kernelsize = 5
targetsize = 10

# Feature-map side length after two (5x5 convolution, 2x2 max-pool) stages.
ftwidth = ((imgwidth-kernelsize+1)//2-kernelsize+1)//2
ftheight = ((imgheight-kernelsize+1)//2-kernelsize+1)//2

class lenet(paddle.nn.Layer):
    def __init__(self):
        super(lenet, self).__init__()
        self.conv1 = paddle.nn.Conv2D(in_channels=inputchannel, out_channels=6, kernel_size=kernelsize, stride=1, padding=0)
        self.conv2 = paddle.nn.Conv2D(in_channels=6, out_channels=16, kernel_size=kernelsize, stride=1, padding=0)
        self.mp1 = paddle.nn.MaxPool2D(kernel_size=2, stride=2)
        self.mp2 = paddle.nn.MaxPool2D(kernel_size=2, stride=2)
        self.L1 = paddle.nn.Linear(in_features=ftwidth*ftheight*16, out_features=120)
        self.L2 = paddle.nn.Linear(in_features=120, out_features=86)
        self.L3 = paddle.nn.Linear(in_features=86, out_features=targetsize)

    def forward(self, x):
        x = self.conv1(x)
        x = paddle.nn.functional.relu(x)
        x = self.mp1(x)
        x = self.conv2(x)
        x = paddle.nn.functional.relu(x)
        x = self.mp2(x)
        x = paddle.flatten(x, start_axis=1, stop_axis=-1)
        x = self.L1(x)
        x = paddle.nn.functional.relu(x)
        x = self.L2(x)
        x = paddle.nn.functional.relu(x)
        x = self.L3(x)
        return x

# Load the previously trained weights.
model = lenet()
model.set_state_dict(paddle.load('/home/aistudio/work/seg7model4_1_all.pdparams'))
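The `ftwidth` and `ftheight` expressions encode how the 48x48 input shrinks through the two convolution and pooling stages. As a worked check, the sketch below recomputes that size step by step using the standard output-size formulas for unpadded, stride-1 convolution and 2x2 pooling. The helper names are hypothetical and exist only for this illustration.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output side length of a convolution along one dimension."""
    return (size + 2 * padding - kernel) // stride + 1

def pool_out(size, kernel=2, stride=2):
    """Output side length of a max-pool along one dimension."""
    return (size - kernel) // stride + 1

def lenet_feature_side(input_size=48, kernel=5):
    """Side length of the feature map fed to the first Linear layer."""
    side = pool_out(conv_out(input_size, kernel))   # 48 -> 44 -> 22
    side = pool_out(conv_out(side, kernel))         # 22 -> 18 -> 9
    return side

if __name__ == "__main__":
    side = lenet_feature_side()
    print(side, side * side * 16)   # 9 1296
```

With 16 output channels in the second convolution, the flattened vector has 9 * 9 * 16 = 1296 entries, which is the `in_features` value that `ftwidth*ftheight*16` evaluates to.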


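cutdir = '/home/aistudio/work/7seg/rotate/cutdir'
rotateangle = range(0, 360, 10)

def modelpre(n, degree):
    # Load digit n rotated by `degree` degrees and return the 10 raw network outputs.
    fn = os.path.join(cutdir, '%d_%03d.BMP'%(n, degree))
    img = cv2.imread(fn)
    data = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

    # Normalize to zero mean and unit standard deviation.
    data = data - mean(data)
    stdd = std(data)
    data = data/stdd

    inputs = data[newaxis, :, :]   # add the channel axis: (1, 48, 48)
    preid = model(paddle.to_tensor([inputs], dtype='float32'))   # batch of one image
    return preid.numpy()

# Collect the outputs for digit 0 at every rotation angle.
outdim = []
for r in rotateangle:
    out = modelpre(0, r)
    outdim.append(out[0])

print(outdim)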
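`modelpre` standardizes each image to zero mean and unit standard deviation, then adds a channel axis and a batch axis before calling the network. The sketch below isolates that preprocessing with NumPy only. `to_net_input` is a hypothetical helper, and the guard for a perfectly flat image (zero standard deviation) is an addition made here, not something the original code does.

```python
import numpy as np

def to_net_input(gray):
    """Standardize a 2-D grayscale image and shape it as (batch, channel, H, W)."""
    data = gray.astype(np.float64)
    data = data - data.mean()
    std = data.std()
    if std > 0:
        # A flat image has zero std; in that case leave it as all zeros.
        data = data / std
    return data[np.newaxis, np.newaxis, :, :].astype(np.float32)

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    image = rng.integers(0, 256, size=(48, 48)).astype(np.uint8)
    x = to_net_input(image)
    print(x.shape, float(x.mean()), float(x.std()))
```

The resulting array has shape (1, 1, 48, 48), which matches the single-channel 48x48 input the network's first convolution expects.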


1.2.2 Plotting the results

plt.clf()
plt.figure(figsize=(10,6))

# One curve per network output; the x axis is the sample index, one sample every 10 degrees.
for i in range(10):
    plt.plot([a[i] for a in outdim], label='Out=%d'%i)

plt.xlabel("Angle")
plt.ylabel("Value")
plt.grid(True)
plt.tight_layout()
plt.legend(loc='upper right')
plt.savefig('/home/aistudio/stdout.jpg')
plt.show()
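The curves show all ten raw outputs; the recognized digit at each angle is the index of the largest one. As a sketch of how the plots can be reduced to "which digit wins over which angle range", the code below takes the argmax per angle and groups consecutive angles with the same winner. The scores in the demo are made up to imitate a 6 that reads as 9 when upside down; they are not the measurements from this post, and both helper names are hypothetical.

```python
import numpy as np

def predicted_digits(outputs):
    """Index of the largest output for each angle."""
    return np.argmax(np.asarray(outputs), axis=1)

def angle_runs(angles, digits):
    """Group consecutive angles with the same prediction into (first, last, digit)."""
    runs = []
    start = 0
    for k in range(1, len(digits) + 1):
        if k == len(digits) or digits[k] != digits[start]:
            runs.append((angles[start], angles[k - 1], int(digits[start])))
            start = k
    return runs

if __name__ == "__main__":
    angles = list(range(0, 360, 10))
    # Synthetic scores for illustration only: output 9 wins between 90 and 260 degrees.
    fake = np.zeros((len(angles), 10))
    for k, a in enumerate(angles):
        fake[k, 9 if 90 <= a < 270 else 6] = 1.0
    print(angle_runs(angles, predicted_digits(fake)))
```

Applied to the real `outdim` list, the same two calls would summarize each figure as a short list of angle ranges.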


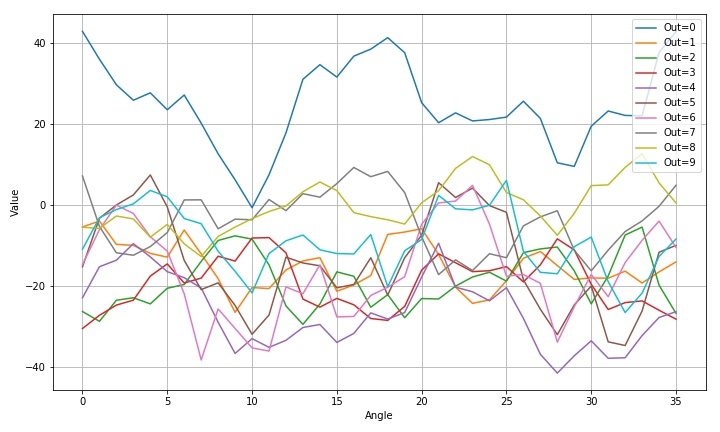

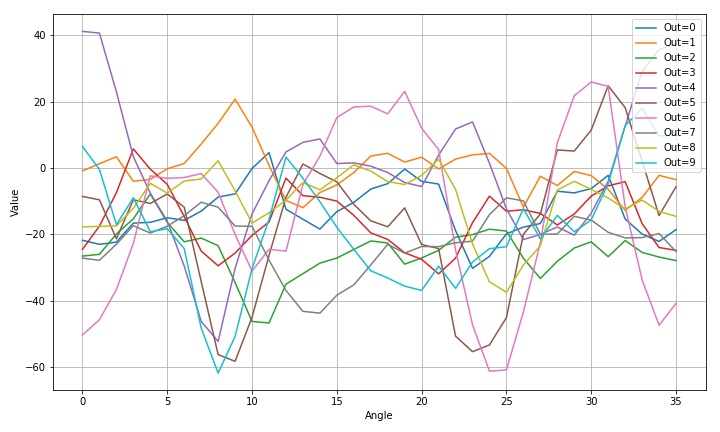

▲ 图 数字0旋转

▲ 图1.2.1 数字0旋转输出结果

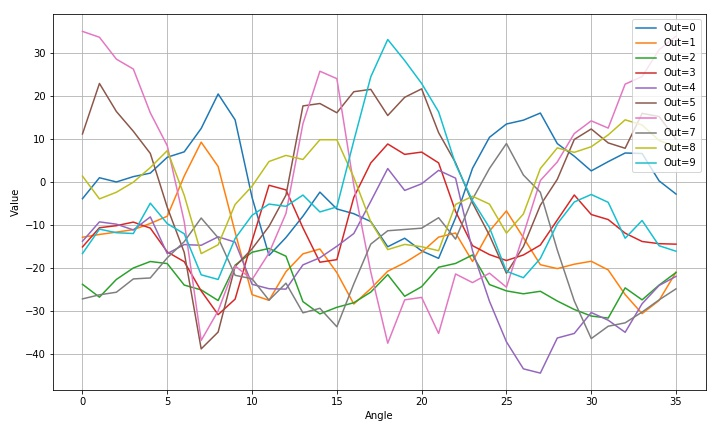

▲ 图 数字1旋转

▲ 图1.2.2 数字1旋转识别结果

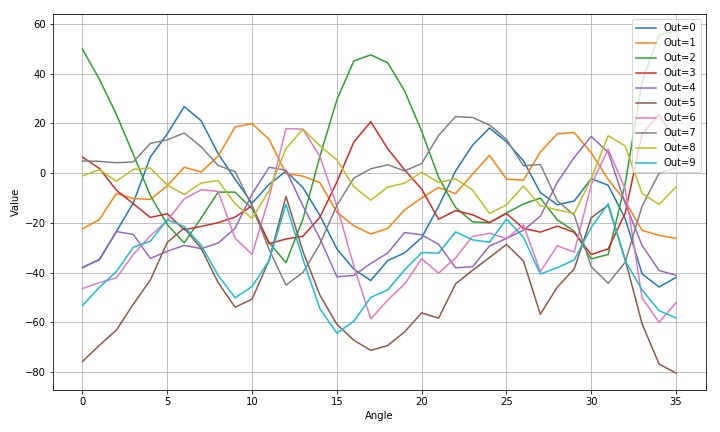

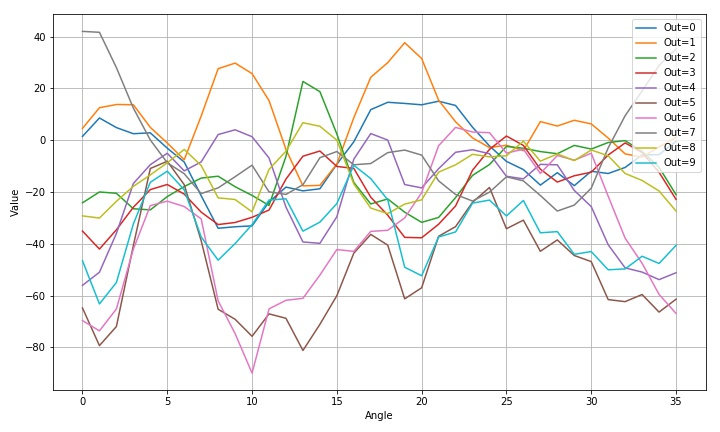

▲ 图 数字2旋转

▲ 图1.2.3 数字2旋转识别结果

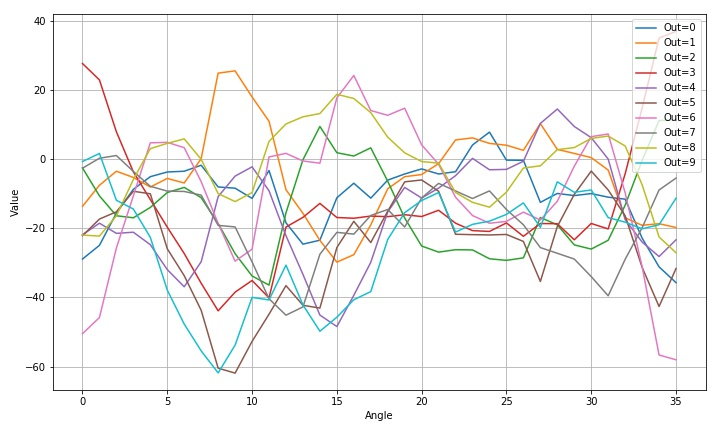

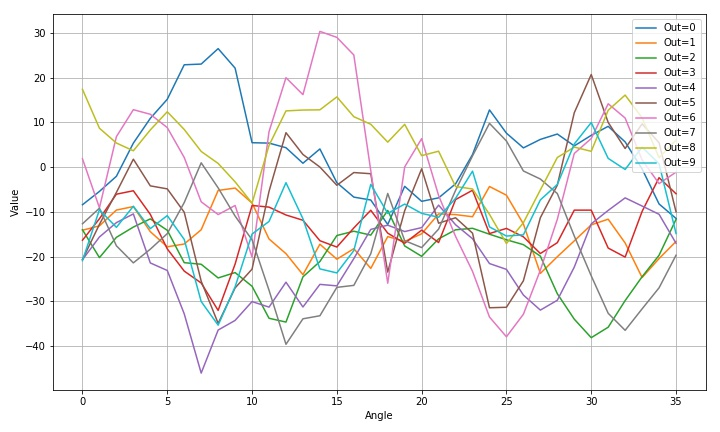

▲ 图 数字3旋转

▲ 图1.2.4 数字3旋转识别结果

▲ 图 数字4旋转

▲ 图1.2.6 数字4旋转识别结果

▲ 图 数字5旋转

▲ 图1.2.7 数字5旋转识别结果

▲ 图 数字6旋转

▲ 图1.2.8 数字6旋转识别结果

▲ 图 数字7旋转

▲ 图1.2.10 数字7旋转识别结果

▲ 图 数字8旋转

▲ 图1.2.12 数字8旋转识别结果

▲ 图 数字9旋转

▲ 图1.2.13 数字9旋转识别结果

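※ Rotation Summary ※

This post tested how the rotation angle of seven-segment digit images affects recognition. The digits 6 and 9 turn into each other as they rotate. 2 and 5 look the same as themselves after a 180° rotation, so each is recognized as the same digit at two angles. 0 and 8 differ considerably from the other digits. 1 and 7 show strong cross-confusion.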
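The 6/9 swap and the self-symmetry of 2 and 5 follow from the segment geometry alone. The sketch below is not part of the original experiment. It assumes the conventional a-g segment naming (a top, b upper right, c lower right, d bottom, e lower left, f upper left, g middle) and a common encoding in which 6 is `acdefg`, 9 is `abcdfg` and 7 is `abc`; the display photographed in this post may draw some digits differently. A 180° rotation swaps a with d, b with e, and c with f, and leaves g in place.

```python
# Segment sets for the ten digits (assumed encoding, not taken from the post).
DIGITS = {
    0: "abcdef", 1: "bc", 2: "abdeg", 3: "abcdg", 4: "bcfg",
    5: "acdfg", 6: "acdefg", 7: "abc", 8: "abcdefg", 9: "abcdfg",
}

# A 180-degree rotation sends each segment to the diametrically opposite one.
ROT180 = {"a": "d", "d": "a", "b": "e", "e": "b", "c": "f", "f": "c", "g": "g"}

def rotate180(digit):
    """Return the digit that `digit` becomes upside down, or None if it is not a digit."""
    rotated = frozenset(ROT180[s] for s in DIGITS[digit])
    for other, segments in DIGITS.items():
        if frozenset(segments) == rotated:
            return other
    return None

if __name__ == "__main__":
    for d in range(10):
        print(d, "->", rotate180(d))
```

Under these assumptions 0, 2, 5 and 8 map to themselves and 6 and 9 map to each other, while 1, 3, 4 and 7 have no exact counterpart. An upside-down 1 is the same shape moved to the left column, which this strict set comparison does not count as a match.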


Recognizing digits with the model

▲ Figure 2.1 Recognizing digits

import os
import matplotlib.pyplot as plt
from numpy import *   # explicit imports standing in for the author's `from headm import *`
import paddle
import cv2

imgwidth = 48
imgheight = 48
inputchannel = 1
kernelsize = 5
targetsize = 10

# Feature-map side length after two (5x5 convolution, 2x2 max-pool) stages.
ftwidth = ((imgwidth-kernelsize+1)//2-kernelsize+1)//2
ftheight = ((imgheight-kernelsize+1)//2-kernelsize+1)//2

class lenet(paddle.nn.Layer):
    def __init__(self):
        super(lenet, self).__init__()
        self.conv1 = paddle.nn.Conv2D(in_channels=inputchannel, out_channels=6, kernel_size=kernelsize, stride=1, padding=0)
        self.conv2 = paddle.nn.Conv2D(in_channels=6, out_channels=16, kernel_size=kernelsize, stride=1, padding=0)
        self.mp1 = paddle.nn.MaxPool2D(kernel_size=2, stride=2)
        self.mp2 = paddle.nn.MaxPool2D(kernel_size=2, stride=2)
        self.L1 = paddle.nn.Linear(in_features=ftwidth*ftheight*16, out_features=120)
        self.L2 = paddle.nn.Linear(in_features=120, out_features=86)
        self.L3 = paddle.nn.Linear(in_features=86, out_features=targetsize)

    def forward(self, x):
        x = self.conv1(x)
        x = paddle.nn.functional.relu(x)
        x = self.mp1(x)
        x = self.conv2(x)
        x = paddle.nn.functional.relu(x)
        x = self.mp2(x)
        x = paddle.flatten(x, start_axis=1, stop_axis=-1)
        x = self.L1(x)
        x = paddle.nn.functional.relu(x)
        x = self.L2(x)
        x = paddle.nn.functional.relu(x)
        x = self.L3(x)
        return x

# Load the previously trained weights.
model = lenet()
model.set_state_dict(paddle.load('/home/aistudio/work/seg7model4_1_all.pdparams'))

cutdir = '/home/aistudio/work/7seg/rotate/cutdir'
rotateangle = range(0, 360, 10)

def modelpre(n, degree):
    # Load digit n rotated by `degree` degrees and return the 10 raw network outputs.
    fn = os.path.join(cutdir, '%d_%03d.BMP'%(n, degree))
    img = cv2.imread(fn)
    data = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

    # Normalize to zero mean and unit standard deviation.
    data = data - mean(data)
    stdd = std(data)
    data = data/stdd

    inputs = data[newaxis, :, :]   # add the channel axis: (1, 48, 48)
    preid = model(paddle.to_tensor([inputs], dtype='float32'))   # batch of one image
    return preid.numpy()

# Collect the outputs for digit 9 at every rotation angle.
outdim = []
for r in rotateangle:
    out = modelpre(9, r)
    outdim.append(out[0])

plt.clf()
plt.figure(figsize=(10,6))

# One curve per network output; the x axis is the sample index, one sample every 10 degrees.
for i in range(10):
    plt.plot([a[i] for a in outdim], label='Out=%d'%i)

plt.xlabel("Angle")
plt.ylabel("Value")
plt.grid(True)
plt.tight_layout()
plt.legend(loc='upper right')
plt.savefig('/home/aistudio/stdout.jpg')
plt.show()

imgfile = '/home/aistudio/work/7seg/rotate/220113000554.JPG'
OUT_SIZE = 48

def pic2netinput(imgfile):
    # Split a photo of a digit row into equal-width strips and build a normalized input batch.
    img = cv2.imread(imgfile)
    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

    imgwidth = gray.shape[1]
    imgheight = gray.shape[0]

    numstr = '1234'   # labels for the strips, left to right
    numnum = len(numstr)

    imgarray = []
    labeldim = []

    for i in range(numnum):
        left = imgwidth * i // numnum
        right = imgwidth * (i + 1) // numnum

        data = gray[0:imgheight, left:right]
        dataout = cv2.resize(data, (OUT_SIZE, OUT_SIZE))

        # Normalize to zero mean and unit standard deviation.
        dataout = dataout - mean(dataout)
        stdd = std(dataout)
        dataout = dataout / stdd

        if numstr[i].isdigit():
            imgarray.append(dataout[newaxis,:,:])
            labeldim.append(int(numstr[i]))

    test_i = paddle.to_tensor(imgarray, dtype='float32')
    test_label = array(labeldim)
    test_l = paddle.to_tensor(test_label[:, newaxis], dtype='int64')

    return test_i, test_l

def checkimglabel(imgfile):
    # Run the model on every strip and compare the predictions with the labels.
    inp, label = pic2netinput(imgfile)
    preout = model(inp)
    preid = paddle.argmax(preout, axis=1).numpy().flatten()
    label = label.numpy().flatten()
    error = where(label != preid)[0]   # indices of misrecognized digits
    print('preid:', preid, 'label:', label)

checkimglabel(imgfile)
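Both `cutimg` and `pic2netinput` slice the photo into equal-width strips using integer division, and `checkimglabel` then compares predictions with labels. As a small illustration of those two steps without any image or model, the sketch below computes the strip boundaries for a given width and lists the positions where predictions and labels disagree. `strip_bounds` and `mismatches` are hypothetical helper names, and the widths and predictions in the demo are arbitrary.

```python
def strip_bounds(width, count):
    """(left, right) column ranges that split `width` pixels into `count` strips."""
    return [(width * i // count, width * (i + 1) // count) for i in range(count)]

def mismatches(predicted, labels):
    """Positions where the predicted digit differs from the label."""
    return [k for k, (p, t) in enumerate(zip(predicted, labels)) if p != t]

if __name__ == "__main__":
    # A width that is not a multiple of the strip count still tiles without gaps.
    print(strip_bounds(103, 4))
    # Arbitrary example: the third digit is misread.
    print(mismatches([1, 2, 8, 4], [1, 2, 3, 4]))
```

Computing each boundary as `width * i // count` keeps the strips contiguous even when the width is not divisible by the number of digits, so no column is dropped or counted twice.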


Source: zhuoqing.blog.csdn.net. Author: 卓晴. Copyright belongs to the original author; please contact the author before reposting.

Original link: zhuoqing.blog.csdn.net/article/details/122462749
