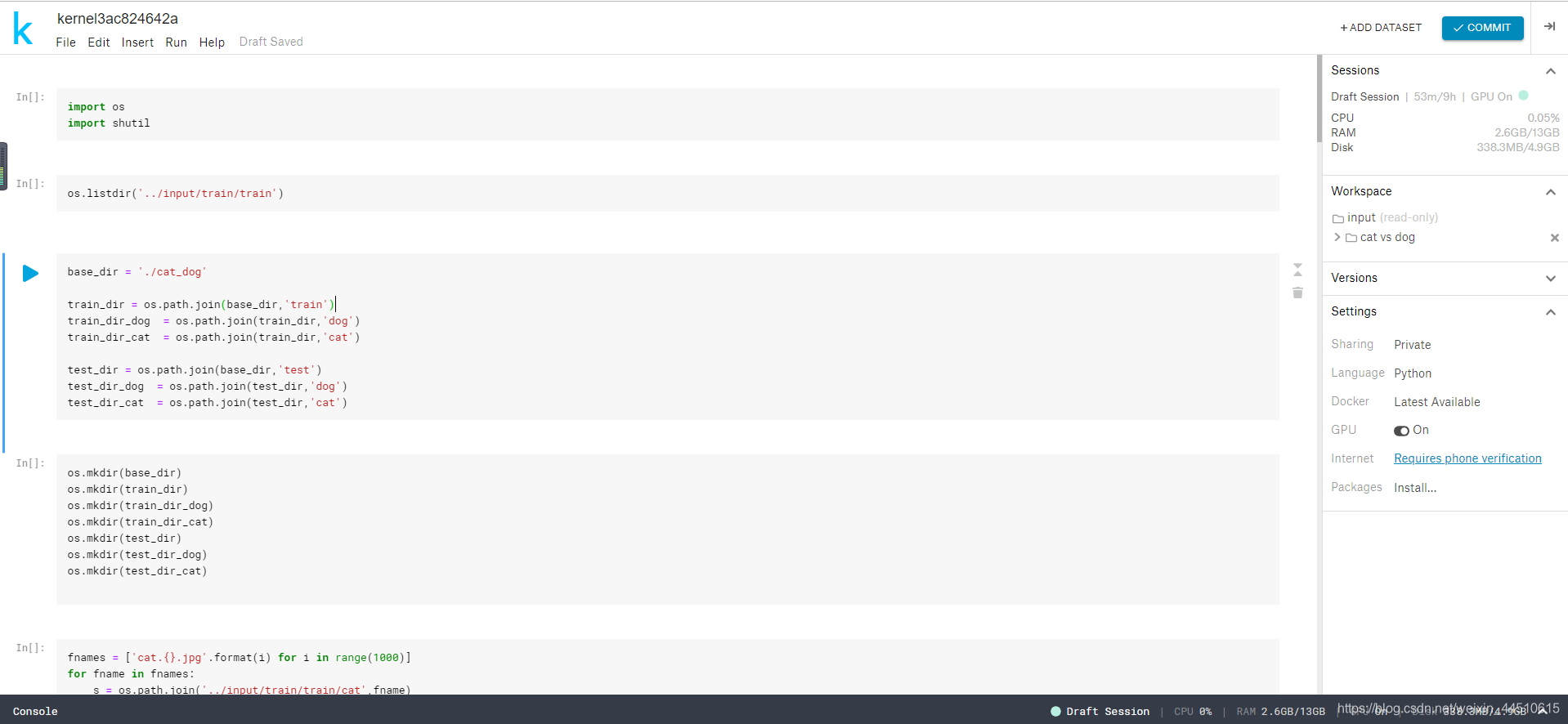

Kaggle (Part 1): Training on the Cats vs. Dogs Dataset

[Abstract] Notes from my first time training a model on the cats vs. dogs dataset on Kaggle.


```python
import os
import shutil

os.listdir('../input/train/train')
```

```python
# Target layout: ./cat_dog/{train,test}/{dog,cat}
base_dir = './cat_dog'
train_dir = os.path.join(base_dir, 'train')
train_dir_dog = os.path.join(train_dir, 'dog')
train_dir_cat = os.path.join(train_dir, 'cat')
test_dir = os.path.join(base_dir, 'test')
test_dir_dog = os.path.join(test_dir, 'dog')
test_dir_cat = os.path.join(test_dir, 'cat')

# Note: os.mkdir raises FileExistsError if this cell is run a second time
os.mkdir(base_dir)
os.mkdir(train_dir)
os.mkdir(train_dir_dog)
os.mkdir(train_dir_cat)
os.mkdir(test_dir)
os.mkdir(test_dir_dog)
os.mkdir(test_dir_cat)
```

```python
# Cats 0-999 -> training set
fnames = ['cat.{}.jpg'.format(i) for i in range(1000)]
for fname in fnames:
    s = os.path.join('../input/train/train/cat', fname)
    d = os.path.join(train_dir_cat, fname)
    shutil.copyfile(s, d)

# Cats 1000-1499 -> test set
fnames = ['cat.{}.jpg'.format(i) for i in range(1000, 1500)]
for fname in fnames:
    s = os.path.join('../input/train/train/cat', fname)
    d = os.path.join(test_dir_cat, fname)
    shutil.copyfile(s, d)

# Dogs 0-999 -> training set
fnames = ['dog.{}.jpg'.format(i) for i in range(1000)]
for fname in fnames:
    s = os.path.join('../input/train/train/dog', fname)
    d = os.path.join(train_dir_dog, fname)
    shutil.copyfile(s, d)

# Dogs 1000-1499 -> test set
fnames = ['dog.{}.jpg'.format(i) for i in range(1000, 1500)]
for fname in fnames:
    s = os.path.join('../input/train/train/dog', fname)
    d = os.path.join(test_dir_dog, fname)
    shutil.copyfile(s, d)
```
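The four copy loops differ only in the class name and the index range. The sketch below factors them into three hypothetical helpers. It assumes the same `../input/train/train/{cat,dog}` layout and the same split as the notebook: images 0-999 for training and 1000-1499 for testing.

- `plan_copies` and `build_split` only compute the (source, destination) pairs, so the split can be checked without touching the disk.
- `copy_pairs` performs the copies. It creates missing target folders with `os.makedirs(..., exist_ok=True)`, which also keeps a re-run from failing with `FileExistsError`.

```python
import os
import shutil

SRC_ROOT = '../input/train/train'  # Kaggle input folder used in this notebook
# (destination root, first index, stop index): same split as the loops above
SPLITS = [('./cat_dog/train', 0, 1000), ('./cat_dog/test', 1000, 1500)]


def plan_copies(src_root, dst_root, label, start, stop):
    """Hypothetical helper: (source, destination) pairs for label.start .. label.(stop-1)."""
    pairs = []
    for i in range(start, stop):
        fname = '{}.{}.jpg'.format(label, i)
        pairs.append((os.path.join(src_root, label, fname),
                      os.path.join(dst_root, label, fname)))
    return pairs


def build_split(src_root, splits, labels=('cat', 'dog')):
    """Hypothetical helper: collect copy pairs for every split and every class."""
    pairs = []
    for dst_root, start, stop in splits:
        for label in labels:
            pairs.extend(plan_copies(src_root, dst_root, label, start, stop))
    return pairs


def copy_pairs(pairs):
    """Copy each pair, creating destination folders when they are missing."""
    for src, dst in pairs:
        os.makedirs(os.path.dirname(dst), exist_ok=True)  # safe on re-runs
        shutil.copyfile(src, dst)
```

On Kaggle the whole split then becomes `copy_pairs(build_split(SRC_ROOT, SPLITS))`. Keeping the planning step free of side effects makes it easy to check that the train and test sets do not share any file before copying 3,000 images.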

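Preparing the images for the network involves these steps:

- Read the image files
- Decode the images
- Preprocess the images
- Normalize the pixel values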
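For the normalization step, `ImageDataGenerator(rescale=1/255)` multiplies every pixel value by the rescale factor. This maps 8-bit intensities in [0, 255] to [0, 1].

The pure-Python sketch below shows only that arithmetic. `rescale_pixels` is a hypothetical helper; the real generator applies the factor to whole NumPy image arrays.

```python
def rescale_pixels(pixels, scale=1 / 255):
    """Hypothetical helper: multiply each 8-bit channel value by `scale`."""
    return [p * scale for p in pixels]


# Channel values of one RGB pixel: 0 (dark), 51, 255 (full intensity)
print(rescale_pixels([0, 51, 255]))
```

Keeping inputs in a small range like [0, 1] is the usual reason for this step. Raw 0 to 255 values tend to make gradient-based training less stable.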

```python
import keras
from keras import layers
from keras.preprocessing.image import ImageDataGenerator

# Scale pixel values from [0, 255] to [0, 1]
train_datagen = ImageDataGenerator(rescale=1/255)
test_datagen = ImageDataGenerator(rescale=1/255)

train_generator = train_datagen.flow_from_directory(
    train_dir,
    target_size=(200, 200),  # resize every image to 200x200
    batch_size=20,
    class_mode='binary')     # one 0/1 label per image
```

```
Found 2000 images belonging to 2 classes.
```

```python
test_generator = test_datagen.flow_from_directory(
    test_dir,
    target_size=(200, 200),
    batch_size=20,
    class_mode='binary')
```

```
Found 1000 images belonging to 2 classes.
```
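`flow_from_directory` treats each subdirectory as one class and assigns label indices in alphanumeric order of the folder names. With `cat/` and `dog/`, cats get label 0 and dogs get label 1. The generator exposes this mapping as `train_generator.class_indices`.

The sketch below mimics that bookkeeping on an in-memory folder tree. `infer_classes` and the dict-based tree are hypothetical. The sketch also skips the file-extension filtering that the real method applies.

```python
def infer_classes(tree):
    """Hypothetical helper mimicking flow_from_directory's label assignment.

    `tree` maps subdirectory name -> list of file names.
    Returns (class_indices, number_of_images).
    """
    class_indices = {name: i for i, name in enumerate(sorted(tree))}
    n_images = sum(len(files) for files in tree.values())
    return class_indices, n_images


train_tree = {
    'dog': ['dog.{}.jpg'.format(i) for i in range(1000)],
    'cat': ['cat.{}.jpg'.format(i) for i in range(1000)],
}
indices, n = infer_classes(train_tree)
print('Found {} images belonging to {} classes.'.format(n, len(indices)))
# Found 2000 images belonging to 2 classes.
print(indices)  # {'cat': 0, 'dog': 1}
```

So the single sigmoid output of the model below can be read as the predicted probability that an image shows a dog.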

```python
model = keras.Sequential()

# Block 1: two 3x3 convolutions, 2x2 max pooling, dropout
model.add(layers.Conv2D(64, (3, 3), activation='relu', input_shape=(200, 200, 3)))
model.add(layers.Conv2D(64, (3, 3), activation='relu'))
model.add(layers.MaxPool2D())
model.add(layers.Dropout(0.25))

# Block 2 (input_shape is only needed on the first layer of the model)
model.add(layers.Conv2D(64, (3, 3), activation='relu'))
model.add(layers.Conv2D(64, (3, 3), activation='relu'))
model.add(layers.MaxPool2D())
model.add(layers.Dropout(0.25))

# Block 3
model.add(layers.Conv2D(64, (3, 3), activation='relu'))
model.add(layers.Conv2D(64, (3, 3), activation='relu'))
model.add(layers.MaxPool2D())
model.add(layers.Dropout(0.25))

# Classifier head: a single sigmoid unit for the binary cat/dog decision
model.add(layers.Flatten())
model.add(layers.Dense(236, activation='relu'))
model.add(layers.Dense(1, activation='sigmoid'))
```

```python
model.summary()
```

```
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
conv2d_25 (Conv2D)           (None, 198, 198, 64)      1792
_________________________________________________________________
conv2d_26 (Conv2D)           (None, 196, 196, 64)      36928
_________________________________________________________________
max_pooling2d_13 (MaxPooling (None, 98, 98, 64)        0
_________________________________________________________________
dropout_13 (Dropout)         (None, 98, 98, 64)        0
_________________________________________________________________
conv2d_27 (Conv2D)           (None, 96, 96, 64)        36928
_________________________________________________________________
conv2d_28 (Conv2D)           (None, 94, 94, 64)        36928
_________________________________________________________________
max_pooling2d_14 (MaxPooling (None, 47, 47, 64)        0
_________________________________________________________________
dropout_14 (Dropout)         (None, 47, 47, 64)        0
_________________________________________________________________
conv2d_29 (Conv2D)           (None, 45, 45, 64)        36928
_________________________________________________________________
conv2d_30 (Conv2D)           (None, 43, 43, 64)        36928
_________________________________________________________________
max_pooling2d_15 (MaxPooling (None, 21, 21, 64)        0
_________________________________________________________________
dropout_15 (Dropout)         (None, 21, 21, 64)        0
_________________________________________________________________
flatten_5 (Flatten)          (None, 28224)             0
_________________________________________________________________
dense_9 (Dense)              (None, 236)               6661100
_________________________________________________________________
dense_10 (Dense)             (None, 1)                 237
=================================================================
Total params: 6,847,769
Trainable params: 6,847,769
Non-trainable params: 0
_________________________________________________________________
```
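The shapes and parameter counts in the summary follow from simple arithmetic:

- A 3x3 "valid" convolution with stride 1 shrinks each spatial side by 2. It has 3·3·C_in·64 weights plus 64 biases.
- 2x2 max pooling halves each side, rounding down, which is why 43 becomes 21.
- Dropout and Flatten have no parameters.
- A Dense layer has n_in·units + units parameters.

The pure-Python sketch below recomputes the totals. The helper names are hypothetical.

```python
def conv2d(shape, filters, k=3):
    """'Valid' k x k convolution, stride 1: returns (output shape, parameter count)."""
    h, w, c = shape
    params = k * k * c * filters + filters  # weights + one bias per filter
    return (h - k + 1, w - k + 1, filters), params


def max_pool(shape, pool=2):
    """2x2 max pooling with stride 2; odd sizes round down. No parameters."""
    h, w, c = shape
    return (h // pool, w // pool, c)


def dense_params(n_in, units):
    """Fully connected layer: weight matrix plus one bias per unit."""
    return n_in * units + units


shape = (200, 200, 3)
total = 0
for _ in range(3):              # three Conv-Conv-Pool-Dropout blocks
    for _ in range(2):
        shape, params = conv2d(shape, 64)
        total += params
    shape = max_pool(shape)     # Dropout changes neither shape nor parameters
flat = shape[0] * shape[1] * shape[2]
total += dense_params(flat, 236) + dense_params(236, 1)

print(shape, flat, total)       # (21, 21, 64) 28224 6847769
```

The arithmetic also shows where the capacity sits. The Dense(236) layer after Flatten holds 6,661,100 of the 6,847,769 parameters (about 97%), which is a lot of capacity for 2,000 training images.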


```python
# Adam with a small learning rate (newer Keras versions call this argument learning_rate)
model.compile(optimizer=keras.optimizers.Adam(lr=0.0001),
              loss='binary_crossentropy',
              metrics=['acc'])
```
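`binary_crossentropy` compares the sigmoid output p, the predicted probability of class 1, with the 0/1 label y:

loss = -(y·ln p + (1 - y)·ln(1 - p)), averaged over the batch.

The pure-Python sketch below implements that formula. The helper and its `eps` clipping value are illustrative assumptions; Keras applies its own small epsilon. The sketch also explains why training starts with a loss near 0.693: a model that outputs p = 0.5 for every image scores ln 2.

```python
import math


def binary_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean binary cross-entropy; y_pred are sigmoid outputs in (0, 1)."""
    total = 0.0
    for y, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1 - eps)  # clip so log() never sees 0
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(y_true)


# An untrained model that always answers 0.5 scores ln(2), about 0.6931,
# close to the first-epoch training loss of 0.6946 in the log.
print(binary_crossentropy([1, 0, 1, 0], [0.5, 0.5, 0.5, 0.5]))
```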

```python
# fit_generator is the Keras 2.x API used here; newer tf.keras versions
# deprecate it in favour of model.fit, which accepts generators directly
history = model.fit_generator(
    train_generator,
    epochs=30,
    steps_per_epoch=100,            # 100 batches x 20 images = 2000 training images
    validation_data=test_generator,
    validation_steps=50)            # 50 batches x 20 images = all 1000 test images
```

```
Epoch 1/30
100/100 [==============================] - 13s 131ms/step - loss: 0.6946 - acc: 0.4925 - val_loss: 0.6932 - val_acc: 0.5160
Epoch 2/30
100/100 [==============================] - 12s 116ms/step - loss: 0.6742 - acc: 0.5565 - val_loss: 0.6644 - val_acc: 0.6010
Epoch 3/30
100/100 [==============================] - 12s 116ms/step - loss: 0.6338 - acc: 0.6310 - val_loss: 0.6401 - val_acc: 0.6220
Epoch 4/30
100/100 [==============================] - 12s 116ms/step - loss: 0.6109 - acc: 0.6560 - val_loss: 0.6295 - val_acc: 0.6340
Epoch 5/30
100/100 [==============================] - 12s 116ms/step - loss: 0.5745 - acc: 0.6800 - val_loss: 0.6064 - val_acc: 0.6550
Epoch 6/30
100/100 [==============================] - 12s 116ms/step - loss: 0.5480 - acc: 0.7095 - val_loss: 0.5911 - val_acc: 0.6830
Epoch 7/30
100/100 [==============================] - 12s 116ms/step - loss: 0.5269 - acc: 0.7335 - val_loss: 0.5984 - val_acc: 0.6680
Epoch 8/30
100/100 [==============================] - 12s 116ms/step - loss: 0.5025 - acc: 0.7495 - val_loss: 0.5934 - val_acc: 0.6750
Epoch 9/30
100/100 [==============================] - 12s 116ms/step - loss: 0.4760 - acc: 0.7700 - val_loss: 0.6232 - val_acc: 0.6610
Epoch 10/30
100/100 [==============================] - 12s 116ms/step - loss: 0.4377 - acc: 0.7985 - val_loss: 0.6410 - val_acc: 0.6560
Epoch 11/30
100/100 [==============================] - 12s 117ms/step - loss: 0.4045 - acc: 0.8180 - val_loss: 0.6176 - val_acc: 0.6830
Epoch 12/30
100/100 [==============================] - 12s 116ms/step - loss: 0.3727 - acc: 0.8305 - val_loss: 0.6343 - val_acc: 0.6700
Epoch 13/30
100/100 [==============================] - 12s 116ms/step - loss: 0.3227 - acc: 0.8570 - val_loss: 0.6890 - val_acc: 0.6730
Epoch 14/30
100/100 [==============================] - 12s 117ms/step - loss: 0.2678 - acc: 0.8825 - val_loss: 0.7858 - val_acc: 0.6830
Epoch 15/30
100/100 [==============================] - 12s 116ms/step - loss: 0.2491 - acc: 0.8985 - val_loss: 0.7528 - val_acc: 0.6920
Epoch 16/30
100/100 [==============================] - 12s 116ms/step - loss: 0.1818 - acc: 0.9235 - val_loss: 0.8449 - val_acc: 0.6930
Epoch 17/30
100/100 [==============================] - 12s 117ms/step - loss: 0.1204 - acc: 0.9580 - val_loss: 0.9760 - val_acc: 0.6860
Epoch 18/30
100/100 [==============================] - 12s 118ms/step - loss: 0.0775 - acc: 0.9705 - val_loss: 1.1756 - val_acc: 0.6880
Epoch 19/30
100/100 [==============================] - 12s 116ms/step - loss: 0.0548 - acc: 0.9865 - val_loss: 1.3155 - val_acc: 0.6920
Epoch 20/30
100/100 [==============================] - 12s 116ms/step - loss: 0.0605 - acc: 0.9785 - val_loss: 1.6551 - val_acc: 0.6600
Epoch 21/30
100/100 [==============================] - 12s 117ms/step - loss: 0.0670 - acc: 0.9765 - val_loss: 1.2751 - val_acc: 0.6780
Epoch 22/30
100/100 [==============================] - 12s 117ms/step - loss: 0.0348 - acc: 0.9910 - val_loss: 1.4547 - val_acc: 0.6890
Epoch 23/30
100/100 [==============================] - 12s 116ms/step - loss: 0.0511 - acc: 0.9825 - val_loss: 1.3448 - val_acc: 0.6840
Epoch 24/30
100/100 [==============================] - 12s 116ms/step - loss: 0.0269 - acc: 0.9915 - val_loss: 1.5894 - val_acc: 0.6850
Epoch 25/30
100/100 [==============================] - 12s 116ms/step - loss: 0.0147 - acc: 0.9980 - val_loss: 1.7083 - val_acc: 0.6590
Epoch 26/30
100/100 [==============================] - 12s 118ms/step - loss: 0.0097 - acc: 0.9980 - val_loss: 1.8089 - val_acc: 0.6830
Epoch 27/30
100/100 [==============================] - 12s 116ms/step - loss: 0.0074 - acc: 0.9985 - val_loss: 2.1671 - val_acc: 0.6730
Epoch 28/30
100/100 [==============================] - 12s 116ms/step - loss: 0.0068 - acc: 0.9995 - val_loss: 1.8426 - val_acc: 0.6950
Epoch 29/30
100/100 [==============================] - 12s 116ms/step - loss: 0.0024 - acc: 1.0000 - val_loss: 2.0147 - val_acc: 0.6840
Epoch 30/30
100/100 [==============================] - 12s 118ms/step - loss: 0.0463 - acc: 0.9855 - val_loss: 1.8073 - val_acc: 0.6670
```
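The training log quantifies the overfitting. The sketch below finds the epoch with the lowest validation loss, the epoch with the highest validation accuracy, and the train/validation accuracy gap at the end of training. The `val_loss` and `val_acc` values are transcribed by hand from the 30 epochs of the log. After a real run the same lists are available as `history.history['val_loss']` and `history.history['val_acc']`.

```python
# Transcribed from the training log (epochs 1 to 30)
val_loss = [0.6932, 0.6644, 0.6401, 0.6295, 0.6064, 0.5911, 0.5984, 0.5934,
            0.6232, 0.6410, 0.6176, 0.6343, 0.6890, 0.7858, 0.7528, 0.8449,
            0.9760, 1.1756, 1.3155, 1.6551, 1.2751, 1.4547, 1.3448, 1.5894,
            1.7083, 1.8089, 2.1671, 1.8426, 2.0147, 1.8073]
val_acc = [0.5160, 0.6010, 0.6220, 0.6340, 0.6550, 0.6830, 0.6680, 0.6750,
           0.6610, 0.6560, 0.6830, 0.6700, 0.6730, 0.6830, 0.6920, 0.6930,
           0.6860, 0.6880, 0.6920, 0.6600, 0.6780, 0.6890, 0.6840, 0.6850,
           0.6590, 0.6830, 0.6730, 0.6950, 0.6840, 0.6670]
final_train_acc = 0.9855  # training acc in epoch 30

# Epoch numbers are 1-based, as in the log
best_epoch = min(range(len(val_loss)), key=lambda i: val_loss[i]) + 1
best_acc_epoch = val_acc.index(max(val_acc)) + 1
gap = final_train_acc - val_acc[-1]

print(best_epoch, val_loss[best_epoch - 1])  # 6 0.5911
print(best_acc_epoch, max(val_acc))          # 28 0.695
print(round(gap, 2))                         # 0.32
```

Validation loss bottoms out at epoch 6 and then climbs from 0.59 to around 1.8 to 2.2, while training loss keeps falling toward zero. Validation accuracy stays in the 0.66 to 0.70 band. Continuing to train after epoch 6 mostly memorizes the 2,000 training images rather than improving generalization.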


```python
import matplotlib.pyplot as plt
%matplotlib inline
```

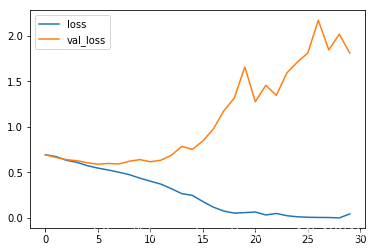

```python
plt.plot(history.epoch, history.history['loss'], label='loss')
plt.plot(history.epoch, history.history['val_loss'], label='val_loss')
plt.legend()
```

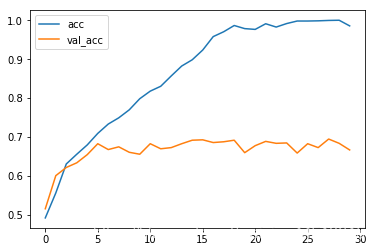

```python
plt.plot(history.epoch, history.history['acc'], label='acc')
plt.plot(history.epoch, history.history['val_acc'], label='val_acc')
plt.legend()
```

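The model overfits badly. Training accuracy climbs to nearly 100%, while validation accuracy stalls at roughly 66% to 70% and validation loss rises after epoch 6. Still, the run is worth recording.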

Source: maoli.blog.csdn.net. Author: 刘润森!. Copyright belongs to the original author; please contact the author before reposting.

Original link: maoli.blog.csdn.net/article/details/89197055

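[Copyright notice] This article was reposted by a Huawei Cloud community user. If you find content in this community that may be plagiarized, please report it by email and include supporting evidence. Once verified, the community will remove the infringing content immediately. Report email:

cloudbbs@huaweicloud.com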
