全网最全python爬虫系统进阶学习(附原代码)学完可就业

个人公众号 yk 坤帝

后台回复 scrapy 获取整理资源

1.认识爬虫

1.豆瓣电影爬取

2.肯德基餐厅查询

3.破解百度翻译

4.搜狗首页

5.网页采集器

6.药监总局相关数据爬取

1.bs4解析基础

2.bs4案例

3.xpath解析基础

4.xpath解析案例-4k图片解析爬取

5.xpath解析案例-58二手房

6.xpath解析案例-爬取站长素材中免费简历模板

7.xpath解析案例-全国城市名称爬取

8.正则解析

9.正则解析-分页爬取

10.爬取图片

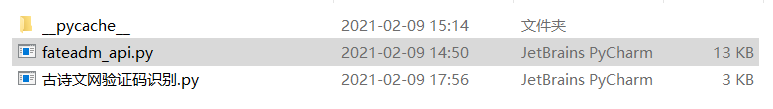

1.古诗文网验证码识别

fateadm_api.py(识别需要的配置,建议放在同一文件夹下)

调用api接口

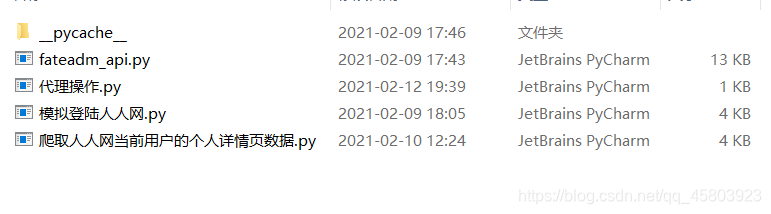

1.代理操作

2.模拟登陆人人网

3.模拟登陆人人网

1.aiohttp实现多任务异步爬虫

2.flask服务

3.多任务协程

4.多任务异步爬虫

5.示例

6.同步爬虫

7.线程池基本使用

8.线程池在爬虫案例中的应用

9.协程

1.selenium基础用法

2.selenium其他自动操作

3.12306登录示例代码

4.动作链与iframe的处理

5.谷歌无头浏览器+反检测

6.基于selenium实现1236模拟登录

7.模拟登录qq空间

1.各种项目实战,scrapy各种配置修改

2.bossPro示例

3.bossPro示例

4.数据库示例

第0关 认识爬虫

1、初始爬虫

爬虫,从本质上来说,就是利用程序在网上拿到对我们有价值的数据。

2、明晰路径

2-1、浏览器工作原理

(1)解析数据:当服务器把数据响应给浏览器之后,浏览器并不会直接把数据丢给我们。因为这些数据是用计算机的语言写的,浏览器还要把这些数据翻译成我们能看得懂的内容;

(2)提取数据:我们就可以在拿到的数据中,挑选出对我们有用的数据;

(3)存储数据:将挑选出来的有用数据保存在某一文件/数据库中。

2-2、爬虫工作原理

(1)获取数据:爬虫程序会根据我们提供的网址,向服务器发起请求,然后返回数据;

(2)解析数据:爬虫程序会把服务器返回的数据解析成我们能读懂的格式;

(3)提取数据:爬虫程序再从中提取出我们需要的数据;

(4)储存数据:爬虫程序把这些有用的数据保存起来,便于你日后的使用和分析。

————————————————

版权声明:本文为CSDN博主「yk 坤帝」的原创文章,遵循CC 4.0 BY-SA版权协议,转载请附上原文出处链接及本声明。

原文链接:https://blog.csdn.net/qq_45803923/article/details/116133325

1.豆瓣电影爬取

import requests

import json

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.193 Safari/537.36'

}

url = "https://movie.douban.com/j/chart/top_list"

params = {

'type': '24',

'interval_id': '100:90',

'action': '',

'start': '0',#从第几部电影开始取

'limit': '20'#一次取出的电影的个数

}

response = requests.get(url,params = params,headers = headers)

list_data = response.json()

fp = open('douban.json','w',encoding= 'utf-8')

json.dump(list_data,fp = fp,ensure_ascii= False)

print('over!!!!')

2.肯德基餐厅查询

import requests

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.193 Safari/537.36'

}

url = 'http://www.kfc.com.cn/kfccda/ashx/GetStoreList.ashx?op=keyword'

word = input('请输入一个地址:')

params = {

'cname': '',

'pid': '',

'keyword': word,

'pageIndex': '1',

'pageSize': '10'

}

response = requests.post(url,params = params ,headers = headers)

page_text = response.text

fileName = word + '.txt'

with open(fileName,'w',encoding= 'utf-8') as f:

f.write(page_text)

3.破解百度翻译

import requests

import json

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.193 Safari/537.36'

}

post_url = 'https://fanyi.baidu.com/sug'

word = input('enter a word:')

data = {

'kw':word

}

response = requests.post(url = post_url,data = data,headers = headers)

dic_obj = response.json()

fileName = word + '.json'

fp = open(fileName,'w',encoding= 'utf-8')

#ensure_ascii = False,中文不能用ascii代码

json.dump(dic_obj,fp = fp,ensure_ascii = False)

print('over!')

4.搜狗首页

import requests

url = 'https://www.sogou.com/?pid=sogou-site-d5da28d4865fb927'

response = requests.get(url)

page_text = response.text

print(page_text)

with open('./sougou.html','w',encoding= 'utf-8') as fp:

fp.write(page_text)

print('爬取数据结束!!!')

5.网页采集器

import requests

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.193 Safari/537.36'

}

url = 'https://www.sogou.com/sogou'

kw = input('enter a word:')

param = {

'query':kw

}

response = requests.get(url,params = param,headers = headers)

page_text = response.text

fileName = kw +'.html'

with open(fileName,'w',encoding= 'utf-8') as fp:

fp.write(page_text)

print(fileName,'保存成功!!!')

6.药监总局相关数据爬取

import requests

import json

url = "http://scxk.nmpa.gov.cn:81/xk/itownet/portalAction.do?method=getXkzsList"

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/89.0.4385.0 Safari/537.36'

}

for page in range(1,6):

page = str(page)

data = {

'on': 'true',

'page': page,

'pageSize': '15',

'productName':'',

'conditionType': '1',

'applyname': '',

'applysn':''

}

json_ids = requests.post(url,data = data,headers = headers).json()

id_list = []

for dic in json_ids['list']:

id_list.append(dic['ID'])

#print(id_list)

post_url = 'http://scxk.nmpa.gov.cn:81/xk/itownet/portalAction.do?method=getXkzsById'

all_data_list = []

for id in id_list:

data = {

'id':id

}

datail_json = requests.post(url = post_url,data = data,headers = headers).json()

#print(datail_json,'---------------------over')

all_data_list.append(datail_json)

fp = open('allData.json','w',encoding='utf-8')

json.dump(all_data_list,fp = fp,ensure_ascii= False)

print('over!!!')

1.bs4解析基础

from bs4 import BeautifulSoup

fp = open('第三章 数据分析/text.html','r',encoding='utf-8')

soup = BeautifulSoup(fp,'lxml')

#print(soup)

#print(soup.a)

#print(soup.div)

#print(soup.find('div'))

#print(soup.find('div',class_="song"))

#print(soup.find_all('a'))

#print(soup.select('.tang'))

#print(soup.select('.tang > ul > li >a')[0].text)

#print(soup.find('div',class_="song").text)

#print(soup.find('div',class_="song").string)

print(soup.select('.tang > ul > li >a')[0]['href'])

2.bs4案例

from bs4 import BeautifulSoup

import requests

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.193 Safari/537.36'

}

url = "http://sanguo.5000yan.com/"

page_text = requests.get(url ,headers = headers).content

#print(page_text)

soup = BeautifulSoup(page_text,'lxml')

li_list = soup.select('.list > ul > li')

fp = open('./sanguo.txt','w',encoding='utf-8')

for li in li_list:

title = li.a.string

#print(title)

detail_url = 'http://sanguo.5000yan.com/'+li.a['href']

print(detail_url)

detail_page_text = requests.get(detail_url,headers = headers).content

detail_soup = BeautifulSoup(detail_page_text,'lxml')

div_tag = detail_soup.find('div',class_="grap")

content = div_tag.text

fp.write(title+":"+content+'\n')

print(title,'爬取成功!!!')

3.xpath解析基础

from lxml import etree

tree = etree.parse('第三章 数据分析/text.html')

# r = tree.xpath('/html/head/title')

# print(r)

# r = tree.xpath('/html/body/div')

# print(r)

# r = tree.xpath('/html//div')

# print(r)

# r = tree.xpath('//div')

# print(r)

# r = tree.xpath('//div[@class="song"]')

# print(r)

# r = tree.xpath('//div[@class="song"]/P[3]')

# print(r)

# r = tree.xpath('//div[@class="tang"]//li[5]/a/text()')

# print(r)

# r = tree.xpath('//li[7]/i/text()')

# print(r)

# r = tree.xpath('//li[7]//text()')

# print(r)

# r = tree.xpath('//div[@class="tang"]//text()')

# print(r)

# r = tree.xpath('//div[@class="song"]/img/@src')

# print(r)

4.xpath解析案例-4k图片解析爬取

import requests

from lxml import etree

import os

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.193 Safari/537.36'

}

url = 'http://pic.netbian.com/4kmeinv/'

response = requests.get(url,headers = headers)

#response.encoding=response.apparent_encoding

#response.encoding = 'utf-8'

page_text = response.text

tree = etree.HTML(page_text)

li_list = tree.xpath('//div[@class="slist"]/ul/li')

# if not os.path.exists('./picLibs'):

# os.mkdir('./picLibs')

for li in li_list:

img_src = 'http://pic.netbian.com/'+li.xpath('./a/img/@src')[0]

img_name = li.xpath('./a/img/@alt')[0]+'.jpg'

img_name = img_name.encode('iso-8859-1').decode('gbk')

# print(img_name,img_src)

# print(type(img_name))

img_data = requests.get(url = img_src,headers = headers).content

img_path ='picLibs/'+img_name

#print(img_path)

with open(img_path,'wb') as fp:

fp.write(img_data)

print(img_name,"下载成功")

5.xpath解析案例-58二手房

import requests

from lxml import etree

url = 'https://bj.58.com/ershoufang/p2/'

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.193 Safari/537.36'

}

page_text = requests.get(url=url,headers = headers).text

tree = etree.HTML(page_text)

li_list = tree.xpath('//section[@class="list-left"]/section[2]/div')

fp = open('58.txt','w',encoding='utf-8')

for li in li_list:

title = li.xpath('./a/div[2]/div/div/h3/text()')[0]

print(title)

fp.write(title+'\n')

6.xpath解析案例-爬取站长素材中免费简历模板

import requests

from lxml import etree

import os

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.193 Safari/537.36'

}

url = 'https://www.aqistudy.cn/historydata/'

page_text = requests.get(url,headers = headers).text

7.xpath解析案例-全国城市名称爬取

import requests

from lxml import etree

import os

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.193 Safari/537.36'

}

url = 'https://www.aqistudy.cn/historydata/'

page_text = requests.get(url,headers = headers).text

tree = etree.HTML(page_text)

# holt_li_list = tree.xpath('//div[@class="bottom"]/ul/li')

# all_city_name = []

# for li in holt_li_list:

# host_city_name = li.xpath('./a/text()')[0]

# all_city_name.append(host_city_name)

# city_name_list = tree.xpath('//div[@class="bottom"]/ul/div[2]/li')

# for li in city_name_list:

# city_name = li.xpath('./a/text()')[0]

# all_city_name.append(city_name)

# print(all_city_name,len(all_city_name))

#holt_li_list = tree.xpath('//div[@class="bottom"]/ul//li')

holt_li_list = tree.xpath('//div[@class="bottom"]/ul/li | //div[@class="bottom"]/ul/div[2]/li')

all_city_name = []

for li in holt_li_list:

host_city_name = li.xpath('./a/text()')[0]

all_city_name.append(host_city_name)

print(all_city_name,len(all_city_name))

8.正则解析

import requests

import re

import os

if not os.path.exists('./qiutuLibs'):

os.mkdir('./qiutuLibs')

url = 'https://www.qiushibaike.com/imgrank/'

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/89.0.4385.0 Safari/537.36'

}

page_text = requests.get(url,headers = headers).text

ex = '<div class="thumb">.*?<img src="(.*?)" alt.*?</div>'

img_src_list = re.findall(ex,page_text,re.S)

print(img_src_list)

for src in img_src_list:

src = 'https:' + src

img_data = requests.get(url = src,headers = headers).content

img_name = src.split('/')[-1]

imgPath = './qiutuLibs/'+img_name

with open(imgPath,'wb') as fp:

fp.write(img_data)

print(img_name,"下载完成!!!!!")

9.正则解析-分页爬取

import requests

import re

import os

if not os.path.exists('./qiutuLibs'):

os.mkdir('./qiutuLibs')

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/89.0.4385.0 Safari/537.36'

}

url = 'https://www.qiushibaike.com/imgrank/page/%d/'

for pageNum in range(1,3):

new_url = format(url%pageNum)

page_text = requests.get(new_url,headers = headers).text

ex = '<div class="thumb">.*?<img src="(.*?)" alt.*?</div>'

img_src_list = re.findall(ex,page_text,re.S)

print(img_src_list)

for src in img_src_list:

src = 'https:' + src

img_data = requests.get(url = src,headers = headers).content

img_name = src.split('/')[-1]

imgPath = './qiutuLibs/'+img_name

with open(imgPath,'wb') as fp:

fp.write(img_data)

print(img_name,"下载完成!!!!!")

10.爬取图片

import requests

url = 'https://pic.qiushibaike.com/system/pictures/12404/124047919/medium/R7Y2UOCDRBXF2MIQ.jpg'

img_data = requests.get(url).content

with open('qiutu.jpg','wb') as fp:

fp.write(img_data)

1.古诗文网验证码识别

开发者账号密码可以申请

import requests

from lxml import etree

from fateadm_api import FateadmApi

def TestFunc(imgPath,codyType):

pd_id = "xxxxxx" #用户中心页可以查询到pd信息

pd_key = "xxxxxxxx"

app_id = "xxxxxxx" #开发者分成用的账号,在开发者中心可以查询到

app_key = "xxxxxxx"

#识别类型,

#具体类型可以查看官方网站的价格页选择具体的类型,不清楚类型的,可以咨询客服

pred_type = codyType

api = FateadmApi(app_id, app_key, pd_id, pd_key)

# 查询余额

balance = api.QueryBalcExtend() # 直接返余额

# api.QueryBalc()

# 通过文件形式识别:

file_name = imgPath

# 多网站类型时,需要增加src_url参数,具体请参考api文档: http://docs.fateadm.com/web/#/1?page_id=6

result = api.PredictFromFileExtend(pred_type,file_name) # 直接返回识别结果

#rsp = api.PredictFromFile(pred_type, file_name) # 返回详细识别结果

'''

# 如果不是通过文件识别,则调用Predict接口:

# result = api.PredictExtend(pred_type,data) # 直接返回识别结果

rsp = api.Predict(pred_type,data) # 返回详细的识别结果

'''

# just_flag = False

# if just_flag :

# if rsp.ret_code == 0:

# #识别的结果如果与预期不符,可以调用这个接口将预期不符的订单退款

# # 退款仅在正常识别出结果后,无法通过网站验证的情况,请勿非法或者滥用,否则可能进行封号处理

# api.Justice( rsp.request_id)

#card_id = "123"

#card_key = "123"

#充值

#api.Charge(card_id, card_key)

#LOG("print in testfunc")

return result

# if __name__ == "__main__":

# TestFunc()

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.193 Safari/537.36'

}

url = 'https://so.gushiwen.cn/user/login.aspx?from=http://so.gushiwen.cn/user/collect.aspx'

page_text = requests.get(url,headers = headers).text

tree = etree.HTML(page_text)

code_img_src = 'https://so.gushiwen.cn' + tree.xpath('//*[@id="imgCode"]/@src')[0]

img_data = requests.get(code_img_src,headers = headers).content

with open('./code.jpg','wb') as fp:

fp.write(img_data)

code_text = TestFunc('code.jpg',30400)

print('识别结果为:' + code_text)

code_text = TestFunc('code.jpg',30400)

print('识别结果为:' + code_text)

fateadm_api.py(识别需要的配置,建议放在同一文件夹下)

调用api接口

字数20000字限制,无法上传更多,见谅,公众号上有完整资源。

个人公众号 yk 坤帝

后台回复 scrapy 获取整理资源

- 点赞

- 收藏

- 关注作者

评论(0)