GaussDB T Distributed Cluster Deployment (1)

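A core advantage of GaussDB over Oracle is that it can be deployed as a distributed cluster, and such deployments are in real use. In theory it scales out horizontally to 1024 DN nodes, and existing deployments already include large distributed clusters with more than 500 DNs. This part briefly records the deployment of a 4-node, single-data-center, highly available distributed architecture.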

Environment:

4 virtual machines:

Operating system: Red Hat 7.5, minimal installation

Gauss1 2C 8G 100G 192.168.10.11/16 10.10.10.11/16

Gauss2 1C 6G 100G 192.168.10.12/16 10.10.10.12/16

Gauss3 1C 6G 100G 192.168.10.13/16 10.10.10.13/16

Gauss4 1C 6G 100G 192.168.10.14/16 10.10.10.14/16

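Notes: For disk planning, reserve at least 20 GB per instance. In the deployment plan below, node 4 hosts 3 instances and each of the other three nodes hosts 4 instances. The network in this example is split into a service plane (192.168.0.0) and a maintenance plane (10.10.0.0); a production environment should also add a backup plane to improve network isolation and security. Installing with the default template requires a lot of memory. You can lower memory consumption by changing the memory parameters in the database creation template file; after that change, 2 GB of memory per node is enough.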

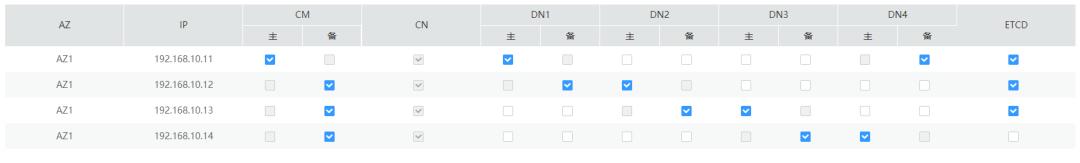

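Deployment plan:

Notes:

CN: Coordinator node; splits tasks and schedules the task fragments for parallel execution on the DNs.

CM: (Cluster Manager) Cluster management module; manages and monitors every instance to keep the system running stably.

ETCD: A highly available distributed key-value database that collects instance status information; CM obtains instance status from ETCD.

DN: (DataNode) Data node; stores business data, executes data queries, and returns the results to the CN.

AZ1: Data center 1 (single data center).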

1. System initialization (run on all hosts):

1> Disable the firewall

    [root@Gauss1 ~]# systemctl status firewalld.service
    [root@Gauss1 ~]# systemctl stop firewalld.service
    [root@Gauss1 ~]# systemctl disable firewalld.service
    [root@Gauss1 ~]# firewall-cmd --state
    not running
    [root@Gauss1 ~]#

2> Tune kernel parameters

Append the following to /etc/sysctl.conf:

    net.ipv4.tcp_tw_reuse = 1
    net.ipv4.tcp_tw_recycle = 1
    net.ipv4.tcp_keepalive_time = 30
    net.ipv4.tcp_keepalive_probes = 9
    net.ipv4.tcp_keepalive_intvl = 30
    net.ipv4.tcp_retries2 = 80
    vm.overcommit_memory = 0
    net.ipv4.tcp_rmem = 8192 250000 16777216
    net.core.wmem_max = 21299200
    net.core.rmem_max = 21299200
    net.core.wmem_default = 21299200
    net.core.rmem_default = 21299200
    kernel.sem = 250 6400000 1000 25600
    # reserve 5% of system memory
    vm.min_free_kbytes = 314572
    net.core.somaxconn = 65535
    net.ipv4.tcp_syncookies = 1
    net.core.netdev_max_backlog = 65535
    net.ipv4.tcp_max_syn_backlog = 65535
    net.ipv4.tcp_wmem = 8192 250000 16777216
    net.ipv4.tcp_max_tw_buckets = 10000

After adding these lines, run sysctl -p to apply them.
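The vm.min_free_kbytes value above is described as 5% of system memory, and 314572 matches 5% of 6 GiB (6291456 kB). Because the nodes in this example do not all have the same amount of RAM, a small helper can derive the value per host. This is only a sketch: the function names `min_free_kbytes` and `mem_total_kb` are made up for illustration and are not part of GaussDB or sysctl.

```shell
#!/usr/bin/env bash
# Hypothetical helpers, not part of GaussDB or sysctl.

# Print <percent>% of <total_kb>, rounded down. The percentage defaults to 5.
min_free_kbytes() {
    local total_kb=$1 percent=${2:-5}
    echo $(( total_kb * percent / 100 ))
}

# Print MemTotal (in kB) of the current host as reported by /proc/meminfo.
mem_total_kb() {
    awk '/^MemTotal:/ {print $2}' /proc/meminfo
}
```

On a node, `echo "vm.min_free_kbytes = $(min_free_kbytes "$(mem_total_kb)")"` prints a line that can be compared with the one in /etc/sysctl.conf. MemTotal is normally slightly below the nominal RAM size, so the result will be a little smaller than the nominal figure.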

3> Install dependency packages

Configure a local YUM repository:

    echo "/dev/sr0 /mnt/iso iso9660 defaults,loop 0 0" >> /etc/fstab
    mkdir -p /mnt/iso
    rm -rf /etc/yum.repos.d/*
    cat >> /etc/yum.repos.d/ios.repo <<EOF
    [ios]
    name=ios
    baseurl=file:///mnt/iso
    enabled=1
    gpgcheck=0
    EOF
    mount -a
    yum repolist

Install the dependency packages:

    [root@Gauss1 yum.repos.d]# unset uninstall_rpm;for i in zlib readline gcc python python-devel perl-ExtUtils-Embed readline-devel zlib-devel lsof;do rpm -q $i &>/dev/null || uninstall_rpm="$uninstall_rpm $i";done ;[[ -z "$uninstall_rpm" ]] && echo -e "\nuninstall_rpm:\n\tOK.OK.OK" || echo -e "\nuninstall_rpm:\n\t$uninstall_rpm"

    uninstall_rpm:
            gcc python-devel perl-ExtUtils-Embed readline-devel zlib-devel lsof
    [root@Gauss1 yum.repos.d]# yum -y install gcc python-devel perl-ExtUtils-Embed readline-devel zlib-devel lsof
    [root@Gauss1 yum.repos.d]# unset uninstall_rpm;for i in zlib readline gcc python python-devel perl-ExtUtils-Embed readline-devel zlib-devel lsof;do rpm -q $i &>/dev/null || uninstall_rpm="$uninstall_rpm $i";done ;[[ -z "$uninstall_rpm" ]] && echo -e "\nuninstall_rpm:\n\tOK.OK.OK" || echo -e "\nuninstall_rpm:\n\t$uninstall_rpm"

    uninstall_rpm:
            OK.OK.OK
    [root@Gauss1 yum.repos.d]#
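The one-liner above is dense, so here is the same check written as two small functions. It is a readability sketch rather than a replacement for the original command; the names `pkg_installed` and `missing_pkgs` are invented for this example.

```shell
#!/usr/bin/env bash
# Hypothetical helper names, chosen for this example only.

# Return 0 when the given package is installed according to rpm.
pkg_installed() {
    rpm -q "$1" >/dev/null 2>&1
}

# Print the packages from the argument list that are not installed,
# separated by spaces. Prints an empty line when nothing is missing.
missing_pkgs() {
    local missing=() pkg
    for pkg in "$@"; do
        pkg_installed "$pkg" || missing+=("$pkg")
    done
    echo "${missing[*]}"
}
```

A possible use is `yum -y install $(missing_pkgs zlib readline gcc python python-devel perl-ExtUtils-Embed readline-devel zlib-devel lsof)`, guarded by a check that the list is not empty.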

2. Upload the installation package (the following steps run on one node only)

    [root@Gauss1 ~]# mkdir -p /opt/software/gaussdb
    [root@Gauss1 ~]# cd /opt/software/gaussdb
    [root@Gauss1 gaussdb]# rz
    [root@Gauss1 gaussdb]# tar -zxvf GaussDB_100_1.0.0-CLUSTER-REDHAT7.5-64bit.tar.g
    [root@Gauss1 gaussdb]# ll
    total 134984
    -rw-r--r--. 1 root root 86248211 Dec 14 20:18 GaussDB_100_1.0.0-CLUSTER-REDHAT7.5-64bit.tar.gz
    -rw-r--r--. 1 root root 44364163 Jul 29 19:14 GaussDB_100_1.0.0-CM-REDHAT-64bit.tar.gz
    -rw-r--r--. 1 root root 7478115 Jul 29 19:16 GaussDB_100_1.0.0-DATABASE-REDHAT-64bit.tar.gz
    -rw-r--r--. 1 root root 118593 Jul 29 19:12 GaussDB_100_1.0.0-ROACH-REDHAT-64bit.tar.gz
    drwx------. 16 root root 4096 Jul 29 19:18 lib
    drwx------. 6 root root 4096 Jul 29 19:11 script
    drwxr-xr-x. 5 root root 49 Jul 29 19:11 shardingscript
    [root@Gauss1 gaussdb]#

3. Edit the cluster configuration file

Create the configuration file /opt/software/gaussdb/clusterconfig.xml with the following content:

    <?xml version="1.0" encoding="UTF-8"?>
    <ROOT>
      <CLUSTER>
        <PARAM name="clusterName" value="kevin"/>
        <PARAM name="nodeNames" value="Gauss1,Gauss2,Gauss3,Gauss4"/>
        <PARAM name="gaussdbAppPath" value="/opt/gaussdb/app"/>
        <PARAM name="gaussdbLogPath" value="/opt/gaussdb/log"/>
        <PARAM name="archiveLogPath" value="/opt/gaussdb/arch_log"/>
        <PARAM name="redoLogPath" value="/opt/gaussdb/redo_log"/>
        <PARAM name="tmpMppdbPath" value="/opt/gaussdb/temp"/>
        <PARAM name="gaussdbToolPath" value="/opt/gaussdb/gaussTools/wisequery"/>
        <PARAM name="dbdiagPath" value="/opt/gaussdb/dbdiag"/>
        <PARAM name="datanodeType" value="DN_ZENITH_HA"/>
        <PARAM name="clusterType" value="mutil-AZ"/>
        <PARAM name="coordinatorType" value="CN_ZENITH_ZSHARDING"/>
      </CLUSTER>
      <DEVICELIST>
        <DEVICE sn="1000001">
          <PARAM name="name" value="Gauss1"/>
          <PARAM name="azName" value="AZ1"/>
          <PARAM name="azPriority" value="1"/>
          <PARAM name="backIp1" value="192.168.10.11"/>
          <PARAM name="sshIp1" value="192.168.10.11"/>
          <PARAM name="innerManageIP" value="10.10.10.11"/>
          <PARAM name="cmsNum" value="1"/>
          <PARAM name="cmServerPortBase" value="21000"/>
          <PARAM name="cmServerListenIp1" value="192.168.10.11,192.168.10.12,192.168.10.13,192.168.10.14"/>
          <PARAM name="cmServerHaIp1" value="192.168.10.11,192.168.10.12,192.168.10.13,192.168.10.14"/>
          <PARAM name="cmServerlevel" value="1"/>
          <PARAM name="cmServerRelation" value="Gauss1,Gauss2,Gauss3,Gauss4"/>
          <PARAM name="dataNum" value="1"/>
          <PARAM name="dataPortBase" value="40000"/>
          <PARAM name="dataNode1" value="/opt/gaussdb/data/dn1,Gauss2,/opt/gaussdb/data/dn1"/>
          <PARAM name="cooNum" value="1"/>
          <PARAM name="cooPortBase" value="8000"/>
          <PARAM name="cooListenIp1" value="192.168.10.11"/>
          <PARAM name="cooDir1" value="/opt/gaussdb/data/cn"/>
          <PARAM name="etcdNum" value="1"/>
          <PARAM name="etcdListenPort" value="2379"/>
          <PARAM name="etcdHaPort" value="2380"/>
          <PARAM name="etcdListenIp1" value="192.168.10.11"/>
          <PARAM name="etcdHaIp1" value="192.168.10.11"/>
          <PARAM name="etcdDir1" value="/opt/huawei/gaussdb/data/etcd/data_etcd1"/>
        </DEVICE>
        <DEVICE sn="1000002">
          <PARAM name="name" value="Gauss2"/>
          <PARAM name="azName" value="AZ1"/>
          <PARAM name="azPriority" value="1"/>
          <PARAM name="backIp1" value="192.168.10.12"/>
          <PARAM name="sshIp1" value="192.168.10.12"/>
          <PARAM name="innerManageIP" value="10.10.10.12"/>
          <PARAM name="dataNum" value="1"/>
          <PARAM name="dataPortBase" value="40000"/>
          <PARAM name="dataNode1" value="/opt/gaussdb/data/dn2,Gauss3,/opt/gaussdb/data/dn2"/>
          <PARAM name="cooNum" value="1"/>
          <PARAM name="cooPortBase" value="8001"/>
          <PARAM name="cooListenIp1" value="192.168.10.12"/>
          <PARAM name="cooDir1" value="/opt/gaussdb/data/cn"/>
          <PARAM name="etcdNum" value="1"/>
          <PARAM name="etcdListenPort" value="2379"/>
          <PARAM name="etcdHaPort" value="2380"/>
          <PARAM name="etcdListenIp1" value="192.168.10.12"/>
          <PARAM name="etcdHaIp1" value="192.168.10.12"/>
          <PARAM name="etcdDir1" value="/opt/huawei/gaussdb/data/etcd/data_etcd1"/>
        </DEVICE>
        <DEVICE sn="1000003">
          <PARAM name="name" value="Gauss3"/>
          <PARAM name="azName" value="AZ1"/>
          <PARAM name="azPriority" value="1"/>
          <PARAM name="backIp1" value="192.168.10.13"/>
          <PARAM name="sshIp1" value="192.168.10.13"/>
          <PARAM name="innerManageIP" value="10.10.10.13"/>
          <PARAM name="dataNum" value="1"/>
          <PARAM name="dataPortBase" value="40000"/>
          <PARAM name="dataNode1" value="/opt/gaussdb/data/dn3,Gauss4,/opt/gaussdb/data/dn3"/>
          <PARAM name="cooNum" value="1"/>
          <PARAM name="cooPortBase" value="8000"/>
          <PARAM name="cooListenIp1" value="192.168.10.13"/>
          <PARAM name="cooDir1" value="/opt/gaussdb/data/cn"/>
          <PARAM name="etcdNum" value="1"/>
          <PARAM name="etcdListenPort" value="2379"/>
          <PARAM name="etcdHaPort" value="2380"/>
          <PARAM name="etcdListenIp1" value="192.168.10.13"/>
          <PARAM name="etcdHaIp1" value="192.168.10.13"/>
          <PARAM name="etcdDir1" value="/opt/huawei/gaussdb/data/etcd/data_etcd1"/>
        </DEVICE>
        <DEVICE sn="1000004">
          <PARAM name="name" value="Gauss4"/>
          <PARAM name="azName" value="AZ1"/>
          <PARAM name="azPriority" value="1"/>
          <PARAM name="backIp1" value="192.168.10.14"/>
          <PARAM name="sshIp1" value="192.168.10.14"/>
          <PARAM name="innerManageIP" value="10.10.10.14"/>
          <PARAM name="dataNum" value="1"/>
          <PARAM name="dataPortBase" value="40000"/>
          <PARAM name="dataNode1" value="/opt/gaussdb/data/dn4,Gauss1,/opt/gaussdb/data/dn4"/>
          <PARAM name="cooNum" value="1"/>
          <PARAM name="cooPortBase" value="8000"/>
          <PARAM name="cooListenIp1" value="192.168.10.14"/>
          <PARAM name="cooDir1" value="/opt/gaussdb/data/cn"/>
        </DEVICE>
      </DEVICELIST>
    </ROOT>
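A file of this size is easy to get wrong by hand, so a quick consistency check before running the installer may help. The sketch below only verifies that the nodeNames list in the CLUSTER section matches the DEVICE name entries, in the same order. It relies on plain text matching with grep and sed rather than a real XML parser, and the function names are made up for this example; it says nothing about what GaussDB itself validates.

```shell
#!/usr/bin/env bash
# Hypothetical helpers based on text matching, not a real XML parser.

# Print the comma-separated nodeNames value from the CLUSTER section.
cluster_node_names() {
    grep -o 'name="nodeNames" value="[^"]*"' "$1" \
        | sed 's/.*value="\([^"]*\)"/\1/'
}

# Print the name of every DEVICE, comma-separated, in file order.
device_names() {
    grep -o '<PARAM name="name" value="[^"]*"' "$1" \
        | sed 's/.*value="\([^"]*\)"/\1/' \
        | paste -sd, -
}

# Return 0 when both lists are identical (same names, same order).
check_node_names() {
    [ "$(cluster_node_names "$1")" = "$(device_names "$1")" ]
}
```

For example, `check_node_names /opt/software/gaussdb/clusterconfig.xml && echo consistent` prints `consistent` only when the two lists agree.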

4. Cluster initialization

Run the environment initialization script. It automatically configures the installation environment and creates the user, environment variables, directories, and so on.

    [root@Gauss1 script]# ./gs_preinstall -U omm -G dbgrp -X /opt/software/gaussdb/clusterconfig.xml
    Parsing the configuration file.
    Successfully parsed the configuration file.
    Installing the tools on the local node.
    Successfully installed the tools on the local node.
    Are you sure you want to create trust for root (yes/no)? yes
    Please enter password for root.
    Password:
    Creating SSH trust for the root permission user.
    Checking network information.
    All nodes in the network are Normal.
    Successfully checked network information.
    Creating SSH trust.
    Creating the local key file.
    Successfully created the local key files.
    Appending local ID to authorized_keys.
    Successfully appended local ID to authorized_keys.
    Updating the known_hosts file.
    Successfully updated the known_hosts file.
    Appending authorized_key on the remote node.
    Successfully appended authorized_key on all remote node.
    Checking common authentication file content.
    Successfully checked common authentication content.
    Distributing SSH trust file to all node.
    Successfully distributed SSH trust file to all node.
    Verifying SSH trust on all hosts.
    Successfully verified SSH trust on all hosts.
    Successfully created SSH trust.
    Successfully created SSH trust for the root permission user.
    [GAUSS-52406] : The package type "" is inconsistent with the Cpu type "X86".
    [root@Gauss1 script]#

Error handling:

The preinstall log shows that the failure comes from the CPU type check. The workaround used here is to comment out the CPU detection call in PreinstallImpl.py.

    [root@Gauss1 ~]# cd /opt/gaussdb/log/omm/om
    [root@Gauss1 om]# ll
    total 20
    -rw-------. 1 root root 1953 Dec 14 20:44 gs_local-2019-12-14_204431.log
    -rw-------. 1 root root 9723 Dec 14 20:45 gs_preinstall-2019-12-14_204425.log
    -rw-------. 1 root root 13 Dec 14 20:44 topDirPath.dat
    [root@Gauss1 om]# tail gs_preinstall-2019-12-14_204425.log
    [2019-12-14 20:45:08.844963][gs_sshexkey][LOG]:Successfully appended authorized_key on all remote node.
    [2019-12-14 20:45:08.845034][gs_sshexkey][LOG]:Checking common authentication file content.
    [2019-12-14 20:45:08.845412][gs_sshexkey][LOG]:Successfully checked common authentication content.
    [2019-12-14 20:45:08.845484][gs_sshexkey][LOG]:Distributing SSH trust file to all node.
    [2019-12-14 20:45:09.251582][gs_sshexkey][LOG]:Successfully distributed SSH trust file to all node.
    [2019-12-14 20:45:09.251687][gs_sshexkey][LOG]:Verifying SSH trust on all hosts.
    [2019-12-14 20:45:09.658347][gs_sshexkey][LOG]:Successfully verified SSH trust on all hosts.
    [2019-12-14 20:45:09.658447][gs_sshexkey][LOG]:Successfully created SSH trust.
    [2019-12-14 20:45:09.681885][gs_preinstall][LOG]:Successfully created SSH trust for the root permission user.
    [2019-12-14 20:45:10.539785][gs_preinstall][ERROR]:[GAUSS-52406] : The package type "" is inconsistent with the Cpu type "X86".
    Traceback (most recent call last)
    File "./gs_preinstall", line 507, in <module>
    File "/opt/software/gaussdb/script/impl/preinstall/PreinstallImpl.py", line 1861, in run
    [root@Gauss1 om]# vi /opt/software/gaussdb/script/impl/preinstall/PreinstallImpl.py
    1760 self.createTrustForRoot()
    1761 #cpu Detection
    1762 #self.getAllCpu()    <-- comment out this line
    1763 #ram Detection
    1764 self.getAllRam()
    1765 # LVM

Run the script again:

    [root@Gauss1 script]# ./gs_preinstall -U omm -G dbgrp -X /opt/software/gaussdb/clusterconfig.xml
    Parsing the configuration file.
    Successfully parsed the configuration file.
    Installing the tools on the local node.
    Successfully installed the tools on the local node.
    Are you sure you want to create trust for root (yes/no)? yes
    Please enter password for root.
    Password:
    Creating SSH trust for the root permission user.
    Checking network information.
    All nodes in the network are Normal.
    Successfully checked network information.
    Creating SSH trust.
    Creating the local key file.
    Successfully created the local key files.
    Appending local ID to authorized_keys.
    Successfully appended local ID to authorized_keys.
    Updating the known_hosts file.
    Successfully updated the known_hosts file.
    Appending authorized_key on the remote node.
    Successfully appended authorized_key on all remote node.
    Checking common authentication file content.
    Successfully checked common authentication content.
    Distributing SSH trust file to all node.
    Successfully distributed SSH trust file to all node.
    Verifying SSH trust on all hosts.
    Successfully verified SSH trust on all hosts.
    Successfully created SSH trust.
    Successfully created SSH trust for the root permission user.
    All host RAM is consistent
    Pass over configuring LVM
    Distributing package.
    Successfully distributed package.
    Are you sure you want to create the user[omm] and create trust for it (yes/no)? yes
    Please enter password for cluster user.
    Password:
    Please enter password for cluster user again.
    Password:
    Creating [omm] user on all nodes.
    Successfully created [omm] user on all nodes.
    Installing the tools in the cluster.
    Successfully installed the tools in the cluster.
    Checking hostname mapping.
    Successfully checked hostname mapping.
    Creating SSH trust for [omm] user.
    Please enter password for current user[omm].
    Password:
    Checking network information.
    All nodes in the network are Normal.
    Successfully checked network information.
    Creating SSH trust.
    Creating the local key file.
    Successfully created the local key files.
    Appending local ID to authorized_keys.
    Successfully appended local ID to authorized_keys.
    Updating the known_hosts file.
    Successfully updated the known_hosts file.
    Appending authorized_key on the remote node.
    Successfully appended authorized_key on all remote node.
    Checking common authentication file content.
    Successfully checked common authentication content.
    Distributing SSH trust file to all node.
    Successfully distributed SSH trust file to all node.
    Verifying SSH trust on all hosts.
    Successfully verified SSH trust on all hosts.
    Successfully created SSH trust.
    Successfully created SSH trust for [omm] user.
    Checking OS version.
    Successfully checked OS version.
    Creating cluster's path.
    Successfully created cluster's path.
    Setting SCTP service.
    Successfully set SCTP service.
    Set and check OS parameter.
    Successfully set NTP service.
    Setting OS parameters.
    Successfully set OS parameters.
    Warning: Installation environment contains some warning messages.
    Please get more details by "/opt/software/gaussdb/script/gs_checkos -i A -h Gauss1,Gauss2,Gauss3,Gauss4 -X /opt/software/gaussdb/clusterconfig.xml".
    Set and check OS parameter completed.
    Preparing CRON service.
    Successfully prepared CRON service.
    Preparing SSH service.
    Successfully prepared SSH service.
    Setting user environmental variables.
    Successfully set user environmental variables.
    Configuring alarms on the cluster nodes.
    Successfully configured alarms on the cluster nodes.
    Setting the dynamic link library.
    Successfully set the dynamic link library.
    Fixing server package owner.
    Successfully fixed server package owner.
    Create logrotate service.
    Successfully create logrotate service.
    Setting finish flag.
    Successfully set finish flag.
    check time consistency(maximum execution time 10 minutes).
    Time consistent is running(20/20)...
    Preinstallation succeeded.
    [root@Gauss1 script]#

Run the check script named in the warning:

    [root@Gauss1 script]# /opt/software/gaussdb/script/gs_checkos -i A -h Gauss1,Gauss2,Gauss3,Gauss4 -X /opt/software/gaussdb/clusterconfig.xml
    Checking items:
    A1. [ OS version status ] : Normal
    A2. [ Kernel version status ] : Normal
    A3. [ Unicode status ] : Normal
    A4. [ Time zone status ] : Normal
    A5. [ Swap memory status ] : Normal
    A6. [ System control parameters status ] : Normal
    A7. [ File system configuration status ] : Normal
    A8. [ Disk configuration status ] : Normal
    A9. [ Pre-read block size status ] : Normal
    A10.[ IO scheduler status ] : Normal
    A12.[ Time consistency status ] : Warning
    A13.[ Firewall service status ] : Normal
    A14.[ THP service status ] : Normal
    A15.[ Storcli tool status ] : Normal
    Total numbers:14. Abnormal numbers:0. Warning numbers:1.
    [root@Gauss1 script]#
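With fourteen items in the report, the one that needs attention is easy to miss. A small filter can print only the items whose status is not Normal. This is a sketch that assumes the report keeps the line shape shown above (an item ID such as A12. at the start and the status as the last word); `non_normal_items` is a made-up name, not a gs_checkos option.

```shell
#!/usr/bin/env bash
# Hypothetical filter for gs_checkos output read from standard input.
# Keeps lines that start with an item ID (A1., A12., ...) and whose last
# whitespace-separated word is anything other than "Normal".
non_normal_items() {
    awk '/^A[0-9]+\./ && $NF != "Normal" { print }'
}
```

It would be used by piping the report into it, for example `gs_checkos -i A -h Gauss1,Gauss2,Gauss3,Gauss4 -X /opt/software/gaussdb/clusterconfig.xml | non_normal_items`.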

A distributed environment is very sensitive to clock differences. In this test environment Gauss1 is configured as the time server and the other nodes synchronize from it.

Gauss1:

    [root@Gauss1 ~]# yum -y install ntp ntpdate
    [root@Gauss1 ~]# echo server 127.127.1.0 iburst >> /etc/ntp.conf
    [root@Gauss1 ~]# systemctl restart ntpd
    [root@Gauss1 ~]# systemctl enable ntpd
    [root@Gauss1 ~]# ntpq -p
    remote refid st t when poll reach delay offset jitter
    ==============================================================================
    *LOCAL(0) .LOCL. 5 l 12 64 1 0.000 0.000 0.000
    [root@Gauss1 ~]#

Configure Gauss2, Gauss3, and Gauss4 as follows:

    [root@Gauss2 ~]# yum -y install ntp ntpdate
    [root@Gauss2 ~]# echo server 192.168.10.11 >> /etc/ntp.conf
    [root@Gauss2 ~]# echo restrict 192.168.10.11 nomodify notrap noquery >> /etc/ntp.conf
    [root@Gauss2 ~]# ntpdate -u 192.168.10.11
    [root@Gauss2 ~]# hwclock -w
    [root@Gauss2 ~]# systemctl restart ntpd
    [root@Gauss2 ~]# systemctl enable ntpd
    [root@Gauss2 ~]# ntpq -p
    remote refid st t when poll reach delay offset jitter
    ==============================================================================
    Gauss1 LOCAL(0) 6 u 10 64 1 0.416 197.856 0.000
    [root@Gauss2 ~]#
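The offset column in the ntpq -p output (in milliseconds) shows how far a node is from its time source; the sample above still reports 197.856 ms right after the restart. To watch that value converge, the column can be extracted with awk. The sketch below assumes the standard ten-column ntpq -p layout with two header lines. Both function names and any threshold passed to `offset_within` are illustrative; the tolerance gs_checkos applies is not stated in this article.

```shell
#!/usr/bin/env bash
# Hypothetical helpers that read `ntpq -p` output from standard input.

# Print the largest absolute offset (column 9, milliseconds) over all peers.
max_abs_offset_ms() {
    awk 'NR > 2 {
             off = $9
             if (off < 0) off = -off
             if (off > max) max = off
         }
         END { printf "%.3f\n", max + 0 }'
}

# Return 0 when the largest absolute offset is at most <limit_ms>.
offset_within() {
    local limit=$1 max
    max=$(max_abs_offset_ms)
    awk -v m="$max" -v l="$limit" 'BEGIN { exit !(m <= l) }'
}
```

A possible use on each node is `ntpq -p | offset_within 100 || echo "clock not yet in sync"`, where 100 is an arbitrary example threshold.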

Check again:

    [root@Gauss1 ~]# /opt/software/gaussdb/script/gs_checkos -i A -h Gauss1,Gauss2,Gauss3,Gauss4 -X /opt/software/gaussdb/clusterconfig.xml
    Checking items:
    A1. [ OS version status ] : Normal
    A2. [ Kernel version status ] : Normal
    A3. [ Unicode status ] : Normal
    A4. [ Time zone status ] : Normal
    A5. [ Swap memory status ] : Normal
    A6. [ System control parameters status ] : Normal
    A7. [ File system configuration status ] : Normal
    A8. [ Disk configuration status ] : Normal
    A9. [ Pre-read block size status ] : Normal
    A10.[ IO scheduler status ] : Normal
    A12.[ Time consistency status ] : Normal
    A13.[ Firewall service status ] : Normal
    A14.[ THP service status ] : Normal
    A15.[ Storcli tool status ] : Normal
    Total numbers:14. Abnormal numbers:0. Warning numbers:0.
    [root@Gauss1 ~]#

This article is reposted from the GaussDB channel of the “墨天轮” community.

<To be continued>

Next: GaussDB T Distributed Cluster Deployment (2) https://bbs.huaweicloud.com/blogs/140821
